Supported ingestion methods and data sources for the Splunk Data Stream Processor

You can get data into your data pipeline in the following ways.

Supported ingestion methods

The following ingestion methods are supported.

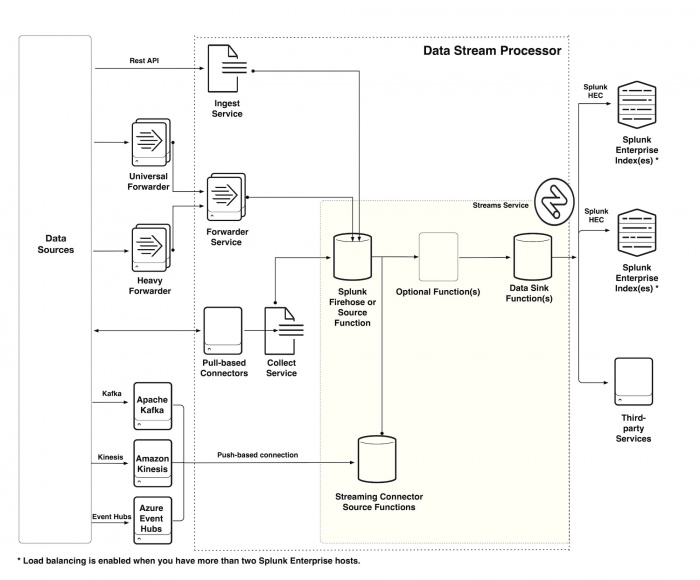

Send events using the Ingest Service

Use the Ingest Service to send JSON objects to the /events or the /metrics endpoint. See Format and send events using the Ingest Service. For the Ingest REST API Reference, see the Ingest REST API on the Splunk developer portal.
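As an illustration of sending JSON objects to the /events endpoint, the following minimal sketch builds, but does not send, an HTTP POST request that carries a JSON array of events. The URL, the bearer-token header, and the event field names (`body`, `sourcetype`, `source`, `host`) are assumptions for illustration only; check the Ingest REST API Reference for the exact path, authentication scheme, and schema that your DSP version accepts.

```python
import json
import urllib.request


def build_events_request(events, token, url):
    """Build (but do not send) a POST request carrying a JSON array of events.

    Assumptions: the endpoint accepts a JSON array and uses bearer-token
    authentication. Both are placeholders to verify against the Ingest
    REST API Reference.
    """
    payload = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # assumed auth scheme
        },
    )


# Hypothetical placeholder URL: replace it with your DSP host and the
# /events path from the Ingest REST API Reference.
url = "https://dsp.example.com/path/to/events"

# Hypothetical event fields, for illustration only.
events = [
    {"body": "Hello, DSP!", "sourcetype": "my_sourcetype", "source": "my_app", "host": "myhost"}
]
req = build_events_request(events, token="YOUR_TOKEN", url=url)
# To actually send the request: urllib.request.urlopen(req)
```

Building the request separately from sending it lets you inspect the payload and headers before pointing the code at a live endpoint.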

Send events using the Forwarders service

Send data from a Splunk forwarder to the Splunk Forwarders service. See Send events using a forwarder. For the Forwarders REST API Reference, see the Forwarders REST API on the Splunk developer portal.

Get data in using the Collect service

You can use the Collect service to manage how data collection jobs ingest event and metric data. See the Collect service documentation.

Get data in using a connector

A connector connects a data pipeline with an external data source. There are two types of connectors:

- Push-based connectors continuously send data from an external source into a pipeline. See Get data in with a push-based connector. The following push-based connectors are supported:

- Pull-based connectors collect events from external sources and send them into your DSP pipeline through the Collect service. See Get data in with a pull-based connector. The following pull-based connectors are supported:
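The difference between the two connector types can be pictured with a purely conceptual sketch. The function names and the list that stands in for a pipeline are hypothetical and are not part of any DSP API: a push-based connector forwards each record as the external source emits it, while a pull-based connector runs as a job that fetches a batch from the source and hands it on, which is the role the Collect service plays in DSP.

```python
from typing import Callable, Iterable, List


def run_push_connector(source: Iterable[dict], pipeline: List[dict]) -> None:
    """Push model (conceptual): forward each record as soon as the source emits it."""
    for record in source:
        pipeline.append(record)


def run_pull_job(fetch: Callable[[], List[dict]], pipeline: List[dict]) -> int:
    """Pull model (conceptual): one collection run fetches a batch and
    hands it to the pipeline. Returns the number of records collected."""
    batch = fetch()
    pipeline.extend(batch)
    return len(batch)


pipeline: List[dict] = []
run_push_connector(iter([{"id": 1}, {"id": 2}]), pipeline)
# Each call to run_pull_job represents one scheduled collection run.
count = run_pull_job(lambda: [{"id": 3}], pipeline)
print([r["id"] for r in pipeline])  # [1, 2, 3]
```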

Read from Splunk Firehose

Use Splunk Firehose to read data from the Ingest REST API, Forwarders, and Collect services. See Splunk Firehose.
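Conceptually, Splunk Firehose gives a pipeline a single source that covers data arriving through the Ingest REST API, Forwarders, and Collect services. The following sketch models that idea as a generator that yields every record from several services, tagged with the service it came from. The service keys and record shapes are hypothetical, and the sequential ordering is a simplification; it is not a description of how Firehose orders or interleaves data.

```python
from typing import Dict, Iterable, Iterator, Tuple


def firehose(services: Dict[str, Iterable[dict]]) -> Iterator[Tuple[str, dict]]:
    """Yield (service_name, record) pairs for every record from every service.

    Conceptual model only: records are yielded service by service,
    in dictionary order.
    """
    for name, records in services.items():
        for record in records:
            yield name, record


# Hypothetical per-service data.
services = {
    "ingest": [{"body": "a"}],
    "forwarders": [{"body": "b"}],
    "collect": [{"body": "c"}],
}
print([name for name, _ in firehose(services)])  # ['ingest', 'forwarders', 'collect']
```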

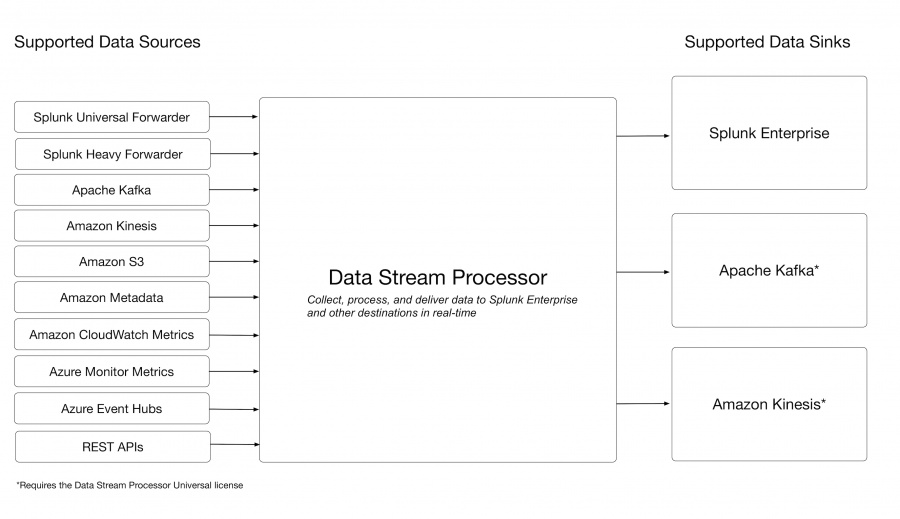

Supported data sinks

Use the Splunk Data Stream Processor to send data to the following sinks, or destinations:

Send data to a Splunk index

See Write to Index and Write to Splunk Enterprise in the Splunk Data Stream Processor Function Reference manual.

Send data to Apache Kafka

See Write to Kafka in the Splunk Data Stream Processor Function Reference manual. Sending data to Apache Kafka requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.

Send data to Amazon Kinesis

See Write to Kinesis in the Splunk Data Stream Processor Function Reference manual. Sending data to Amazon Kinesis requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.
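The license requirements above can be captured in a small lookup, for example as a pre-flight check before you build a pipeline. This page lists the DSP universal license requirement only for the Kafka and Kinesis sinks, so the sketch treats the Splunk index sink as not requiring it. The sink keys and the `blocked_sinks` helper are hypothetical names used for illustration.

```python
from typing import Iterable, List

# Based on this page: only the Kafka and Kinesis sinks are listed as
# requiring the DSP universal license. The keys are hypothetical labels.
REQUIRES_UNIVERSAL_LICENSE = {
    "splunk_index": False,  # Write to Index / Write to Splunk Enterprise
    "kafka": True,          # Write to Kafka
    "kinesis": True,        # Write to Kinesis
}


def blocked_sinks(sinks: Iterable[str], has_universal_license: bool) -> List[str]:
    """Return the sinks that cannot be used without the universal license."""
    if has_universal_license:
        return []
    return [s for s in sinks if REQUIRES_UNIVERSAL_LICENSE[s]]


print(blocked_sinks(["splunk_index", "kafka", "kinesis"], has_universal_license=False))
# ['kafka', 'kinesis']
```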

Architecture diagrams

The following diagram summarizes supported data sources and data sinks.

The following diagram shows the different services and ways that data can enter your data pipeline.

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.0.1
