Create pipelines for Edge Processors

To specify how you want your Edge Processors to process and route your data, you must create pipelines and apply them to the Edge Processors.

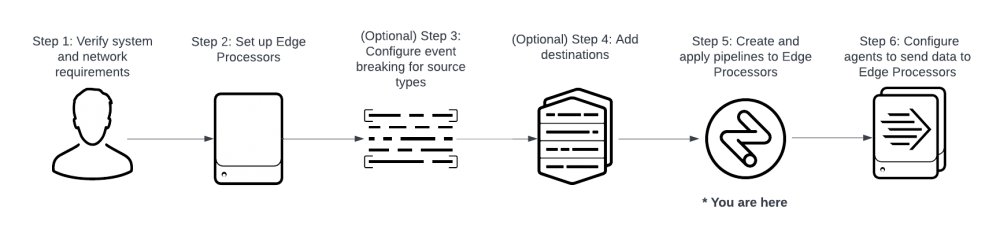

This is step 5 of 6 for using an Edge Processor to process data and route it to a destination. To see an overview of all of the steps, see Quick start: Process and route data using Edge Processors.

A pipeline is a set of data processing instructions written in the Search Processing Language, version 2 (SPL2). To create a valid pipeline, you must complete the following tasks:

- Define the pipeline's partition, or the subset of data that you want this pipeline to process.

- Optionally, add sample data so that you can preview and verify how your pipeline processes data.

- Specify the destination that the pipeline sends processed data to.

- Configure an SPL2 statement that defines what data to process, how to process it, and where to send the processed data to.

When you apply a pipeline to an Edge Processor, the Edge Processor uses those instructions to process the data that it receives.

Preventing data loss

Each pipeline creates a partition of the incoming data based on specified conditions, and only processes data that meets those conditions. For example, if you configure the partition of your pipeline to keep data that meets the condition sourcetype=buttercup, then your pipeline only accepts and processes events that have the sourcetype field set to buttercup. All other data is excluded from the pipeline.

As another example, if you configure the partition to remove data that meets the condition sourcetype=buttercup, then your pipeline only accepts and processes events that do not have the sourcetype field set to buttercup. Any data that has the sourcetype field set to buttercup is excluded from the pipeline.

If the Edge Processor doesn't have an additional pipeline that accepts the excluded data, that data is either routed to the default destination or dropped. To configure a default destination for unprocessed data, see Add or manage destinations and Add an Edge Processor.

As a best practice for preventing unwanted data loss, make sure to always have a default destination for your Edge Processors. Otherwise, all unprocessed data is dropped. See Add an Edge Processor.
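The partition and default destination behavior described above can be sketched as a small model. This is only an illustration, not Edge Processor code: the function names, the `"keep"`/`"remove"` strings, and the destination name `default_s3` are hypothetical, and only an equality condition is modeled.

```python
from typing import Optional


def partition_accepts(event: dict, field: str, value: str, action: str) -> bool:
    """Return True if a pipeline with this partition accepts the event.

    `action` is "keep" or "remove", hypothetical names that mirror the
    Keep and Remove options in the pipeline builder.
    """
    matches = event.get(field) == value
    if action == "keep":
        return matches
    if action == "remove":
        return not matches
    raise ValueError(f"unknown action: {action!r}")


def route(event: dict, partitions: list, default_destination: Optional[str]) -> str:
    """Simplified view of what happens to one event on an Edge Processor."""
    # If any applied pipeline accepts the event, that pipeline processes it.
    for field, value, action in partitions:
        if partition_accepts(event, field, value, action):
            return "processed by a pipeline"
    # Otherwise the event goes to the default destination, if one exists.
    if default_destination is not None:
        return f"sent unprocessed to {default_destination}"
    # With no default destination, unprocessed data is dropped.
    return "dropped"


keep_buttercup = [("sourcetype", "buttercup", "keep")]
print(route({"sourcetype": "buttercup"}, keep_buttercup, None))
print(route({"sourcetype": "syslog"}, keep_buttercup, None))
print(route({"sourcetype": "syslog"}, keep_buttercup, "default_s3"))
```

In this model, the second call shows the data loss case: the event matches no partition and there is no default destination, so it is dropped.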

Prerequisites

Before starting to create a pipeline, confirm the following:

- The source type of the data that you want the pipeline to process is listed on the Source types page of your tenant. If your source type is not listed, then you must add that source type to your tenant and configure event breaking and merging definitions for it. See Add source types for Edge Processors for more information.

- The destination that you want the pipeline to send data to is listed on the Destinations page of your tenant. If your destination is not listed, then you must add that destination to your tenant. See Add or manage destinations for more information.

Steps

Complete these steps to create a pipeline that receives data associated with a specific source type, source, or host, optionally processes it, and sends that data to a destination.

- Navigate to the Pipelines page and then select New pipeline.

- Select Blank pipeline and then select Next.

- Specify a subset of the data received by the Edge Processor for this pipeline to process. To do this, you must define a partition by completing these steps:

- Select the plus icon next to Partition or select the option that matches how you would like to create your partition in the Suggestions section.

- In the Field field, specify the event field that you want the partitioning condition to be based on.

- To specify whether the pipeline includes or excludes the data that meets the criteria, select Keep or Remove.

- In the Operator field, select an operator for the partitioning condition.

- In the Value field, enter the value that your partition should filter by to create the subset. Then select Apply. You can create as many conditions as you need for a partition by selecting the plus icon.

- Once you have defined your partition, select Next.

- (Optional) Enter or upload sample data for generating previews that show how your pipeline processes data.

The sample data must be in the same format as the actual data that you want to process. See Getting sample data for previewing data transformations for more information.

- Select Next to confirm your sample data or to go to the next step.

- Select the name of the destination that you want to send data to.

- (Optional) If you selected a Splunk platform S2S or Splunk platform HEC destination, you can configure index routing:

- Select one of the following options in the expanded destinations panel:

| Option | Description |
| --- | --- |
| Default | The pipeline does not route events to a specific index. If the event metadata already specifies an index, then the event is sent to that index. Otherwise, the event is sent to the default index of the Splunk platform deployment. |
| Specify index for events with no index | The pipeline only routes events to your specified index if the event metadata did not already specify an index. |
| Specify index for all events | The pipeline routes all events to your specified index. |

- If you selected Specify index for events with no index or Specify index for all events, then in the Index name field, select or enter the name of the index that you want to send your data to.

Be aware that the destination index is determined by a precedence order of configurations. See How does an Edge Processor know which index to send data to? for more information.

- Select Done to confirm the data destination.

After you complete the on-screen instructions, the pipeline builder displays the SPL2 statement for your pipeline.

- (Optional) Select the Preview Pipeline icon to generate a preview that shows what the sample data looks like when it passes through the pipeline.

- (Optional) To process the incoming data before sending it to a destination, add processing commands to the SPL2 statement. You can do that by selecting the plus icon next to Actions and selecting a data processing action, or by typing SPL2 commands and functions directly in the editor. Under the plus icon, you can also select Apply custom command function to discover, select, and apply user-defined SPL2 functions. This option is especially recommended for SPL2 beginners. For information about the supported SPL2 syntax, see Edge Processor pipeline syntax.

The pipeline builder includes the SPL to SPL2 conversion tool, which you can use to convert SPL into SPL2 that is valid for Edge Processor pipelines. See SPL to SPL2 Conversion tool in the SPL2 Search Reference.

To see how your pipeline processes your sample data with each added action, select the Actions icon on a data processing action, and select Preview up to this action. This generates a preview of your processed data up to the selected action.

- To save your pipeline, do the following:

- Select Save pipeline.

- In the Name field, enter a name for your pipeline.

- (Optional) In the Description field, enter a description for your pipeline.

- Select Save.

The pipeline is now listed on the Pipelines page, and you can now apply it to Edge Processors as needed.

- To apply this pipeline to an Edge Processor, do the following:

- Navigate to the Pipelines page.

- In the row that lists your pipeline, select the Actions icon and then select Apply/Remove.

- Select the Edge Processors that you want to apply the pipeline to, and then select Save.

You can only apply pipelines to Edge Processors that are in the Healthy status.

It can take a few minutes for this process to be completed. During this time, the affected Edge Processors enter the Pending status. To confirm that the process completed successfully, do the following:

- Navigate to the Edge Processors page. Then, verify that the Instance health column for the affected Edge Processors shows that all instances are back in the Healthy status.

- Navigate to the Pipelines page. Then, verify that the Applied column for the pipeline contains the "The pipeline is applied" icon.

You might need to refresh your browser to see the latest updates.

For information about other ways to apply pipelines to Edge Processors, see Apply pipelines to Edge Processors.

The Edge Processors that you applied the pipeline to can now process and route data as specified in the pipeline configuration.
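As a recap of the three index routing options from the destination step, the following sketch models which index a pipeline leaves on an event. It is a simplified illustration under stated assumptions: the function name and the option strings are hypothetical, and the model ignores the wider precedence order of configurations that determines the final destination index.

```python
from typing import Optional


def resolve_index(option: str,
                  event_index: Optional[str],
                  specified_index: Optional[str] = None) -> Optional[str]:
    """Return the index set on the event after the pipeline's routing option.

    A return value of None means no index is set, in which case the event
    goes to the default index of the Splunk platform deployment.
    Option strings are hypothetical stand-ins for the UI options.
    """
    if option == "default":
        # The pipeline does not route to a specific index.
        return event_index
    if option == "events_with_no_index":
        # Only fill in the index when the event metadata has none.
        return event_index if event_index is not None else specified_index
    if option == "all_events":
        # Always use the specified index.
        return specified_index
    raise ValueError(f"unknown option: {option!r}")


print(resolve_index("events_with_no_index", None, "main"))
print(resolve_index("events_with_no_index", "web", "main"))
print(resolve_index("all_events", "web", "main"))
```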

Next step

After creating a pipeline and applying it to your Edge Processor, you can configure data sources to send data to your Edge Processor. See Get data from a forwarder into an Edge Processor and Get data into an Edge Processor using HTTP Event Collector.

See also

- The Process data using pipelines chapter

- The Route data using pipelines chapter


This documentation applies to the following versions of Splunk Cloud Platform™: 9.0.2209, 9.0.2303, 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408, 9.3.2411 (latest FedRAMP release)
