Store expired Splunk Cloud Platform data in your private archive

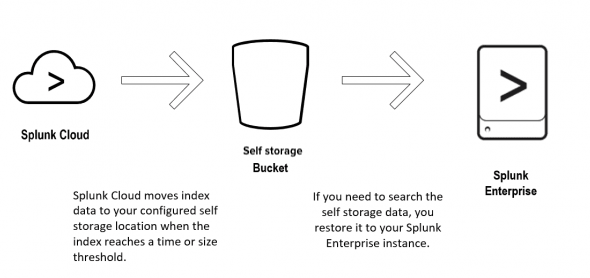

Dynamic Data Self Storage (DDSS) lets you move your data from your Splunk Cloud Platform indexes to a private storage location in your AWS or GCP environment. You can use DDSS to maintain access to older data that you might need for compliance purposes.

You can configure Splunk Cloud Platform to move data automatically from an index when the data reaches the end of the Splunk Cloud Platform retention period that you configure. You can also restore the data from your self storage location to a Splunk Enterprise instance. To ensure there is no data loss, DDSS maintains your data in the Splunk Cloud Platform environment until it is safely moved to your self storage location.

Requirements for Dynamic Data Self Storage

To configure a self storage location in your AWS or GCP environment, you must have sufficient permissions to create Amazon S3 or Google Cloud Storage buckets and apply policies or permissions to them. If you are not the AWS or GCP administrator for your organization, make sure that you can work with the appropriate administrator during this process to create the new bucket.

After you move the data to your self storage location, Splunk Cloud Platform does not maintain a copy of this data and does not provide access to manage the data in your self storage environment, so make sure that you understand how to maintain and monitor your data before moving it to the bucket.

If you intend to restore your data, you also need access to a Splunk Enterprise instance.

Performance

DDSS is designed to retain your expired data with minimal performance impact. DDSS also ensures that the export rate does not spike in the case of a large volume of data. For example, if you reduce the retention period from one year to ninety days, the volume increases, but the export rate does not spike. This ensures that changes in data volume do not impact performance.

For more information on DDSS performance and limits, see Service limits and constraints.

How Dynamic Data Self Storage works

Splunk Cloud Platform moves data to your self storage location when the index meets a configured size or time threshold. Note the following:

- If an error occurs, a connection issue occurs, or a specified storage bucket is unavailable or full, Splunk Cloud Platform attempts to move the data every 15 minutes until it can successfully move the data.

- Splunk Cloud Platform does not delete data from the Splunk Cloud Platform environment until it has successfully moved the data to your self storage location.

- Data is encrypted by SSL during transit to your self storage location. Because Splunk Cloud Platform encryption applies only to data within Splunk buckets, you might want to encrypt data in the target bucket after transit. For Amazon S3 buckets, to ensure your data is protected, enable AES256 SSE-S3 on your target bucket so that data encryption resumes immediately upon arrival at the SSE-S3 bucket. Enabling AES256 SSE-S3 provides server-side encryption with Amazon S3 Managed keys (SSE-S3) only. This feature does not work with KMS keys. For GCP buckets, data encryption is enabled by default.
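As an illustration of the SSE-S3 recommendation above, the following minimal sketch builds the default-encryption configuration that selects AES256 and the matching `aws s3api put-bucket-encryption` command line. It assumes the AWS CLI is installed and configured. The function names are hypothetical helpers, and the bucket name reuses the example prefix from this topic.

```python
import json


def sse_s3_encryption_config() -> dict:
    """Return a default-encryption configuration that selects SSE-S3 (AES256)."""
    return {
        "Rules": [
            {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
        ]
    }


def put_bucket_encryption_command(bucket_name: str) -> list[str]:
    """Build the AWS CLI argument list that applies SSE-S3 to a bucket.

    The command is only constructed here, not executed.
    """
    return [
        "aws", "s3api", "put-bucket-encryption",
        "--bucket", bucket_name,
        "--server-side-encryption-configuration",
        json.dumps(sse_s3_encryption_config()),
    ]


if __name__ == "__main__":
    # Example bucket name; replace it with your own prefixed bucket name.
    print(" ".join(put_bucket_encryption_command(
        "buttercupcloudworks-rs73hfjie674-archive")))
```

Building the argument list separately from running it makes it easy to review the command before you apply it to a bucket.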

After Splunk Cloud Platform moves your data to your self storage location, you can maintain the data using your cloud provider's tools. If you need to restore the data so that it is searchable, you can restore the data to a Splunk Enterprise instance. The data is restored to a thawed directory, which exists outside of the thresholds for deletion you have configured on your Splunk Enterprise instance. You can then search the data and delete it when you finish.

When you restore data to a thawed directory on Splunk Enterprise, it does not count against the indexing license volume for the Splunk Enterprise or Splunk Cloud Platform deployment.

Configure self storage locations

You can set up one or more buckets in Amazon S3 or Google Cloud Storage (GCS) to store your expired data. For configuration details, see Configure self storage in Amazon S3 and Configure self storage in GCP in this topic.

To manage self storage locations in Splunk Cloud Platform, your role must hold the indexes_edit capability. The sc_admin role holds this capability by default. All self storage configuration changes are logged in the audit.log file.

For information on how to configure DDSS self storage locations programmatically without using Splunk Web, see Manage DDSS self storage locations in the Admin Config Service Manual.

Configure self storage in Amazon S3

To configure a new self storage location in Amazon S3, you must create an S3 bucket in your AWS environment and configure the S3 bucket as a new self storage location in the Splunk Cloud Platform UI. When you configure the S3 bucket as a new storage location, Splunk Cloud Platform generates a resource-based bucket policy that you must copy and paste to your S3 bucket to grant Splunk Cloud Platform the required access permissions.

For information on how to create and manage Amazon S3 buckets, see the AWS documentation. For information on the differences between AWS identity-based policies and resource-based policies, see https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_identity-vs-resource.html

Create an Amazon S3 bucket in your AWS environment

When creating an Amazon S3 bucket, follow these important configuration guidelines:

- Region: You must provision your Amazon S3 bucket in the same region as your Splunk Cloud Platform environment.

- Object Lock: Do not activate AWS S3 Object Lock when creating a bucket. Locking the bucket prevents DDSS from moving data to the bucket. For more information, see https://docs.aws.amazon.com/AmazonS3/latest/user-guide/object-lock.html

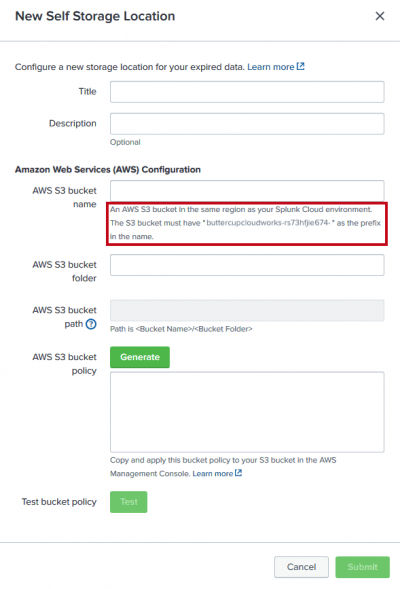

- Naming: When you name the S3 bucket, it must include the Splunk prefix provided to you and displayed in the UI under the AWS S3 bucket name field. Enter the prefix before the rest of the bucket name. This prefix contains your organization's Splunk Cloud ID, which is the first part of your organization's Splunk Cloud URL, and a 12-character string. The complete S3 bucket name has the following syntax:

{Splunk Cloud ID}-{12-character string}-{your bucket name}

- For example, if you administer Splunk Cloud Platform for Buttercup Cloudworks, and your organization's Splunk Cloud URL is buttercupcloudworks.splunkcloud.com, then your Splunk Cloud ID is buttercupcloudworks. The image shows the following example prefix you'd see when configuring an S3 bucket using the New Self Storage Location dialog box:

buttercupcloudworks-rs73hfjie674-{your bucket name}

- If you do not use the correct prefix, Splunk cannot write to your bucket. By default, your Splunk Cloud Platform instance has a security policy applied which disallows write operations to S3 buckets that do not include your Splunk Cloud ID. This security policy allows the write operation only for those S3 buckets that you create for the purpose of storing your expired Splunk Cloud data.
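The naming rule above can be checked before you create the bucket. The following sketch, with hypothetical helper names, joins the Splunk-provided prefix and your own suffix, then checks the result against the general Amazon S3 naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit). It does not contact AWS or Splunk Cloud Platform.

```python
import re

# General Amazon S3 bucket naming rules, expressed as one pattern.
S3_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def build_bucket_name(splunk_prefix: str, suffix: str) -> str:
    """Join the Splunk-provided prefix and your suffix into a bucket name."""
    name = f"{splunk_prefix}-{suffix}"
    if not S3_NAME.fullmatch(name):
        raise ValueError(f"not a valid S3 bucket name: {name!r}")
    return name


def has_required_prefix(bucket_name: str, splunk_prefix: str) -> bool:
    """Check that a bucket name starts with the prefix shown in the UI."""
    required = splunk_prefix + "-"
    return bucket_name.startswith(required) and len(bucket_name) > len(required)


if __name__ == "__main__":
    # The prefix below is the example prefix from this topic.
    print(build_bucket_name("buttercupcloudworks-rs73hfjie674", "ddss-archive"))
```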

If you have enabled AES256 SSE-S3 on your target bucket, the data will resume encryption at rest upon arrival at the SSE-S3 bucket.

Configure a self storage location for the Amazon S3 bucket

To configure your Amazon S3 bucket as a self storage location in Splunk Cloud Platform:

- In Splunk Web, click Settings > Indexes > New Index.

- In the Dynamic Data Storage field, click the radio button for Self Storage.

- Click Create a self storage location.

The Dynamic Data Self Storage page opens.

- Give your location a Title and an optional Description.

- In the Amazon S3 bucket name field, enter the name of the S3 bucket that you created.

- (Optional) Enter the bucket folder name.

- Click Generate. Splunk Cloud Platform generates a bucket policy.

- Copy the bucket policy to your clipboard. Note: Customers with an SSE-S3 encrypted bucket must use the default policy and not modify the policy in any way.

- In a separate window, navigate to your AWS Management console and apply this policy to the S3 bucket you created earlier.

- In the Self Storage Locations dialog, click Test.

Splunk Cloud Platform writes a 0 KB test file to the root of your S3 bucket to verify that Splunk Cloud Platform has permissions to write to the bucket. A success message displays, and the Submit button is enabled.

- Click Submit.

- In the AWS Management Console, verify that the 0 KB test file appears in the root of your bucket.

You cannot edit or delete a self storage location after it is defined, so verify the name and description before you save it.

Configure self storage in GCP

To configure a new self storage location in GCP, you must create a Google Cloud Storage (GCS) bucket in your GCP environment and configure the GCS bucket as a new self storage location in the Splunk Cloud Platform UI. For detailed information on how to create and manage GCS buckets, see the GCP documentation.

Create a GCS bucket in your GCP environment

When creating a GCS bucket, follow these important configuration guidelines:

Bucket configurations that deviate from these configuration guidelines can incur unintentional GCS charges and interfere with DDSS successfully uploading objects to your GCS bucket.

- Region: You must provision your GCS buckets in the same GCP region as your Splunk Cloud Platform deployment. Your GCP region depends on your location. For more information, see Available regions.

- Bucket lock/bucket retention policy: Do not set a retention policy for your GCS bucket. The bucket lock/bucket retention policy feature is not compatible with the GCS parallel composite upload feature DDSS uses to transfer files to GCS buckets and can interfere with data upload. For more information on parallel composite uploads, see https://cloud.google.com/storage/docs/parallel-composite-uploads.

- Default storage class: Make sure to use the Standard default storage class when you create your GCS bucket. Using other default storage classes can incur unintentional GCS charges.

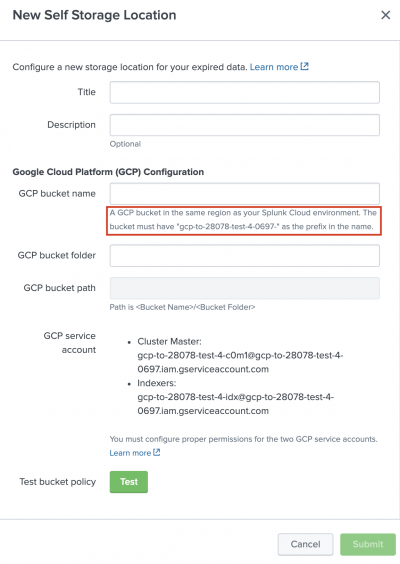

- Permissions: You must configure permissions for the 2 GCP service accounts associated with your Splunk Cloud Platform deployment. These service accounts are shown under GCP service account in the New Self Storage Location modal when you configure a new self storage location in Splunk Web.

- To configure permissions for the GCP service accounts, you must assign the following predefined GCP roles to the 2 GCP service accounts using the GCP console:

Storage Legacy Bucket Writer

Storage Legacy Object Reader

- For more information on GCP roles, see IAM roles for Cloud Storage in the GCP documentation.

- Naming: Your GCS bucket name must include the prefix that Splunk Cloud Platform provides and displays in the UI under the GCP bucket name field. The following image shows an example of this prefix. This prefix contains your Splunk Cloud Platform ID, which is the first part of your Splunk Cloud Platform URL, and a 4-character string. The complete GCS bucket name has the following syntax:

{Splunk Cloud ID}-{4-character string}-{your bucket name}
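To double-check the permissions guideline above, you can export the bucket's IAM policy as JSON (for example with `gsutil iam get gs://<bucket>`) and confirm that both service accounts hold both roles. The sketch below assumes the standard IAM policy shape with a `bindings` list and the role IDs `roles/storage.legacyBucketWriter` and `roles/storage.legacyObjectReader`. The function name and the service account addresses in the usage example are hypothetical.

```python
REQUIRED_ROLES = (
    "roles/storage.legacyBucketWriter",
    "roles/storage.legacyObjectReader",
)


def missing_role_bindings(iam_policy: dict, service_accounts: list[str]) -> dict[str, list[str]]:
    """Return the required roles that each service account still lacks."""
    granted: dict[str, set[str]] = {}
    for binding in iam_policy.get("bindings", []):
        for member in binding.get("members", []):
            granted.setdefault(member, set()).add(binding.get("role"))

    missing: dict[str, list[str]] = {}
    for account in service_accounts:
        roles = granted.get(f"serviceAccount:{account}", set())
        gaps = [role for role in REQUIRED_ROLES if role not in roles]
        if gaps:
            missing[account] = gaps
    return missing


if __name__ == "__main__":
    # Hypothetical policy and account names, for illustration only.
    policy = {
        "bindings": [
            {"role": "roles/storage.legacyBucketWriter",
             "members": ["serviceAccount:cm@example.iam.gserviceaccount.com"]},
        ]
    }
    print(missing_role_bindings(policy, ["cm@example.iam.gserviceaccount.com"]))
```

An empty result means both accounts hold both roles on the bucket.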

Configure a self storage location for the GCS bucket

To configure your GCS bucket as a self storage location:

- In Splunk Web, select Settings > Indexes > New Index.

- Under Dynamic Data Storage, select the Self Storage radio button.

- Select Create a self storage location.

The Dynamic Data Self Storage Locations page opens.

- Select New Self Storage Location.

The New Self Storage Location modal opens.

- Give your new storage location a Title and a Description (optional).

- In the GCP bucket name field, enter the name of the GCS bucket you created.

- (Optional) In the GCP bucket folder field, enter the name of the GCS bucket folder.

- Under GCP service account, note the 2 service account strings. In your GCP console, make sure that each service account is assigned the proper GCP roles of Storage Legacy Bucket Writer and Storage Legacy Object Reader, as discussed under "Permissions" in the previous section.

- Select Test.

Splunk Cloud Platform writes a 0 KB test file to the root of your GCS bucket to verify that Splunk Cloud Platform has permissions to write to the bucket. A success message appears, and the Submit button is activated.

- Select Submit.

Manage self storage settings for an index

Enable Dynamic Data Self Storage on any Splunk Cloud Platform index to allow expired data to be stored to an Amazon S3 or GCP bucket.

Managing self storage settings requires the Splunk indexes_edit capability. All self storage configuration changes are logged in the audit.log file.

Enable self storage for an index

Prerequisite

You must have configured a self storage location. See Configure self storage locations for details.

- Go to Settings > Indexes.

- Click New Index to create a new index, or click Edit in the Actions column for an existing index.

- In the Dynamic Data Storage field, click the radio button for Self Storage.

- Select a storage location from the drop-down list.

- Click Save.

Disable self storage for an index

If you disable self storage for an index, expired data is deleted.

- Go to Settings > Indexes.

- Click Edit in the Actions column for the index you want to manage.

- In the Dynamic Data Storage field, click the radio button for No Additional Storage.

- Click Save. Self storage is disabled for this index. When data in this index expires, it is deleted.

Disabling self storage for an index does not change the configuration of the external location, nor does it delete the external location or the data stored there. Disabling self storage also does not affect the time or size of the data retention policy for the index.

Verify Splunk Cloud Platform successfully moved your data

To verify that your data was successfully moved to your self storage location, you can search the splunkd.log files.

You must have an sc_admin role to search the splunkd.log files.

Follow the appropriate procedure for your deployment's Splunk Cloud Platform Experience. For how to determine if your deployment uses the Classic Experience or Victoria Experience, see Determine your Splunk Cloud Platform Experience.

Classic Experience procedure

- Search your splunkd.log files to view the self storage logs. You search these files in Splunk Web by running the following search:

index="_internal" component=SelfStorageArchiver

- Search to see which buckets were successfully moved to the self storage location:

index="_internal" component=SelfStorageArchiver "Successfully transferred"

- Verify that all the buckets you expected to move were successfully transferred.

Victoria Experience procedure

- Search your splunkd.log files to view the self storage logs. You search these files in Splunk Web by running the following search:

index="_internal" "transferSelfStorage Successfully transferred raw data to self storage"

The results of this search also show you which buckets were successfully moved to the self storage location.

- Verify that all the buckets you expected to move were successfully transferred.
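If you export the results of either verification search as raw event text, the final verification step can be automated. The sketch below is one possible approach: it assumes that each success event contains the phrase "Successfully transferred" and mentions the bucket name somewhere in its text. The exact layout of the log message is not documented in this topic, so the sample events in the usage example are made-up placeholders, not real splunkd.log output, and the function name is hypothetical.

```python
import re


def untransferred_buckets(expected_buckets: list[str], raw_events: list[str]) -> list[str]:
    """Return the expected bucket names that no success event mentions."""
    success_events = [e for e in raw_events if "Successfully transferred" in e]
    missing = []
    for bucket in expected_buckets:
        # Match the name as a whole token so db_20_10_7 does not match db_20_10_70.
        pattern = re.compile(rf"(?<![\w-]){re.escape(bucket)}(?![\w-])")
        if not any(pattern.search(event) for event in success_events):
            missing.append(bucket)
    return missing


if __name__ == "__main__":
    # Placeholder events for illustration; real log lines look different.
    events = [
        "placeholder: Successfully transferred raw data to self storage bucket=db_20_10_70",
        "placeholder: some other message about db_20_10_7",
    ]
    print(untransferred_buckets(["db_20_10_7", "db_20_10_70"], events))
```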

Monitor changes to self storage settings

You might want to monitor changes to self storage settings to ensure that the self storage locations and settings meet your company's requirements over time. When you make changes to self storage settings, Splunk Cloud Platform logs the activity to the audit.log file. You can search these log entries in Splunk Web by running the following search.

index="_audit"

Note that Splunk Cloud Platform cannot monitor the settings for the self storage bucket on AWS or GCP.

For information about monitoring your self storage buckets, see the following:

- AWS: Access the Amazon S3 documentation and search for "Monitoring Tools".

- GCP: Access the Google Cloud Storage documentation and search for Working with Buckets and Cloud Monitoring.

The following examples apply to AWS and GCP and show the log entries available for monitoring your self storage settings.

Log entry for a new self storage location

Splunk Cloud Platform logs the activity when you create a new self storage location. For example:

10-01-2017 11:28:26.180 -0700 INFO AuditLogger - Audit:[timestamp=10-01-2017 11:28:26.180, user=splunk-system-user, action=self_storage_enabled, info="Self storage enabled for this index.", index="dynamic_data_sample" ][n/a]

You can search these log entries in Splunk Web by running the following search.

index="_audit" action=self_storage_create

Log entry when you remove a self storage location

Splunk Cloud Platform logs the activity when you remove a self storage location. For example:

10-01-2017 11:33:46.180 -0700 INFO AuditLogger - Audit:[timestamp=10-01-2017 11:33:46.180, user=splunk-system-user, action=self_storage_disabled, info="Self storage disabled for this index.", index="dynamic_data_sample" ][n/a]

You can search these log entries in Splunk Web by running the following search.

index="_audit" action=self_storage_disabled

Log entry when you change settings for a self storage location

Splunk Cloud Platform logs the activity when you change the settings for a self storage location. For example:

09-25-2017 21:14:21.190 -0700 INFO AuditLogger - Audit:[timestamp=09-25-2017 21:14:21.190, user=splunk-system-user, action=self_storage_edit, info="A setting that affects data retention was changed.", index="dynamic_data_sample", setting="frozenTimePeriodInSecs", old_value="440", new_value="5000" ][n/a]

The following table shows settings that might change.

| Field | Description |
|---|---|
| info="Archiver index setting changed." | Notification that you successfully changed self storage settings for the specified index. |
| index="dynamic_data_sample" | Name of the index for which self storage settings were modified. |
| setting="frozenTimePeriodInSecs" | The number of seconds before an event is removed from an index. This value is specified in days when you configure index settings. |
| old_value="440" | Value before the setting was updated. |
| new_value="5000" | Value after the setting has been updated. |

You can search these log entries in Splunk Web by running the following search.

index="_audit" action=self_storage_edit
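If you export audit events for further processing, the key=value pairs inside the `Audit:[...]` portion can be pulled apart with a small parser. The following sketch is written against the example entry shown above and assumes that other entries follow the same layout. The function name is hypothetical.

```python
import re

# Captures the text between "Audit:[" and the closing "][".
AUDIT_BODY = re.compile(r"Audit:\[(.*)\]\[")
# Matches key="quoted value" or key=bare value (up to the next comma).
FIELD = re.compile(r'(\w+)=(?:"([^"]*)"|([^,\]]+))')


def parse_audit_entry(line: str) -> dict[str, str]:
    """Extract the key=value fields from one audit log entry."""
    body = AUDIT_BODY.search(line)
    if body is None:
        return {}
    fields = {}
    for key, quoted, bare in FIELD.findall(body.group(1)):
        fields[key] = bare.strip() if bare else quoted
    return fields


if __name__ == "__main__":
    entry = (
        '09-25-2017 21:14:21.190 -0700 INFO AuditLogger - Audit:[timestamp=09-25-2017 '
        '21:14:21.190, user=splunk-system-user, action=self_storage_edit, '
        'info="A setting that affects data retention was changed.", '
        'index="dynamic_data_sample", setting="frozenTimePeriodInSecs", '
        'old_value="440", new_value="5000" ][n/a]'
    )
    fields = parse_audit_entry(entry)
    print(fields["setting"], fields["old_value"], "->", fields["new_value"])
```

Because frozenTimePeriodInSecs is logged in seconds, divide the old and new values by 86400 if you want to compare them with the day-based retention setting in Splunk Web.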

Restore indexed data from a self-storage location

You might need to restore indexed data from a self-storage location. You restore this data by moving the exported data into a thawed directory on a Splunk Enterprise instance, such as $SPLUNK_HOME/var/lib/splunk/defaultdb/thaweddb. When it is restored, you can then search it.

You can restore one bucket at a time. Make sure that you are restoring an entire bucket that contains the rawdata journal, and not a directory within a bucket.

An entire bucket initially contains a rawdata journal and associated tsidx and metadata files. During the DDSS archival process, only the rawdata journal is retained.
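Before you rebuild, it can help to confirm that a downloaded directory is a whole bucket rather than a directory inside one. The sketch below is a rough check under two assumptions: the directory name follows the db_timestamp_timestamp_bucketID pattern used in this topic, and the rawdata journal sits in a non-empty `rawdata` subdirectory, as in the usual Splunk bucket layout. The function name is hypothetical.

```python
import re
from pathlib import Path

# db_<timestamp>_<timestamp>_<bucketID>; the ID part may carry extra characters.
BUCKET_DIR = re.compile(r"db_\d+_\d+_[\w-]+")


def looks_like_whole_bucket(path: Path) -> bool:
    """Return True if path is named like a bucket and holds rawdata files."""
    rawdata = path / "rawdata"
    return (
        BUCKET_DIR.fullmatch(path.name) is not None
        and rawdata.is_dir()
        and any(rawdata.iterdir())
    )


if __name__ == "__main__":
    import sys

    # Pass one or more candidate directories on the command line.
    for candidate in sys.argv[1:]:
        print(candidate, looks_like_whole_bucket(Path(candidate)))
```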

For more information on buckets, see How the indexer stores indexes in Splunk Enterprise Managing Indexers and Clusters of Indexers.

Data in the thaweddb directory is not subject to the server's index aging scheme, which prevents it from immediately expiring upon being restored. You can put archived data in the thawed directory for as long as you need it. When the data is no longer needed, simply delete it or move it out of the thawed directory.

As a best practice, restore your data using a *nix machine. Using a Windows machine to restore indexed data to a Splunk Enterprise instance might result in a benign error message. See Troubleshoot Dynamic Data Self Storage.

Restore indexed data from an AWS S3 bucket

- Set up a Splunk Enterprise instance. The Splunk Enterprise instance can be either local or remote. If you have an existing Splunk Enterprise instance, you can use it.

You can restore self storage data only to a Splunk Enterprise instance. You can't restore self storage data to a Splunk Cloud Platform instance.

- Install the AWS Command Line Interface tool on your local machine. The AWS CLI tool must be installed in the same location as the Splunk Enterprise instance responsible for rebuilding.

- Configure the AWS CLI tool with the credentials of your AWS self storage location. For instructions on configuring the AWS CLI tool, see the Amazon Command Line Interface Documentation.

- Use the recursive copy command to download data from the self storage location to the thaweddb directory for your index. You can restore only one bucket at a time. If you have a large number of buckets to restore, consider using a script to do so. Use syntax similar to the following:

aws s3 cp s3://<self storage bucket>/<self_storage_folder(s)>/<index_name> <SPLUNK_HOME>/var/lib/splunk/<index_name>/thaweddb/ --recursive

Make sure you copy all the contents of the archived Splunk bucket because they are needed to restore the data. For example, copy starting at the following level: db_timestamp_timestamp_bucketID. Do not copy the data at the level of raw data (.gz files). The buckets display in the thaweddb directory of your Splunk Enterprise instance.

- Restore the indexes by running the following command:

./splunk rebuild <SPLUNK_HOME>/var/lib/splunk/<index_name>/thaweddb/<bucket_folder> <index_name>

When the index is successfully restored, a success message displays and additional bucket files are added to the thawed directory, including tsidx source types.

- After the data is restored, go to the Search & Reporting app, and search on the restored index as you would any other Splunk index.

When you restore data to the thawed directory on Splunk Enterprise, it does not count against the indexing license volume for the Splunk Enterprise or Splunk Cloud Platform deployment.
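Because buckets are restored one at a time, a short script can generate the copy and rebuild commands for many buckets. The following sketch only builds the command lists from the syntax shown in the steps above; a separate helper runs them. It assumes that the bucket folders sit directly under the index folder in your self storage location and that the splunk executable is in the bin directory of your Splunk Enterprise installation. All names and paths in the usage example are hypothetical.

```python
import subprocess


def restore_commands(s3_bucket: str, folder: str, index_name: str,
                     bucket_dirs: list[str], splunk_home: str) -> list[list[str]]:
    """Build one copy command and one rebuild command per archived bucket."""
    thawed = f"{splunk_home}/var/lib/splunk/{index_name}/thaweddb"
    commands = []
    for bucket_dir in bucket_dirs:
        source = f"s3://{s3_bucket}/{folder}/{index_name}/{bucket_dir}"
        target = f"{thawed}/{bucket_dir}"
        # Download the whole bucket folder, then rebuild it in place.
        commands.append(["aws", "s3", "cp", source, target, "--recursive"])
        commands.append([f"{splunk_home}/bin/splunk", "rebuild", target, index_name])
    return commands


def run_all(commands: list[list[str]]) -> None:
    """Run each command in order and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Hypothetical values; print the commands for review before running them.
    for command in restore_commands(
            "buttercupcloudworks-rs73hfjie674-archive", "frozen", "main",
            ["db_1506000000_1505000000_7"], "/opt/splunk"):
        print(" ".join(command))
```

Printing the commands first, and calling `run_all` only after you review them, keeps a mistyped path from copying data into the wrong directory.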

Restore indexed data from a GCP bucket

- Set up a Splunk Enterprise instance. The Splunk Enterprise instance can be either local or remote. If you have an existing Splunk Enterprise instance, you can use it.

You can restore self storage data only to a Splunk Enterprise instance. You can't restore self storage data to a Splunk Cloud Platform instance.

- Install the GCP command line interface tool, gsutil, on your local machine. The GCP CLI tool must be installed in the same location as the Splunk Enterprise instance responsible for rebuilding.

- Configure the gsutil tool with the credentials of your GCP self storage location. For instructions on configuring the gsutil tool, see the gsutil tool documentation.

- Use the recursive copy command to download data from the self storage location to the thaweddb directory for your index. You can restore only one bucket at a time. If you have a large number of buckets to restore, consider using a script to do so. Use syntax similar to the following:

gsutil cp -r gs://<self storage bucket>/<self_storage_folder(s)>/<index_name> <SPLUNK_HOME>/var/lib/splunk/<index_name>/thaweddb/

Make sure you copy all the contents of the archived Splunk bucket because they are needed to restore the data. For example, copy starting at the following level: db_timestamp_timestamp_bucketID. Do not copy the data at the level of raw data (.gz files). The buckets display in the thaweddb directory of your Splunk Enterprise instance.

- Restore the indexes by running the following command:

./splunk rebuild <SPLUNK_HOME>/var/lib/splunk/<index_name>/thaweddb/<bucket_folder> <index_name>

When the index is successfully restored, a success message displays and additional bucket files are added to the thawed directory, including tsidx source types.

- After the data is restored, go to the Search & Reporting app, and search on the restored index as you would any other Splunk index.

When you restore data to the thawed directory on Splunk Enterprise, it does not count against the indexing license volume for the Splunk Enterprise or Splunk Cloud Platform deployment.

Troubleshoot DDSS with AWS S3

This section lists possible errors when implementing DDSS with AWS S3.

I don't know the region of my Splunk Cloud Platform environment

I received the following error when testing my self storage location:

Your S3 bucket must be in the same region as your Splunk Cloud Platform environment <AWS Region> .

Diagnosis

Splunk Cloud Platform detected that you created your S3 bucket in a different region than your Splunk Cloud Platform environment.

Solution

If you are unsure of the region of your Splunk Cloud Platform environment, review the error message. The <AWS Region> portion of the error message displays the correct region to create your S3 bucket. After you determine the region, repeat the steps to create the self storage location.

I received an error when testing the self storage location

When I attempted to create a new self storage location, the following error occurred when I clicked the Test button:

Unable to verify the region of your S3 bucket, unable to get bucket_region to verify. An error occurred (403) when calling the Headbucket operation: Forbidden. Contact Splunk Support.

Diagnosis

You might get an error for the following reasons:

- You modified the permissions on the bucket policy.

- You pasted the bucket policy into the incorrect Amazon S3 bucket.

- You did not paste the bucket policy to the Amazon S3 bucket, or you did not save the changes.

- An error occurred during provisioning.

Solution

- Ensure that you did not modify the S3 bucket permissions. The following actions must be allowed: s3:PutObject, s3:GetObject, s3:ListBucket, s3:ListBucketVersions, s3:GetBucketLocation.

- Verify that you applied the bucket policy to the correct S3 bucket, and that you saved your changes.

- If you created the S3 bucket in the correct region, the permissions are correct and you applied and saved the bucket policy to the correct S3 bucket, contact Splunk Support to further troubleshoot the issue.

To review the steps to create the S3 bucket, see Configure self storage in Amazon S3 in this topic.

To review how to apply a bucket policy, see the Amazon AWS S3 documentation and search for "how do I add an S3 bucket policy?".
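To check the first item in the solution above, you can compare the policy attached to the bucket with the list of required actions. The sketch below parses a bucket policy in the standard AWS JSON policy format and reports which required actions no Allow statement names explicitly. It does not expand wildcards such as `s3:*` or evaluate principals and resources. The function name is hypothetical, and the policy in the usage example is a made-up illustration, not the policy that Splunk Cloud Platform generates.

```python
import json

REQUIRED_ACTIONS = {
    "s3:PutObject",
    "s3:GetObject",
    "s3:ListBucket",
    "s3:ListBucketVersions",
    "s3:GetBucketLocation",
}


def missing_actions(policy_json: str) -> set[str]:
    """Return the required actions that no Allow statement lists by name."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # A single statement may not be in a list.
        statements = [statements]

    allowed: set[str] = set()
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # Action may be one string or a list.
            actions = [actions]
        allowed.update(actions)
    return REQUIRED_ACTIONS - allowed


if __name__ == "__main__":
    # Made-up policy for illustration only.
    example = json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["s3:PutObject", "s3:GetObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        }],
    })
    print(sorted(missing_actions(example)))
```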

I'm using Splunk Cloud Platform for a US government entity, and received an error message that the bucket couldn't be found.

I received the following error message:

Cannot find the bucket '{bucket_name}', ensure that the bucket is created in the '{region_name} region.

Diagnosis

For security reasons, S3 bucket names aren't global for US government entities using Splunk Cloud Platform because Splunk can only verify the region of the stack. Buckets with the same name can exist in the available AWS regions that Splunk Cloud Platform supports. For more information, see the Available regions section in the Splunk Cloud Platform Service Description. For more information about AWS GovCloud (US), see the AWS GovCloud (US) website and the AWS GovCloud (US) User Guide.

Solution

If buckets that share the same name must exist in both regions, add the missing bucket to the appropriate region.

Troubleshoot DDSS with GCP

I received a region error

I received one of the following errors when testing my GCP self storage location:

Error 1

Unable to verify the region of your bucket=<bucket-id>.404 GET https://storage.googleapis.com/storage/v1/b/<bucket-id>?projection=noAcl&prettyPrint=false: Not Found. Contact Splunk Support.

Error 2

Unable to verify the region of your bucket=<bucket-id>.403 GET https://storage.googleapis.com/storage/v1/b/<bucket-id>?projection=noAcl&prettyPrint=false: <gcp-cm-serviceaccount> does not have storage.buckets.get access to the Google Cloud Storage bucket. Contact Splunk Support.

Error 3

Your bucket in US-EAST4 is NOT in the same region as your Splunk Cloud environment: US-CENTRAL1.

Diagnosis

Splunk Cloud detected region or permissions issues with your GCP self storage location that must be resolved.

Solution

Error 1

This error indicates that the bucket might not exist or that the service account cannot read it. Confirm that the bucket exists and that it has the correct permissions.

Error 2

This indicates an issue with the DDSS bucket access. Splunk Cloud GCP CM and IDX service accounts must have access to the bucket. Ensure that both service accounts have Storage Legacy Bucket Writer role access to the DDSS bucket.

Error 3

This error indicates that the assigned region for the GCP bucket does not match the assigned region for your Splunk Cloud Platform environment.

When using DDSS with GCP, Splunk Cloud Platform does not support multi-region buckets.

To review the steps to create the GCP bucket in your GCP environment and then configure it for Splunk Cloud Platform, see Configure self storage in GCP in this topic.
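The region rules above can be expressed as a small pre-check that you run before you test the self storage location. The sketch below compares a bucket's location with the region of your Splunk Cloud Platform environment and flags the GCS multi-region locations (US, EU, and ASIA), which DDSS does not support. The function name and return values are hypothetical, and you supply both location strings yourself.

```python
# Multi-region location names used by Google Cloud Storage.
MULTI_REGION_LOCATIONS = {"US", "EU", "ASIA"}


def check_bucket_location(bucket_location: str, splunk_region: str) -> str:
    """Classify a bucket location as 'ok', 'mismatch', or 'multi-region'."""
    bucket = bucket_location.strip().upper()
    region = splunk_region.strip().upper()
    if bucket in MULTI_REGION_LOCATIONS:
        return "multi-region"
    if bucket != region:
        return "mismatch"
    return "ok"


if __name__ == "__main__":
    # The regions from the Error 3 example in this topic.
    print(check_bucket_location("US-EAST4", "US-CENTRAL1"))
```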

I received an error when testing the self storage location

When I attempted to create a new GCP self storage location, I received one of the following errors when I clicked the Test button.

- The GCP CM service account doesn't have create objects access.

Something went wrong with bucket access. Check that the bucket exists and that the service account is granted permission. Error details: 403 POST https://storage.googleapis.com/upload/storage/v1/b/<bucket-id>/o?uploadType=multipart: { "error": { "code": 403, "message": "<gcp-cm-serviceaccount> does not have storage.objects.create access to the Google Cloud Storage object.", "errors": [ { "message": "<gcp-cm-serviceaccount> does not have storage.objects.create access to the Google Cloud Storage object.", "domain": "global", "reason": "forbidden" } ] } } : ('Request failed with status code', 403, 'Expected one of', <HTTPStatus.OK: 200>)

- The GCP CM service account doesn't have delete objects access.

Something went wrong with bucket access. Check that the bucket exists and that the service account is granted permission. Error details: 403 DELETE https://storage.googleapis.com/storage/v1/b/<bucket-id>/o/splunk_bucket_policy_test_file1619122815.2109005?generation=1619122815419331&prettyPrint=false: <gcp-cm-serviceaccount> does not have storage.objects.delete access to the Google Cloud Storage object.

Diagnosis

You might get an error for the following reasons:

- The GCP CM and IDX service accounts must have CRUD access to the bucket. Ensure that both service accounts have the same permissions to the bucket.

- You did not assign the correct GCP role in the GCP service account field. The correct role is Storage Legacy Bucket Writer.

- You did not save the changes.

- An error occurred during provisioning.

Solution

- Verify that you assigned the correct GCP role to the correct GCP bucket, and that you saved your changes.

- If you created the GCP bucket in the correct region, the permissions are correct and you applied and saved the bucket policy to the correct GCP bucket, contact Splunk Support to further troubleshoot the issue.

To review the steps to create the GCP bucket in your GCP environment and then configure it for Splunk Cloud Platform, see Configure self storage in GCP in this topic. For more information on managing GCP service accounts, see the Google Cloud documentation Creating and managing service accounts.

Troubleshoot DDSS when restoring data using Windows

This section lists possible errors when restoring data using a Windows machine.

I received an error when using Windows to restore data.

I attempted to restore data using a Windows machine, but the following error occurred:

Reason='ERROR_ACCESS_DENIED'. Will try to copy contents

Diagnosis

This error occurs only on Windows builds and is benign. Splunk Cloud Platform bypasses this error by copying the content. You can safely ignore the error and continue with the restore process.

Solution

This error is benign. You can ignore it and continue with the restore process. See Restore indexed data from a self storage location.


This documentation applies to the following versions of Splunk Cloud Platform™: 8.2.2112, 8.2.2201, 8.2.2202, 8.2.2203, 9.0.2205, 9.0.2208, 9.0.2209, 9.0.2303, 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408, 9.3.2411 (latest FedRAMP release)
