Performance expectations for sending data from a data pipeline to Splunk Enterprise

This page provides reference information about performance testing that Splunk Inc. performed on the Splunk Data Stream Processor (DSP) when sending data to a Splunk indexer with the Write to Splunk Enterprise sink function. Use this information to optimize the performance of pipelines that use the Write to Splunk Enterprise function.

Many factors affect performance results, including file compression, event size, number of concurrent pipelines, deployment architecture, and hardware. These results represent reference information and do not represent performance in all environments.

To go beyond these general recommendations, contact Splunk Services to work on optimizing performance in your specific environment.

Improve performance

To maximize your performance, consider taking the following actions:

- Enable batching of events.

- Do not use an SSL-enabled Splunk Enterprise server.

- Enable

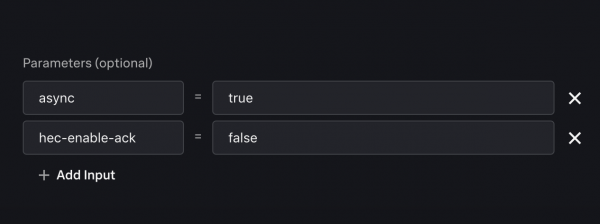

async = truein the Write to Splunk Enterprise function. - Disable HEC acknowledgments in the Write to Splunk Enterprise function.

- Run DSP on a 5 GigE full-duplex network.

- Match the parallelization of the Data Stream Processor to your data source. The parallelization of DSP jobs is determined by how many partitions or shards the upstream source has.

- When using Kafka as a data source, use multiple partitions (for example, 16) in the Kafka topic that your DSP pipeline reads from.

- When using Kinesis as a data source, use multiple shards (for example, 16) in the Kinesis stream that your DSP pipeline reads from.
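Batching helps because many events travel in a single request to the indexer instead of one request per event. In DSP you enable batching in the pipeline itself. The following Python code is not DSP's API. It is a hypothetical, minimal sketch of the idea, assuming the HEC JSON event format, in which several `{"event": ...}` objects can be concatenated into one request body. The names `batch_events`, `max_events`, and `max_bytes` are illustrative only.

```python
import json
from typing import Iterable, Iterator


def batch_events(
    events: Iterable[dict],
    max_events: int = 100,
    max_bytes: int = 1_000_000,
) -> Iterator[str]:
    """Group events into HEC-style payloads of concatenated JSON objects.

    max_events and max_bytes are hypothetical limits for illustration.
    A batch is flushed when adding the next event would exceed either limit.
    """
    batch: list[str] = []
    batch_bytes = 0
    for event in events:
        # Wrap each record in an HEC event envelope and serialize it.
        encoded = json.dumps({"event": event})
        size = len(encoded.encode("utf-8"))
        # Flush the current batch before it would grow past a limit.
        # An empty batch always accepts the event, so an oversized event is sent alone.
        if batch and (len(batch) >= max_events or batch_bytes + size > max_bytes):
            yield "".join(batch)
            batch, batch_bytes = [], 0
        batch.append(encoded)
        batch_bytes += size
    if batch:
        # Flush whatever remains at the end of the stream.
        yield "".join(batch)
```

For example, passing 250 events with `max_events=100` yields three payloads that hold 100, 100, and 50 events. In this sketch each payload would be the body of one request, so 250 requests shrink to 3.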
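The effect of partition or shard count on parallelization can be illustrated with a simplified model. This Python sketch assumes that each parallel reader instance consumes a disjoint set of partitions and that partitions are assigned round-robin. It is a hypothetical model for illustration, not DSP's actual scheduling logic, and the names `assign_partitions` and `effective_parallelism` are invented for this example.

```python
def assign_partitions(num_partitions: int, parallelism: int) -> list[list[int]]:
    """Distribute source partitions round-robin across parallel readers.

    Returns one list of partition IDs per reader. Readers beyond the number
    of partitions receive nothing and sit idle.
    """
    if num_partitions < 1 or parallelism < 1:
        raise ValueError("num_partitions and parallelism must be positive")
    readers: list[list[int]] = [[] for _ in range(parallelism)]
    for partition in range(num_partitions):
        readers[partition % parallelism].append(partition)
    return readers


def effective_parallelism(num_partitions: int, parallelism: int) -> int:
    """Count the readers that own at least one partition and so do useful work."""
    return sum(1 for owned in assign_partitions(num_partitions, parallelism) if owned)


if __name__ == "__main__":
    # A single-partition topic keeps only one reader busy, whatever the parallelism.
    print(effective_parallelism(1, 8))
    # Sixteen partitions can keep up to sixteen readers busy.
    print(effective_parallelism(16, 16))
```

In this model, parallelism is capped at the number of partitions or shards. This is why the recommendations above suggest many partitions (for example, 16) in the upstream source.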

Your Write to Splunk Enterprise sink function should have the following additional parameters for performance optimization.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.0.0
