Send syslog events to a DSP data pipeline using SC4S with DSP HEC

You can ingest syslog data into DSP using Splunk Connect for Syslog (SC4S) and the DSP HTTP Event Collector (DSP HEC). See SC4S Configuration Variables for more information about configuring SC4S.

Make sure that the SC4S disk buffer configuration is correctly set up to minimize the number of lost events if the connection to DSP HEC is temporarily unavailable. See Data Resilience - Local Disk Buffer Configuration and SC4S Disk Buffer Configuration for more information on SC4S disk buffering.
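As a rough aid for sizing the disk buffer, the sketch below estimates how many bytes would accumulate while DSP HEC is unreachable. The function name, the input values, and the headroom factor are illustrative assumptions and are not taken from the SC4S documentation; consult the SC4S disk buffer documentation for the actual settings and their limits.

```python
import math


def estimate_disk_buffer_bytes(
    events_per_second: float,
    avg_event_bytes: int,
    outage_seconds: int,
    headroom: float = 1.5,
) -> int:
    """Estimate the buffer size needed to hold events during an outage.

    All inputs are assumptions you measure or choose for your environment.
    The headroom multiplier is a hypothetical safety margin, not an SC4S default.
    """
    if events_per_second < 0 or avg_event_bytes < 0 or outage_seconds < 0:
        raise ValueError("inputs must be non-negative")
    if headroom < 1.0:
        raise ValueError("headroom must be at least 1.0")
    raw_bytes = events_per_second * avg_event_bytes * outage_seconds
    return math.ceil(raw_bytes * headroom)


if __name__ == "__main__":
    # Example: 1,000 events/s, 500 bytes each, one-hour outage.
    print(estimate_disk_buffer_bytes(1000, 500, 3600))
```

The estimate only covers raw event payload. Any per-event overhead added by the buffer format is not modeled here, which is one reason to keep a headroom factor above 1.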

Update your SC4S workflow to send syslog data to a DSP pipeline

- Create a new pipeline in DSP. See Create a pipeline using the Canvas Builder for more information on creating a new pipeline.

- Add Read from Splunk Firehose as your data source.

- Add transforms and data destinations as needed in your DSP pipeline.

- Use the DSP Ingest API to create a DSP HEC token.

  - You must use sc4s for the <hec-token-name> when you create the DSP HEC token.

  - Make sure you copy the <dsphec-token> after it is created. You will need it for the next step.

- Change the following variables in your SC4S configuration:

  - Set SPLUNK_HEC_URL to https://<DSP_HOST>:31000.

  - Set SPLUNK_HEC_TOKEN to <dsphec-token>.

- Restart your SC4S workflow.

- After you ingest syslog events into DSP, you can format, filter, aggregate, or otherwise transform the data using DSP functions, and route it to different destinations. See Sending data from DSP to the Splunk platform and Sending data to other destinations for more information.
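The two SC4S variables from the steps above can be generated with a small helper, which may reduce copy-and-paste mistakes in the URL. This is a minimal sketch: the function names are hypothetical, and the KEY=value output format assumes that your SC4S deployment reads its variables from an environment file, which depends on how you run SC4S.

```python
from typing import Dict


def sc4s_hec_settings(dsp_host: str, dsphec_token: str, port: int = 31000) -> Dict[str, str]:
    """Build the two SC4S variables that point SC4S at DSP HEC.

    dsp_host and dsphec_token stand in for <DSP_HOST> and <dsphec-token>.
    """
    if not dsp_host or not dsphec_token:
        raise ValueError("dsp_host and dsphec_token are required")
    return {
        "SPLUNK_HEC_URL": f"https://{dsp_host}:{port}",
        "SPLUNK_HEC_TOKEN": dsphec_token,
    }


def render_env_lines(settings: Dict[str, str]) -> str:
    """Render settings as KEY=value lines, one per line (assumed file format)."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


if __name__ == "__main__":
    # dsp.example.com and the token value are placeholders.
    print(render_env_lines(sc4s_hec_settings("dsp.example.com", "my-dsphec-token")), end="")
```

Remember to restart your SC4S workflow after changing these values, as described in the steps above.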

Verify that your syslog data is available in your DSP pipeline

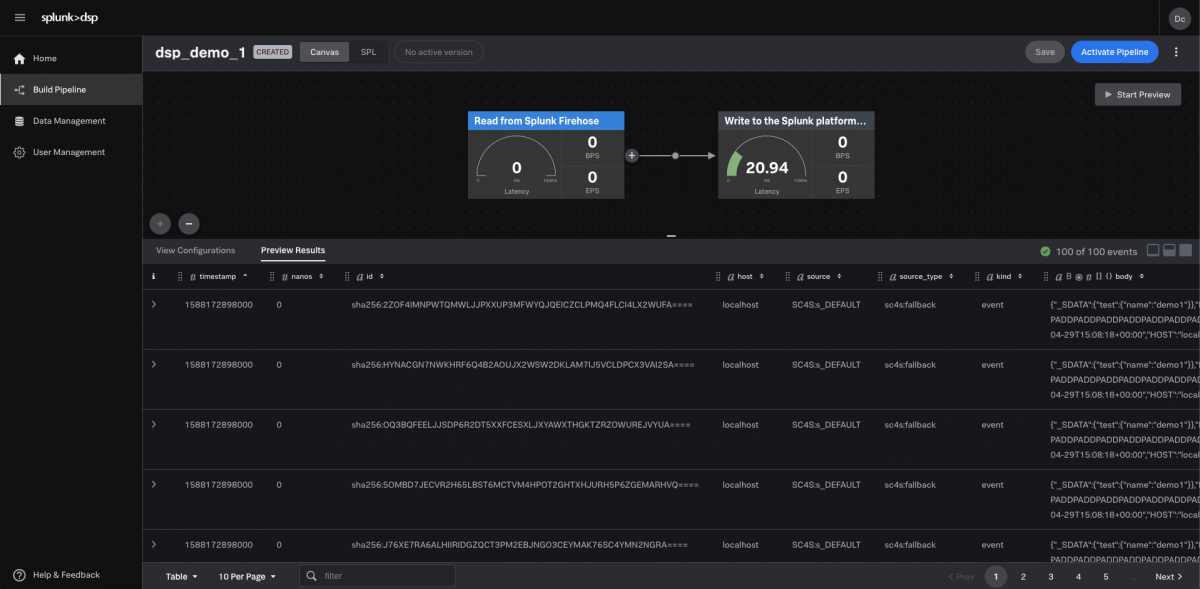

You can quickly verify that your syslog data is now available in DSP. Either click Start Preview on your current pipeline, or create a new pipeline to verify that the data is available:

- Create a new pipeline in DSP. See Create a pipeline using the Canvas Builder for more information on creating a new pipeline.

- Add Read from Splunk Firehose as your data source.

- (Optional) End your pipeline with the Write to the Splunk platform with Batching sink function.

- Click Start Preview.

You can now see your syslog data in the pipeline preview. See Navigating the Data Stream Processor for more information on using DSP to transform and manage your data.
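If no data appears in the preview, it can help to check the token and endpoint independently of SC4S by sending a single test event. The sketch below only builds the request. It assumes that DSP HEC accepts the standard Splunk HEC path /services/collector/event and the "Authorization: Splunk <token>" header format; confirm both against the DSP HEC documentation for your version. The function name and the payload fields are illustrative.

```python
import json
import urllib.request


def build_test_event_request(
    dsp_host: str,
    dsphec_token: str,
    message: str,
    port: int = 31000,
) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying one test event.

    The endpoint path and header format are assumptions based on
    standard Splunk HEC conventions.
    """
    url = f"https://{dsp_host}:{port}/services/collector/event"
    body = json.dumps({"event": message, "sourcetype": "syslog"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Splunk {dsphec_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_test_event_request("dsp.example.com", "my-dsphec-token", "sc4s test event")
    print(req.get_method(), req.full_url)
```

To actually send the event, pass the request to urllib.request.urlopen. Your DSP host's TLS certificate must be trusted by the client for the call to succeed.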

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.1.0
