All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Tutorial

The Splunk Data Stream Processor (DSP) is a stream processing service that processes data while sending it from a source to a destination through a data pipeline. If you are new to DSP, use this tutorial to get familiar with its capabilities.

In this tutorial, you will do the following:

- Create a data pipeline in the DSP user interface (UI).

- Send data to your pipeline using the Ingest service. To do that, you will use the Splunk Cloud Services CLI (command-line interface) tool to make API calls to the Ingest service.

- Transform the data in your pipeline by extracting interesting data points and redacting confidential information.

- Send the transformed data to a Splunk Enterprise index.

Before you begin

To complete this tutorial, you need to have the following:

| Prerequisite | How to obtain |
|---|---|
| A licensed instance of the Splunk Data Stream Processor (DSP). | See the following documentation: |
| The URL for accessing the user interface (UI) of your DSP instance. | The URL is https://<IP_Address>, where <IP_Address> is the IP address associated with your DSP instance. You can also confirm the URL by opening a terminal window in the extracted DSP installer directory and running the sudo dsp admin print-login command. The hostname field shows the URL. |
| Credentials for logging into your DSP instance. | If you need to retrieve your login credentials, open a terminal window in the extracted DSP installer directory and then run the sudo dsp admin print-login command. |
| The Splunk Cloud Services CLI tool, configured to send data to the DSP instance. | See Get started with the Splunk Cloud Services CLI for more details. This tutorial uses Splunk Cloud Services CLI 4.0.0. |
| The demodata.txt tutorial data. | The /examples folder in the extracted DSP installer directory contains a copy of this file. You can also download a copy of the file here. |
| Access to a Splunk Enterprise instance that has the HTTP Event Collector (HEC) enabled. You'll also need to know the HEC endpoint URL and HEC token associated with the instance. | Ask your Splunk administrator for assistance. See Set up and use HTTP Event Collector in Splunk Web in the Splunk Enterprise Getting Data In manual. |

What's in the tutorial data

The demodata.txt tutorial data contains purchase history for the fictitious Buttercup Games store.

The tutorial data looks like this:

A5D125F5550BE7822FC6EE156E37733A,08DB3F9FCF01530D6F7E70EB88C3AE5B,Credit Card,14,2018-01-13 04:37:00,2018-01-13 04:47:00,-73.966843,40.756741,4539385381557252

1E65B7E2D1297CF3B2CA87888C05FE43,F9ABCCCC4483152C248634ADE2435CF0,Game Card,16.5,2018-01-13 04:26:00,2018-01-13 04:46:00,-73.956451,40.771442
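To make the record layout easier to see, the following sketch splits one line of the sample data on commas and prints each value with a label. The labels are borrowed from the field names that this tutorial assigns later when it extracts the data; they are not part of the file itself, which has no header row. The describe_record function name is hypothetical.

```shell
# Split one demodata.txt record on commas and print each field with a label.
# The labels are illustrative; the file itself has no header row.
describe_record() {
  local tid cid card_type amount start_ts end_ts lon lat card
  IFS=, read -r tid cid card_type amount start_ts end_ts lon lat card <<EOF
$1
EOF
  printf 'Transaction_ID=%s\n' "$tid"
  printf 'Customer_ID=%s\n' "$cid"
  printf 'Type=%s\n' "$card_type"
  printf 'Amount=%s\n' "$amount"
  printf 'Start=%s\n' "$start_ts"
  printf 'End=%s\n' "$end_ts"
  printf 'Longitude=%s\n' "$lon"
  printf 'Latitude=%s\n' "$lat"
  # Records without a trailing card number leave this field empty.
  printf 'Card=%s\n' "${card:-<none>}"
}

describe_record 'A5D125F5550BE7822FC6EE156E37733A,08DB3F9FCF01530D6F7E70EB88C3AE5B,Credit Card,14,2018-01-13 04:37:00,2018-01-13 04:47:00,-73.966843,40.756741,4539385381557252'
```

Note that the second sample record (the Game Card purchase) has no card number, so any processing of this data should tolerate an empty last field.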

Log in to the DSP UI

Start by navigating to the DSP UI and logging in to it.

- In a browser, navigate to the URL for the DSP UI.

- Log in with your DSP username and password.

The DSP UI shows the home page.

Create a pipeline using the Canvas View in the DSP UI

Create a pipeline that receives data from the Ingest service and sends the data to your default Splunk Enterprise instance.

In DSP, you can choose to create pipelines in the Canvas View or the SPL2 (Search Processing Language 2) View. The Canvas View provides graphical user interface elements for building a pipeline, while the SPL2 View accepts SPL2 statements that define the functions and configurations in a pipeline.

For this tutorial, use the Canvas View to create and configure your pipeline.

- Click Get Started, then select the Splunk DSP Firehose data source.

- On the pipeline canvas, click the + icon beside the Splunk DSP Firehose function, and select Send to Splunk HTTP Event Collector from the function picker.

- Create a connection to your Splunk Enterprise instance so that the Send to Splunk HTTP Event Collector function can send data to an index on that instance. On the View Configurations tab, from the connection_id drop-down list, select Create New Connection.

- In the Connection Name field, enter a name for your connection.

- In the Splunk HEC endpoint URLs field, enter the HEC endpoint URL associated with your Splunk Enterprise instance.

- In the Splunk HEC endpoint token field, enter the HEC endpoint token associated with your Splunk Enterprise instance.

- Click Save.

- Back on the View Configurations tab, finish configuring the Send to Splunk HTTP Event Collector function.

- In the index field, enter null.

- In the default_index field, enter "main" (including the quotation marks).

- Click the More Options button located beside the Activate Pipeline button, and select Validate to check that all functions in your pipeline are configured correctly.

- To save your pipeline, click Save.

- Give your pipeline a name and a description, and make sure that the Save As drop-down list is set to Pipeline. Then, click Save again.

You now have a basic pipeline that can read data from Splunk DSP API services such as the Ingest service and then send the data to an index on your Splunk Enterprise instance.
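If the connection does not behave as expected, it can help to confirm the HEC endpoint URL and token independently of DSP. The sketch below posts a single test event directly to the HEC event endpoint with curl. It rests on several assumptions: HEC_URL and HEC_TOKEN are hypothetical environment variable names that you set yourself, HEC_URL is taken to be the base URL of the HEC endpoint without a path, and the -k flag (which skips certificate verification) is only suitable for test instances that use self-signed certificates.

```shell
# Build a minimal HEC JSON payload that targets the given index.
hec_test_payload() {
  printf '{"event": "DSP tutorial connectivity test", "index": "%s"}\n' "$1"
}

# Post the payload to the HEC event endpoint.
# HEC_URL and HEC_TOKEN are hypothetical variable names, not DSP settings.
send_hec_test_event() {
  curl -k -sS "${HEC_URL%/}/services/collector/event" \
    -H "Authorization: Splunk ${HEC_TOKEN}" \
    -d "$(hec_test_payload main)"
}

# Only contact the endpoint when both values have been provided.
if [ -n "${HEC_URL:-}" ] && [ -n "${HEC_TOKEN:-}" ]; then
  send_hec_test_event
fi
```

A working endpoint should answer with a short JSON response; an authorization or connection error at this stage points to the URL or token rather than to the pipeline.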

Send data to your pipeline using the Ingest service

Now that you have a pipeline to send data to, let's send some data to it!

Use the Ingest service to send data from the demodata.txt file into your pipeline. To achieve this, you use the Splunk Cloud Services CLI tool to make API calls to the Ingest service.

Because this pipeline is not activated yet, it is not actually sending data through to your Splunk Enterprise instance at this time. You'll activate the pipeline and send the data to its destination later in this tutorial.

- Open a terminal window and navigate to the extracted DSP installer directory. This directory is also the working directory for the Splunk Cloud Services CLI tool.

- Log in to the Splunk Cloud Services CLI using the following command. When prompted to provide your username and password, use the credentials from your DSP account.

./scloud login

The Splunk Cloud Services CLI doesn't return your login metadata or access token. If you want to see your access token, you must log in using the verbose flag:

./scloud login --verbose

- In the DSP UI, with your pipeline open in the Canvas View, select the Splunk DSP Firehose function and then click the Start Preview button to begin a preview session.

- In the terminal window, send the sample data to your pipeline by running the following command.

cat examples/demodata.txt | while read line; do echo $line | ./scloud ingest post-events --host Buttercup --source syslog --sourcetype Unknown; done

It can take up to a minute to send the entire file.

- In the DSP UI, view the Preview Results tab. Confirm that your data events are flowing through the pipeline and appearing in the preview.

You've now confirmed that your pipeline is ingesting data successfully.
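Because the same command is reused several times in this tutorial, the one-line loop can also be wrapped in a small function. This is a sketch rather than an official script: send_events is a hypothetical helper name, and the scloud arguments in the usage comment are copied from the command in this section. Compared with the one-liner, it reads the file directly, uses read -r so that backslashes are kept literally, quotes each line, and still sends a final line that lacks a trailing newline.

```shell
# send_events FILE COMMAND [ARGS...]
# Pipe each line of FILE, one line at a time, into COMMAND.
send_events() {
  local file="$1"
  shift
  while IFS= read -r line || [ -n "$line" ]; do
    printf '%s\n' "$line" | "$@"
  done < "$file"
}

# Usage, run from the extracted DSP installer directory:
# send_events examples/demodata.txt ./scloud ingest post-events \
#   --host Buttercup --source syslog --sourcetype Unknown
```

Passing the sender as arguments keeps the loop testable: substituting cat for the scloud command prints exactly what would be sent.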

Transform your data

Let's add transformations to your data before sending it to a Splunk Enterprise index.

In this section, you change the value of a field to provide more meaningful information, extract interesting nested fields into top-level fields, and redact credit card information from the data before sending it off to a Splunk Enterprise index for indexing.

- Change the source_type of your data events from Unknown to purchases.

- In the DSP UI, on the pipeline canvas, click the + icon between the Splunk DSP Firehose and Send to Splunk HTTP Event Collector functions, and select Eval from the function picker.

- On the View Configurations tab, enter the following SPL2 expression in the function field:

source_type="purchases"

- Click the More Options button located beside the Activate Pipeline button, and select Validate to check that all functions in your pipeline are configured properly.

- To see how this function transforms your data, send your sample data to your pipeline again and preview the data that comes out from the Eval function.

- On the pipeline canvas, select the Eval function in your pipeline and then click the Start Preview button to restart your preview session.

- Wait a few seconds before running the following command in the Splunk Cloud Services CLI. Because your pipeline isn't activated, resending your sample data doesn't result in data duplication.

cat examples/demodata.txt | while read line; do echo $line | ./scloud ingest post-events --host Buttercup --source syslog --sourcetype Unknown; done

- Back in DSP, view the data on the Preview Results tab and confirm that the value of the source_type field is now purchases.

- In the data preview, you'll notice there are several interesting fields in the body field, including the type of card used, the purchase amount, and the sale date. Extract some of these nested fields into the attributes field.

- On the pipeline canvas, select the Eval function.

- On the View Configurations tab, click +Add and then enter the following SPL2 expression in the newly added field. This SPL2 expression casts the body data to the string data type, extracts key-value pairs from those strings using regular expressions, and then inserts the extracted key-value pairs into the attributes field. The body data must be cast to a different data type first because by default it is considered to be a union of all DSP data types, and the extract_regex function only accepts string data as its input.

attributes=extract_regex(cast(body, "string"), /(?<tid>[A-Z0-9]+?),(?<cid>[A-Z0-9]+?),(?<Type>[\w]+\s\w+),(?<Amount>[\S]+),(?<sdate>[\S]+)\s(?<stime>[\S]+),(?<edate>[\S]+)\s(?<etime>[\S]+?),(?<Longitude>[\S]+?),(?<Latitude>[\S]+?),(?<Card>[\d]*)/)

- Now that you've extracted some of your nested fields into the attributes field, take it one step further and promote these attributes as top-level fields in your data.

- On the pipeline canvas, click the + icon between the Eval and Send to Splunk HTTP Event Collector functions, and then select Eval from the function picker.

- On the View Configurations tab, enter the following SPL2 expression in the function field for the newly added Eval function. These expressions turn the key-value pairs in the attributes field into top-level fields so that you can easily see the fields that you've extracted.

Transaction_ID=map_get(attributes, "tid"), Customer_ID=map_get(attributes, "cid"), Type=map_get(attributes, "Type"), Amount=map_get(attributes, "Amount"), Start_Date=map_get(attributes, "sdate"), Start_Time=map_get(attributes, "stime"), End_Date=map_get(attributes, "edate"), End_Time=map_get(attributes, "etime"), Longitude=map_get(attributes, "Longitude"), Latitude=map_get(attributes, "Latitude"), Credit_Card=map_get(attributes, "Card")

- Notice that your data contains the credit card number used to make a purchase. Redact that information before sending it to your index.

- On the pipeline canvas, click the + icon between the second Eval function and the Send to Splunk HTTP Event Collector function, and then select Eval from the function picker.

- On the View Configurations tab, enter the following SPL2 expression in the function field for the newly added Eval function. This SPL2 expression uses a regular expression pattern to detect credit card numbers and replace the numbers with <redacted>.

Credit_Card=replace(cast(Credit_Card, "string"), /\d{15,16}/, "<redacted>")

- The original body of the event also contains sensitive credit card information, so redact that information from the body field as well.

- On the pipeline canvas, select the Eval function that you added during the previous step.

- On the View Configurations tab, click +Add and then enter the following SPL2 expression in the newly added field. This SPL2 expression casts the body data to the string data type, detects credit card numbers in those strings using a regular expression pattern, and then replaces the numbers with <redacted>. The body data must be cast to a different data type first because by default it is considered to be a union of all DSP data types, and the replace function only accepts string data as its input.

body=replace(cast(body,"string"),/[1-5][0-9]{15}/,"<redacted>")

- The attributes field also contains sensitive credit card information, so let's also redact the information there.

- On the pipeline canvas, select the Eval function that you modified during the previous step.

- On the View Configurations tab, click +Add and then enter the following SPL2 expression in the newly added field. This SPL2 expression does the following:

- Gets the Card value from the attributes map, and then casts that value to the string data type.

- Detects credit card numbers in the Card value using a regular expression pattern, and then replaces the numbers with <redacted>.

- Converts the Card value from the string data type back to the map data type.

The Card value must be converted between the string and map data types because attributes contains map data, and the replace function only accepts string data as its input.

attributes=map_set(attributes, "Card", replace(ucast(map_get(attributes, "Card"), "string", null), /[1-5][0-9]{15}/, "<redacted>"))

- Now that you've constructed a full pipeline, preview your data again to see what your transformed data looks like.

- Click the More Options button located beside the Activate Pipeline button, and select Validate to check that all functions in your pipeline are configured properly.

- Select the last Eval function in the pipeline, and then click the Start Preview button to restart your preview session.

- Wait a few seconds, and then run this command again in the Splunk Cloud Services CLI. Because your pipeline isn't activated yet, resending your sample data will not result in data duplication.

cat examples/demodata.txt | while read line; do echo $line | ./scloud ingest post-events --host Buttercup --source syslog --sourcetype Unknown; done

- Back in DSP, view the data on the Preview Results tab and confirm the following:

- The value in the source_type field is purchases.

- The attributes field contains information such as the type of card used, the purchase amount, and the sale date.

- The body and attributes fields do not show any credit card numbers.

- To save these transformations to your pipeline, click Save.

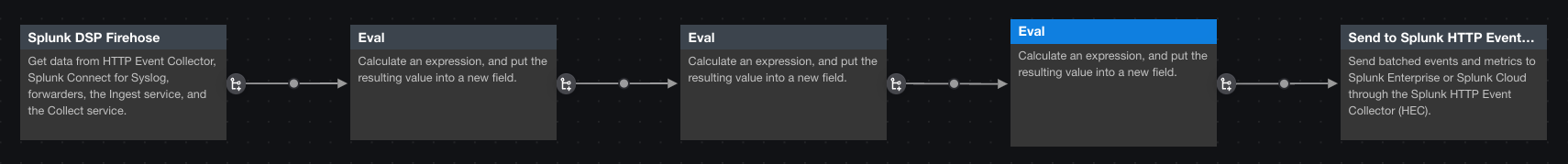

After following these steps, you have a DSP pipeline that resembles the following.
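To get a feel for what the Eval expressions in this section do without running a pipeline, the extraction and redaction logic can be approximated on the command line. The sketch below is a stand-in built on assumptions, not DSP itself: it uses sed with POSIX extended regular expressions, so the \d, \S, and lazy quantifiers from the SPL2 patterns are replaced with simpler bracket expressions, only two of the fields are extracted, and the function names are hypothetical.

```shell
# Replace 15- or 16-digit runs with <redacted>, loosely mirroring the
# /\d{15,16}/ pattern that the tutorial applies to the Credit_Card field.
redact_cards() {
  sed -E 's/[0-9]{15,16}/<redacted>/g'
}

# Print the card type and amount from each record, a rough analogue of
# extract_regex followed by map_get for the Type and Amount keys.
extract_type_amount() {
  sed -E -n 's/^[A-Z0-9]+,[A-Z0-9]+,([^,]+),([^,]+),.*$/Type=\1; Amount=\2/p'
}

record='A5D125F5550BE7822FC6EE156E37733A,08DB3F9FCF01530D6F7E70EB88C3AE5B,Credit Card,14,2018-01-13 04:37:00,2018-01-13 04:47:00,-73.966843,40.756741,4539385381557252'

printf '%s\n' "$record" | extract_type_amount
printf '%s\n' "$record" | redact_cards
```

The transaction and customer IDs are left intact by the redaction because they mix letters and digits and never contain a run of 15 or more digits.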

Send your transformed data to Splunk Enterprise

Now that you have a completed pipeline that extracts useful information and redacts sensitive information, activate the pipeline and send the transformed data to your default Splunk Enterprise instance.

- In DSP, click Activate Pipeline > Activate. Do not check Skip Restore State or Allow Non-Restored State. Neither of these options is valid when you activate your pipeline for the first time. Activating your pipeline also checks that your pipeline is valid. If you are unable to activate your pipeline, check whether you've configured your functions correctly.

- Wait a few seconds after activating your pipeline, and then send the sample data to your activated pipeline by running the following command in the Splunk Cloud Services CLI.

cat examples/demodata.txt | while read line; do echo $line | ./scloud ingest post-events --host Buttercup --source syslog --sourcetype Unknown; done

- To confirm that DSP is sending your transformed data to your Splunk Enterprise instance, open the Search & Reporting app in your Splunk Enterprise instance and search for your data. Use the following search criteria:

index="main" host="Buttercup" | table *

You've now successfully sent transformed data to Splunk Enterprise through DSP!

Additional resources

For detailed information about the DSP features and workflows that you used in this tutorial, see the following DSP documentation pages:

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
