All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced as end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Performance expectations for sending data from DSP pipelines to Splunk Enterprise

This page provides reference information about the performance testing performed by Splunk, Inc. when sending data to a Splunk index with the Send to Splunk HTTP Event Collector or Send to a Splunk Index sink functions. Use this information to optimize the performance of pipelines that send data to Splunk Enterprise.

Many factors affect performance results, including file compression, event size, number of concurrent pipelines, deployment architecture, and hardware. These results represent reference information and do not represent performance in all environments.

To go beyond these general recommendations, contact Splunk Services to work on optimizing performance in your specific environment.

Improve performance

To maximize your performance, consider taking the following actions:

- Enable batching. If you are using the Send to Splunk HTTP Event Collector function, then batching is already done for you. Otherwise, use either the Batch Bytes or Batch Records functions in your pipeline.

- Do not use an SSL-enabled Splunk Enterprise server.

- Disable HEC acknowledgments in the Send to Splunk HTTP Event Collector or the Send to a Splunk Index function.

- Enable `async = true` in the Send to Splunk HTTP Event Collector or the Send to a Splunk Index function.

- Run DSP on a 5 GigE full duplex network.

- Parallelize DSP with your data source. Parallelization of DSP jobs is determined by the number of partitions or shards in the upstream source.

- When using Kafka as a data source, use multiple partitions (example: 16) in the Kafka topic that your DSP pipeline reads from.

- When using Kinesis as a data source, use multiple shards (example: 16) in the Kinesis stream that your DSP pipeline reads from.
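To illustrate what the batching recommendation does, the following is a minimal sketch, not DSP's implementation. It groups events into batches capped by record count (similar in spirit to Batch Records) and by serialized size (similar in spirit to Batch Bytes), then joins each batch into a single request body. This relies on the fact that HEC accepts multiple JSON event objects stacked in one request. The function names `batch_events` and `to_hec_body` and the default caps are hypothetical and chosen only for illustration; they are not DSP parameters.

```python
import json
from typing import Iterable, Iterator, List


def batch_events(
    events: Iterable[dict],
    max_records: int = 100,      # hypothetical record cap, not a DSP default
    max_bytes: int = 1_000_000,  # hypothetical byte cap, not a DSP default
) -> Iterator[List[bytes]]:
    """Group serialized events into batches capped by record count and byte size."""
    batch: List[bytes] = []
    batch_bytes = 0
    for event in events:
        # Wrap each event in the HEC event envelope: {"event": ...}
        payload = json.dumps({"event": event}).encode("utf-8")
        # Flush the current batch if adding this event would exceed either cap.
        # A single event larger than max_bytes still gets its own batch.
        if batch and (
            len(batch) >= max_records or batch_bytes + len(payload) > max_bytes
        ):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(payload)
        batch_bytes += len(payload)
    # Emit whatever is left once the input is exhausted.
    if batch:
        yield batch


def to_hec_body(batch: List[bytes]) -> bytes:
    """Stack the event objects into one request body, so one HTTP call carries many events."""
    return b"".join(batch)


if __name__ == "__main__":
    events = [{"id": i, "msg": "x" * 10} for i in range(250)]
    batches = list(batch_events(events, max_records=100))
    print([len(b) for b in batches])  # [100, 100, 50]
```

The benefit comes from amortizing per-request overhead: 250 events become three HTTP requests instead of 250. The byte cap keeps any single request from growing unbounded when events are large.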

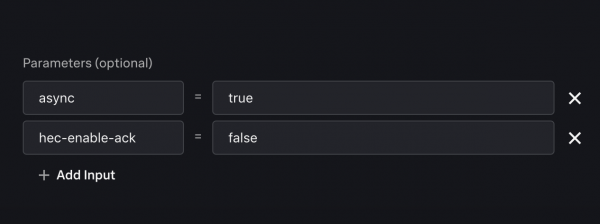

Your Send to Splunk HTTP Event Collector or Send to a Splunk Index sink functions should have the following additional parameters for performance optimization.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.3.0, 1.3.1, 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
