Splunk OpenTelemetry Collector for Kubernetes

This Splunk validated architecture (SVA) applies to Splunk Cloud Platform and Splunk Enterprise products.

Splunk OpenTelemetry Collector for Kubernetes is the latest evolution in Splunk data collection solutions for Kubernetes. It can export Kubernetes data sources simultaneously to Splunk HTTP Event Collector (HEC) and Splunk Observability Cloud. The OpenTelemetry Collector for Kubernetes builds on the work started in Splunk Connect for Kubernetes (Fluentd) and is now the Splunk-recommended option for Kubernetes logging and metrics collection.

If you are using Splunk Connect for Kubernetes, review Migration from Splunk Connect for Kubernetes on GitHub, because the end of support date for Splunk Connect for Kubernetes is January 1, 2024. See https://github.com/splunk/splunk-connect-for-kubernetes#end-of-support for more information.

The OpenTelemetry Collector incorporates all the key features Splunk delivered in Connect for Kubernetes and adds many benefits, including:

- Built on OpenTelemetry open standards.

- Improved logging scale with OTel logging versus Fluentd. See https://github.com/signalfx/splunk-otel-collector-chart/blob/main/docs/advanced-configuration.md#performance-of-native-opentelemetry-logs-collection.

- Advanced metrics collection features.

- Advanced pipeline features including data manipulation.

- Support for trace collection.

- Support for Kubernetes annotations, which let you route namespace and pod logs to specific indexes, set sourcetypes, or include or exclude logs from collection. See https://github.com/signalfx/splunk-otel-collector-chart/blob/main/docs/advanced-configuration.md#managing-log-ingestion-by-using-annotations.

- Support for multiline logs via the filelog receiver's recombine operator. Users can define their line breaking rules in the collector to ensure multiline logs are properly rendered in Splunk. See https://github.com/signalfx/splunk-otel-collector-chart/blob/main/docs/advanced-configuration.md#processing-multi-line-logs.

- Access to OpenTelemetry components. See all components supported in the Splunk Helm chart at https://github.com/signalfx/splunk-otel-collector/blob/main/docs/components.md#components.
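
To make the multiline bullet above more concrete, the following is a minimal Python sketch of the idea behind recombining lines: a regular expression marks the first line of each log entry, and every other line is appended to the entry before it. This is an illustration only, not the collector's implementation. The function name `recombine`, the sample log lines, and the timestamp pattern are all hypothetical.

```python
import re
from typing import Iterable, List


def recombine(lines: Iterable[str], first_entry_regex: str) -> List[str]:
    """Group raw lines into entries; a line matching the regex starts a new entry."""
    pattern = re.compile(first_entry_regex)
    entries: List[str] = []
    current: List[str] = []
    for line in lines:
        # A matching line closes the entry collected so far and opens a new one.
        if pattern.search(line) and current:
            entries.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        entries.append("\n".join(current))
    return entries


# Hypothetical container output: a stack trace spread over several lines.
raw = [
    "2024-01-01 12:00:00 ERROR Unhandled exception",
    "Traceback (most recent call last):",
    '  File "app.py", line 10, in <module>',
    "ValueError: bad input",
    "2024-01-01 12:00:01 INFO Recovered",
]
events = recombine(raw, r"^\d{4}-\d{2}-\d{2} ")
```

With this input, the stack trace stays attached to the ERROR line as one event, and the INFO line becomes a second event.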

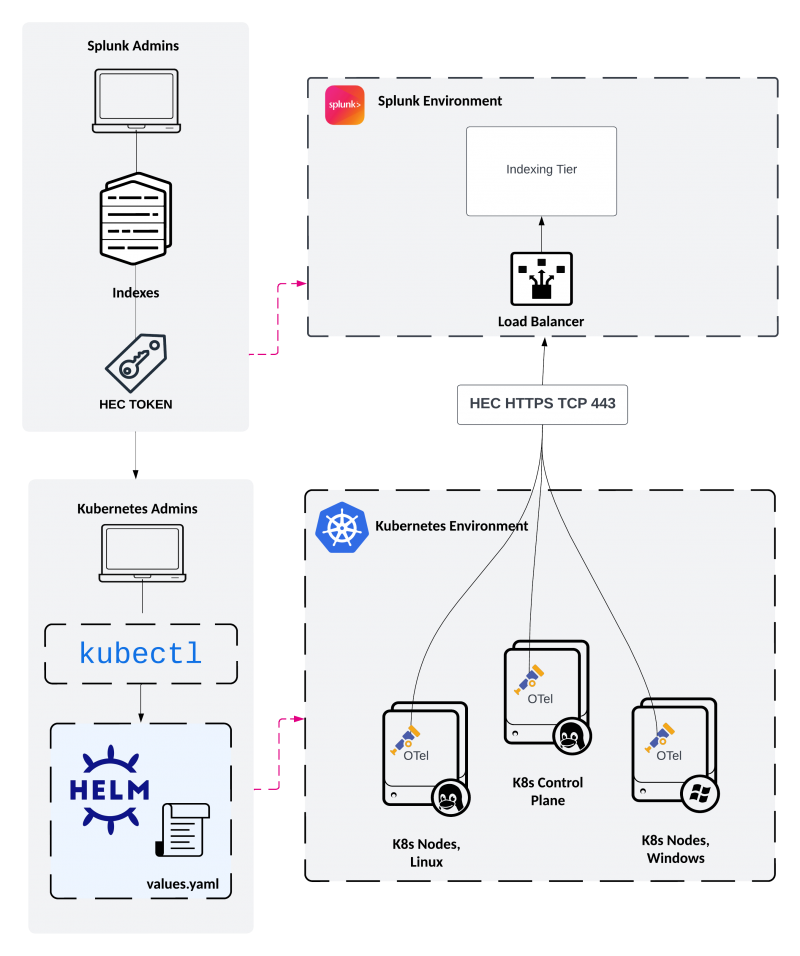

To deploy the OpenTelemetry Collector, some configuration is required by both Splunk admins and Kubernetes admins. The following sections describe the high-level process of getting started with the Splunk OpenTelemetry Collector for Kubernetes and key topics to review as you prepare to deploy in your environment.

Splunk admin deployment guidance

Splunk admins need to prepare Splunk indexes and HEC token(s). Take care to avoid token and index sprawl. Splunk Platform Solution Architects recommend creating one event index and one metrics index to serve as catchall indexes, then using OTel's index routing features to send the data where it needs to go to satisfy your organization's data segregation and storage requirements. See https://github.com/signalfx/splunk-otel-collector-chart/blob/main/docs/advanced-configuration.md#managing-log-ingestion-by-using-annotations. We also recommend one HEC token per Kubernetes cluster. After your Splunk environment is ready to receive data, move to the next section to work with your Kubernetes admins to deploy the Splunk OpenTelemetry Collector Helm chart to the Kubernetes environment.
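
The catchall-plus-routing recommendation can be pictured with a short Python sketch. It assumes the `splunk.com/index` annotation described in the linked chart documentation, and it assumes that a pod-level annotation takes priority over a namespace-level one; confirm the actual precedence in the chart documentation. The function `resolve_index` and the index names are hypothetical.

```python
from typing import Mapping

# Annotation key described in the chart's annotation documentation.
INDEX_ANNOTATION = "splunk.com/index"


def resolve_index(
    pod_annotations: Mapping[str, str],
    namespace_annotations: Mapping[str, str],
    catchall_index: str,
) -> str:
    """Pick a target index: pod annotation, then namespace annotation, then catchall.

    The pod-before-namespace order is an assumption made for this sketch.
    """
    for annotations in (pod_annotations, namespace_annotations):
        value = annotations.get(INDEX_ANNOTATION)
        if value:
            return value
    return catchall_index


# Hypothetical index names for illustration.
unrouted = resolve_index({}, {}, "k8s_events")
by_namespace = resolve_index({}, {INDEX_ANNOTATION: "payments"}, "k8s_events")
by_pod = resolve_index(
    {INDEX_ANNOTATION: "payments_audit"}, {INDEX_ANNOTATION: "payments"}, "k8s_events"
)
```

Only workloads with a real segregation requirement need an annotation. Everything else lands in the catchall index, which keeps the number of indexes small.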

Kubernetes admin deployment guidance

After Splunk admins have prepared the index(es) and HEC token(s), Kubernetes admins with functioning cluster access via kubectl review Splunk's OTel Helm chart GitHub repository and prepare an environment-specific values.yaml to deploy with Helm.

Following the "Node Agent" Kubernetes logging pattern, the "OTel agent" is deployed as a daemonset and is configured to send log and metric data directly to the Splunk platform. Optionally, if you enable metrics, Kubernetes API events, or objects collection, the "OTel cluster receiver" deployment runs a single pod that can be scheduled anywhere in the Kubernetes cluster and also forwards data directly to the Splunk platform. Both the OTel agent daemonset and the OTel cluster receiver deployment connect to the Splunk platform via the HTTP Event Collector using the services/collector/event endpoint.
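
For readers unfamiliar with HEC, the following Python sketch shows roughly what a request to the services/collector/event endpoint looks like. It only builds the request and does not send it. The host name, port, token, index, and sourcetype are placeholder values, and `build_hec_request` is a hypothetical helper, not part of the collector.

```python
import json
import urllib.request


def build_hec_request(
    base_url: str, token: str, event: str, index: str, sourcetype: str
) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST to the HEC event endpoint."""
    body = json.dumps(
        {"event": event, "index": index, "sourcetype": sourcetype}
    ).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/services/collector/event",
        data=body,
        headers={
            # HEC authenticates with the token in a "Splunk <token>" header.
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and token; replace with values from your Splunk admin.
req = build_hec_request(
    "https://hec.example.com:8088",
    "00000000-0000-0000-0000-000000000000",
    "hello from k8s",
    "k8s_events",
    "kube:container:demo",
)
```

Building a request like this can be a handy way to confirm with your Splunk admin that the token and index are valid before deploying the Helm chart.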

To ensure the agent and cluster-receiver have enough resources, review Sizing Splunk OTel Collector Containers for recommended CPU and memory settings. As per the sizing guidelines, Kubernetes admins should update the default agent or cluster-receiver configuration based on the estimated volume of logs and metrics being sent to the Splunk platform.

Forwarding topology guidance

Due to the volume of data that can be produced in a Kubernetes environment, Splunk Platform Solution Architects recommend connecting directly to the Splunk indexing tier via a load balancer. If you must use an intermediate forwarding tier, still use a load balancer and take care to scale the intermediate forwarding tier, for example by tuning the Splunk HEC input settings so that they can handle the volume of data. See the intermediate forwarding section for more information.

About buffer and retry mechanisms

If the connection to the Splunk platform fails, the OTel collectors buffer data in memory and retry. See https://github.com/signalfx/splunk-otel-collector-chart/blob/a39247985e1738f4ac8b54569a0d43f6b1a9db41/helm-charts/splunk-otel-collector/values.yaml#L78-L97. OTel Collector for Kubernetes does not implement Splunk's "ACK" options because of the impact they can have on scalability and performance. Instead, it relies on the HTTP protocol and on retries and memory buffers when sending fails. The node and the kubelet's log rotation settings already provide a persistent disk buffer, so in most cases persistent queuing to disk is not necessary. However, a persistent queue using file storage is available.
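
The interaction between a bounded memory buffer and retries can be sketched in Python as follows. This is a simplified model, not the collector's code: it doubles the wait time without jitter, and the interval values (5, 30, and 300 seconds) and the queue size are illustrative inputs rather than confirmed chart defaults. `backoff_schedule` and `MemoryQueue` are hypothetical names.

```python
from collections import deque
from typing import Deque, List


def backoff_schedule(initial: float, maximum: float, max_elapsed: float) -> List[float]:
    """Return the waits between retries, doubling up to a cap, until time runs out."""
    waits: List[float] = []
    wait, elapsed = initial, 0.0
    while elapsed + wait <= max_elapsed:
        waits.append(wait)
        elapsed += wait
        wait = min(wait * 2, maximum)
    return waits


class MemoryQueue:
    """Bounded in-memory buffer: new batches are rejected once the queue is full."""

    def __init__(self, max_batches: int) -> None:
        self._items: Deque[bytes] = deque()
        self._max = max_batches

    def offer(self, batch: bytes) -> bool:
        if len(self._items) >= self._max:
            return False  # buffer full; this batch is not accepted
        self._items.append(batch)
        return True


waits = backoff_schedule(initial=5, maximum=30, max_elapsed=300)
queue = MemoryQueue(max_batches=2)
accepted = [queue.offer(b"batch-1"), queue.offer(b"batch-2"), queue.offer(b"batch-3")]
```

The sketch shows the trade-off to review: a longer retry window and a larger queue tolerate longer outages but use more memory on each node, and once both are exhausted the collector depends on the log files still being present on disk.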

A note on file rotation

As part of the deployment, users of the Splunk OpenTelemetry Collector for Kubernetes should be mindful of file rotation defaults in Kubernetes environments. See https://kubernetes.io/docs/concepts/cluster-administration/logging/#log-rotation. Splunk Platform Solution Architects recommend adjusting the file rotation settings in environments that generate a high logging rate so that files are not rotated too quickly. The default trigger for file rotation is 10 MB, which in our experience is very small for production workloads. Log rotation can lead to data loss if not handled properly and also affects Kubernetes administration with kubectl. Consult your Kubernetes provider for more information on the kubelet settings available to you.
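
A quick back-of-the-envelope calculation shows why a small rotation size matters. The Python sketch below estimates how long a log file lasts before rotation and how much history stays on disk. The logging rate and the count of five retained files are illustrative assumptions, and the function names are hypothetical.

```python
def seconds_until_rotation(max_file_bytes: int, log_bytes_per_second: float) -> float:
    """Time a container log file lasts before it reaches the rotation size."""
    if log_bytes_per_second <= 0:
        raise ValueError("log rate must be positive")
    return max_file_bytes / log_bytes_per_second


def retained_seconds(max_file_bytes: int, max_files: int, log_bytes_per_second: float) -> float:
    """Approximate window of log history kept on the node across all rotated files."""
    return max_files * seconds_until_rotation(max_file_bytes, log_bytes_per_second)


MIB = 1024 * 1024

# Assumed inputs: 10 MiB rotation size, 5 retained files, one container logging 1 MiB/s.
# These are meant to correspond to the kubelet settings containerLogMaxSize and
# containerLogMaxFiles; check which settings your provider exposes.
per_file = seconds_until_rotation(10 * MIB, 1 * MIB)
window = retained_seconds(10 * MIB, 5, 1 * MIB)
```

Under these assumptions a file rotates every 10 seconds and the node holds about 50 seconds of history, so any collector outage or backlog longer than that risks losing data for that container.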

Supported Kubernetes distributions

The Splunk OpenTelemetry Collector for Kubernetes Helm chart can be deployed to managed Kubernetes services like Amazon EKS, Google GKE, and Azure AKS, as well as Red Hat OpenShift and other CNCF-compliant Kubernetes distributions. See https://github.com/signalfx/splunk-otel-collector-chart#supported-kubernetes-distributions. While there may be no access to the Kubernetes control plane in some managed services, the Helm chart can still be deployed on the customer-managed worker nodes. Control plane data, like Kubernetes API audit logs, is collected directly from the cloud service provider using existing Splunk integration options for cloud providers or with a separate agent deployment. For fully managed environments that don't allow daemonsets, for example Amazon EKS Fargate, see the GitHub docs at https://github.com/signalfx/splunk-otel-collector-chart/blob/main/docs/advanced-configuration.md#eks-fargate-support. In these scenarios, logging is achieved through the use of sidecars, which is currently out of the scope of this validated architecture.

Evaluation of pillars

| # | Design principles / best practices | Availability | Performance | Scalability | Security | Management |
|---|---|---|---|---|---|---|
| #1 | When implementing OTel Collector use a load balancer to spread HEC traffic to indexers or intermediate forwarders | True | True | True | True | |
| #2 | Review inputs.conf for HEC configuration parameters for tuning in a high traffic environment | True | True | | | |
| #3 | See OTel sizing guide for tuning guidance | True | True | True | | |
| #4 | File rotation | True | | | | |
| #5 | Review buffer and retry settings based on the performance and resiliency requirements in your environment | True | True | True | | |
| #6 | Use one HEC token per cluster | True | | | | |


This documentation applies to the following versions of Splunk® Validated Architectures: current
