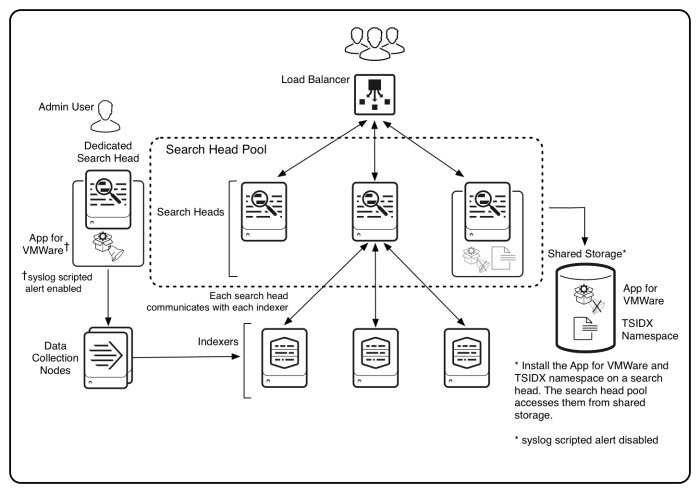

Deploy Splunk App for VMware in a search head pooled environment

Perform the steps described in this topic to set up Splunk App for VMware in a distributed search environment. This configuration improves overall performance of Splunk App for VMware and eliminates conflicts with other apps that you install on the same Splunk Enterprise deployment.

Prerequisites

- You have a version of Splunk Enterprise installed and running in your environment.

- You have configured your Splunk search heads to operate as a search head pool. See "Configure search head pooling" in the Distributed Deployment Manual.

- See "Key implementation issues" in the Splunk Enterprise documentation before you set up a search head pool.

- You have access to the Splunk App for VMware and permission to install it.

Note: Do not use real-time searches in a search head pooled environment. Real-time searches can reduce the performance of the app.

Install Splunk App for VMware in a search head pool

As an administrator, configure Splunk App for VMware from a dedicated search head that sits outside the search head pool. To use the dashboards in Splunk App for VMware, log in to Splunk Web on a search head inside the pool. Follow the instructions in this topic to install Splunk App for VMware in a search head pool.

Deploy Splunk App for VMware in a search head pool

- Install Splunk App for VMware on one or more search heads in the pool. See "Download and install Splunk App for VMware". A search head pool stores all apps in a central location, such as a Network File System (NFS) mount. All of the search heads in the pool use this shared disk space. Check that you have set up shared storage for all the search heads.

- Install Splunk App for VMware on a dedicated search head, outside the search head pool. See "Download and install Splunk App for VMware".

- Configure the Data Collection Nodes to work with the Distributed Collection Scheduler. See Configure a Data Collection Node. Use the dedicated search head to configure and start the Distributed Collection Scheduler.

- Click Start Scheduler on the Collection Configuration dashboard on the dedicated search head. This starts the Distributed Collection Scheduler.
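The shared storage check in the first step can be scripted on each search head. The following is a minimal sketch, not part of the app: the function name `shared_storage_ready` is hypothetical, and the check only confirms that the path is a directory this host can read and write. It does not prove that the directory is an NFS mount or that other search heads see the same contents.

```python
import os
from pathlib import Path


def shared_storage_ready(mount_point: str) -> bool:
    """Return True if mount_point is a directory this host can read and write.

    This is a local sanity check only (hypothetical helper); it cannot tell
    whether the directory is really shared with the other search heads.
    """
    path = Path(mount_point)
    return path.is_dir() and os.access(path, os.R_OK | os.W_OK | os.X_OK)
```

Run it on every search head in the pool with the same path, for example `shared_storage_ready("/mnt/shp/home")`, and investigate any host that returns `False`.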

Splunk App for VMware creates a number of tsidx namespaces to store the summary statistical data used by the dashboards. See Namespaces used in Splunk App for VMware.

When you use tsidx namespaces in a search head pool, you must share the namespaces because the tsidx namespaces exist in the shared storage.

Create the tsidx namespace

Create the tsidx namespace on one of the search heads in the pool

- Go to splunk_for_vmware/local/, then open the indexes.conf file.

- Edit the file to set the tsidxStatsHomePath parameter to the absolute path to the shared storage location. For example, if the shared storage is mounted under /mnt/shp/home, edit splunk_for_vmware/local/indexes.conf to include the following default stanza:

    [default]
    tsidxStatsHomePath=/mnt/shp/home

- Create the tsidx namespace on one of the search heads in the search head pool. This search populates the tsidx namespace.
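If you prefer to script the indexes.conf edit, the following is a minimal sketch using Python's standard `configparser` module. The function name `set_tsidx_home` is hypothetical. The sketch assumes the local indexes.conf is new or contains only simple `key=value` stanzas; `configparser` does not preserve comments when it rewrites a file, so edit by hand if the file already has content you want to keep.

```python
import configparser
import os
from pathlib import Path


def set_tsidx_home(indexes_conf: Path, shared_path: str) -> None:
    """Set tsidxStatsHomePath in the [default] stanza of indexes.conf."""
    if not os.path.isabs(shared_path):
        raise ValueError("tsidxStatsHomePath must be an absolute path")

    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep the camelCase key exactly as written
    if indexes_conf.exists():
        parser.read(indexes_conf)
    # Lowercase "default" is an ordinary section for configparser; only the
    # uppercase "DEFAULT" name is treated specially.
    if not parser.has_section("default"):
        parser.add_section("default")
    parser.set("default", "tsidxStatsHomePath", shared_path)

    indexes_conf.parent.mkdir(parents=True, exist_ok=True)
    with indexes_conf.open("w") as handle:
        parser.write(handle, space_around_delimiters=False)
```

For the example in this topic, the call would be `set_tsidx_home(Path("splunk_for_vmware/local/indexes.conf"), "/mnt/shp/home")`, run from the directory that contains the app.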

Test the tsidx namespace

After creating the namespace, you must test that search head pooling with tsidx namespaces works in the app.

Test the tsidx namespace

- In Splunk Web, run this search to test that the tsidx namespace exists. Replace foo with the name of your shared namespace. This search is successful if it returns data and populates the shared namespace.

    index=_internal | head 10 | table host,source,sourcetype | `tscollect("foo")`

- If the search is successful, run the following tstats query on the shared namespace on each of the search heads in the pool. This search is successful if the shared namespace collects data and the result count returned when you run the search on each search head in the pool is the same for all search heads in the pool.

    | `tstats` count from foo | stats count | search count=10

These search results show that all of the search heads in the pool are identical instances and that search head pooling with tsidx namespaces works.
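Once you have noted the result count from each search head, the comparison can be sketched as follows. This is an illustrative helper, not part of the app: the function names and the host names in the usage example are hypothetical, and the counts are assumed to be collected by hand from Splunk Web.

```python
from collections import Counter
from typing import List, Mapping


def heads_agree(counts: Mapping[str, int]) -> bool:
    """Return True when every search head reported the same result count."""
    return len(set(counts.values())) == 1


def outliers(counts: Mapping[str, int]) -> List[str]:
    """Return the search heads whose count differs from the most common one."""
    if not counts:
        return []
    majority, _ = Counter(counts.values()).most_common(1)[0]
    return sorted(head for head, count in counts.items() if count != majority)
```

For example, `outliers({"sh1": 10, "sh2": 10, "sh3": 7})` points at `sh3` as the search head to check, most likely for a problem reaching the shared storage.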

Set up a namespace retention policy

You can set up a retention policy for any of the namespaces that Splunk App for VMware uses.

Set up your namespace retention policy

- Create a local copy of the $SPLUNK_HOME/etc/apps/SA-Utils/default/tsidx_retention.conf file. You can use the default settings in the file or you can modify the settings to limit the size of the tsidx namespaces and to limit the length of time that namespaces are retained.

- Uncomment the tags for the namespaces that you want to use in Splunk App for VMware and the values associated with those namespaces.

- Log in to Splunk Web on any of the search heads in the pool to use Splunk App for VMware.
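The copy step at the start of this procedure can be sketched as follows. The function name `make_local_copy` is hypothetical, and the sketch assumes that the local copy belongs in the app's local directory next to default, following the usual Splunk layout. It never overwrites an existing local file.

```python
import shutil
from pathlib import Path


def make_local_copy(app_dir: Path, conf_name: str = "tsidx_retention.conf") -> Path:
    """Copy <app_dir>/default/<conf_name> to <app_dir>/local/<conf_name>.

    Assumes the standard default/ and local/ layout of a Splunk app.
    An existing local file is left untouched so local settings are not lost.
    """
    source = app_dir / "default" / conf_name
    target = app_dir / "local" / conf_name
    if target.exists():
        return target
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(source, target)
    return target
```

With `SPLUNK_HOME` set in the environment, the call would be `make_local_copy(Path(os.environ["SPLUNK_HOME"]) / "etc" / "apps" / "SA-Utils")` after importing `os`.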

Disable the syslog scripted alert in the app

The Splunk App for VMware includes a scripted alert (vmw_esxlog_interruption_alert) that notifies you of any failures in the transmission of syslog data over TCP from vCenter to the indexers.

When you install the app in a search head pooled environment, initially this alert is active on the dedicated search head outside of the search head pool and on the search head within the pooled environment.

Disable the alert in the app in the pooled environment because the search head does not store the collection configuration data for the app. The alert uses the collection configuration information stored on the dedicated search head outside of the pooled environment to identify syslog transmission failures.

Note: The app disables the alert by default if you retrieve syslog data using a method other than TCP.

Disable the scripted alert in one of the following ways:

- Use Splunk Web to disable the syslog alert.

- Edit savedsearches.conf to disable the syslog alert.

Use Splunk Web to disable the syslog alert

You can disable the syslog alert using Splunk Web:

- On one of the search heads in the pool, log in to Splunk Web.

- From the Settings menu, select Searches, reports, and alerts.

- On the Searches, reports, and alerts page, find the vmw_esxlog_interruption_alert entry and click Disable. You have now disabled the syslog scripted alert.

- Restart Splunk Enterprise.

Edit savedsearches.conf to disable the syslog alert

You can disable the syslog alert in savedsearches.conf:

- On one of the search heads in the pooled environment, copy the savedsearches.conf file from the $SPLUNK_HOME/etc/apps/splunk_for_vmware/default folder to the $SPLUNK_HOME/etc/apps/splunk_for_vmware/local folder.

- Edit the savedsearches.conf file in your local directory.

- Set the disabled attribute to true.

    # Syslog scripted alert
    [vmw_esxlog_interruption_alert]
    disabled = true

- Save your changes and close the savedsearches.conf file.

- Restart Splunk Enterprise.
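The edit to the local savedsearches.conf can also be scripted. The following is a minimal sketch that works on the file's text line by line, so comments and other stanzas are left as they are. The function name `disable_stanza` is hypothetical, and the sketch assumes that stanza headers sit on their own lines and that no continuation line inside the stanza starts with `disabled`.

```python
def disable_stanza(conf_text: str, stanza: str) -> str:
    """Return conf_text with `disabled = true` set inside [stanza].

    The new setting is written directly under the stanza header and any
    existing `disabled` line inside that stanza is dropped.
    """
    header = f"[{stanza}]"
    out = []
    in_target = False
    found = False
    for line in conf_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            in_target = stripped == header
            out.append(line)
            if in_target:
                found = True
                out.append("disabled = true")
            continue
        if in_target and stripped.partition("=")[0].strip() == "disabled":
            continue  # old value replaced by the line written under the header
        out.append(line)
    if not found:
        raise KeyError(f"stanza {header} not found")
    return "\n".join(out) + "\n"
```

Read the local file, pass its text and `"vmw_esxlog_interruption_alert"` to the function, write the result back, and then restart Splunk Enterprise as described in the last step.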

This documentation applies to the following versions of Splunk® App for VMware (EOL): 3.1.1, 3.1.2, 3.1.3, 3.1.4
