Overview

The Splunk Common Information Model Add-on (Splunk_SA_CIM) version 3.0 includes a number of pre-configured data models you can use with Splunk 6.x to create reports and dashboards.

Note: This information was previously part of the SA-CommonInformationModel supporting add-on, which was included with the Splunk App for Enterprise Security.

New Feature Highlights

The Splunk Common Information Model Add-on contains:

- Splunk 6 data models (JSON files) that implement the CIM

- Expanded domain coverage for inventory, performance, JVM, network sessions, and more

The Splunk Common Information Model (CIM) is a set of field names and tags for event data, which are used to define the least common denominator of a domain of interest. These tags and fields provide a standard method of parsing, categorizing, and normalizing data. These fields and tags are documented in this manual and implemented as JSON data model files in the Splunk_SA_CIM app.

The Common Information Model is important for bringing data sources into Splunk apps. Data indexed with Splunk is much easier to work with when it conforms to a standardized data model. Using the fields and tags prescribed by the CIM makes it far more likely that your data will work as expected with Splunk-developed and Splunk-supported apps.

With the Common Information Model and Splunk 6.x, a developer, services engineer, or advanced customer should be able to map a new data source to the appropriate domain interface, validate that the interface returns the expected data, and then start writing or using an app that expects that interface.
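The "validate" step can be pictured as checking that every event tagged for a domain actually populates the fields that domain expects. The sketch below is a minimal, hypothetical illustration of that idea in plain Python, not a Splunk feature or API: the `DOMAIN_INTERFACE` dictionary, its tag and field list (an assumed subset, not the full CIM definition of any data model), and the helper names are all invented for the example.

```python
from typing import Iterable

# Hypothetical domain interface, for illustration only: the tag an event must
# carry to belong to the domain, and the fields it is expected to populate.
# This is an assumed subset, not the full CIM definition of any data model.
DOMAIN_INTERFACE = {
    "tag": "authentication",
    "fields": {"action", "src", "dest", "user"},
}


def missing_fields(event: dict, interface: dict) -> set:
    """Return the interface fields that are absent or empty in one event."""
    return {name for name in interface["fields"] if not event.get(name)}


def validate(events: Iterable[dict], interface: dict) -> list:
    """Return (position, missing fields) for each in-domain event with gaps."""
    problems = []
    for position, event in enumerate(events):
        if interface["tag"] not in event.get("tags", []):
            continue  # event is outside this domain, so it is not checked
        gaps = missing_fields(event, interface)
        if gaps:
            problems.append((position, gaps))
    return problems


# Events as they might look after mapping a new source (sample data).
mapped_events = [
    {"tags": ["authentication"], "action": "success", "src": "10.0.0.5",
     "dest": "web01", "user": "alice"},
    {"tags": ["authentication"], "action": "failure", "src": "10.0.0.9",
     "user": "bob"},
    {"tags": ["network"], "src": "10.0.0.1"},
]

print(validate(mapped_events, DOMAIN_INTERFACE))  # [(1, {'dest'})]
```

Here the second event is flagged because its mapping never populated `dest`, while the third event is skipped because it does not carry the domain's tag.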

Common Information Model

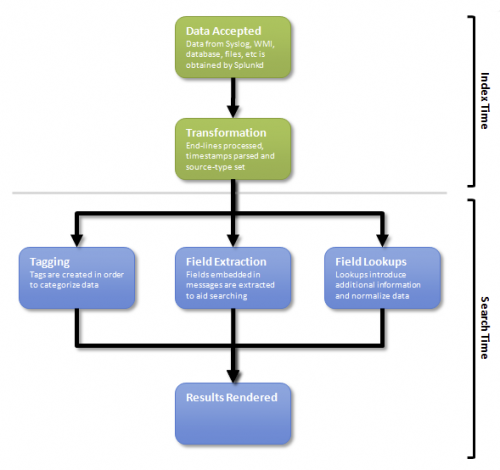

Splunk Enterprise can flexibly search and analyze highly diverse machine data because it applies schema at search time (late binding, or "schema-on-the-fly"). The Common Information Model (CIM) defines relationships in the underlying data while leaving the raw machine data intact. Tags and fields map these relationships at search time.

Why the Common Information Model is Important

By default, Splunk provides powerful search capabilities for generic IT data. However, more advanced reporting and correlation require that the data be normalized, categorized, and parsed.

Parsing

Unlike simple text-based search, the robust reporting in some applications relies heavily on field extraction. Parsing occurs at index time, during the transformation phase, and at search time, when fields are extracted. See "Extract fields and assign tags" in this manual, and the tags and fields list, for guidance on parsing additional data.
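To make search-time extraction concrete, the following minimal sketch keeps each raw event untouched and derives fields from it only when a search runs. It is an illustration in plain Python, not how Splunk implements extraction internally; the sample log lines, the `SSH_LOGIN` regular expression, and the `extract_fields` and `search` helpers are hypothetical. The `_raw` key mirrors the name Splunk uses for the raw event text.

```python
import re

# Raw events are kept exactly as received; no fields are stored with them.
RAW_EVENTS = [
    "2014-03-01T10:15:00 sshd[411]: Failed password for bob from 10.0.0.9",
    "2014-03-01T10:16:02 sshd[412]: Accepted password for alice from 10.0.0.5",
]

# Hypothetical search-time extraction rule using named capture groups.
SSH_LOGIN = re.compile(
    r"sshd\[\d+\]: (?P<result>Failed|Accepted) password for (?P<user>\S+) from (?P<src>\S+)"
)


def extract_fields(raw: str) -> dict:
    """Apply the schema when the event is read: keep the raw text, add any matches."""
    event = {"_raw": raw}
    match = SSH_LOGIN.search(raw)
    if match:
        event.update(match.groupdict())
    return event


def search(raw_events, **criteria):
    """Extract fields on the fly, then keep events whose fields match every criterion."""
    for raw in raw_events:
        event = extract_fields(raw)
        if all(event.get(name) == value for name, value in criteria.items()):
            yield event


for event in search(RAW_EVENTS, result="Failed"):
    print(event["user"], event["src"])  # bob 10.0.0.9
```

Because the extraction rule is applied only at read time, it can be changed or extended later without rewriting the stored events.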

Categorizing

Various applications and add-ons use Splunk's event types and event type tagging to categorize different types of data, such as security data. Their searches use event types and tags to query for matching data. See "Extract fields and assign tags" in this manual, and the tags and fields list, for guidance on categorizing additional data.
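The relationship between event types and tags can be modeled in a few lines: an event type is a named search that matches certain events, and tags are attached to event types rather than to individual events. In this hypothetical sketch, each event type's search is stood in for by a Python predicate, and the event type names, sourcetypes, and sample events are invented for illustration. The `eventtype` and `tag` keys mirror the field names Splunk uses for these values.

```python
# Hypothetical event types: each name maps to a predicate that stands in for
# the search string defining that event type.
EVENT_TYPES = {
    "sshd_login": lambda e: e.get("sourcetype") == "sshd" and "password for" in e.get("_raw", ""),
    "vpn_login": lambda e: e.get("sourcetype") == "vpn" and "logon" in e.get("_raw", ""),
}

# Hypothetical tag assignments: tags belong to event types, not to single events.
EVENT_TYPE_TAGS = {
    "sshd_login": {"authentication"},
    "vpn_login": {"authentication"},
}


def categorize(event: dict) -> dict:
    """Attach the matching event types, then the union of their tags."""
    eventtypes = [name for name, matches in EVENT_TYPES.items() if matches(event)]
    tags = set()
    for name in eventtypes:
        tags |= EVENT_TYPE_TAGS.get(name, set())
    return {**event, "eventtype": eventtypes, "tag": sorted(tags)}


def search_by_tag(events, tag: str) -> list:
    """Query by tag, without needing to know which source produced each event."""
    return [event for event in map(categorize, events) if tag in event["tag"]]


events = [
    {"sourcetype": "sshd", "_raw": "Failed password for bob from 10.0.0.9"},
    {"sourcetype": "vpn", "_raw": "user alice logon from 203.0.113.7"},
    {"sourcetype": "sshd", "_raw": "Server listening on 0.0.0.0 port 22"},
]

for event in search_by_tag(events, "authentication"):
    print(event["sourcetype"], event["eventtype"])
# sshd ['sshd_login']
# vpn ['vpn_login']
```

An app that searches for the `authentication` tag finds events from both sources, while the sshd startup message matches no event type and is left out.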

Normalizing

The objective of normalization is to use the same field names and values for equivalent events from different sources or vendors. Reports and correlation searches that use normalized data can present a unified view of a data domain across heterogeneous vendor data formats. Data is normalized when semantically equivalent events from different products and vendors, formatted in different ways, have the same field values.

Lookups are used to normalize event data by replacing field names or values with standardized names and values. Lookups can replace field values (such as replacing "severity=med" with "severity=medium") or field names (such as replacing "sev=high" with "severity=high"). See "Normalize data" in this manual for more information about normalizing data.
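The two kinds of replacement described above, one for values and one for names, can be modeled with plain dictionaries. This is a minimal sketch of the idea, not Splunk's lookup mechanism; the `SEVERITY_LOOKUP` and `FIELD_NAME_LOOKUP` tables and the `normalize` function are hypothetical, and the sample events reuse the `severity=med` and `sev=high` examples from the text.

```python
# Hypothetical lookup table: vendor-specific severity values -> standardized values.
SEVERITY_LOOKUP = {"med": "medium", "hi": "high", "lo": "low"}

# Hypothetical rename table: vendor-specific field names -> standardized names.
FIELD_NAME_LOOKUP = {"sev": "severity"}


def normalize(event: dict) -> dict:
    """Rename fields first, then standardize the severity value."""
    normalized = {FIELD_NAME_LOOKUP.get(name, name): value for name, value in event.items()}
    if "severity" in normalized:
        value = normalized["severity"]
        normalized["severity"] = SEVERITY_LOOKUP.get(value, value)
    return normalized


vendor_a = {"host": "fw01", "severity": "med"}
vendor_b = {"host": "ids02", "sev": "high"}

print(normalize(vendor_a))  # {'host': 'fw01', 'severity': 'medium'}
print(normalize(vendor_b))  # {'host': 'ids02', 'severity': 'high'}
```

Renaming fields before looking up values means that a vendor using `sev=med` would still end up with `severity=medium`, so both steps apply regardless of which naming a source uses.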

See the Splunk Knowledge Manager Manual for more information on how to set up Splunk to accept new data or to learn about "What Splunk can index" and the types of data Splunk can import.

This documentation applies to the following versions of Splunk® Common Information Model Add-on: 3.0, 3.0.1, 3.0.2
