Example 2: OSSEC

This example shows how to create an add-on for OSSEC, an open-source host-based intrusion detection system (IDS). This example is somewhat more complex than the Blue Coat example and shows how to perform the following additional tasks:

- Use regular expressions to extract the necessary fields.

- Convert the values in the severity field to match the format required in the Common Information Model

- Create multiple event types to identify different types of events within a single data source.

Step 1: Capture and index the data

Get data into Splunk

To get started, set up a data input to get OSSEC data into Splunk. OSSEC submits logs via syslog over UDP port 514, so we use a network-based data input. Once the add-on is completed and installed, it detects OSSEC data sent over UDP port 514 and automatically assigns it the correct source type.

Choose a folder name for the add-on

The name of this add-on will be Splunk_TA-ossec. Create the add-on folder at $SPLUNK_HOME/etc/apps/Splunk_TA-ossec.

Define a source type for the data

For this add-on use the source type ossec to identify data associated with the OSSEC intrusion detection system.

Confirm that the data has been captured

After the source type is defined for the add-on, set the source type for the data input.
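One way to confirm that the input is receiving data is to send a hand-made test message to it. The following is a minimal sketch, not part of the add-on: the function name `send_test_alert` and the sample alert text are hypothetical, and the alert is constructed only to resemble the OSSEC syslog layout that this example parses. Real OSSEC output may differ.

```python
import socket

# Hypothetical sample alert (an assumption, not captured OSSEC output).
# It contains "ossec:" so that the source type override used in this
# example would apply to it.
SAMPLE_ALERT = (
    "ossec: Alert Level: 3; Rule: 5715 - SSHD authentication success.; "
    "Location: (web01) 10.0.0.5->/var/log/secure; "
    "srcip: 192.0.2.10; user: root; Accepted password for root"
)


def send_test_alert(host, port, message=SAMPLE_ALERT):
    """Send one syslog-style datagram over UDP and return the bytes sent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(message.encode("utf-8"), (host, port))
```

Calling `send_test_alert("127.0.0.1", 514)` on the Splunk host, assuming the UDP input from this step listens there, should produce one event that can then be found by searching for the source type.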

Handle timestamp recognition

Splunk successfully parses the date and time so there is no need to customize the timestamp recognition.

Configure line breaking

Each log message ends with a newline; therefore, line merging must be disabled to prevent multiple messages from being combined into one event. Disable line merging by setting SHOULD_LINEMERGE to false in default/props.conf:

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec

Step 2: Identify relevant IT security events

Identify which events are relevant and need to be displayed in the Enterprise Security dashboards.

Understand the data and Enterprise Security dashboards

The OSSEC intrusion detection system should provide data for the Intrusion Center dashboard.

Step 3: Create field extractions and aliases

The next step is to create the field extractions that will populate the fields according to the Common Information Model. Before we begin, restart Splunk so that it will recognize the add-on and source type defined in the previous steps.

After reviewing the Common Information Model and the Dashboard Requirements Matrix, we determine that the OSSEC add-on needs to include the following fields:

| Domain | Sub-Domain | Field Name | Data Type |
|---|---|---|---|
| Network Protection | Intrusion Detection | signature | string |
| Network Protection | Intrusion Detection | dvc | int |
| Network Protection | Intrusion Detection | category | variable |
| Network Protection | Intrusion Detection | severity | variable |
| Network Protection | Intrusion Detection | src | string |
| Network Protection | Intrusion Detection | dest | string |
| Network Protection | Intrusion Detection | user | string |
| Network Protection | Intrusion Detection | vendor | string |
| Network Protection | Intrusion Detection | product | string |

Create extractions

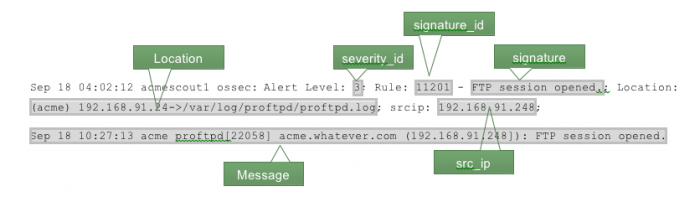

OSSEC data is in a proprietary format that does not use key-value pairs or any kind of standard delimiter between the fields. Therefore, we will have to write a regular expression to parse out the individual fields. Below is an outline of a log message with the relevant fields highlighted:

We see that the severity field includes an integer, while the Common Information Model requires a string. Therefore, we will extract this into a different field, severity_id, and perform the necessary conversion later to produce the severity field.

Extracting the Location, Message, severity_id, signature and src_ip fields

Now we edit default/transforms.conf and add a stanza that extracts the fields identified above:

[force_sourcetype_for_ossec]

DEST_KEY = MetaData:Sourcetype

REGEX = ossec\:

FORMAT = sourcetype::ossec

[kv_for_ossec]

REGEX = Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;\s+Location\:\s+([^;]+)\;\s*(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*(user\:\s+([^;]+)\;){0,1}\s*(.*)

FORMAT = severity_id::"$1" signature_id::"$2" signature::"$3" Location::"$4" src_ip::"$6" user::"$8" Message::"$9"

Then we enable the statement in default/props.conf in our add-on folder:

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec
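The kv_for_ossec regular expression can be sanity-checked outside Splunk before the add-on is deployed. The sketch below uses Python's `re` module, which is an assumption of convenience: Splunk uses its own regular expression engine, and although this pattern uses only syntax common to both, the result is a quick check and not a substitute for testing in Splunk. The sample message and the helper name `extract_fields` are hypothetical.

```python
import re

# The kv_for_ossec REGEX from transforms.conf, written as one pattern.
KV_FOR_OSSEC = re.compile(
    r"Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;"
    r"\s+Location\:\s+([^;]+)\;\s*"
    r"(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*"
    r"(user\:\s+([^;]+)\;){0,1}\s*(.*)"
)

# Capture-group numbers follow the FORMAT line: $1, $2, $3, $4, $6, $8, $9.
GROUP_TO_FIELD = {
    1: "severity_id", 2: "signature_id", 3: "signature",
    4: "Location", 6: "src_ip", 8: "user", 9: "Message",
}


def extract_fields(raw):
    """Return the fields the transform would assign, skipping empty groups."""
    match = KV_FOR_OSSEC.search(raw)
    if match is None:
        return {}
    return {name: match.group(num)
            for num, name in GROUP_TO_FIELD.items() if match.group(num)}


# Hypothetical message shaped to fit the pattern (not captured OSSEC output).
SAMPLE = ("ossec: Alert Level: 3; Rule: 5715 - SSHD authentication success.; "
          "Location: (web01) 10.0.0.5->/var/log/secure; "
          "srcip: 192.0.2.10; user: root; Accepted password for root")

if __name__ == "__main__":
    for name, value in extract_fields(SAMPLE).items():
        print(f"{name} = {value}")
```

Groups 5 and 7 wrap the optional srcip and user clauses, which is why the FORMAT line skips $5 and $7 and reads the inner groups $6 and $8 instead.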

Extract the dest field

Some of the fields need additional field extraction to fully match the Common Information Model. The Location field actually includes several separate fields within a single field value. Add the following [Location_kv_for_ossec] stanza to default/transforms.conf to extract the destination DNS name, destination IP address and original source address:

[force_sourcetype_for_ossec]

DEST_KEY = MetaData:Sourcetype

REGEX = ossec\:

FORMAT = sourcetype::ossec

[kv_for_ossec]

REGEX = Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;\s+Location\:\s+([^;]+)\;\s*(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*(user\:\s+([^;]+)\;){0,1}\s*(.*)

FORMAT = severity_id::"$1" signature_id::"$2" signature::"$3" Location::"$4" src_ip::"$6" user::"$8" Message::"$9"

[Location_kv_for_ossec]

SOURCE_KEY = Location

REGEX = (\(([^\)]+)\))*\s*(.*?)(->)(.*)

FORMAT = dest_dns::"$2" dest_ip::"$3" orig_source::"$5"

Then we enable the new transform in default/props.conf in the Splunk_TA-ossec folder:

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec, Location_kv_for_ossec

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec
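To see how the Location_kv_for_ossec pattern splits a Location value, the regular expression can be tried in isolation. This is a minimal sketch using Python's `re` module as a stand-in for Splunk's engine, which is an assumption. The Location values and the helper name `split_location` are hypothetical.

```python
import re

# The Location_kv_for_ossec REGEX from transforms.conf.
LOCATION_RE = re.compile(r"(\(([^\)]+)\))*\s*(.*?)(->)(.*)")


def split_location(location):
    """Map capture groups to fields as the FORMAT line does ($2, $3, $5)."""
    match = LOCATION_RE.search(location)
    if match is None:
        return {}
    fields = {
        "dest_dns": match.group(2),     # text inside the parentheses, if any
        "dest_ip": match.group(3),      # text between the name and "->"
        "orig_source": match.group(5),  # everything after "->"
    }
    # Drop groups that did not take part in the match or matched nothing.
    return {name: value for name, value in fields.items() if value}


if __name__ == "__main__":
    print(split_location("(web01) 10.0.0.5->/var/log/secure"))
    print(split_location("10.0.0.5->/var/log/secure"))
```

When the parenthesized name is absent, only dest_ip and orig_source are produced, which is the case the dest handling in the next part of this step has to cover.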

The "Location_kv_for_ossec" stanza creates two fields that represent the destination (either by the DNS name or destination IP address). We need a single field named "dest" that represents the destination. To handle this, add stanzas to default/transforms.conf that populate the destination field if the dest_ip or dest_dns is not empty:

[force_sourcetype_for_ossec]

DEST_KEY = MetaData:Sourcetype

REGEX = ossec\:

FORMAT = sourcetype::ossec

[kv_for_ossec]

REGEX = Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;\s+Location\:\s+([^;]+)\;\s*(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*(user\:\s+([^;]+)\;){0,1}\s*(.*)

FORMAT = severity_id::"$1" signature_id::"$2" signature::"$3" Location::"$4" src_ip::"$6" user::"$8" Message::"$9"

[Location_kv_for_ossec]

SOURCE_KEY = Location

REGEX = (\(([^\)]+)\))*\s*(.*?)(->)(.*)

FORMAT = dest_dns::"$2" dest_ip::"$3" orig_source::"$5"

[dest_ip_as_dest]

SOURCE_KEY = dest_ip

REGEX = (.+)

FORMAT = dest::"$1"

[dest_dns_as_dest]

SOURCE_KEY = dest_dns

REGEX = (.+)

FORMAT = dest::"$1"

Note: The regular expressions above are designed to match only if the string has at least one character. This ensures that the destination is not an empty string.

Next, enable the field extractions created in default/transforms.conf by adding them to default/props.conf. We want to set up our field extractions to ensure that we get the DNS name instead of the IP address if both are available. We do this by placing the "dest_dns_as_dest" transform first. This works because Splunk processes field extractions in order, stopping on the first one that matches.

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec, Location_kv_for_ossec

REPORT-dest_for_ossec = dest_dns_as_dest,dest_ip_as_dest

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec
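The intended outcome of the dest_dns_as_dest and dest_ip_as_dest ordering can be modeled in a few lines. The sketch below illustrates only the precedence described in this section (DNS name first, IP address as the fallback, never an empty string). It does not reproduce Splunk's internal processing, and the function name `resolve_dest` is hypothetical.

```python
def resolve_dest(fields):
    """Return the value that should end up in "dest", or None if neither
    candidate field has at least one character."""
    # Candidates are listed in the same order as REPORT-dest_for_ossec.
    for key in ("dest_dns", "dest_ip"):
        value = fields.get(key)
        if value:  # mirrors REGEX = (.+): the value must not be empty
            return value
    return None


if __name__ == "__main__":
    print(resolve_dest({"dest_dns": "web01", "dest_ip": "10.0.0.5"}))
    print(resolve_dest({"dest_ip": "10.0.0.5"}))
```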

Extract the src field

We populated the source IP into the field "src_ip" but the CIM requires a separate "src" field as well. We can create this by adding a field alias in default/props.conf that populates the "src" field with the value in "src_ip":

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec, Location_kv_for_ossec

REPORT-dest_for_ossec = dest_dns_as_dest,dest_ip_as_dest

FIELDALIAS-src_for_ossec = src_ip as src

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec

Normalize the severity field

The OSSEC data includes a field that contains an integer value for the severity; however, the Common Information Model requires a string value for the severity. Therefore, we need to convert the input value to a value that matches the Common Information Model. We do this using a lookup table.

First, map the "severity_id" values to the corresponding severity string and create a CSV file in lookups/ossec_severities.csv:

severity_id,severity

0,informational

1,informational

2,informational

3,informational

4,error

5,error

6,low

7,low

8,low

9,medium

10,medium

11,medium

12,high

13,high

14,high

15,critical

Next, we add the lookup file definition to default/transforms.conf:

[force_sourcetype_for_ossec]

DEST_KEY = MetaData:Sourcetype

REGEX = ossec\:

FORMAT = sourcetype::ossec

[kv_for_ossec]

REGEX = Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;\s+Location\:\s+([^;]+)\;\s*(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*(user\:\s+([^;]+)\;){0,1}\s*(.*)

FORMAT = severity_id::"$1" signature_id::"$2" signature::"$3" Location::"$4" src_ip::"$6" user::"$8" Message::"$9"

[Location_kv_for_ossec]

SOURCE_KEY = Location

REGEX = (\(([^\)]+)\))*\s*(.*?)(->)(.*)

FORMAT = dest_dns::"$2" dest_ip::"$3" orig_source::"$5"

[dest_ip_as_dest]

SOURCE_KEY = dest_ip

REGEX = (.+)

FORMAT = dest::"$1"

[dest_dns_as_dest]

SOURCE_KEY = dest_dns

REGEX = (.+)

FORMAT = dest::"$1"

[ossec_severities_lookup]

filename = ossec_severities.csv

Then we add the lookup to default/props.conf:

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec, Location_kv_for_ossec

REPORT-dest_for_ossec = dest_dns_as_dest,dest_ip_as_dest

FIELDALIAS-src_for_ossec = src_ip as src

LOOKUP-severity_for_ossec = ossec_severities_lookup severity_id OUTPUT severity

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec
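The effect of the lookup can be previewed outside Splunk. The sketch below reads the same rows as lookups/ossec_severities.csv and adds a severity value to an event that carries a severity_id. It only approximates what the LOOKUP setting does at search time, and the helper names `load_severities` and `add_severity` are hypothetical.

```python
import csv
import io

# Contents of lookups/ossec_severities.csv as defined in this step.
OSSEC_SEVERITIES_CSV = """\
severity_id,severity
0,informational
1,informational
2,informational
3,informational
4,error
5,error
6,low
7,low
8,low
9,medium
10,medium
11,medium
12,high
13,high
14,high
15,critical
"""


def load_severities(text=OSSEC_SEVERITIES_CSV):
    """Build a severity_id -> severity mapping from the CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["severity_id"]: row["severity"] for row in reader}


def add_severity(event, table):
    """Return a copy of the event with "severity" added when a row matches."""
    enriched = dict(event)
    key = str(event.get("severity_id", "")).strip()
    if key in table:
        enriched["severity"] = table[key]
    return enriched


if __name__ == "__main__":
    print(add_severity({"severity_id": "12"}, load_severities()))
```

An event whose severity_id has no row in the table is returned without a severity field, so unexpected alert levels are easy to spot.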

Define the vendor and product fields

The last fields to populate are the vendor and product fields. To populate these, add stanzas to default/transforms.conf to statically define them:

[force_sourcetype_for_ossec]

DEST_KEY = MetaData:Sourcetype

REGEX = ossec\:

FORMAT = sourcetype::ossec

[kv_for_ossec]

REGEX = Alert Level\:\s+([^;]+)\;\s+Rule\:\s+([^\s]+)\s+-\s+([^\.]+)\.{0,1}\;\s+Location\:\s+([^;]+)\;\s*(srcip\:\s+(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\;){0,1}\s*(user\:\s+([^;]+)\;){0,1}\s*(.*)

FORMAT = severity_id::"$1" signature_id::"$2" signature::"$3" Location::"$4" src_ip::"$6" user::"$8" Message::"$9"

[Location_kv_for_ossec]

SOURCE_KEY = Location

REGEX = (\(([^\)]+)\))*\s*(.*?)(->)(.*)

FORMAT = dest_dns::"$2" dest_ip::"$3" orig_source::"$5"

[dest_ip_as_dest]

SOURCE_KEY = dest_ip

REGEX = (.+)

FORMAT = dest::"$1"

[dest_dns_as_dest]

SOURCE_KEY = dest_dns

REGEX = (.+)

FORMAT = dest::"$1"

[ossec_severities_lookup]

filename = ossec_severities.csv

[product_static_hids]

REGEX = (.)

FORMAT = product::"HIDS"

[vendor_static_open_source_security]

REGEX = (.)

FORMAT = vendor::"Open Source Security"

Next, enable the stanzas in default/props.conf:

[source::....ossec]

sourcetype=ossec

[ossec]

SHOULD_LINEMERGE = false

REPORT-0kv_for_ossec = kv_for_ossec, Location_kv_for_ossec

REPORT-dest_for_ossec = dest_dns_as_dest,dest_ip_as_dest

FIELDALIAS-src_for_ossec = src_ip as src

LOOKUP-severity_for_ossec = ossec_severities_lookup severity_id OUTPUT severity

REPORT-product_for_ossec = product_static_hids

REPORT-vendor_for_ossec = vendor_static_open_source_security

[source::udp:514]

TRANSFORMS-force_sourcetype_for_ossec_syslog = force_sourcetype_for_ossec

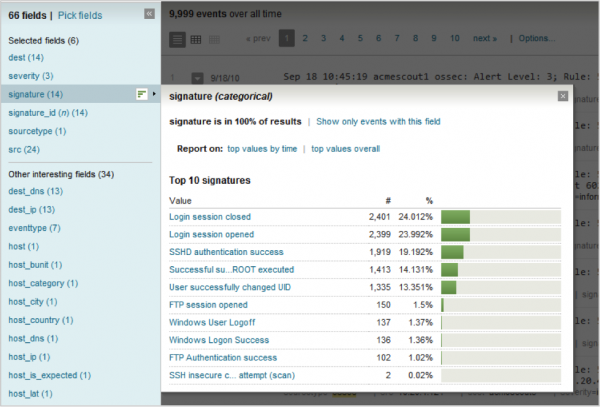

Verify field extractions

Now that we have created the field extractions, we need to verify that they are correct. First, we need to restart Splunk so that it recognizes the lookups we created. Next, we search for the source type in the Search dashboard:

sourcetype="ossec"

When the results are displayed, we select Pick Fields to choose the fields that ought to be populated. Once the fields are selected, we can hover over the field name to display the values that have been observed:

Step 4: Create tags

Now that the field extractions are in place, we need to add the tags.

Identify necessary tags

The Common Information Model indicates that intrusion-detection data must be tagged with "host" and "ids" to indicate that it is data from a host-based IDS. Additionally, attack-related events must be tagged as "attack". Below is the information from the Common Information Model:

| Domain | Sub-Domain | Macro | Tags |
|---|---|---|---|
| Network Protection | IDS | ids_attack | ids attack |
| Network Protection | IDS | | host |
| Network Protection | IDS | | ids |

Create tag properties

Now that we know what tags we need, let's create them. First, we need to create the event types that we can assign the tags to. To do so, we create an event type in eventtypes.conf that assigns an event type of "ossec" to all data with the source type of ossec.

[ossec]

search = sourcetype=ossec

#tags = host ids

Optionally, create an additional event type, "ossec_attack", which applies only to those OSSEC events that are related to attacks. The search should define what an attack would be based upon categorization in the IDS source and prioritization. For example:

[ossec_attack]

search = sourcetype=ossec AND "other things" to search AND "find attacks"

Next, we assign the tags in tags.conf:

[eventtype=ossec]

host = enabled

ids = enabled

[eventtype=ossec_attack]

attack = enabled
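Before restarting Splunk, it can help to confirm that tags.conf assigns the tags we expect to each event type. The sketch below reads the stanzas with Python's `configparser`, which is only an approximation of how Splunk parses .conf files and is an assumption made for this check. The helper name `tags_for_eventtypes` is hypothetical.

```python
import configparser

# The tags.conf stanzas from this step.
TAGS_CONF = """\
[eventtype=ossec]
host = enabled
ids = enabled

[eventtype=ossec_attack]
attack = enabled
"""


def tags_for_eventtypes(conf_text, eventtypes):
    """Return the set of enabled tags for the given event type names."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    tags = set()
    for eventtype in eventtypes:
        section = f"eventtype={eventtype}"
        if parser.has_section(section):
            tags.update(tag for tag, state in parser[section].items()
                        if state == "enabled")
    return tags


if __name__ == "__main__":
    # An attack-related OSSEC event matches both event types.
    print(sorted(tags_for_eventtypes(TAGS_CONF, ["ossec", "ossec_attack"])))
```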

Verify the data

Now that the tags have been created, verify that the tags are being applied. Search for the source type in the Search dashboard:

sourcetype="ossec"

Review the entries and look for the tag statements (they should be present under the log message).

Check Enterprise Security dashboards

Now that the tags and field extractions are complete, the data should be ready to show up in Enterprise Security. The extracted fields and the defined tags fit the Intrusion Center dashboard, so the OSSEC data ought to be visible in that view. Because Enterprise Security uses summary indexing, the OSSEC data does not appear immediately: expect a delay of up to an hour after the add-on is complete before the dashboard begins populating.

Step 5: Document and package the add-on

Once the add-on is completed and verified, create a README file, clean up the directory structure, and tar or zip the add-on directory.

Document the add-on

Create a README with the information necessary for others to use the add-on. The following file is created with the name README under the root add-on directory:

===OSSEC add-on===

Author: John Doe

Version/Date: 1.3 September 2013

Supported product(s): This add-on supports Open Source Security (OSSEC) IDS 2.5

Source type(s): This add-on will process data that is source typed as "ossec".

Input requirements: Syslog data sent to UDP port 514

===Using this add-on===

Configuration: Automatic

Syslog data sent to UDP port 514 will automatically be detected as OSSEC data and processed accordingly.

To process data that is sent to another port, configure the data input with a source type of "ossec".

Package the add-on

Package up the OSSEC add-on by converting it into a zip archive named Splunk_TA-ossec.zip. To share the add-on, go to Splunkbase, click upload an app and follow the instructions for the upload.
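The packaging step can be scripted. The following is a minimal sketch that zips the add-on folder with Python's standard `shutil.make_archive`. The function name `package_addon` and its parameters are hypothetical, and the apps directory passed in is assumed to be the folder that contains Splunk_TA-ossec.

```python
import shutil
from pathlib import Path


def package_addon(apps_dir, addon_name="Splunk_TA-ossec", out_dir="."):
    """Zip <apps_dir>/<addon_name> into <out_dir>/<addon_name>.zip.

    The archive keeps the add-on folder as its top-level entry, so that
    extracting it recreates Splunk_TA-ossec/ with its contents.
    """
    apps_path = Path(apps_dir).resolve()
    if not (apps_path / addon_name).is_dir():
        raise FileNotFoundError(f"add-on folder not found: {apps_path / addon_name}")
    base_name = Path(out_dir).resolve() / addon_name
    return shutil.make_archive(
        str(base_name), "zip", root_dir=str(apps_path), base_dir=addon_name
    )
```

For example, `package_addon("/opt/splunk/etc/apps")` would write Splunk_TA-ossec.zip to the current directory, where /opt/splunk stands in for the actual value of $SPLUNK_HOME.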


This documentation applies to the following versions of Splunk® Enterprise Security: 3.0, 3.0.1
