Create an add-on

Before you create an add-on, understand the format, structure, and meaning of the IT security data that you want to import into the Splunk App for Enterprise Security.

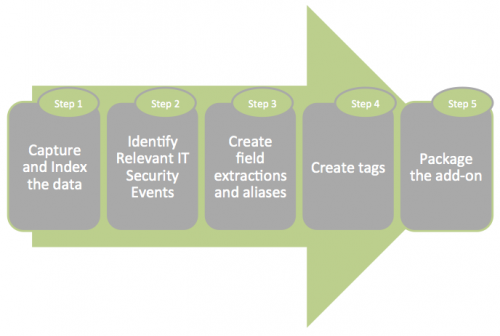

Creating an add-on involves five steps.

- Splunk Education offers a self-paced eLearning class on Building Add-ons.

Step 1: Capture and index the data

Although this step doesn't require anything beyond the normal process of getting data into Splunk, decisions that you make during this phase can affect how an add-on identifies and normalizes the data at search time.

The tasks in this step include:

- Get the source data into Splunk

- Choose a name for your add-on directory

- Define a source type name to apply to the data

Note: Changes made in Step 1 affect the indexing of data, so this step will require dumping the indexed data and starting over if the steps are done incorrectly. Steps 2-4 do not affect indexing and can be altered while Enterprise Security is running without restarting the server.

Get data into Splunk

Getting data into Splunk is necessary before Enterprise Security can normalize the data at search time and use the information to populate dashboards and reports. This step is highlighted because of the different ways that some data sources can be captured, thus resulting in different formats of data. As you develop an add-on, ensure that you are accurately defining the method by which the data will be captured and indexed within Splunk.

Common data input techniques used to bring in data from security-relevant devices, systems, and software include:

- Streaming data over TCP or UDP (for example, syslog on UDP 514)

- API-based scripted input (for example, Qualys)

- SQL-based scripted input (for example, Sophos, McAfee EPO)

For information about getting data into Splunk, see "What Splunk can index" in the core Splunk product documentation.
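As a rough illustration of the streaming and scripted techniques listed above, an inputs.conf in the add-on might look like the following sketch. The source type names, the script name poll_scanner.sh, and the polling interval are hypothetical placeholders, not values taken from a shipped add-on.

```ini
## inputs.conf (sketch; all names below are placeholders)

# Streaming input: listen for syslog on UDP 514
[udp://514]
sourcetype = example:syslog
# Record the sender's IP address as the host value
connection_host = ip

# Scripted input: run a hypothetical polling script once an hour
[script://./bin/poll_scanner.sh]
interval = 3600
sourcetype = example:scanner
disabled = 0
```

Whichever method you choose determines the raw format of the events, so settle on it before you write any extractions.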

The way in which a particular data set is captured and indexed in Splunk is documented in the README file in the add-on directory.

Choose a folder name for the add-on

An add-on is packaged as a Splunk app and must include all of the basic components of a Splunk app in addition to the components required to process the incoming data. All Splunk apps (including technology add-ons) reside in $SPLUNK_HOME/etc/apps.

The following table lists the files and folders of a basic technology add-on.

The transforms in the transforms.conf file describe operations that you can perform on the data. The props.conf file references the transforms so that they execute for a particular source type. In actual use, the distinction is not as clear because you can access many of the operations in props.conf directly and avoid using transforms.conf.

See "Configure custom fields at search time" in the core Splunk product documentation.

When you build a new add-on, choose a name for the add-on folder that follows this naming convention:

TA-<datasource>

The add-on folder always begins with "TA-". This lets you distinguish between Enterprise Security add-ons and other add-ons within your Splunk deployment. The <datasource> section of the name represents the specific technology or data source that this add-on is for. Add-ons that ship as part of the Splunk App for Enterprise Security follow this convention.

Examples include:

- TA-bluecoat

- TA-cisco

- TA-snort
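Because an add-on is packaged as a Splunk app, its folder normally carries an app.conf in the default directory. The following is a minimal sketch for a hypothetical TA-example folder; the label, author, description, and version values are assumptions for illustration only.

```ini
## default/app.conf (sketch for a hypothetical TA-example add-on)

[install]
state = enabled

[ui]
# Add-ons usually have no user interface of their own
is_visible = false
label = Example Add-on

[launcher]
author = Your Name
description = Hypothetical add-on for the Example product
version = 1.0.0
```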

Important: A template for creating your own add-on that includes a folder structure and sample files is available in the Enterprise Security Installer in: $SPLUNK_HOME/etc/apps/SplunkEnterpriseSecurityInstaller/default/src/etc/apps/TA-template.zip.

Define a source type for the data

By default, Splunk sets a source type for a given data input. Each add-on has at least one source type defined for the data that is captured and indexed within Splunk. This requires an override of the automatic source type that Splunk attempts to assign to the data source. The source type definition is handled by Splunk at index time, along with line breaking, timestamp extraction, and timezone extraction. All other information is set at search time. See "Specify source type for a source" in the core Splunk product documentation.

Add an additional source type for your add-on, making the source type name match the product so that the add-on will work. This process overrides some default Splunk behavior. For information about how to define a source type within Splunk, see "Override automatic source type assignment" in the core Splunk product documentation.

Choose a source type name to match the name of the product for which you are building an add-on, for example, "nessus." Add-ons can cover more than one product by the same vendor, for example, "juniper," and might require multiple source type definitions. If the data source for which you are building an add-on has more than one data file with different formats, for example, "apache_error_log" and "apache_access_log," you might create multiple source types.

You can define these source types as part of the add-on in inputs.conf, in props.conf alone, or in both props.conf and transforms.conf. You must create these files as part of the add-on. These files contain only the definitions for the source types that the add-on is designed to work with.

You can specify source types in three ways:

- Let Splunk define source types in the data

- Define source types in transforms.conf

- Define source types in inputs.conf

Splunk recommends that you define your source types in inputs.conf. See "Configure rule-based source type recognition" in the core Splunk product documentation.
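The two explicit approaches might be sketched as follows. The monitored path, the "ExampleFW:" pattern, and the example:firewall source type are hypothetical; only the stanza and setting names are standard Splunk configuration.

```ini
## inputs.conf: assign the source type statically on the input
[monitor:///var/log/example/scan.log]
sourcetype = nessus

## props.conf: alternatively, override the source type at index time
[source::udp:514]
TRANSFORMS-force_sourcetype_for_example = force_sourcetype_for_example

## transforms.conf
[force_sourcetype_for_example]
# Hypothetical pattern that identifies the product's events
REGEX = ExampleFW:
FORMAT = sourcetype::example:firewall
DEST_KEY = MetaData:Sourcetype
```

The inputs.conf form is simpler when one input carries one product. The transform form is useful when several products share a single input, such as a common syslog port.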

Tip: In addition to the source type definition, you can add a statement that forces the source type when a file is uploaded with the extension set to the source type name. This lets you import files for a given source type by setting the file extension appropriately, for example, "mylogfile.nessus." You can add this statement to the Splunk_TA-<add-on_name>/default/props.conf file as shown in the following example:

    [source::....nessus]
    sourcetype = nessus

Change the name "nessus" to match your source type.

Note: Usually, the source type of the data is statically defined for the data input. However, you might be able to define a statement that can recognize the source type based on the content of the logs.
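A content-based rule of the kind the note describes could look roughly like this in props.conf. The rule name, the pattern, and the source type are hypothetical, and the 80 percent threshold is only an example value.

```ini
## props.conf (sketch)
[rule::example_firewall_by_content]
sourcetype = example:firewall
# Match when more than 80% of lines contain the pattern
MORE_THAN_80 = ^\S+\s+ExampleFW:
```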

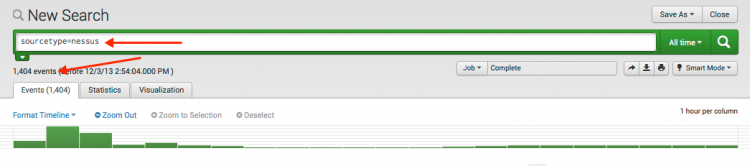

Confirm that your data has been captured

After you decide on a folder name and define the source types in inputs.conf, in props.conf alone, or in both props.conf and transforms.conf, enable your add-on and start collecting your data within Splunk. Turn on the data source by enabling either the stream or the scripted input to begin data collection. Confirm that you are receiving the data and that the source type you defined is working appropriately by searching for the source type you defined in your data.

Restart Splunk so that it can recognize the add-on and the source type defined in this step. After you restart Splunk, it reloads the changes made to search time operations in props.conf and transforms.conf.

If the results of the search are different from what you expected or no results appear, do the following:

- Confirm that the data source has been configured to send data to Splunk. You can validate this by using Splunk to search for keywords and other information within the data.

- If the data source is sent by scripted input, confirm that the scripted input is working correctly. You can do this by checking the data source, script, or other points of failure.

- If the data is indexed by Splunk, but the source type is not defined as you expect, confirm that your source type logic is defined correctly and retest.

Handling timestamp recognition

Splunk recognizes most common timestamps that are found in log data. Occasionally, Splunk might not recognize a timestamp when processing an event. If this situation occurs, you must manually configure the timestamp logic into the add-on. Add the appropriate statements in the props.conf file. For information about how to deal with manual timestamp recognition, see "How timestamp assignment works" in the Splunk documentation.
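As a sketch of manual timestamp configuration, assume a hypothetical source type whose events carry a timestamp such as time="2014-01-12 15:02:08". The source type name and the time zone below are assumptions.

```ini
## props.conf (sketch)
[example:firewall]
# Timestamp follows the literal text time=" in each event
TIME_PREFIX = time="
TIME_FORMAT = %Y-%m-%d %H:%M:%S
# Read at most 19 characters after TIME_PREFIX
MAX_TIMESTAMP_LOOKAHEAD = 19
# Assumed time zone of the device; adjust to match yours
TZ = UTC
```

These settings apply at index time, so data indexed before the change keeps its original timestamps.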

Configure line-breaking

Multiline events can be a challenge when you create an add-on. Splunk handles these types of events by default, but sometimes Splunk will not recognize events as multiline. If the data source is multiline and Splunk has problems recognizing it, see "Configure linebreaking for multiline events" in the core Splunk product documentation. The documentation provides guidance on how to configure Splunk for multiline data sources. Use this information in an add-on by making the necessary modifications to the props.conf file.
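One possible props.conf modification for a multiline source is sketched below. It assumes a hypothetical source type in which every event begins with a date such as 2014-01-12; the source type name and the size limit are placeholders.

```ini
## props.conf (sketch)
[example:app_log]
# Break only where a new line starts with a date such as 2014-01-12
SHOULD_LINEMERGE = false
LINE_BREAKER = ([\r\n]+)(?=\d{4}-\d{2}-\d{2}\s)
# Raise the per-event size limit for long multiline events (bytes)
TRUNCATE = 20000
```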

Step 2: Identify relevant IT security events

After indexing, examine the data to identify the IT security events that the source includes and determine how these events fit into the Enterprise Security dashboards. This step lets you select the correct Common Information Model tags and fields, so that data populates the correct dashboards.

Understand your data and Enterprise Security dashboards

To analyze the data, you need a data sample. If the data is loaded into Splunk, view the data based on the source type defined in Step 1. Determine which dashboards might use this data. To understand what each of the dashboards and panels require and the types of events that are applicable, see the "Dashboard Requirements Matrix" in the Installation and Configuration Manual. Review the data to determine which events need to be normalized for use within Enterprise Security.

Note: The dashboards in Enterprise Security use information that typically appears in the related data sources. The data source for which the add-on is being built might not provide all the information necessary to populate the required dashboards. Look further into the technology that drives the data source to determine whether other data is available or accessible to fill the gap.

This document assumes that you are familiar with the data and can determine the types of events that the data contains.

After you review events and their contents, match the data to the Enterprise Security dashboards. Review the dashboards in the Splunk App for Enterprise Security or check the descriptions in the Enterprise Security User Guide to determine where the events fit into these dashboards.

The dashboards in Enterprise Security are grouped into three domains.

- Access: Provides information about authentication attempts and access control related events (login, logout, access allowed, access failure, use of default accounts, and so on).

- Endpoint: Includes information about endpoints such as malware infections, system configuration, system state (CPU usage, open ports, uptime, and so on), patch status and history (which updates have been applied), and time synchronization information.

- Network: Includes information about network traffic provided from devices such as firewalls, routers, network based intrusion detection systems, network vulnerability scanners, and proxy servers.

Within these domains, find the dashboards that relate to your data. In some cases, your data source might include events that belong in different dashboards, or even in different domains.

Example:

Assume that you capture logs from one of your firewalls. You expect that the data produced by the firewall contains network traffic related events. However, in reviewing the data captured by Splunk you might find that it also contains authentication events (login and logoff) and device change events (policy changes, addition/deletion of accounts, and so on).

Knowing that these events exist helps you to determine which of the dashboards within Enterprise Security are likely to be applicable. In this case, the Access Center, Traffic Center, and Network Changes dashboards are designed to report on the events found in this firewall data source.

You must define the product and vendor fields. These fields are not included in the data itself, so they are populated using a lookup.
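A lookup keyed on the source type is one way to populate these two fields. In the following sketch, the source type, lookup name, CSV file name, and the vendor and product values are all hypothetical.

```ini
## props.conf
[example:firewall]
LOOKUP-vendor_info_for_example = example_vendor_info_lookup sourcetype OUTPUT vendor product

## transforms.conf
[example_vendor_info_lookup]
filename = example_vendor_info.csv

## lookups/example_vendor_info.csv
sourcetype,vendor,product
example:firewall,Example Corp,Example Firewall
```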

Static strings and event fields

Do not assign static strings to event fields because this prevents the field values from being searchable. Map fields that do not exist with a lookup.

For example, here is a log message:

Jan 12 15:02:08 HOST0170 sshd[25089]: [ID 800047 auth.info] Failed publickey for privateuser from 10.11.36.5 port 50244 ssh2

To extract a field "action" from the log message and assign a value of "failure" to that field, either a static field (nonsearchable) or a lookup (searchable) could be used.

For example, to assign a static string, add this information to props.conf and transforms.conf:

    ## props.conf
    [linux_secure]
    REPORT-action_for_sshd_failed_publickey = action_for_sshd_failed_publickey

    ## transforms.conf
    [action_for_sshd_failed_publickey]
    REGEX = Failed\s+publickey\s+for
    FORMAT = action::"failure"
    ## note the static assignment of action=failure above

This approach is not recommended; searching for "action=failure" in Splunk would not return these events, because the text "failure" does not exist in the original log message.

The recommended approach is to extract the actual text from the message and map it using a lookup:

    ## props.conf
    [linux_secure]
    LOOKUP-action_for_sshd_failed_publickey = sshd_action_lookup vendor_action OUTPUTNEW action
    REPORT-vendor_action_for_sshd_failed_publickey = vendor_action_for_sshd_failed_publickey

    ## transforms.conf
    [sshd_action_lookup]
    filename = sshd_actions.csv

    [vendor_action_for_sshd_failed_publickey]
    REGEX = (Failed\s+publickey)
    FORMAT = vendor_action::$1

    ## sshd_actions.csv
    vendor_action,action
    "Failed publickey",failure

Because the event field is mapped through a lookup, a search for "action=failure" is translated to the vendor_action value "Failed publickey", which does exist in the log message, so Splunk finds the events.

Step 3: Create field extractions and aliases

After you identify the events within your data source and determine which dashboards the events correspond to within Enterprise Security, create the field extractions needed to normalize the data and match the fields required by the Common Information Model and the Enterprise Security dashboards and panels.

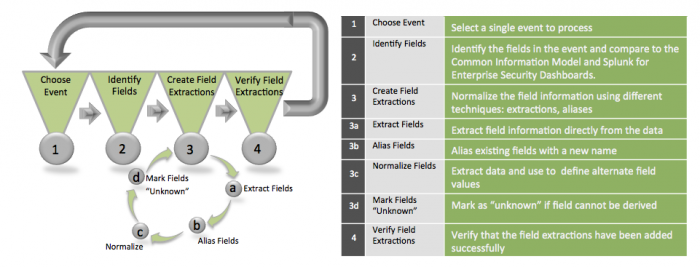

Use the following process to work through the creation of each required field, for each class of event that you have identified:

Note: This process can be done manually by editing configuration files or graphically by using the Interactive Field Extractor. See the Examples sections in this document for more detail on these options.

Choose event

In the Splunk Search app, start by selecting a single event or several almost identical events to work with. Start the Interactive Field Extractor and use it to identify fields for this event. Verify your conclusions by checking that similar events contain the same fields.

Identify fields

Each event contains relevant information that you will need to map into the Common Information Model. Start by identifying the fields of information that are present within the event. Then check the Common Information Model to see which fields are required by the dashboards where you want to use the event.

Note: Additional information about the Common Information Model can be found in the "Common Information Model" topic in the core Splunk product documentation.

Where possible, determine how the information within the event maps to the fields required by the Common Information Model (CIM). Some events will have fields that are not used by the dashboard. It may be necessary to create extractions for these fields. On the other hand, certain fields may be missing or have values other than those required by the CIM. Fields can be added or modified, or marked as unknown, in order to fulfill the dashboard requirements.

Note: In some cases, the events do not have enough information (or the right type of information) to be useful in the Splunk App for Enterprise Security. In other cases, the type of data brought into the Splunk App for Enterprise Security is not applicable to security (for example, weather information).

Create field extractions

After the relevant fields have been identified, create the Splunk field extractions that parse and/or normalize the information within the event. Splunk provides numerous ways to populate search-time field information. The specific technique will depend on the data source and the information available within that source. For each field required by the CIM, create a field property that will do one or more of the following:

- Parse the event and create the relevant field (using field extractions)

Example: In a custom application, "Error" at the start of a line means authentication error, so it can be extracted to an authentication field and tagged "action=failed".

- Rename an existing field so that it matches (field aliasing)

Example: The "key=value" extraction has produced "target=10.10.10.1" and "target" needs to be aliased to "dest".

- Convert an existing field to a field that matches the value expected by the Common Information Model (normalizing the field value)

Example: The "key=value" extraction has produced "action=stopped", and "stopped" needs to be changed to "blocked".

Extract fields

Review each field required by the Common Information Model and find the corresponding portion of the log message. Use the create field extraction statement to extract the field. See "When Splunk Enterprise extracts fields" in the core Splunk product documentation.

Note: Splunk can auto-extract certain fields at search time if they appear as "field"="value" in the data. Typically, the names of the auto-extracted fields will differ from the names required by the CIM. You can fix this problem by creating field aliases for those fields.
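An inline extraction in props.conf is one way to write such a statement. The sketch below reuses the sshd log message shown earlier in this topic; the class name after EXTRACT- is arbitrary, and the choice of user, src, and src_port as target field names is an assumption based on the CIM fields mentioned in this topic.

```ini
## props.conf (sketch)
[linux_secure]
# Inline search-time extraction with named capture groups
EXTRACT-user_src_for_sshd_failed_publickey = Failed\s+publickey\s+for\s+(?<user>\S+)\s+from\s+(?<src>\S+)\s+port\s+(?<src_port>\d+)
```

Applied to the sample message, this pattern would yield user=privateuser, src=10.11.36.5, and src_port=50244.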

Create field aliases

You might have to rename an existing field to populate another field. For example, the event data might include the source IP address in the field src_ip, while the CIM requires the source be placed in the "src" field. The solution is to create a field alias named "src" that contains the value from "src_ip". Define a field alias that creates a field with a name that corresponds with the name defined in the CIM.

See the "field aliases" topic in the core Splunk product documentation.
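In props.conf, the alias described above could be written as in this sketch. The source type and the class names after FIELDALIAS- are hypothetical.

```ini
## props.conf (sketch)
[example:firewall]
# Keep src_ip and also expose its value under the CIM name src
FIELDALIAS-src_for_example_firewall = src_ip AS src
# Same idea for a destination reported as "target"
FIELDALIAS-dest_for_example_firewall = target AS dest
```

An alias does not remove the original field, so existing searches on src_ip keep working.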

Normalize field values

Make sure that the value populated by the field extraction matches the field value requirements in the CIM. If the value does not match (for example, the value required by the CIM is success or failure but the log message uses succeeded and failed), then create a lookup to translate the field so that it matches the value defined in the CIM. See the core Splunk documentation topics "Setting up lookup fields" and "Tag and alias field values" to learn more about normalizing field values.
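For the succeeded/failed case, a translation lookup might be sketched as follows. The source type, lookup name, and CSV file name are hypothetical, and the sketch assumes the raw value has already been extracted into a field named vendor_action.

```ini
## props.conf
[example:auth]
LOOKUP-action_for_example_auth = example_action_lookup vendor_action OUTPUTNEW action

## transforms.conf
[example_action_lookup]
filename = example_actions.csv

## lookups/example_actions.csv
vendor_action,action
succeeded,success
failed,failure
```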

Verify field extractions

After you create field extractions for each of the security-relevant events to be processed, validate that the fields are extracted correctly. To confirm whether the data is extracted properly, search for the source type.

Perform a search

Run a search for the source type defined in the add-on to verify that each of the expected fields of information is defined and available on the field picker. If a field is missing or displays the wrong information, go back through these steps to troubleshoot the add-on and determine the problem.

Example: You create an add-on for Netscreen firewall logs by identifying the network-communication events, creating a source type of "netscreen:firewall", and defining the required field extractions.

To validate that the field extractions are correct, run the following search:

sourcetype="netscreen:firewall"

These events are network-traffic events. The "Dashboard Requirements Matrix" in the Installation and Configuration Manual shows that the data model requires the following fields: action, dvc, transport, src, dest, src_port, and dest_port.

Use the field picker to display these fields at the bottom of the events and then scan the events to see that each of these fields is populated correctly.

If a field has an incorrect value, change the field extraction to correct the value. If a field is empty, investigate whether the field should be populated.

Step 4: Create event types and tags

The Splunk App for Enterprise Security uses tags to categorize information and specify which dashboards an event belongs in. Once you have the fields, you can create properties that tag the fields according to the Common Information Model.

Note: Step 4 is required only for Centers and Searches. You can create correlation searches with data that is not tagged.

Identify necessary tags

To create the tags, determine the dashboards where you want to use the data and see which tags those dashboards require. If there are different classes of events in the data stream, tag each class separately.

To determine which tags to use, see the "Dashboard Requirements Matrix" in the Installation and Configuration Manual.

Create an event type

To tag the events, create an event type that characterizes the class of events to tag, then tag that event type. An event type is defined by a search that describes the events in question. Each event type must have a unique name. The event types are created and stored in the eventtypes.conf file.

To create an event type for the add-on, edit the default/eventtypes.conf file in the add-on directory. Give the event type a name that corresponds to the name and vendor of the data source, such as cisco:asa. When you create an event type, you create a field that can be tagged according to the CIM.

When you create the event type, create the tags (for example "authentication", "network", "communicate", and so on) that are used to group events into categories. To create the necessary tags, edit the tags.conf file in the default directory and enable the necessary tags on the event type field.
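The two files might look like the following sketch for the firewall traffic events discussed in this topic. The event type name is hypothetical, and the tags shown are the examples named above; check the "Dashboard Requirements Matrix" for the tags your dashboards actually require.

```ini
## default/eventtypes.conf
[example_firewall_traffic]
search = sourcetype="netscreen:firewall"

## default/tags.conf
[eventtype=example_firewall_traffic]
network = enabled
communicate = enabled
```

If the data contains more than one class of event, define one event type per class with a narrower search and tag each separately.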

Verify the tags

To verify that the data is being tagged correctly, display the event type tags and review the events. To do this, search for the source type you created and use the field discovery tool to display the field "tag::eventtype" at the bottom of each event. Then look at your events to verify that they are tagged correctly. If you created more than one event type, you also want to make sure that each event type is finding the events you intended.

Check Enterprise Security dashboards

After you create and verify the field extractions and tags, the data begins to appear in the corresponding dashboards. Open each dashboard that you want to populate and verify that the dashboard information appears correctly. If it does not, check the fields and tags that you created to identify and correct the problem.

Many of the searches within the Splunk App for Enterprise Security run on a periodic scheduled basis. You might have to wait a few minutes for the scheduled search to run before data is available within the respective dashboard.

Review the "Search view matrix" topic in the User Manual to see which searches need to run. Go to Settings > Searches to see whether those searches are scheduled to run soon.

Note: You cannot run searches directly from the Enterprise Security interface because of a known issue in the Splunk core permissions.

Step 5: Document and package the add-on

After you create your add-on and verify that it is working correctly, document and package the add-on, so that it is easy to deploy. Packaging your add-on correctly ensures that you can deploy and update multiple instances of the add-on.

Document the add-on

Edit or create the README file under the root directory of your add-on and add the information necessary to document what you did and to help others who might use the add-on.

Note: If you used the Interactive Field Extractor to build field extractions and tags, these are found under $SPLUNK_HOME/etc/users/$USER/.

The following is the suggested format for your README:

    ===Product Name Add-on===

    Author: The name of the person who created this add-on
    Version/Date: The version of the add-on or the date this version was created
    Supported product(s): The product(s) that the add-on supports, including which version(s) you know it supports
    Source type(s): The list of source types the add-on handles
    Input requirements: Any requirements for the configuration of the device that generates the IT data processed by the add-on

    ===Using this add-on===

    Configuration: Manual/Automatic
    Ports for automatic configuration: List of ports on which the add-on will automatically discover supported data (automatic configuration only)
    Scripted input setup: How to set up the scripted input(s) (if applicable)

Package the add-on

Prepare the add-on so that it can be deployed easily. Ensure that any modifications or upgrades will not overwrite files that need to be modified or created locally.

- Make sure that the archive does not include any other files under the add-on local directory. The local directory is for files that are specific to an individual installation or to the system where the add-on is installed.

- Add a .default extension to any files you might need to change on individual instances of Splunk running the add-on. If a lookup file does not have a .default extension, an upgrade overwrites the corresponding file. The .default extension makes it clear that the file is a default version of the file, and is used only if the file does not exist.

- Compress the add-on into a single file archive (such as a zip or tar.gz archive).

- To share the add-on, go to Splunkbase, choose "For Developers", and submit your app for upload.

Add a custom add-on

To add a custom add-on to an app, see the instructions in "Add a custom add-on to an app" in the Splunk App for Enterprise Security Installation and Configuration Manual.

Remove an app from app import

To remove or block an add-on from being automatically imported ("app import"), see "Remove an add-on from app import" in the Splunk App for Enterprise Security Installation and Configuration Manual for details.


This documentation applies to the following versions of Splunk® Enterprise Security: 3.2.1, 3.2.2, 3.3.0, 3.3.1, 3.3.2, 3.3.3
