About the ai command

The ai command is a new ML-SPL search command. This command lets you connect to externally hosted large language models (LLMs) from third-party providers including OpenAI, Gemini, Bedrock, Groq, and Ollama.

When you use the ai command, Splunk data is sent to your chosen LLM and the response is returned to the Splunk search pipeline.

There are security risks when using a commercial LLM and when sending data to an external service provider. Splunk is not providing the LLM. LLMs are external to Splunk and users are responsible for meeting any network security requirements.

The ai command brings Generative AI (GenAI) solutions to Splunk platform data. Using GenAI in your search pipeline can provide additional insights on your Splunk data and reduce time and costs to access those data insights.

You can use the ai command to answer the following kinds of questions:

- What does this notable event mean?

- Do these user agents look normal?

- Can you summarize these logs for me?

There are no guardrails for the response returned from the LLM.

To help you become familiar with the ai command, the MLTK Showcase includes the following three examples of the ai command in action:

| Showcase | Description |
|---|---|
| Field Extraction | Uses the ai command to generate regex for extracting fields from Splunk internal log messages. |
| Summarization | Uses the ai command to summarize internal Splunk error messages. |
| Anomaly Detection | Uses the ai command to detect anomalies in time series metrics. In this case, counts of log events in the internal Splunk index. |

Features of the ai command

The ai command provides the following key features:

- Apply any supported LLM to any data, on any Splunk dashboard, or directly within any SPL search.

- Run LLM inference on a single field, multiple fields, raw events, multiple events, or any custom dataset.

The ai command does not inspect the input to the LLM. Use discretion to determine if the data you send to the LLM is suitable and appropriate.

Roles and permissions

A new role and capabilities are included with the addition of the ai command to MLTK.

When you install or upgrade to MLTK version 5.6.0 or higher, a new mltk admin role is added. The mltk admin role has the following 3 capabilities:

- apply_ai_commander_command

- edit_ai_commander_config

- list_ai_commander_config

Permissions for the ai command are based on the apply_ai_commander_command capability. Users must have the apply_ai_commander_command capability to execute the ai command.

Users who have both the list_ai_commander_config and edit_ai_commander_config capabilities can view and add provider details, as well as modify tokens and model information. They have full access to all editable fields under the Connection Management tab.

Users with only the list_ai_commander_config capability can view configured providers and models but cannot view API tokens.
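The capability rules above can be summarized as a small lookup. The following Python sketch is an illustration only and is not part of MLTK: the capability names come from this page, while the action labels and the function name are hypothetical. The page does not describe a user who holds only the edit capability, so the sketch grants no configuration actions in that case.

```python
# Capability names as documented on this page.
APPLY = "apply_ai_commander_command"
EDIT = "edit_ai_commander_config"
LIST = "list_ai_commander_config"


def allowed_actions(capabilities):
    """Map a user's capabilities to the actions described on this page.

    The action labels are hypothetical names used only for illustration.
    """
    caps = set(capabilities)
    actions = set()
    if APPLY in caps:
        actions.add("run_ai_command")
    if LIST in caps:
        # List-only users can see providers and models, but not API tokens.
        actions.add("view_providers_and_models")
    if LIST in caps and EDIT in caps:
        actions.update({"add_provider", "modify_tokens", "modify_model_information"})
    return actions


if __name__ == "__main__":
    print(sorted(allowed_actions([APPLY, LIST])))
```

A user who can run searches with the ai command but should not manage connections would hold only apply_ai_commander_command, optionally with list_ai_commander_config.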

Parameters for the ai command

See the following table for the parameters you can use with the ai command:

If you have configured a default LLM service and model then you do not need to set the provider or model parameters.

| Parameter | Description |
|---|---|
| prompt | Defines the data that is sent to the LLM for processing. |
| provider | Set the LLM service provider you want to use, such as OpenAI or Groq. |
| model | Set the model you want to use. For example, gpt-4o-mini or llama3-8b-8192. |

Connection Management page

The Connection Management page serves as the central hub for configuring and managing the integration of LLM providers within MLTK.

By using the LLM connection feature you acknowledge and agree that data from searches using the ai command is processed by an external LLM service provider.

Supported LLM providers

You can use the ai command with the following LLM providers:

- Ollama

- OpenAI

- AzureOpenAI

- Anthropic

- Gemini

- Bedrock

- Groq

You can use the provider= or model= parameters in your ai command search to switch between these providers.

Configuration steps

Complete the following steps to configure the Connection Management page with the LLM service of your choosing:

- Open MLTK.

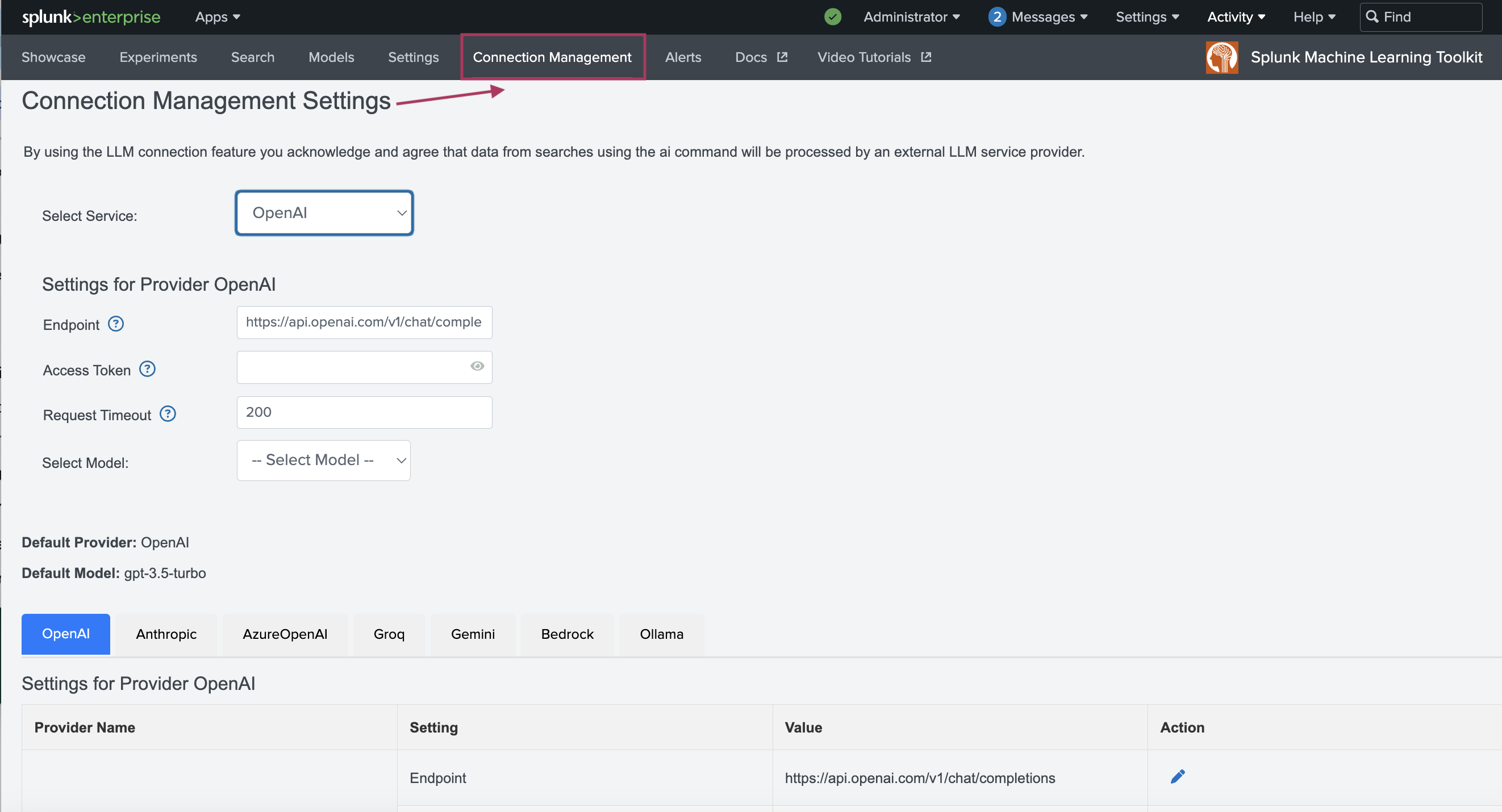

- Navigate to the Connection Management tab as shown in the following image:

- Choose your preferred LLM provider from the Select Service drop-down menu.

- Input the Settings for the chosen LLM including the Access Token and Request Timeout. The settings fields will change depending on the LLM service provider selected.

The API endpoint URL in the Endpoint field will be populated for you.

- Select the model you want to use and configure the selected model settings as follows:

- Response Variability: Controls how much responses to identical prompts differ from each other. The higher the value, the more variability. Use only values between 0 and 1.

- Maximum Result Rows: Controls how many records can be sent to the LLM from Splunk. Each record sent from Splunk will be processed as a separate prompt. The higher this number is, the more data will be sent for inference.

Use caution if your chosen LLM service is charged on a per prompt basis.

- Max Tokens: Controls how many tokens can be generated by a single Splunk search.

Use caution if your chosen LLM service is charged on a per prompt basis.

- (Optional) Select the checkbox to set the chosen service as the default.

- When the connection has been configured, you can use the LLM with the ai command.

The ai command does not inspect the input to the LLM. Use discretion to determine if the data you send to the LLM is suitable and appropriate.
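Because each record is processed as a separate prompt, the model settings in the steps above determine how many requests a search can generate. The following Python sketch is an illustration only, not MLTK code: the function names are hypothetical, and it assumes that rows beyond the Maximum Result Rows setting are not sent to the LLM.

```python
def check_response_variability(value):
    """Return the value unchanged if it is within the documented 0 to 1 range."""
    if not 0 <= value <= 1:
        raise ValueError("Response Variability must be between 0 and 1")
    return value


def estimate_prompt_count(result_rows, max_result_rows):
    """Estimate how many prompts one search sends to the LLM.

    Assumption: rows beyond the Maximum Result Rows setting are not sent,
    and every remaining row becomes exactly one prompt.
    """
    if result_rows < 0 or max_result_rows < 0:
        raise ValueError("row counts cannot be negative")
    return min(result_rows, max_result_rows)


if __name__ == "__main__":
    # A search that returns 250 rows with Maximum Result Rows set to 100.
    print(estimate_prompt_count(250, 100))
    print(check_response_variability(0.2))
```

An estimate like this can help when a provider bills per prompt: lowering Maximum Result Rows or adding a head command to the search reduces the number of prompts sent.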

Example dataset

The prompt pattern examples in the next section show how to leverage the ai command in different scenarios. The examples use the http_error_dataset.csv dataset.

About the http_error_dataset.csv

The http_error_dataset.csv dataset includes simulated HTTP error events with detailed causes and recovery suggestions.

The dataset includes the following information:

| Column name | Description |
|---|---|
| Error_Code | HTTP status code, such as 500 or 404. |
| Error_Message | Human-readable error description. |
| Root_Cause | Technical reason or analysis for the error. |
| Recovery_Steps | Recommended actions for mitigation or resolution. |

The http_error_dataset.csv dataset does not ship with MLTK.

You can view the contents of the http_error_dataset.csv dataset as follows, or use the following link to download the file in Excel (XLS) format: http_error_dataset.xls

| Request_ID | HTTP_Code | Endpoint | Error_Message | Root_Cause | Classification |
|---|---|---|---|---|---|
| REQ-001 | 500 | /api/user/login | The server encountered an unexpected condition which prevented it from fulfilling the request. | The application server failed to establish a connection with the user database due to a timeout. | Critical |
| REQ-002 | 404 | /api/products/123 | The requested product resource could not be found on the server. | The product ID provided in the request URL does not match any existing records in the product catalog. | Non-Critical |
| REQ-003 | 403 | /admin/dashboard | Access to the requested admin dashboard is forbidden. | The user tried to access an administrative panel without sufficient permissions or valid authorization token. | Critical |
| REQ-004 | 408 | /api/upload | The server timed out waiting for the request. | The client-side upload process took too long, causing the server to abandon the connection. | Non-Critical |
| REQ-005 | 502 | /api/payment | The server received an invalid response from the upstream server while acting as a gateway. | The payment service backend returned an unexpected error or failed to respond during the transaction process. | Critical |
| REQ-006 | 400 | /api/register | The server could not understand the request due to invalid syntax. | The JSON payload sent by the client contained syntax errors or missing required fields. | Non-Critical |
| REQ-007 | 503 | /api/report | The server is currently unavailable due to overload or maintenance. | A spike in traffic or scheduled maintenance rendered the reporting service temporarily unreachable. | Critical |
| REQ-008 | 401 | /api/user/settings | Authentication is required and has failed or has not yet been provided. | The user attempted to access personal settings without including a valid authentication token in the request header. | Critical |
| REQ-009 | 422 | /api/order | The server understands the content type and syntax, but was unable to process the instructions. | One or more required fields in the order request failed validation checks due to incorrect format or missing data. | Non-Critical |
| REQ-010 | 504 | /api/checkout | The server acting as a gateway timed out while waiting for a response from an upstream server. | The third-party API responsible for processing the final checkout did not respond in time, causing a timeout. | Critical |

Prompt patterns

You can save time and reduce effort when using the ai command with prompt patterns. See the following prompt pattern examples which use the dataset described in the previous section:

Row-by-row prompting

Executes the ai command on each record individually. This prompt pattern is suitable for micro-analysis and for providing detailed responses.

The following example uses HTTP errors. For each HTTP error row, the ai command provides resolution advice based on the root cause:

| inputlookup http_error_dataset.csv

| head 10

| ai prompt="HTTP Error {Error_Code} occurred with message: '{Error_Message}'. Root cause: {Root_Cause}. What specific steps can we take to resolve this? Provide a precise but informative answer." provider=OpenAI model=gpt-4
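The search above relies on {field} placeholders being filled in once per row. The following Python sketch illustrates that idea outside of Splunk. It is not the ai command's implementation: the function name is hypothetical, and leaving an unknown placeholder untouched is an assumption, because this page does not say how missing fields are handled.

```python
import re


def render_prompt(template, row):
    """Replace each {Field} placeholder with the matching value from one row."""
    def substitute(match):
        field = match.group(1)
        # Assumption: a placeholder with no matching field is left as-is.
        return str(row[field]) if field in row else match.group(0)

    return re.sub(r"\{(\w+)\}", substitute, template)


if __name__ == "__main__":
    # Field names follow the prompt example above; values are sample rows.
    rows = [
        {"Error_Code": "404", "Error_Message": "The requested product resource could not be found on the server."},
        {"Error_Code": "408", "Error_Message": "The server timed out waiting for the request."},
    ]
    template = "HTTP Error {Error_Code} occurred with message: '{Error_Message}'."
    # One prompt per row, which is what makes this pattern row-by-row.
    for row in rows:
        print(render_prompt(template, row))
```

Each row yields its own prompt, so ten rows mean ten separate requests to the provider.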

Multi-chain prompting

Chains multiple prompts together. The second prompt uses the result of the first prompt, for example ai_result_1. This prompt pattern is suitable for deeper insights or a step-by-step investigation.

The following example uses HTTP errors. The first prompt requests an impact analysis, and the second prompt requests operational countermeasures:

| inputlookup http_error_dataset.csv

| search Error_Code="500"

| head 5

| ai prompt="The server responded with {Error_Code} and message: '{Error_Message}'. Root cause: {Root_Cause}. What could be the broader operational impact?" provider=Anthropic model=claude-3-sonnet

| ai prompt="Given the operational impact: {ai_result_1}, suggest proactive monitoring and recovery measures." provider=Gemini model=gemini-1.5-pro
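The chaining in the search above can be pictured as two template substitutions, where the first response is written back to the row as ai_result_1 before the second prompt is built. The following Python sketch illustrates this flow with a stub in place of a real provider. The function names and the stub are hypothetical, and no real LLM is called.

```python
import re


def render_prompt(template, row):
    """Fill {Field} placeholders from a row; unknown placeholders are left as-is."""
    return re.sub(r"\{(\w+)\}", lambda m: str(row.get(m.group(1), m.group(0))), template)


def stub_llm(prompt):
    """Hypothetical stand-in for a provider call; it only wraps the prompt."""
    return "RESPONSE(" + prompt + ")"


def run_chain(row, first_template, second_template, llm=stub_llm):
    """Run two prompts in sequence, feeding the first result into the second."""
    enriched = dict(row)  # Copy so the caller's row is not modified.
    enriched["ai_result_1"] = llm(render_prompt(first_template, enriched))
    second_prompt = render_prompt(second_template, enriched)
    return enriched, second_prompt, llm(second_prompt)


if __name__ == "__main__":
    row = {"Error_Code": "500"}
    enriched, second_prompt, final = run_chain(
        row,
        "The server responded with {Error_Code}. What is the operational impact?",
        "Given the operational impact: {ai_result_1}, suggest recovery measures.",
    )
    print(second_prompt)
    print(final)
```

Because the second prompt embeds the full first response, chained prompts grow in size, which matters for providers that bill by token.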

All data at once (summarization)

Aggregates the dataset and sends a collective prompt for summarization or holistic analysis.

The following example uses HTTP errors. Summarization is suitable for understanding overall trends and frequent errors in system logs:

| inputlookup http_error_dataset.csv

| stats values(*) as *

| ai prompt="Analyze the common root causes among the HTTP errors provided. The list includes error codes {Error_Code}, messages {Error_Message}, and root causes {Root_Cause}. Provide a consolidated summary of recurring issues and mitigation strategies." provider=AzureOpenAI model=gpt-35-turbo
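In the search above, stats values(*) as * collapses all rows into a single result that holds the distinct values of each field, so the ai command receives one prompt instead of one per row. The following Python sketch imitates that aggregation step. The function names are hypothetical, Python's string sort stands in for the lexicographical order used by values(), and joining the values with separators is an assumption about how multivalue fields appear in the prompt.

```python
def stats_values(rows):
    """Imitate `stats values(*) as *`: distinct values per field in one result."""
    collected = {}
    for row in rows:
        for field, value in row.items():
            collected.setdefault(field, set()).add(str(value))
    # Sorted distinct values approximate the ordering produced by values().
    return {field: sorted(values) for field, values in collected.items()}


def build_summary_prompt(aggregated):
    """Build a single prompt from the aggregated result.

    Assumption: multivalue fields are joined with separators; the ai command
    may render them differently.
    """
    codes = ", ".join(aggregated.get("Error_Code", []))
    causes = "; ".join(aggregated.get("Root_Cause", []))
    return f"Error codes: {codes}. Root causes: {causes}. Summarize recurring issues."


if __name__ == "__main__":
    rows = [
        {"Error_Code": "500", "Root_Cause": "database timeout"},
        {"Error_Code": "404", "Root_Cause": "missing record"},
        {"Error_Code": "500", "Root_Cause": "database timeout"},
    ]
    print(build_summary_prompt(stats_values(rows)))
```

Note that values() removes duplicates, so the aggregated prompt no longer shows how often each error occurred.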

This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 5.6.1
