An overview of the Splunk SOAR (On-premises) clustering feature

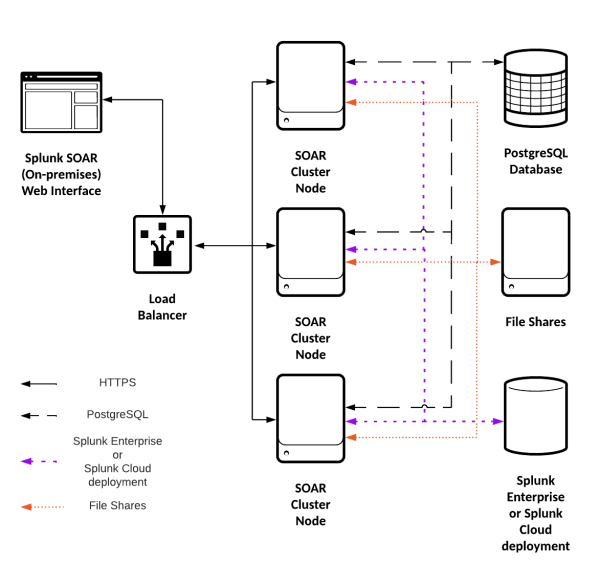

A cluster consists of a minimum of three instances of Splunk SOAR (On-premises) and its supporting external services: file shares, a PostgreSQL database or database cluster, a Splunk Enterprise or Splunk Cloud deployment, and a load balancer.

Splunk SOAR (On-premises) clusters provide horizontal scaling and redundancy. Larger clusters provide the capacity to handle larger workloads than a single Splunk SOAR (On-premises) instance, and provide benefits in terms of redundancy and reduced downtime for upgrades or other maintenance.

Splunk SOAR (On-premises) does not have the ability to automatically scale, or automatically add or remove cluster nodes through external systems such as Kubernetes, AWS, or Azure.

Elements of a Splunk SOAR (On-premises) cluster

The primary elements of a Splunk SOAR (On-premises) cluster are:

- a load balancer, such as HAProxy or Elastic Load Balancer

- three or more Splunk SOAR (On-premises) nodes

- a PostgreSQL database

- file shares

- either a Splunk Enterprise or Splunk Cloud deployment

The Splunk SOAR Automation Broker is not supported in a Splunk SOAR (On-premises) cluster.

A Splunk SOAR (On-premises) cluster also uses two other key technologies, Consul and RabbitMQ, which are described in the sections that follow.

This diagram shows an example of a cluster, with the connections marked.

The role of Consul

Splunk SOAR (On-premises) clusters use Consul to elect a cluster leader and manage distributed locks for the system. The first three Consul nodes in a Splunk SOAR cluster are servers. Any additional nodes are Consul client nodes. Consul uses a quorum of server nodes to elect a cluster leader. If a majority of the Consul server nodes are unavailable or unreachable, no cluster leader can be elected.
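The quorum rule above can be expressed as simple arithmetic. The following is a minimal sketch, assuming that a quorum is a simple majority of the Consul server nodes. The function names `quorum_size` and `has_quorum` are hypothetical helpers and are not part of Splunk SOAR or Consul.

```shell
# Number of Consul server nodes that must be reachable to elect a leader.
# Assumption: a quorum is a simple majority, floor(servers / 2) + 1.
# $1 = total number of Consul server nodes
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Succeeds (exit status 0) when enough servers are reachable to form a quorum.
# $1 = total number of Consul server nodes
# $2 = number of reachable Consul server nodes
has_quorum() {
  [ "$2" -ge "$(quorum_size "$1")" ]
}
```

With the three server nodes described above, `quorum_size 3` prints 2, so the cluster can lose one server node and still elect a leader, but not two.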

The Splunk SOAR (On-premises) daemons ActionD and ClusterD depend on Consul electing a cluster leader.

ClusterD relies on Consul's leader elections to set the leader for the Splunk SOAR (On-premises) cluster in the PostgreSQL database. ClusterD also routes all RabbitMQ messages from other nodes to the appropriate processes within its cluster node. If no leader has been or can be elected, no messages are routed to cluster nodes, effectively stopping all automation.

ActionD is responsible for managing action concurrency for each asset and triggering action runs across the cluster, which is accomplished using Consul's distributed locks. If no messages or actions can be processed, all automation is effectively stopped.

You can verify that a problem with actions processing or automation is related to cluster leadership by checking these things:

- The System Health page, which shows the ActionD and ClusterD services as stopped on all cluster nodes.

- The Consul logs at <$PHANTOM_HOME>/var/log/phantom/consul-stdout.log.
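As a rough aid for the log review, the sketch below counts log lines that mention a missing leader. It assumes that Consul writes the phrase "No cluster leader" when an election cannot complete. The exact wording can vary between Consul versions, so treat the search string as an assumption. The function name `count_no_leader_lines` is hypothetical.

```shell
# Count log lines that mention a missing cluster leader.
# $1 = path to the Consul log, for example
#      <$PHANTOM_HOME>/var/log/phantom/consul-stdout.log
#      (replace <$PHANTOM_HOME> with your installation directory)
# Assumption: failed elections are logged with the phrase "No cluster leader".
count_no_leader_lines() {
  grep -c -i 'no cluster leader' "$1"
}
```

A count that keeps growing while ActionD and ClusterD are stopped suggests that the problem is related to cluster leadership.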

Recovering a Consul cluster that is unable to elect a leader

When recovering a Consul cluster, you will need to identify Consul server nodes. You can determine whether a Consul node is a server or a client by using a phenv command.

On any cluster node, run this command:

phenv cluster_management --status

The first stanza of the output will contain either Server: True or Server: False for each node in the ClusterNode table.

Example output:

[phantom@localhost phantom]$ phenv cluster_management --status

Splunk SOAR Cluster State:

ClusterNodes found in the database:

ID: f62911b-3613-4662-b439-b67711a13927

Name: 10.1.10.1

Server: True

Status: Enabled=True Online=True

ID: e8388de0-fc65-4980-a006-57bae5d8a236

Name: 10.1.10.2

Server: True

Status: Enabled=True Online=True

ID: 0c27dd73-7318-473b-b10a-f0d960d8ffa9

Name: 10.1.10.3

Server: True

Status: Enabled=True Online=True

ID: f181c689-3833-4335-b655-d61f95919291

Name: 10.1.10.4

Server: False

Status: Enabled=True Online=True

ID: 07cff7d7-0aa3-4906-87b0-c85c7c83f808

Name: 10.1.10.5

Server: False

Status: Enabled=True Online=True

...

The output in this example is truncated.
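To pick out the server nodes from a long listing, you could filter the status output. This is a minimal sketch that assumes the output keeps the field layout shown in the example above, with a Name: line before each Server: line. The function name `list_consul_servers` is hypothetical.

```shell
# Read cluster status text on standard input and print the name of
# every node whose entry contains "Server: True".
# Assumption: each node's "Name:" line appears before its "Server:" line,
# as in the example output.
list_consul_servers() {
  awk '
    $1 == "Name:"                   { name = $2 }
    $1 == "Server:" && $2 == "True" { print name }
  '
}

# Example usage on a cluster node:
#   phenv cluster_management --status | list_consul_servers
```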

Recover the Consul cluster by doing the following steps:

- Using SSH, on every cluster node, stop Consul and clear Consul's raft database.

phenv phantom_supervisord ctl stop consul

rm -r <$PHANTOM_HOME>/local_data/consul/*

- Choose a cluster node defined as a Consul server node to be your bootstrap server node.

  - In a three-node Splunk SOAR (On-premises) cluster, all nodes are server nodes.

  - (Conditional) If your Splunk SOAR (On-premises) cluster is larger than three nodes, check <$PHANTOM_HOME>/etc/consul/config.json to identify a cluster node that Consul has configured as a server node.

- On your bootstrap server node, create a bootstrap file. This file will set and keep this node as the Consul cluster leader when Consul is restarted.

echo '{"bootstrap": true}' > <$PHANTOM_HOME>/etc/consul/bootstrap.json

- Using SSH, on the server node with the bootstrap file, start Consul.

phenv phantom_supervisord ctl start consul

- Using SSH, on the remaining two server nodes, start Consul.

phenv phantom_supervisord ctl start consul

- Using SSH, on any remaining nodes, start Consul.

phenv phantom_supervisord ctl start consul

- After all Consul nodes are back online, use SSH to connect to your bootstrap server node, then delete the bootstrap file. This allows Consul to hold elections as normal.

rm <$PHANTOM_HOME>/etc/consul/bootstrap.json
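Because the order of these steps matters, it can help to print a per-node checklist before you start. The sketch below only prints the commands from the steps above and does not run anything. The function name `print_recovery_plan` and its arguments are hypothetical, and `<$PHANTOM_HOME>` is printed literally as a placeholder for your installation directory.

```shell
# Print, without executing, the recovery commands in the order described above.
# $1 = bootstrap server node
# $2 = space-separated list of the other Consul server nodes
# $3 = space-separated list of Consul client nodes (may be empty)
print_recovery_plan() {
  bootstrap=$1
  servers=$2
  clients=$3
  home='<$PHANTOM_HOME>'   # literal placeholder, not expanded

  # Stop Consul and clear its Raft database on every node.
  for node in $bootstrap $servers $clients; do
    printf '[%s] %s\n' "$node" "phenv phantom_supervisord ctl stop consul"
    printf '[%s] %s\n' "$node" "rm -r $home/local_data/consul/*"
  done

  # Create the bootstrap file, then start the bootstrap node first.
  printf '[%s] %s\n' "$bootstrap" "echo '{\"bootstrap\": true}' > $home/etc/consul/bootstrap.json"
  printf '[%s] %s\n' "$bootstrap" "phenv phantom_supervisord ctl start consul"

  # Start the remaining server nodes, then any client nodes.
  for node in $servers $clients; do
    printf '[%s] %s\n' "$node" "phenv phantom_supervisord ctl start consul"
  done

  # Remove the bootstrap file so that normal elections resume.
  printf '[%s] %s\n' "$bootstrap" "rm $home/etc/consul/bootstrap.json"
}

# Example usage:
#   print_recovery_plan 10.1.10.1 "10.1.10.2 10.1.10.3" "10.1.10.4 10.1.10.5"
```

Printing the plan rather than running it over SSH keeps the sketch free of assumptions about how you connect to each node.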

The role of RabbitMQ

Splunk SOAR (On-premises) clusters use a RabbitMQ cluster to send messages between nodes.

Network failures between cluster nodes can result in network partitions (sometimes called split-brain). By default, RabbitMQ requires manual intervention to recover from a network partition. While the RabbitMQ cluster is partitioned, messages sent between Splunk SOAR (On-premises) cluster nodes can be lost in undefined ways, which can disrupt automation and ingestion.

Splunk SOAR (On-premises) releases 6.1.0 and higher set RabbitMQ's built-in cluster_partition_handling strategy to autoheal by default.
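If you want to confirm the setting on a node, a check along the following lines might help. This is a sketch under two assumptions: that you know the path to the RabbitMQ configuration file on your node, which this topic does not state, and that the file uses the key = value format of rabbitmq.conf rather than the older Erlang-term format. The function name `uses_autoheal` is hypothetical.

```shell
# Succeeds (exit status 0) if the given file sets
# cluster_partition_handling to autoheal.
# $1 = path to a rabbitmq.conf-style file (the location is an assumption;
#      supply the path used by your installation)
uses_autoheal() {
  grep -Eq '^[[:space:]]*cluster_partition_handling[[:space:]]*=[[:space:]]*autoheal[[:space:]]*$' "$1"
}

# Example usage:
#   uses_autoheal /path/to/rabbitmq.conf && echo "autoheal is configured"
```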

RabbitMQ nodes operate as either RAM nodes or DISC nodes. DISC nodes persist more state information to disk than RAM nodes. In Splunk SOAR (On-premises) 6.1.0 and later releases, all RabbitMQ nodes are set to DISC.

Set RabbitMQ nodes to disc mode

RabbitMQ nodes in Splunk SOAR (On-premises) releases earlier than 6.1.0 are a mix of RAM and DISC nodes.

- The first three Splunk SOAR (On-premises) cluster nodes have RabbitMQ nodes in DISC mode.

- Any additional Splunk SOAR (On-premises) cluster nodes have RabbitMQ nodes in RAM mode.

Example:

In a five-node Splunk SOAR (On-premises) cluster, three RabbitMQ nodes are in DISC mode and two RabbitMQ nodes are in RAM mode.

Improve reliability and recoverability for your Splunk SOAR (On-premises) cluster by setting all RabbitMQ nodes to disc mode.

On each cluster node, do the following steps:

- Using SSH, connect to a Splunk SOAR (On-premises) cluster node as the user account that runs Splunk SOAR.

- Run the following commands:

phenv rabbitmqctl stop_app

phenv rabbitmqctl change_cluster_node_type disc

phenv rabbitmqctl start_app
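The three commands above can be wrapped in a small function so that the RabbitMQ application is restarted even if the node type change fails. This is a minimal sketch, not an official procedure. The function name `set_disc_mode` and the `RABBITMQCTL` override variable are hypothetical, and the override exists only so that the sequence can be rehearsed without touching RabbitMQ.

```shell
# Switch the local RabbitMQ node to disc mode.
# RABBITMQCTL may be set to override the command prefix, for example
# RABBITMQCTL=echo to print the sequence instead of running it.
set_disc_mode() {
  ctl="${RABBITMQCTL:-phenv rabbitmqctl}"

  # Stop the RabbitMQ application; give up if that fails.
  $ctl stop_app || return 1

  # Change the node type and remember whether it worked.
  $ctl change_cluster_node_type disc
  status=$?

  # Always try to start the application again.
  $ctl start_app || return 1

  return "$status"
}
```

Run the function on one cluster node at a time, as the user account that runs Splunk SOAR.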

See also

| Use Python scripts and the REST API to manage your deployment | How to restart your Splunk SOAR (On-premises) cluster |

This documentation applies to the following versions of Splunk® SOAR (On-premises): 5.4.0, 5.5.0, 6.0.0, 6.0.1, 6.0.2
