Resolve data quality issues

You can troubleshoot the following event processing and data quality issues when you get data into the Splunk platform:

- Incorrect line breaking

- Incorrect event breaking

- Incorrect timestamp extraction

Line breaking issues

The following symptoms indicate that there might be issues with line breaking:

- You have fewer events than you expect and the events are very large, especially if your events are single-line events.

- The Monitoring Console Data Quality dashboard displays issues with line breaking.

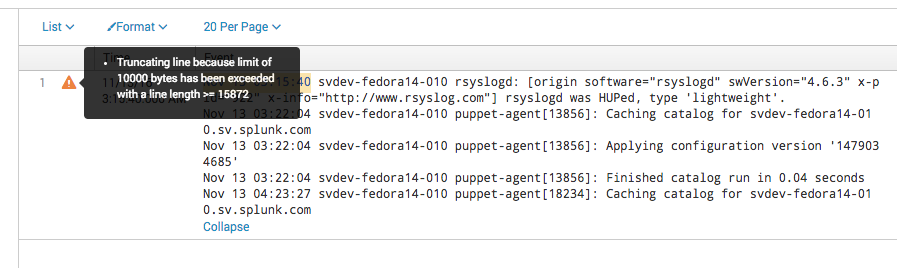

- You might see the following error message in the Splunk Web Data Input workflow or in the splunkd.log file: "Truncating line because limit of 10000 bytes has been exceeded".

Diagnosis

To confirm that the Splunk platform has line breaking issues, do one or more of the following troubleshooting steps:

- Visit the Monitoring Console Data Quality dashboard. Check the dashboard table for line breaking issues. See About the Monitoring Console in Monitoring Splunk Enterprise.

- Look in the splunkd.log file for messages such as "Truncating line because limit of 10000 bytes has been exceeded".

- Search for events. Multiple combined events, or a single event broken into many, indicates a line breaking issue.

Solution

To resolve line breaking issues, complete these steps in Splunk Web:

- Click Settings > Add Data.

- Click Upload to test by uploading a file or Monitor to redo the monitor input.

- Select a file with a sample of your data.

- Click Next.

- On the Set Source Type page, work with the options on the left panel until your sample data is correctly broken into events. To configure LINE_BREAKER or TRUNCATE, click Advanced.

- Complete the data input workflow or record the correct settings and use them to correct your existing input configurations.

While you work with the options on the Set Source Type page, you might find that the LINE_BREAKER setting is not properly set. The LINE_BREAKER value must be a regular expression that contains a capturing group, and the expression must match text that occurs in your data.

For example, you might have a value of LINE_BREAKER that never matches your data. Look for the message "Truncating line because limit of 10000 bytes has been exceeded" in the splunkd.log file or in Splunk Web.

If you find such a message, do the following:

- Confirm that LINE_BREAKER is properly configured to segment your data into lines as you expect.

- Confirm that the string you specify in the LINE_BREAKER setting exists in your data.

- If LINE_BREAKER is configured correctly but you have very long lines, or if you are using LINE_BREAKER as the only method to define events, bypassing line merging later in the indexing pipeline, confirm that the TRUNCATE setting is large enough to contain the entire data fragment delimited by LINE_BREAKER.

The default value for TRUNCATE is 10,000. If your events are larger than the TRUNCATE value, you might want to increase the value of TRUNCATE. For performance and memory usage reasons, do not set TRUNCATE to unlimited.

If you do not specify a capturing group, LINE_BREAKER is ignored.
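The checks above can be rehearsed offline before you change any configuration. The following is a minimal sketch, not part of the Splunk platform: the function name `check_line_breaker` and its return keys are hypothetical, and it uses Python's `re` module, whose syntax overlaps with but is not identical to the PCRE syntax that the Splunk platform uses. It assumes, as documented for LINE_BREAKER in props.conf, that the text matched by the first capturing group marks the boundary between lines and is discarded.

```python
import re


def check_line_breaker(pattern: str, sample: str, truncate: int = 10000) -> dict:
    """Report whether a candidate LINE_BREAKER regex looks usable on sample data."""
    regex = re.compile(pattern)
    has_group = regex.groups >= 1  # without a capturing group the setting is ignored
    matches = list(regex.finditer(sample)) if has_group else []

    # Split the sample at each match: the text before the first capturing
    # group ends one line, and the text after it starts the next line.
    segments, start = [], 0
    for m in matches:
        if m.start(1) < 0:  # the group did not take part in this match
            continue
        segments.append(sample[start:m.start(1)])
        start = m.end(1)
    segments.append(sample[start:])
    segments = [s for s in segments if s]  # ignore empty fragments

    longest = max((len(s.encode("utf-8")) for s in segments), default=0)
    return {
        "has_capturing_group": has_group,
        "match_count": len(matches),
        "longest_segment_bytes": longest,
        "fits_truncate": longest <= truncate,
    }


# Illustrative sample data, not taken from a real input.
report = check_line_breaker(r"([\r\n]+)", "a=1\nb=2\nc=3")
print(report)
```

A `match_count` of 0 suggests that the pattern does not occur in the data, and `fits_truncate` set to False suggests that the longest fragment would be cut off at the current TRUNCATE value.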

For more information, see Configure event line breaking.

Event breaking or aggregation issues

Event breaking issues can pertain to the BREAK_ONLY_BEFORE_DATE and MAX_EVENTS settings and any props.conf configuration file setting with the keyword BREAK.

You might have aggregation issues if you see the following indicators:

- Aggregation issues appear in the Monitoring Console Data Quality dashboard.

- An error occurs in the Splunk Web Data Input workflow.

- You have fewer events than you expect and the events are very large, especially if your events are single-line events.

Diagnosis

To confirm that the Splunk platform has event breaking issues, do one or more of the following troubleshooting steps:

- View the Monitoring Console Data Quality dashboard.

- Search for events that are multiple events combined into one.

- Check splunkd.log for messages such as the following:

12-07-2016 09:32:32.876 -0500 WARN AggregatorMiningProcessor - Breaking event because limit of 256 has been exceeded

12-07-2016 09:32:32.876 -0500 WARN AggregatorMiningProcessor - Changing breaking behavior for event stream because MAX_EVENTS (256) was exceeded without a single event break. Will set BREAK_ONLY_BEFORE_DATE to False, and unset any MUST_NOT_BREAK_BEFORE or MUST_NOT_BREAK_AFTER rules. Typically this will amount to treating this data as single-line only.

Solution

For line and event breaking, determine whether this issue occurs for one of the following reasons:

- Your events are properly recognized but are too large for the limits in place. The MAX_EVENTS setting defines the maximum number of lines in an event.

- Your events are not properly recognized.

If your events are larger than the limit set in MAX_EVENTS, you can increase the limit. Be aware that large events are not optimal for indexing performance, search performance, and resource usage, and that they can be costly to search. With the default values, a single event can already reach 10,000 bytes per line, as defined by TRUNCATE, times 256 lines, as set by MAX_EVENTS, which is a very large event.
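The product of the two limits can be worked out directly. This is a minimal sketch that assumes the values quoted above, 10000 for TRUNCATE and 256 for MAX_EVENTS. The function name is hypothetical, and the result is an approximate upper bound that ignores the newline bytes between merged lines.

```python
def max_event_bytes(truncate: int = 10000, max_events: int = 256) -> int:
    """Approximate upper bound on event size: bytes per line times lines per event."""
    return truncate * max_events


print(f"{max_event_bytes():,} bytes")  # 2,560,000 bytes
```

Raising either limit scales this bound linearly, which is why the text recommends fixing event recognition before increasing limits.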

More likely, your events are not properly recognized, which means that the Splunk platform is not breaking events where you expect. Check the following:

- Your event breaking strategy. The default strategy breaks before the date, so if the Splunk platform does not extract a timestamp, it does not break the event. To diagnose and resolve this issue, investigate timestamp extraction. See How timestamp assignment works.

- Your event breaking regular expression.
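To see why a missing timestamp leads to oversized events, it can help to model the default strategy. The following is a deliberately simplified sketch and not the Splunk platform's implementation. The function `merge_lines` is hypothetical, and the date check is an assumption that recognizes only lines that start with MM-DD-YYYY, as in the splunkd.log samples above. It also applies the line limit as a plain cap, whereas the warning message above shows that the Splunk platform changes its breaking behavior once the limit is exceeded.

```python
import re

# Assumption for illustration: a line "has a date" only if it starts with
# MM-DD-YYYY. Real timestamp recognition is far more general.
DATE_AT_START = re.compile(r"^\d{2}-\d{2}-\d{4}\b")


def merge_lines(lines, max_events=256):
    """Group lines into events, breaking only before a line that starts with a date."""
    events, current = [], []
    for line in lines:
        starts_event = bool(DATE_AT_START.match(line))
        # Close the current event when a new date appears or the line cap is hit.
        if current and (starts_event or len(current) >= max_events):
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


dated = ["12-07-2016 09:32:32 start", "  continuation", "12-07-2016 09:32:33 next"]
undated = ["line one", "line two", "line three"]
print(len(merge_lines(dated)))    # 2
print(len(merge_lines(undated)))  # 1
```

In this model, the undated lines never trigger a break, so they collapse into one event until the line cap is reached.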


Time stamping issues

Time stamping issues can pertain to the following settings in the props.conf configuration file:

- DATETIME_CONFIG

- TIME_PREFIX

- TIME_FORMAT

- MAX_TIMESTAMP_LOOKAHEAD

- TZ

You might have timestamp parsing issues if you see the following indicators:

- Timestamp parsing issues are present in the Monitoring Console Data Quality dashboard.

- An error occurs in the Splunk Web Data Input workflow.

- You have fewer events than you expect and the events are very large, especially if your events are single-line events.

- The time zone is not properly assigned.

- The value of _time assigned by the Splunk platform does not match the time in the raw data.

Diagnosis

To confirm that you have a timestamping issue, do one or more of the following:

- Visit the Monitoring Console Data Quality dashboard. Check for timestamp parsing issues in the table. Timestamp assignment resorts to various fallbacks. For more details, see How timestamp assignment works. For most of the fallbacks, the dashboard still reports an issue even if the fallback successfully assigns a timestamp.

- Search for events that are multiple events combined into one.

- Look in the splunkd.log file for messages like the following:

12-09-2016 00:45:29.956 -0800 WARN DateParserVerbose - Failed to parse timestamp. Defaulting to timestamp of previous event (Fri Dec 9 00:45:27 2016). Context: source::/disk2/sh-demo/splunk/var/log/splunk/entity.log|host::svdev-sh-demo|entity-too_small|682235

12-08-2016 12:33:56.025 -0500 WARN AggregatorMiningProcessor - Too many events (100K) with the same timestamp: incrementing timestamps 1 second(s) into the future to insure retrievability

When you see the second message, large numbers of events are indexed with the same timestamp, which makes searching that time range ineffective.
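Counting these warnings by component gives a quick view of which kind of issue dominates. The following is a minimal sketch under stated assumptions. The field layout in `LOG_LINE` is inferred from the sample messages above rather than from a format specification. The `COMPONENTS` set lists only the two component names that appear in those samples, and other components might also report data quality problems. The function name `tally_warnings` is hypothetical.

```python
import re
from collections import Counter

# Layout inferred from the samples: date, time, UTC offset, level, component, message.
LOG_LINE = re.compile(
    r"^(?P<date>\d{2}-\d{2}-\d{4}) (?P<time>\d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<tz>[+-]\d{4}) (?P<level>[A-Z]+)\s+(?P<component>\S+) - (?P<message>.*)$"
)

# Only the component names that appear in the sample messages.
COMPONENTS = {"AggregatorMiningProcessor", "DateParserVerbose"}


def tally_warnings(lines):
    """Count WARN messages per component for the components of interest."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if m and m["level"] == "WARN" and m["component"] in COMPONENTS:
            counts[m["component"]] += 1
    return counts


sample = [
    "12-07-2016 09:32:32.876 -0500 WARN AggregatorMiningProcessor - "
    "Breaking event because limit of 256 has been exceeded",
    "12-08-2016 12:33:56.025 -0500 WARN AggregatorMiningProcessor - "
    "Too many events (100K) with the same timestamp: incrementing timestamps "
    "1 second(s) into the future to insure retrievability",
]
print(dict(tally_warnings(sample)))  # {'AggregatorMiningProcessor': 2}
```

To run it against a real file, pass an open file object for your splunkd.log instead of the sample list.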

Solution

To resolve a timestamping issue, complete the following steps:

- Make sure that each event has a complete timestamp, including a year, full date, full time, and a time zone.

- See Configure timestamp recognition for more possible resolution steps.
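Before you adjust TIME_FORMAT, you can check sample timestamps for completeness offline. The following is a minimal sketch: the function name and the default format string are hypothetical, and Python's `strptime` directives overlap with but are not identical to the directives that TIME_FORMAT accepts, so treat the result as a rough check only.

```python
from datetime import datetime


def has_complete_timestamp(text: str, fmt: str = "%Y-%m-%d %H:%M:%S %z") -> bool:
    """Return True if text parses with fmt and carries both a year and a time zone."""
    # A format without a year or UTC offset directive cannot yield a complete timestamp.
    if "%Y" not in fmt and "%y" not in fmt:
        return False
    if "%z" not in fmt:
        return False
    try:
        parsed = datetime.strptime(text, fmt)
    except ValueError:
        return False
    return parsed.tzinfo is not None


print(has_complete_timestamp("2016-12-09 00:45:29 -0800"))  # True
print(has_complete_timestamp("2016-12-09 00:45:29"))        # False
```

A timestamp that fails this check because it lacks a year or a time zone is a candidate for the fallbacks described above.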


This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13, 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
