Multisite indexer cluster architecture

This topic describes the architecture of multisite indexer clusters. It focuses primarily on how multisite clusters differ from single-site clusters. For an overview of cluster architecture, focusing on single-site clusters, read The basics of indexer cluster architecture.

How multisite and single-site architecture differ

Multisite clusters differ from single-site clusters in these key respects:

- Each node (master/peer/search head) has an assigned site.

- Replication of bucket copies occurs with site-awareness.

- Search heads distribute their searches across local peers only, when possible.

- Bucket-fixing activities respect site boundaries when applicable.

Multisite cluster nodes

Multisite and single-site nodes share these characteristics:

- Clusters have three types of nodes: master, peers, and search heads.

- Each cluster has exactly one master node.

- The cluster can have any number of peer nodes and search heads.

Multisite nodes differ in these ways:

- Every node belongs to a specific site. Physical location typically determines a site. That is, if you want your cluster to span servers in Boston and Philadelphia, you assign all nodes in Boston to site1 and all nodes in Philadelphia to site2.

- A typical multisite cluster has search heads on each site. This is necessary for search affinity, which increases search efficiency by allowing a search head to access all data locally.

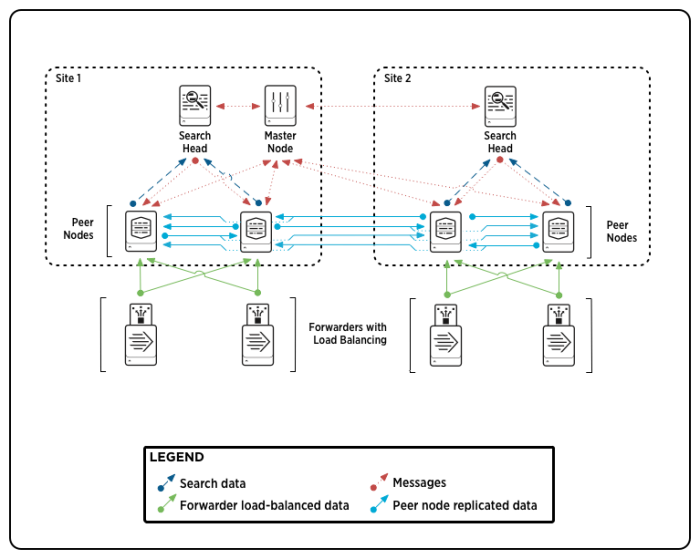

Here is an example of a two-site cluster.

Note the following:

- The master node controls the entire cluster. Although the master resides physically on a site, the master is not actually a member of any site. However, each master has a built-in search head, and that search head requires that you specify a site for the master as a whole. Note that the master's search head is for testing purposes only. Do not use it in a production environment.

- This is an example of a cluster that has been configured for search affinity. Each site has its own search head, which searches the set of peer nodes on its site. Depending on circumstances, however, a search head might also search peers outside its own site. See Multisite searching and search affinity.

- The peers replicate data across site boundaries. This behavior is fundamental for both disaster recovery and search affinity.

Multisite replication and search factors

As with their single-site counterparts, multisite replication and search factors determine the number of bucket copies and searchable bucket copies, respectively, in the cluster. The difference is that the multisite replication and search factors also determine the number of copies on each site. A multisite replication factor for a three-site cluster might look like this:

site_replication_factor = origin:2, site1:1, site2:1, site3:1, total:4

This replication factor specifies that each site will get one copy of each bucket, except when the site is the originating site for the data, in which case it will get two copies. It also specifies that the total number of copies across the cluster is four.
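The arithmetic in the example above can be sketched in Python. This is only an illustrative model, not Splunk code: the helper names `parse_site_factor` and `copies_per_site` are hypothetical, and the rule that the originating site gets the larger of its own value and the `origin` value is an assumption that is consistent with the examples in this topic.

```python
def parse_site_factor(setting):
    """Parse 'origin:2, site1:1, total:4' into {'origin': 2, 'site1': 1, 'total': 4}."""
    factor = {}
    for item in setting.split(","):
        key, _, value = item.strip().partition(":")
        factor[key.strip()] = int(value)
    return factor


def copies_per_site(factor, origin_site):
    """Copies each explicit site holds when origin_site ingests the data."""
    copies = {}
    for site, count in factor.items():
        if site in ("origin", "total"):
            continue
        # Assumption: the originating site gets the larger of its own
        # value and the origin value; every other site gets its own value.
        copies[site] = max(count, factor["origin"]) if site == origin_site else count
    return copies


rf = parse_site_factor("origin:2, site1:1, site2:1, site3:1, total:4")
for origin in ("site1", "site2", "site3"):
    print(origin, copies_per_site(rf, origin))
```

Whichever site originates the data, the per-site counts add up to the `total` value of 4.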

In this particular example, the replication factor explicitly specifies all sites, but that is not a requirement. An explicit site is a site that the replication factor explicitly specifies. A non-explicit site is a site that the replication factor does not explicitly specify.

Here is another example of a multisite replication factor for a three-site cluster. This replication factor specifies only two of the sites explicitly:

site_replication_factor = origin:2, site1:1, site2:2, total:5

In this example, the number of copies that the non-explicit site3 gets varies. If site1 is the origin, site1 gets two copies, site2 gets two copies, and site3 gets the remainder of one copy. If site2 is the origin, site1 gets one copy, site2 gets two copies, and site3 gets two copies. If site3 is the origin, site1 gets one copy, site2 gets two copies, and site3 gets two copies.

Note: In this example, the total value cannot be 4. It must be at least 5. This is because, when the replication factor has non-explicit sites, the total must be at least the sum of all explicit site and origin values.
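To make the distribution and the minimum `total` rule concrete, here is a minimal Python sketch. It is a simplified model rather than the cluster's actual algorithm. The function name `distribute_copies` is hypothetical, and the round-robin handling of leftover copies across several non-explicit sites is an assumption, since this topic describes only the case of a single non-explicit site.

```python
def distribute_copies(factor, all_sites, origin_site):
    """Model how many copies each site holds for data that originates on origin_site."""
    explicit = {s: n for s, n in factor.items() if s not in ("origin", "total")}
    non_explicit = [s for s in all_sites if s not in explicit]

    # Rule from the note above: with non-explicit sites, total must be at
    # least the sum of all explicit site values plus the origin value.
    minimum_total = sum(explicit.values()) + factor["origin"]
    if non_explicit and factor["total"] < minimum_total:
        raise ValueError(f"total must be at least {minimum_total}")

    copies = dict.fromkeys(all_sites, 0)
    copies.update(explicit)
    # The originating site holds at least the origin number of copies.
    copies[origin_site] = max(copies[origin_site], factor["origin"])

    # Assumption: leftover copies go round-robin to the non-explicit sites.
    remainder = factor["total"] - sum(copies.values())
    if non_explicit:
        for i in range(max(remainder, 0)):
            copies[non_explicit[i % len(non_explicit)]] += 1
    return copies


sites = ["site1", "site2", "site3"]
factor = {"origin": 2, "site1": 1, "site2": 2, "total": 5}
for origin in sites:
    print(origin, distribute_copies(factor, sites, origin))
```

With `total` set to 4 instead of 5, the sketch raises `ValueError`, which mirrors the constraint described in the note.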

For details on replication factor syntax and behavior, read "Configure the site replication factor".

The site search factor works the same way. For details, read "Configure the site search factor".

Multisite indexing

Multisite indexing is similar to single-site indexing, described in "The basics of indexer cluster architecture". A single master coordinates replication across all the peers on all the sites.

This section briefly describes the main multisite differences, using the example of a three-site cluster with this replication factor:

site_replication_factor = origin:2, site1:1, site2:1, site3:1, total:4

These are the main multisite issues to be aware of:

- Data replication occurs across site boundaries, based on the replication factor. If, in the example, a peer in site1 ingests the data, it will stream one copy of the data to another peer in site1 (to fulfill the origin setting of 2), one copy to a peer in site2, and one copy to a peer in site3.

- Multisite replication has the concept of the origin site, which allows the cluster to handle data differently for the site that it originates on. The example illustrates this. If site1 originates the data, it gets two copies. If another site originates the data, site1 gets only one copy.

- As with single-site replication, you cannot specify the exact peers that will receive the replicated data. However, you can specify the sites whose peers will receive the data.
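The streaming behavior in the first point can be expressed as a small calculation. The following Python sketch is illustrative only. The function name `streaming_targets` is hypothetical, and the per-site copy counts are supplied by hand for the case where site1 originates the data.

```python
def streaming_targets(copies_per_site, origin_site):
    """Number of copies the ingesting peer streams to peers on each site."""
    targets = dict(copies_per_site)
    # The ingesting peer already holds one of the origin site's copies,
    # so it streams one copy fewer to its own site.
    targets[origin_site] -= 1
    return {site: count for site, count in targets.items() if count > 0}


# Per-site copies for origin:2, site1:1, site2:1, site3:1, total:4
# when a peer on site1 ingests the data.
print(streaming_targets({"site1": 2, "site2": 1, "site3": 1}, "site1"))
# {'site1': 1, 'site2': 1, 'site3': 1}
```

The sketch says nothing about which peers receive the copies, only how many copies go to each site.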

For information on how the cluster handles migrated single-site buckets, see "Migrate an indexer cluster from single-site to multisite".

Multisite searching and search affinity

Multisite searching is similar in most ways to single-site searching, described in "The basics of indexer cluster architecture". Each search occurs across a set of primary bucket copies. There is one key difference, however.

Multisite clusters provide search affinity, which allows searches to occur on site-local data. You must configure the cluster to take advantage of search affinity. Specifically, you must ensure that both the searchable data and the search heads are available locally.

To accomplish this, you configure the search factor so that each site has at least one full set of searchable data. The master then ensures that each site has a complete set of primary bucket copies, as long as the sites are functioning properly. This is known as the valid state.

With search affinity, the search heads still distribute their search requests to all peers in the cluster, but only the peers on the same site as the search head respond to the request by searching their primary bucket copies and returning results to the search head.

If the loss of some of a site's peers means that it no longer has a full set of primaries (and thus is no longer in the valid state), bucket fix-up activities will attempt to return the site to the valid state. During the fix-up period, peers on remote sites will participate in searches, as necessary, to ensure that the search head still gets a full set of results. After the site regains its valid state, the search head once again uses only local peers to fulfill its searches.
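The preference for local peers, with remote peers filling gaps during fix-up, can be modeled roughly as follows. This Python sketch is a conceptual illustration only and does not reflect how the master actually assigns primaries. The function name `peers_for_search`, the data layout, and the peer names are all hypothetical.

```python
def peers_for_search(search_head_site, searchable_copies):
    """Pick one peer per bucket, preferring the search head's own site.

    searchable_copies maps a bucket id to {site: peer holding a searchable copy}.
    """
    chosen = {}
    for bucket, by_site in searchable_copies.items():
        if search_head_site in by_site:
            # Valid state for this bucket: a local peer serves it.
            chosen[bucket] = by_site[search_head_site]
        elif by_site:
            # Local copy missing during fix-up: fall back to a remote peer.
            remote_site = sorted(by_site)[0]
            chosen[bucket] = by_site[remote_site]
    return chosen


# Hypothetical state: site1 has lost its searchable copy of bucket "b2".
state = {
    "b1": {"site1": "peer1a", "site2": "peer2a"},
    "b2": {"site2": "peer2b"},
}
print(peers_for_search("site1", state))
# {'b1': 'peer1a', 'b2': 'peer2b'}
```

Once fix-up restores a searchable copy of `b2` on site1, the same call would select only site1 peers.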

Note: You can disable search affinity if desired. When search affinity is disabled for a particular search head, that search head can get its data from peers on any site.

For more information on search affinity and how to configure the search factor to support it, see "Implement search affinity in a multisite indexer cluster". For more information on the internals of search processing, including search affinity, see "How search works in an indexer cluster".

Multisite clusters and node failure

Multisite clusters handle node failure differently from single-site clusters in some significant ways.

Before reading this section, you must understand the concept of a "reserve" bucket copy.

A reserve bucket copy is a virtual copy, awaiting eventual assignment to a peer. Such a copy does not actually exist yet in storage while it is in the reserve state. Once the master assigns it to an available peer, the bucket gets streamed to that peer in the usual manner.

As a consequence of multisite peer node failure, some bucket copies might not be immediately assigned to a peer. For example, in a cluster with a total replication factor of 5, the master might tell the originating peer to stream buckets to just three other peers. This results in four copies (the original plus the three streamed copies), with the fifth copy awaiting assignment to a peer once certain conditions are met. The fifth, unassigned copy is known as a reserve copy. This section describes how the cluster must sometimes reserve copies when peer nodes fail.

How multisite clusters deal with peer node failure

When a peer goes down, bucket fix-up happens within the same site, if possible. The cluster tries to replace any missing bucket copies by adding copies to peers remaining on that site. (In all cases, each peer can have at most one copy of any particular bucket.) If it is not possible to fix up all buckets by adding copies to peers within the site, then, depending on the replication and search factors, the cluster might make copies on peers on other sites.

The fix-up behavior under these circumstances depends partially on whether the failed peer was on an explicit or non-explicit site.

If enough peers go down on an explicit site such that the site can no longer meet its site-specific replication factor, the cluster does not attempt to compensate by making copies on peers on other sites. Rather, it assumes that the requisite number of peers will eventually return to the site. For new buckets also, it holds copies in reserve for the return of peers to the site. In other words, it does not assign those copies to peers on a different site, but instead waits until the first site has peers available and then assigns the copies to those peers.

For example, given a three-site cluster (site1, site2, site3) with this replication factor:

site_replication_factor = origin:2, site1:1, site2:2, total:5

the cluster ordinarily maintains two copies on site2. But if enough peers go down on site2, so that only one remains and the site can no longer meet its replication factor of 2, that remaining peer gets one copy of all buckets in the cluster, and the cluster reserves another set of copies for the site. When a second peer rejoins site2, the cluster streams the reserved copies to that peer.

When a non-explicit site loses enough peers such that it can no longer maintain the number of bucket copies that it already has, the cluster compensates by adding copies to other sites to make up the difference. For example, assume that the non-explicit site3 in the example above has two copies of some bucket, and that it then loses all but one of its peers, so that it can only hold one copy of each bucket. The cluster compensates by streaming a copy of that bucket to a peer on one of the other sites, assuming there is at least one peer that does not yet have a copy of that bucket.
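The difference between explicit and non-explicit sites can be summarized in a short Python sketch. This is a simplified model under stated assumptions, not the cluster's implementation. The function name `fix_up_site` is hypothetical, and the sketch ignores the condition that another site must have a peer without a copy of the bucket.

```python
def fix_up_site(required_copies, available_peers, explicit):
    """Model where a site's copies of one bucket end up after peers fail."""
    # Each peer can hold at most one copy of any particular bucket.
    on_site = min(required_copies, available_peers)
    shortfall = required_copies - on_site
    if explicit:
        # Explicit site: hold the missing copies in reserve until peers return.
        return {"on_site": on_site, "reserved": shortfall, "other_sites": 0}
    # Non-explicit site: make up the difference on peers at other sites.
    return {"on_site": on_site, "reserved": 0, "other_sites": shortfall}


# Explicit site2 (site2:2) with one remaining peer.
print(fix_up_site(required_copies=2, available_peers=1, explicit=True))
# {'on_site': 1, 'reserved': 1, 'other_sites': 0}

# Non-explicit site3 holding two copies, with one remaining peer.
print(fix_up_site(required_copies=2, available_peers=1, explicit=False))
# {'on_site': 1, 'reserved': 0, 'other_sites': 1}
```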

For information on how a cluster handles the case where all the peer nodes on a site fail, see "How the cluster handles site failure".

How the cluster handles site failure

Site failure is just a special case of peer node failure. Cluster fix-up occurs following the rules described earlier for peer node failure. Of particular note, the cluster might hold copies in reserve against the eventual return of the site.

For any copies of existing buckets that are held in reserve, the cluster does not add copies to other sites during its fix-up activities. Similarly, for any new buckets added after the site goes down, the cluster reserves some number of copies until the site returns to the cluster.

Here is how the cluster determines the number of copies to reserve:

- For explicit sites, the cluster reserves the number of copies and searchable copies specified in the site's search and replication factors.

- For non-explicit sites, the cluster reserves one searchable copy if the total components of the site's search and replication factors are sufficiently large, after handling any explicit sites, to accommodate the copy. (If the search factor isn't sufficiently large but the replication factor is, the cluster reserves one non-searchable copy.)

For example, say you have a three-site cluster with two explicit sites (site1 and site2) and one non-explicit site (site3), with this configuration:

site_replication_factor = origin:2, site1:1, site2:2, total:5

site_search_factor = origin:1, site1:1, site2:1, total:2

In the case of a site going down, the cluster reserves bucket copies like this:

- If site1 goes down, the cluster reserves one searchable copy.

- If site2 goes down, the cluster reserves two copies, including one that is searchable.

- If site3 goes down, the cluster reserves one non-searchable copy.
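The reservation rules can be checked against this example with a minimal Python sketch. It is an interpretation, not the cluster's code. The function name `reserved_on_site_failure` is hypothetical, and the sketch reads "sufficiently large, after handling any explicit sites" as meaning that the `total` value minus the sum of the explicit site values leaves room for at least one copy.

```python
def reserved_on_site_failure(rf, sf, failed_site):
    """Return (copies, searchable_copies) held in reserve for a failed site."""
    def explicit_sum(factor):
        return sum(n for s, n in factor.items() if s not in ("origin", "total"))

    if failed_site in rf:
        # Explicit site: reserve what its replication and search factors specify.
        return rf[failed_site], sf.get(failed_site, 0)

    # Non-explicit site: reserve at most one copy, and make it searchable
    # only if the search factor total also has room (assumed reading).
    rf_room = rf["total"] - explicit_sum(rf)
    sf_room = sf["total"] - explicit_sum(sf)
    if rf_room < 1:
        return 0, 0
    return 1, (1 if sf_room >= 1 else 0)


rf = {"origin": 2, "site1": 1, "site2": 2, "total": 5}
sf = {"origin": 1, "site1": 1, "site2": 1, "total": 2}
for site in ("site1", "site2", "site3"):
    print(site, reserved_on_site_failure(rf, sf, site))
```

Under that reading, the sketch yields one searchable copy for site1, two copies (one searchable) for site2, and one non-searchable copy for site3, matching the list above.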

Once the reserved copies are accounted for, the cluster replicates any remaining copies to other available sites, both during fix-up of existing buckets and when adding new buckets.

When the site returns to the cluster, bucket fix-up occurs for that site to the degree necessary to ensure that the site has, at a minimum, its allocation of reserved bucket copies, both for new buckets and for buckets that were on the site when it went down.

If the site that fails is the site on which the master resides, you can bring up a stand-by master on one of the remaining sites. See "Handle indexer cluster master site failure".

How multisite clusters deal with master node failure

A multisite cluster handles master node failure the same as a single-site cluster. The cluster continues to function as best it can under the circumstances. See "What happens when a master node goes down".


This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13, 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10
