Route and filter data

You can use heavy forwarders to filter and route event data to Splunk instances. You can also perform selective indexing and forwarding, where you index some data locally and forward the data that you have not indexed to a separate indexer.

For information on routing data to non-Splunk systems, see Forward data to third-party systems.

For information on performing selective indexing and forwarding, see Perform selective indexing and forwarding later in this topic.

Routing and filtering capabilities of forwarders

Heavy forwarders can filter and route data to specific receivers based on source, source type, or patterns in the events themselves. For example, you can send all data from one group of machines to one indexer and all data from a second group of machines to a second indexer. Heavy forwarders can also look inside the events and filter or route accordingly. For example, you could use a heavy forwarder to inspect WMI event codes to filter or route Windows events. This topic describes a number of typical routing scenarios.

Besides routing to receivers, heavy forwarders can also filter and route data to specific queues, or discard the data altogether by routing to the null queue.

Only heavy forwarders can route or filter all data based on the content of events. Universal and light forwarders cannot inspect individual events, except when they extract fields from structured data. They can still forward data based on a host, source, or source type. They can also route based on the stanza for a data input, as described in Route inputs to specific indexers based on the data input later in this topic. Some input types can filter out data types while acquiring them.

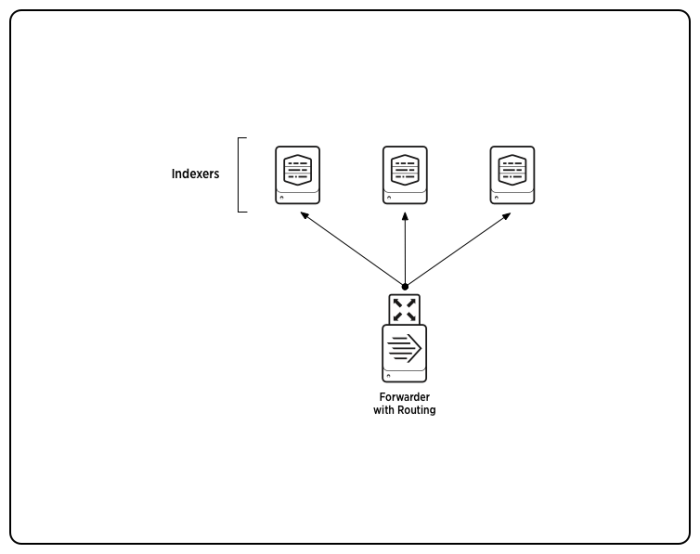

A simple illustration of a forwarder routing data to three indexers follows:

Configure routing

You can configure routing only on a heavy forwarder.

- Determine the criteria to use for routing by answering the following questions:

  - How will you identify categories of events?

  - Where will you route the events?

- On the Splunk instance that does the routing, open a shell or command prompt.

- Edit $SPLUNK_HOME/etc/system/local/props.conf to add a TRANSFORMS-routing setting to determine routing based on event metadata. For example:

      [<spec>]
      TRANSFORMS-routing=<transforms_stanza_name>

  In this props.conf stanza:

  - <spec> can be:

    - <sourcetype>, the source type of an event

    - host::<host>, where <host> is the host for an event

    - source::<source>, where <source> is the source for an event

  - If you have multiple TRANSFORMS attributes, use a unique name for each. For example: "TRANSFORMS-routing1", "TRANSFORMS-routing2", and so on.

  - <transforms_stanza_name> must be unique.

  Use the <transforms_stanza_name> specified here when creating an entry in transforms.conf. Examples later in this topic show how to use this syntax.

- Save the file.

- Edit $SPLUNK_HOME/etc/system/local/transforms.conf to specify target groups and set additional criteria for routing based on event patterns. For example:

      [<transforms_stanza_name>]
      REGEX=<routing_criteria>
      DEST_KEY=_TCP_ROUTING
      FORMAT=<target_group>,<target_group>,....

  In this transforms.conf stanza:

  - <transforms_stanza_name> must match the name you defined in props.conf.

  - In <routing_criteria>, enter the regular expression rules that determine which events get routed. This line is required. Use "REGEX = ." if you don't need additional filtering beyond the metadata specified in props.conf.

  - DEST_KEY should be set to _TCP_ROUTING to send events via TCP. It can also be set to _SYSLOG_ROUTING.

  - Set FORMAT to a <target_group> that matches a target group name you defined in outputs.conf. If you specify more than one target group, use commas to separate them. A comma-separated list clones events to multiple target groups.

  Examples later in this topic show how to use this syntax.

- Edit $SPLUNK_HOME/etc/system/local/outputs.conf to define the target groups for the routed data. For example:

      [tcpout:<target_group>]
      server=<ip>:<port>

  In this outputs.conf stanza:

  - You can set <target_group> to match the name you specified in transforms.conf.

  - You can set the IP address and port to match the receiving server.

The use cases described in this topic follow this pattern.
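As a concrete instance of this pattern, the following sketch routes two groups of hosts to two different indexers, which is the scenario mentioned at the start of this topic. The host patterns (web*, db*), the transform names, the target group names, and the server addresses are all hypothetical placeholders. The three files appear in one block only for brevity.

```ini
# props.conf: select events by host metadata (host patterns are hypothetical)
[host::web*]
TRANSFORMS-routing = routeWeb

[host::db*]
TRANSFORMS-routing = routeDb

# transforms.conf: REGEX = . matches every event, so no extra filtering occurs
[routeWeb]
REGEX = .
DEST_KEY = _TCP_ROUTING
FORMAT = webGroup

[routeDb]
REGEX = .
DEST_KEY = _TCP_ROUTING
FORMAT = dbGroup

# outputs.conf: one target group per receiving indexer (addresses are hypothetical)
[tcpout:webGroup]
server = 10.1.1.10:9997

[tcpout:dbGroup]
server = 10.1.1.20:9997
```

Because both transforms match any event, routing in this sketch depends only on the host metadata selected in props.conf.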

Filter and route event data to target groups

In this example, a heavy forwarder filters three types of events and routes them to different target groups. The forwarder filters and routes according to these criteria:

- Events with a source type of "syslog" to a load-balanced target group

- Events containing the word "error" to a second target group

- All other events to a default target group

- On the instance that is to do the routing, open a command or shell prompt.

- Edit $SPLUNK_HOME/etc/system/local/props.conf to set two TRANSFORMS-routing settings: one for syslog data and a default for all other data.

      [default]
      TRANSFORMS-routing=errorRouting

      [syslog]
      TRANSFORMS-routing=syslogRouting

- Edit $SPLUNK_HOME/etc/system/local/transforms.conf to set the routing rules for each routing transform.

      [errorRouting]
      REGEX=error
      DEST_KEY=_TCP_ROUTING
      FORMAT=errorGroup

      [syslogRouting]
      REGEX=.
      DEST_KEY=_TCP_ROUTING
      FORMAT=syslogGroup

  In this example, if a syslog event contains the word "error", it routes to syslogGroup, not errorGroup. This is due to the settings you previously specified in props.conf. Those settings dictated that all syslog events should be filtered through the syslogRouting transform, while all non-syslog (default) events should be filtered through the errorRouting transform. Therefore, only non-syslog events get inspected for errors.

- Edit $SPLUNK_HOME/etc/system/local/outputs.conf to define the target groups.

      [tcpout]
      defaultGroup=everythingElseGroup

      [tcpout:syslogGroup]
      server=10.1.1.197:9996, 10.1.1.198:9997

      [tcpout:errorGroup]
      server=10.1.1.200:9999

      [tcpout:everythingElseGroup]
      server=10.1.1.250:6666

  syslogGroup and errorGroup receive events according to the rules specified in transforms.conf. All other events get routed to the default group, everythingElseGroup.
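If you also want syslog events that contain "error" to reach errorGroup, one possible variation is to chain a second transform in the [syslog] stanza, in the same way that the next example in this topic chains routeAll and routeSubset. This is a sketch rather than part of the original example, and the transform name syslogErrorRouting is hypothetical.

```ini
# props.conf: run both transforms, left to right, for syslog events
[syslog]
TRANSFORMS-routing = syslogRouting, syslogErrorRouting

# transforms.conf: hypothetical additional transform.
# It runs after syslogRouting and, for syslog events that contain "error",
# sets the routing to both target groups.
[syslogErrorRouting]
REGEX = error
DEST_KEY = _TCP_ROUTING
FORMAT = syslogGroup,errorGroup
```

With this change, syslog events that contain "error" would be cloned to both groups, while other syslog events would still go only to syslogGroup.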

Replicate a subset of data to a third-party system

This example uses data filtering to route two data streams. It forwards:

- All the data, in cooked form, to a Splunk Enterprise indexer (10.1.12.1:9997)

- A replicated subset of the data, in raw form, to a third-party machine (10.1.12.2:1234)

The example sends both streams as TCP. To send the second stream as syslog data, first route the data through an indexer.

- On the Splunk instance that is to do the routing, open a command or shell prompt.

- Edit $SPLUNK_HOME/etc/system/local/props.conf and add the following text:

      [syslog]
      TRANSFORMS-routing = routeAll, routeSubset

- Edit $SPLUNK_HOME/etc/system/local/transforms.conf and add the following text:

      [routeAll]
      REGEX=(.)
      DEST_KEY=_TCP_ROUTING
      FORMAT=Everything

      [routeSubset]
      REGEX=(SYSTEM|CONFIG|THREAT)
      DEST_KEY=_TCP_ROUTING
      FORMAT=Subsidiary,Everything

- Edit $SPLUNK_HOME/etc/system/local/outputs.conf and add the following text:

      [tcpout]
      defaultGroup=nothing

      [tcpout:Everything]
      disabled=false
      server=10.1.12.1:9997

      [tcpout:Subsidiary]
      disabled=false
      sendCookedData=false
      server=10.1.12.2:1234

- Restart Splunk software.

Filter event data and send to queues

Although similar to forwarder-based routing, queue routing can be performed by an indexer, as well as a heavy forwarder. It does not use the outputs.conf file, only props.conf and transforms.conf.

You can eliminate unwanted data by routing it to the nullQueue, the Splunk equivalent of the Unix /dev/null device. When you filter out data in this way, the data is not forwarded and doesn't count toward your indexing volume.

See Caveats for routing and filtering structured data later in this topic.

Discard specific events and keep the rest

This example discards all sshd events in /var/log/messages by sending them to nullQueue:

- In props.conf, set the TRANSFORMS-null attribute:

      [source::/var/log/messages]
      TRANSFORMS-null = setnull

- Create a corresponding stanza in transforms.conf. Set DEST_KEY to "queue" and FORMAT to "nullQueue":

      [setnull]
      REGEX = \[sshd\]
      DEST_KEY = queue
      FORMAT = nullQueue

- Restart Splunk Enterprise.

Keep specific events and discard the rest

Keeping only some events and discarding the rest requires two transforms. In this scenario, which is the opposite of the previous one, the setnull transform routes all events to nullQueue while the setparsing transform selects the sshd events and sends them on to indexQueue.

As with other index-time field extractions, transforms are processed in the order that you specify them, from left to right, so the order in which you list the transforms matters. In this example, the setnull transform must appear first in the list. If you list it last, it matches all events and sends them to the nullQueue, and because it is the last transform, it effectively throws all of the events away, even those that previously matched the setparsing transform.

When you set the setnull transform first, it matches all events and tags them to be sent to the nullQueue. The setparsing transform then follows, and tags events that match [sshd] to go to the indexQueue. The result is that the events that contain [sshd] get passed on, while all other events get dropped.

- Edit props.conf and add the following:

      [source::/var/log/messages]
      TRANSFORMS-set = setnull,setparsing

- Edit transforms.conf and add the following:

      [setnull]
      REGEX = .
      DEST_KEY = queue
      FORMAT = nullQueue

      [setparsing]
      REGEX = \[sshd\]
      DEST_KEY = queue
      FORMAT = indexQueue

- Restart Splunk Enterprise.

Filter WMI and Event Log events

You can filter WinEventLog events directly on a universal forwarder. The [WinEventLog] stanzas in inputs.conf offer direct filtering of EventCodes before data leaves the forwarder. See Create advanced filters with 'whitelist' and 'blacklist' in the Getting Data In manual.
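As a minimal sketch of this kind of input-level filtering, the following inputs.conf stanza on a universal forwarder drops two event codes from the Security event log before the data is forwarded. The choice of the Security channel and of event codes 592 and 593 is an assumption made to mirror the WMI example later in this section. Check the inputs.conf specification for the exact filter syntax that your version supports.

```ini
# inputs.conf on the universal forwarder
[WinEventLog://Security]
disabled = 0
# Exclude events with these event codes at the input
blacklist = 592,593
```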

To filter event codes on WMI events, use the [WMI:WinEventLog:] source type stanza in props.conf on the parsing node (heavy forwarder or indexer), and define a regular expression in transforms.conf to discard the events that match.

The following example uses a regular expression to filter out two event codes (592 and 593) from the WMI:WinEventLog:Security channel:

- Determine which node that can parse data receives the events from the forwarder. This will be a heavy forwarder or an indexer.

- Edit props.conf on the receiver and add a transform for a WMI:WinEventLog channel:

      [WMI:WinEventLog:Security]
      TRANSFORMS-wmi=wminull

- Edit transforms.conf on the receiver and define a regular expression for the data you want to filter out:

      [wminull]
      REGEX=(?m)^EventCode=(592|593)
      DEST_KEY=queue
      FORMAT=nullQueue

- Restart Splunk Enterprise services on the receiver.

Filter data by target index

Forwarders have a forwardedindex filter that lets you specify whether data gets forwarded, based on the target index. For example, if you have one data input whose events you have specified to go to the "index1" index, and another whose events you want to go to the "index2" index, you can use the filter to forward only the data targeted to the index1 index, while ignoring the data that is to go to the index2 index.

The forwardedindex filter uses deny lists and allow lists to specify the filtering. For information on setting up multiple indexes, see Create custom indexes in the Managing Indexers and Clusters of Indexers manual.

You can only use the forwardedindex filter under the global [tcpout] stanza. The filter does not work if you specify it under any other outputs.conf stanza.

You can use the forwardedindex.<n>.whitelist|blacklist settings in outputs.conf to specify the data that should be forwarded. You accomplish this by configuring the settings with regular expressions that filter the target indexes.
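For the index1 and index2 scenario described earlier in this section, a deny-list entry is one way to stop forwarding data for index2 while everything else follows the default rules. This sketch assumes that the default filters occupy positions 0 through 2, so the new entry uses the next number in the sequence. The index names are the illustrative ones from the scenario.

```ini
# outputs.conf
[tcpout]
# Do not forward events targeted at index2; the default filters stay in place
forwardedindex.3.blacklist = index2
```

After a change like this, it is worth checking the merged [tcpout] settings with btool to confirm the final filter order.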

Default behavior

By default, the forwarder sends data targeted for all external indexes, including the default index and any indexes that you have created. For internal indexes, the forwarder sends data targeted for the following indexes:

- _audit

- _internal

- _introspection

- _telemetry
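Expressed as filter settings, that default behavior looks roughly like the following. This is a sketch reconstructed from the description above, not a copy of the shipped file. The exact list of internal indexes can differ between versions, so verify it against the default outputs.conf for your release.

```ini
# Approximate default filters (assumption: verify against your default outputs.conf)
[tcpout]
# 0: forward data for every index
forwardedindex.0.whitelist = .*
# 1: then exclude indexes whose names start with an underscore
forwardedindex.1.blacklist = _.*
# 2: then re-include selected internal indexes
forwardedindex.2.whitelist = (_audit|_internal|_introspection|_telemetry)
```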

In most deployments, you do not need to override the default settings.

For more information on how to include and exclude indexes, see the outputs.conf specification file.

For more information on default and custom outputs.conf files and their locations, see Types of outputs.conf files.

Forward all external and internal index data

If you want to forward all internal index data, as well as all external data, you can override the default forwardedindex filter attributes:

    #Forward everything
    [tcpout]
    forwardedindex.0.whitelist = .*
    # disable these
    forwardedindex.1.blacklist =
    forwardedindex.2.whitelist =

Forward data for a single index only

If you want to forward only the data targeted for a single index (for example, as specified in inputs.conf), and drop any data that is not a target for that index, configure outputs.conf in this way:

    [tcpout]
    #Disable the current filters from the default outputs.conf
    forwardedindex.0.whitelist =
    forwardedindex.1.blacklist =
    forwardedindex.2.whitelist =

    #Forward data for the "myindex" index
    forwardedindex.0.whitelist = myindex

This first disables all filters from the default outputs.conf file. It then sets the filter for your own index. Be sure to start the filter numbering with 0: forwardedindex.0.

Note: If you've set other filters in another copy of outputs.conf on your system, you must disable those as well.

You can use the CLI btool command to ensure that there aren't any other filters located in other outputs.conf files on your system:

splunk cmd btool outputs list tcpout

This command returns the content of the tcpout stanza, after all versions of the configuration file have been combined.

Use the forwardedindex attributes with local indexing

On a heavy forwarder, you can index locally. To do that, you must set the indexAndForward attribute to "true". Otherwise, the forwarder just forwards your data and does not save it on the forwarder. On the other hand, the forwardedindex attributes only filter forwarded data; they do not filter any data that gets saved to the local index.

In a nutshell, local indexing and forwarder filtering are entirely separate operations, which do not coordinate with each other. This can have unexpected implications when you perform filtering:

- If you set indexAndForward to "true" and then filter out some data through forwardedindex attributes, the forwarder does not forward the excluded data, but it does still index the data.

- If you set indexAndForward to "false" (no local indexing) and then filter out some data, the forwarder drops the filtered data entirely (because it neither forwards nor indexes the filtered data).
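To illustrate the first case, here is a hedged sketch of an outputs.conf that indexes everything locally but keeps one index from being forwarded. The index name debug_index, the target group name, and the server address are hypothetical. The filter number assumes that the default filters use positions 0 through 2.

```ini
# outputs.conf on a heavy forwarder
[tcpout]
defaultGroup = primaryIndexers
# Keep a local copy of all data
indexAndForward = true
# Do not forward events targeted at debug_index; they are still indexed locally
forwardedindex.3.blacklist = debug_index

[tcpout:primaryIndexers]
server = 10.1.1.197:9997
```

Setting indexAndForward to false in the same sketch would leave the debug_index data neither indexed nor forwarded.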

Route inputs to specific indexers based on the data input

In this scenario, you use inputs.conf and outputs.conf to route data to specific indexers, based on the data's input. Universal and light forwarders can perform this kind of routing.

Here's an example that shows how this works.

- In outputs.conf, create stanzas for each receiving indexer:

      [tcpout:systemGroup]
      server=server1:9997

      [tcpout:applicationGroup]
      server=server2:9997

- In inputs.conf, specify _TCP_ROUTING to set the stanza in outputs.conf that each input should use for routing:

      [monitor://.../file1.log]
      _TCP_ROUTING = systemGroup

      [monitor://.../file2.log]
      _TCP_ROUTING = applicationGroup

The forwarder routes data from file1.log to server1 and data from file2.log to server2.

Perform selective indexing and forwarding

You can index and store data locally, as well as forward the data onwards to a receiving indexer. There are two ways to do this:

- Index all the data before forwarding it. To do this, enable the indexAndForward attribute in outputs.conf.

- Index a subset of the data before forwarding it or other data. This is called selective indexing. With selective indexing, you can index some of the data locally and then forward it on to a receiving indexer. Alternatively, you can choose to forward only the data that you don't index locally.

Do not enable the indexAndForward attribute in the [tcpout] stanza if you also plan to enable selective indexing.

Configure selective indexing

To use selective indexing, you must modify both inputs.conf and outputs.conf.

In this example, the presence of the [indexAndForward] stanza, including the index and selectiveIndexing settings, turns on selective indexing for the forwarder. It enables local indexing for any input (specified in inputs.conf) that has the _INDEX_AND_FORWARD_ROUTING setting.

The presence of the _INDEX_AND_FORWARD_ROUTING setting in inputs.conf tells the heavy forwarder to index that input locally. You can configure the setting to any string value you want. The forwarder looks for the setting itself.

Use the entire [indexAndForward] stanza exactly as shown here.

- In outputs.conf, add the [indexAndForward] stanza:

      [indexAndForward]
      index=true
      selectiveIndexing=true

  Note: This is a global stanza, and only needs to appear once in outputs.conf.

- Include the target group stanzas for each set of receiving indexers:

      [tcpout:<target_group>]
      server = <ip address>:<port>, <ip address>:<port>, ...
      ...

  The forwarder uses the named <target_group> in inputs.conf to route the inputs.

- In inputs.conf, add the _INDEX_AND_FORWARD_ROUTING setting to the stanzas of each input that you want to index locally:

      [input_stanza]
      _INDEX_AND_FORWARD_ROUTING=<any_string>
      ...

- Add the _TCP_ROUTING setting to the stanzas of each input that you want to forward:

      [input_stanza]
      _TCP_ROUTING=<target_group>
      ...

  The <target_group> is the name used in outputs.conf to specify the target group of receiving indexers.

The next several sections show how to use selective indexing in a variety of scenarios.

Index one input locally and then forward the remaining inputs

In this example, the forwarder indexes data from one input locally but does not forward it. It also forwards data from two other inputs but does not index those inputs locally.

- In outputs.conf, create these stanzas:

      [tcpout]
      defaultGroup=noforward
      disabled=false

      [indexAndForward]
      index=true
      selectiveIndexing=true

      [tcpout:indexerB_9997]
      server = indexerB:9997

      [tcpout:indexerC_9997]
      server = indexerC:9997

  Since the defaultGroup is set to the non-existent group "noforward" (meaning that there is no defaultGroup), the forwarder only forwards data that has been routed to explicit target groups in inputs.conf. It drops all other data.

- In inputs.conf, create these stanzas:

      [monitor:///mydata/source1.log]
      _INDEX_AND_FORWARD_ROUTING=local

      [monitor:///mydata/source2.log]
      _TCP_ROUTING=indexerB_9997

      [monitor:///mydata/source3.log]
      _TCP_ROUTING=indexerC_9997

The result is that the forwarder:

- Indexes the source1.log data locally but does not forward it (because there is no explicit routing in its input stanza and there is no default group in outputs.conf)

- Forwards the source2.log data to indexerB but does not index it locally

- Forwards the source3.log data to indexerC but does not index it locally

Index one input locally and then forward all inputs

This example is nearly identical to the previous one. The difference is that here, you index just one input locally, but then you forward all inputs, including the one you've indexed locally.

- In outputs.conf, create these stanzas:

      [tcpout]
      defaultGroup=noforward
      disabled=false

      [indexAndForward]
      index=true
      selectiveIndexing=true

      [tcpout:indexerB_9997]
      server = indexerB:9997

      [tcpout:indexerC_9997]
      server = indexerC:9997

- In inputs.conf, create these stanzas:

      [monitor:///mydata/source1.log]
      _INDEX_AND_FORWARD_ROUTING=local
      _TCP_ROUTING=indexerB_9997

      [monitor:///mydata/source2.log]
      _TCP_ROUTING=indexerB_9997

      [monitor:///mydata/source3.log]
      _TCP_ROUTING=indexerC_9997

The only difference from the previous example is that here, you have specified the _TCP_ROUTING attribute for the input that you are indexing locally. The forwarder routes both source1.log and source2.log to the indexerB_9997 target group, but it only locally indexes the data from source1.log.

Another way to index one input locally and then forward all inputs

You can achieve the same result as in the previous example by setting the defaultGroup to a real target group.

- In outputs.conf, create these stanzas:

      [tcpout]
      defaultGroup=indexerB_9997
      disabled=false

      [indexAndForward]
      index=true
      selectiveIndexing=true

      [tcpout:indexerB_9997]
      server = indexerB:9997

      [tcpout:indexerC_9997]
      server = indexerC:9997

  This outputs.conf sets the defaultGroup to indexerB_9997.

- In inputs.conf, create these stanzas:

      [monitor:///mydata/source1.log]
      _INDEX_AND_FORWARD_ROUTING=local

      [monitor:///mydata/source2.log]
      _TCP_ROUTING=indexerB_9997

      [monitor:///mydata/source3.log]
      _TCP_ROUTING=indexerC_9997

Even though you have not set up an explicit routing for source1.log, the forwarder still sends it to the indexerB_9997 target group, since outputs.conf specifies that group as the defaultGroup.

Selective indexing and internal logs

After you enable selective indexing in outputs.conf, the forwarder only indexes those inputs with the _INDEX_AND_FORWARD_ROUTING setting. This applies to the internal logs in the /var/log/splunk directory (specified in the default etc/system/default/inputs.conf). By default, the forwarder does not index those logs. If you want to index them, you must add their input stanza to your local inputs.conf file (which takes precedence over the default file) and include the _INDEX_AND_FORWARD_ROUTING attribute:

    [monitor://$SPLUNK_HOME/var/log/splunk]
    index = _internal
    _INDEX_AND_FORWARD_ROUTING=local

Caveats for routing and filtering structured data

Splunk software does not parse structured data that has been forwarded to an indexer

When you forward structured data to an indexer, Splunk software does not parse this data after it arrives at the indexer, even if you have configured props.conf on that indexer with INDEXED_EXTRACTIONS and its associated attributes. Forwarded data skips the following queues on the indexer, which precludes any parsing of that data on the indexer:

- parsing

- aggregation

- typing

The forwarded data must arrive at the indexer already parsed. To achieve this, you must also set up props.conf on the forwarder that sends the data. This includes configuration of INDEXED_EXTRACTIONS and any other parsing, filtering, anonymizing, and routing rules. Universal forwarders are capable of performing these tasks solely for structured data. See Forward data extracted from header files.
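For example, a props.conf stanza along the following lines on the forwarder lets it extract fields from CSV files before sending them on. This is a minimal sketch. The source type name and the timestamp column name are hypothetical, and the settings you need depend on your data.

```ini
# props.conf on the forwarder that reads the structured files
[my_csv_sourcetype]
# Parse the file as CSV on the forwarder
INDEXED_EXTRACTIONS = csv
# The header row is the first line of the file
HEADER_FIELD_LINE_NUMBER = 1
# Take the event timestamp from this (hypothetical) column
TIMESTAMP_FIELDS = timestamp
```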


This documentation applies to the following versions of Splunk® Enterprise: 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12
