About proactive Splunk component monitoring

Proactive Splunk component monitoring lets you view the health of Splunk Enterprise features from the output of a REST API endpoint. Individual features report their health status through a tree structure that provides a continuous, real-time view of the health of your deployment, without affecting your search load.

You can access feature health information using the splunkd health report in Splunk Web. See View the splunkd health report.

You can also access feature health information programmatically from the server/health/splunkd endpoint. See Query the server/health/splunkd endpoint.
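As a minimal sketch of programmatic access, the following Python builds (but does not send) a GET request for the server/health/splunkd endpoint. Several details are assumptions not stated on this page: the management port 8089, the /services/ URL prefix, the output_mode=json query parameter, and bearer-token authentication. The function name build_health_request is hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_health_request(base_url: str, token: str) -> Request:
    """Build (but do not send) a GET request for server/health/splunkd.

    Assumptions, not taken from this page: the /services/ prefix,
    output_mode=json, and an Authorization: Bearer <token> header.
    """
    query = urlencode({"output_mode": "json"})
    url = f"{base_url.rstrip('/')}/services/server/health/splunkd?{query}"
    return Request(url, headers={"Authorization": f"Bearer {token}"}, method="GET")


if __name__ == "__main__":
    # The host, port, and token below are placeholders.
    req = build_health_request("https://localhost:8089", "<your-token>")
    print(req.get_method(), req.full_url)
```

To send the request, you could pass it to urllib.request.urlopen together with a TLS context that trusts the certificate of your instance.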

For detailed information on the splunkd process, see Splunk Enterprise Processes in the Installation Manual.

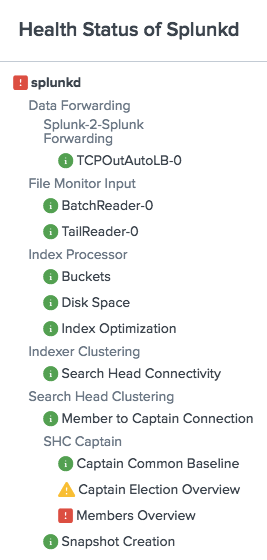

splunkd health report

The splunkd health report records the health status of splunkd in a tree structure, where leaf nodes represent particular Splunk Enterprise features, and intermediary nodes categorize the various features. Feature health status is color-coded in three states:

- Green: The feature is functioning properly.

- Yellow: The feature is experiencing a problem.

- Red: The feature has a severe issue and is negatively impacting the functionality of your deployment.

The health status tree structure

The splunkd health status tree has the following nodes:

| Health status tree node | Description |
|---|---|
| splunkd | The top level node of the status tree shows the overall health status (color) of splunkd. The status of splunkd shows the least healthy state present in the tree. The REST endpoint retrieves the instance health from the splunkd node. |
| Feature categories | Feature categories represent the second level in the health status tree. Feature categories are logical groupings of features. For example, "BatchReader" and "TailReader" are features that form a logical grouping with the name "File Monitor Input". Feature categories act as buckets for groups of features, and do not have their own health status. |
| Features | The next level in the status tree is feature nodes. Each node contains information on the health status of a particular feature. Each feature contains one or more indicators that determine the status of the feature. The overall health status of a feature is based on the least healthy color of any of its indicators. |
| Indicators | Indicators are the fundamental elements of the splunkd health report. These are the lowest levels of functionality that are tracked by each feature, and change colors as functionality changes. Indicator values are measured against red or yellow threshold values to determine the status of the feature. See What determines the status of a feature? |

For a list of supported Splunk Enterprise features, see Supported features.
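The roll-up rule described for the status tree (a feature takes the least healthy color of its indicators, and splunkd takes the least healthy color in the tree) can be sketched in Python as follows. This is an illustration of the rule only, not how splunkd implements it. The Node class, the rolled_up_color function, and the indicator names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Higher number means less healthy.
SEVERITY = {"green": 0, "yellow": 1, "red": 2}


@dataclass
class Node:
    name: str
    color: Optional[str] = None  # set only on indicator (leaf) nodes
    children: List["Node"] = field(default_factory=list)


def rolled_up_color(node: Node) -> str:
    """Return the least healthy color found at or below this node."""
    if not node.children:
        return node.color or "green"
    return max((rolled_up_color(child) for child in node.children),
               key=SEVERITY.__getitem__)


# Hypothetical tree: the indicator names are made up for illustration.
tree = Node("splunkd", children=[
    Node("File Monitor Input", children=[
        Node("BatchReader", children=[Node("indicator_a", "green")]),
        Node("TailReader", children=[Node("indicator_b", "yellow"),
                                     Node("indicator_c", "green")]),
    ]),
])

print(rolled_up_color(tree))
```

In this sketch the category node is only passed through on the way up to splunkd. As noted in the table, feature categories do not carry a health status of their own.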

What determines the status of a feature?

The current status of a feature in the status tree depends on the value of its associated indicators. Indicators have configurable thresholds for yellow and red. When an indicator's value meets threshold conditions, the feature's status changes.
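A minimal Python sketch of the threshold comparison follows. It assumes that higher indicator values are less healthy and that a value equal to a threshold meets it. Neither assumption is stated on this page, and real indicators may compare differently. The function name indicator_color is hypothetical.

```python
def indicator_color(value: float, yellow: float, red: float) -> str:
    """Map an indicator value to a color using yellow and red thresholds.

    Assumes higher values are worse and that reaching a threshold
    (value >= threshold) is enough to change color.
    """
    if value >= red:
        return "red"
    if value >= yellow:
        return "yellow"
    return "green"


if __name__ == "__main__":
    # Hypothetical thresholds: yellow at 5, red at 10.
    for sample in (2, 5, 12):
        print(sample, indicator_color(sample, yellow=5, red=10))
```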

For information on how to configure indicator thresholds, see Set feature indicator thresholds.

For information on how to troubleshoot the root cause of feature status changes, see Investigate feature health status changes.

Default health status alerts

By default, each feature in the splunkd health report generates an alert when its status changes, for example from green to yellow, or from yellow to red. You can enable or disable alerts for any feature and set up alert notifications through email, mobile, VictorOps, or PagerDuty, either in health.conf or through the REST endpoint. For more information, see Configure health report alerts.
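To illustrate what a status change means here, the following Python sketch compares two snapshots of feature colors and lists the features whose color differs. It is a simplified model for illustration, not the alerting logic of splunkd. The function name status_changes and the snapshot format (a mapping from feature name to color) are hypothetical.

```python
from typing import Dict, List, Tuple


def status_changes(previous: Dict[str, str],
                   current: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (feature, old_color, new_color) for each feature whose color changed."""
    changes = []
    for feature in sorted(current):
        old = previous.get(feature)
        new = current[feature]
        # Features missing from the previous snapshot are not reported as changes.
        if old is not None and old != new:
            changes.append((feature, old, new))
    return changes


if __name__ == "__main__":
    before = {"BatchReader": "green", "TailReader": "green"}
    after = {"BatchReader": "green", "TailReader": "yellow"}
    print(status_changes(before, after))
```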

splunkd health status viewpoint

The splunkd health report shows the health of your Splunk Enterprise deployment from the viewpoint of the local instance that you are monitoring. For example, in an indexer cluster environment, the cluster master and peer nodes each show a different set of features contributing to the overall health of splunkd.

Distributed health report

The distributed health report lets you monitor the overall health status of your distributed deployment from a single central instance, such as a search head, search head captain, or cluster master. This differs from the splunkd health report, which shows feature health status from the viewpoint of the local instance only.

The instance on which the distributed health report is enabled collects data from connected instances across your deployment. When a feature indicator reaches a specified threshold, the feature health status changes, for example, from green to yellow, or yellow to red, in the same way as the splunkd health report.

Enable the distributed health report

You must enable the distributed health report in health.conf before it can collect data from your deployment. By default, the distributed health report is disabled.

To enable the distributed health report:

- Log in to the instance from which you want to monitor your deployment. In most cases, this is a search head, search head cluster captain, or cluster master.

- Edit $SPLUNK_HOME/etc/system/local/health.conf.

- In the [distributed_health_reporter] stanza, set disabled = 0. For example:

      [distributed_health_reporter]
      disabled = 0
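If you prefer to script this change, the steps above can be sketched in Python with the standard configparser module. This is an approximation under stated assumptions: Splunk .conf files are not strictly INI, so the sketch only suits simple files, and configparser discards comments when it writes the result. The function name enable_distributed_health_report is hypothetical.

```python
import configparser
import io


def enable_distributed_health_report(conf_text: str) -> str:
    """Return conf_text with disabled = 0 in [distributed_health_reporter].

    Approximation only: treats health.conf as plain INI and drops comments.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep setting names exactly as written
    parser.read_string(conf_text)

    stanza = "distributed_health_reporter"
    if not parser.has_section(stanza):
        parser.add_section(stanza)
    parser.set(stanza, "disabled", "0")

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(enable_distributed_health_report("[distributed_health_reporter]\ndisabled = 1\n"))
```

The function works on text rather than on a file path, so you can review the result before writing it to $SPLUNK_HOME/etc/system/local/health.conf.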

Set up distributed health report alert actions

You can set up alert actions, such as sending email or mobile alert notifications, directly on the distributed health report's central instance.

Configuring alert actions in this one location is simpler than with the single-instance splunkd health report, which requires you to set up alert actions on each individual instance.

The distributed health report must be enabled to receive alerts from your deployment.

To set up distributed health report alert actions:

- Log in to the distributed health report's central instance. In most cases, this is the cluster master or search head captain.

- Edit $SPLUNK_HOME/etc/system/local/health.conf.

- Make sure the distributed health report is enabled, as shown:

      [distributed_health_reporter]
      disabled = 0

- Add the specific alert actions that you want to run when the distributed health report receives an alert. For example, to send email alert notifications, add the [alert_action:email] stanza:

      [alert_action:email]
      disabled = 0
      action.to = <recipient@example.com>
      action.cc = <recipient_2@example.com>
      action.bcc = <other_recipients@example.com>

For details on how to configure available alert actions, see Set up health report alert actions.

If you enable alerts on both the central instance and on individual instances, the distributed health report sends duplicate alerts. To avoid duplicate alerts, disable alerts on individual instances.

View distributed health report

The distributed health report is currently available through the REST endpoint only, and supported features are limited to indexers.

To view the overall health status of your distributed deployment, send a GET request to:

server/health/deployment

For endpoint details, see server/health/deployment in the REST API reference.

To view the health status of individual features reporting to the distributed health report (for example, indexers), send a GET request to:

server/health/deployment/details

For endpoint details, see server/health/deployment/details in the REST API reference.
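The two endpoints above can be addressed and read with a short Python sketch like the one below. The /services/ prefix, the output_mode=json parameter, and the response shape (an entry list whose first item has a content.health field) are assumptions not confirmed on this page. Check the REST API reference for the actual payload. The names deployment_url and overall_health are hypothetical.

```python
from typing import Any, Dict

# Endpoint paths as given in this section.
DEPLOYMENT_ENDPOINTS = {
    "overall": "server/health/deployment",
    "details": "server/health/deployment/details",
}


def deployment_url(base_url: str, view: str) -> str:
    """Build the URL for the 'overall' or 'details' deployment health view."""
    path = DEPLOYMENT_ENDPOINTS[view]
    return f"{base_url.rstrip('/')}/services/{path}?output_mode=json"


def overall_health(payload: Dict[str, Any]) -> str:
    """Read the overall color from a decoded JSON response.

    Assumed shape: {"entry": [{"content": {"health": "<color>"}}]}
    """
    return payload["entry"][0]["content"]["health"]


if __name__ == "__main__":
    print(deployment_url("https://localhost:8089", "overall"))
    print(deployment_url("https://localhost:8089", "details"))
    # Hand-written sample payload, not real output from an instance.
    print(overall_health({"entry": [{"content": {"health": "green"}}]}))
```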


This documentation applies to the following versions of Splunk® Enterprise: 8.0.0, 8.0.1, 8.0.2, 8.0.3
