Manage report acceleration

Report acceleration is the easiest way to speed up transforming searches and reports that take a long time to complete because they have to cover a large volume of data. You enable acceleration for a transforming search when you save it as a report. You can also accelerate report-based dashboard panels that use a transforming search.

This topic covers various aspects of report acceleration in more detail. It includes:

- A quick guide to enabling automatic acceleration for a transforming report.

- Examples of qualifying and nonqualifying reports (only specific kinds of reports qualify for report acceleration).

- Details on how report acceleration summaries are created and maintained.

- An overview of the Report acceleration summaries page in Settings, which you can use to review and maintain the data summaries that are used for automatic report acceleration.

Restrictions on report acceleration

You cannot accelerate a report if:

- You created it through Pivot. Pivot reports are accelerated via data model acceleration. See Manage data models.

- Your permissions do not enable you to accelerate searches. You cannot accelerate reports if your role does not have the schedule_search and accelerate_search capabilities.

- Your role does not have write permissions for the report.

- The search that the report is based upon is disqualified for acceleration. For more information, see How reports qualify for acceleration.

In addition, be careful when accelerating reports whose base searches include tags, event types, search macros, and other knowledge objects whose definitions can change independently of the report after the report is accelerated. If this happens, the accelerated report can return invalid results.

If you suspect that your accelerated report is returning invalid results, you can verify its summary to see if the data contained in the summary is consistent. See Verify a summary.

Enabling report acceleration

You can enable report acceleration when you create a report, or later, after the report is created.

For a more thorough description of this procedure, see Create and edit reports, in the Reporting Manual.

Enabling report acceleration when you create a report

You can enable report acceleration for qualifying reports.

Prerequisites

Steps

- In Search, run a search that qualifies for report acceleration.

- Select Save As > Report.

- Give your report a Name and optionally, a Description.

- Click Save to save the search as a report.

- Select Acceleration.

You can only accelerate the report if the report qualifies for acceleration and your permissions allow you to accelerate reports. To be able to accelerate reports, your role has to have the schedule_search and accelerate_search capabilities.

- Select Accelerate Report.

- Select a Summary Range.

Base your selection on the range of time over which you plan to run the report. For example, if you only plan to run the report over periods of time within the last seven days, choose 7 Days.

- Click Save to save your acceleration settings.

Enabling report acceleration for an existing report

You can enable acceleration for a qualifying existing report.

Prerequisites

Steps

- On the Reports listing page, find a report that you want to accelerate.

- Expand the report row by clicking on the > symbol in the first column.

The Acceleration line item displays the acceleration status for the report. Its value is Disabled if the report is not accelerated.

- Click Edit.

You can only accelerate the report if the report qualifies for acceleration and your permissions allow you to accelerate reports. To be able to accelerate reports, your role has to have the schedule_search and accelerate_search capabilities.

- Select Accelerate Report.

- Select a Summary Range.

Base your selection on the range of time over which you plan to run the report. For example, if you only plan to run the report over periods of time within the last seven days, choose 7 Days.

- Click Save to save your acceleration settings.

Alternatively, you can enable report acceleration for an existing report at Settings > Searches, Reports, and Alerts.

After you enable acceleration for a report

When you enable acceleration for your report, the Splunk software begins building a report acceleration summary for the report if it determines that the report would benefit from summarization. To find out whether your report summary is being constructed, go to Settings > Report Acceleration Summaries. If the Summary Status is stuck at 0% complete for an extended amount of time, the summary is not being built.

See Conditions under which Splunk software cannot build or update a summary.

Once the summary is built, future runs of an accelerated report should complete faster than they did before. See the subtopics below for more information on summaries and how they work.

Note: Report acceleration only works for reports that have Search Mode set to Smart or Fast. If you select the Verbose search mode for a report that benefits from report acceleration, it runs as slowly as it would if no summary existed for it. Search Mode does not affect searches powering dashboard panels.

For more information about the Search Mode settings, see Search modes in the Search Manual.

How reports qualify for acceleration

For a report to qualify for acceleration its search must meet three criteria:

- The search string must use a transforming command (such as chart, timechart, stats, and top).

- If the search string has any commands before the first transforming command, they must be streamable.

- The search cannot use event sampling.

Note: You can use non-streaming commands after the first transforming command and still have the report qualify for automatic acceleration. It's just non-streaming commands before the first transforming command that disqualify the report.

For more information about event sampling, see Event sampling in the Search Manual.

Examples of qualifying search strings

Here are examples of search strings that qualify for report acceleration:

index=_internal | stats count by sourcetype

index=_audit search=* | rex field=search "'(?<search>.*)'" | chart count by user search

test foo bar | bin _time span=1d | stats count by _time x y

index=_audit | lookup usertogroup user OUTPUT group | top searches by group

Examples of nonqualifying search strings

And here are examples of search strings that do not qualify for report acceleration:

Reason the following search string fails: This is a simple event search, with no transforming command.

index=_internal metrics group=per_source_thruput

Reason the following search string fails: eventstats is not a transforming command.

index=_internal sourcetype=splunkd *thruput | eventstats avg(kb) as avgkb by group

Reason the following search string fails: transaction is not a streaming command. Other non-streaming commands include dedup, head, tail, and any other search command that is not on the list of streaming commands.

index=_internal | transaction user maxspan=30m | timechart avg(duration) by user

Search strings that qualify for report acceleration but won't get much out of it

In addition, you can have reports that technically qualify for report acceleration, but which may not be helped much by it. This is often the case with reports with high data cardinality--something you'll find when there are two or more transforming commands in the search string and the first transforming command generates many (50k+) output rows. For example:

index=* | stats count by id | stats avg(count) as avg, count as distinct_ids

Set report acceleration summary time ranges

Report acceleration summaries span an approximate range of time. You determine this time range when you choose a value from the Summary Range list. At times, a report acceleration summary can have a store of data that slightly exceeds its summary range, but the summary never fails to meet that range, except while it is first being created.

For example, if you set a summary range of 7 days for an accelerated report, a data summary that approximately covers the past seven days is created. Every ten minutes, a search is run to ensure that the summary always covers the selected range. These maintenance searches add new summary data and remove older summary data that passes out of the range.

When you then run the accelerated report over a range that falls within the past 7 days, the report searches its summary rather than the source index (the index the report originally searched). In most cases the summary has far less data than the source index, and this--along with the fact that the report summary contains precomputed results for portions of the search pipeline--means that the report should complete faster than it did on its initial run.

When you run the accelerated report over a period of time that is only partially covered by its summary, the report does not complete quite as fast because the Splunk software has to go to the source index for the portion of the report time range that does not fall within the summary range.

If the Summary Range setting for a report is 7 Days and you run the report over the last 9 days, the Splunk software only gets acceleration benefits for the portion of the report that covers the past 7 days. The portion of the report that runs over days 8 and 9 will run at normal speed.

Keep this in mind when you set the Summary Range value. If you always plan to run a report over time ranges that exceed the past 7 days, but don't extend further out than 30 days, you should select a Summary Range of 1 month when you set up report acceleration for that report.
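The arithmetic in the 7-day and 9-day example can be sketched in Python. This is a minimal illustration that assumes whole-day ranges measured back from now and ignores summary timespan alignment; `split_report_range` is a hypothetical helper, not a Splunk API.

```python
def split_report_range(report_days: int, summary_days: int) -> tuple[int, int]:
    """Return (accelerated_days, unaccelerated_days) for a report that runs
    over the last `report_days` days against a summary that covers the last
    `summary_days` days. Hypothetical helper; whole days only."""
    if report_days < 0 or summary_days < 0:
        raise ValueError("day counts must be non-negative")
    # The summary covers the most recent days, so the overlap is the smaller range.
    accelerated = min(report_days, summary_days)
    # Anything older than the summary range falls back to the source index.
    unaccelerated = report_days - accelerated
    return accelerated, unaccelerated


# The example from the text: 7 Days summary range, report over the last 9 days.
print(split_report_range(9, 7))    # (7, 2)
# A report that always stays inside a 1-month (about 30-day) summary range.
print(split_report_range(21, 30))  # (21, 0)
```

The second call shows why choosing a Summary Range that fully contains your usual report ranges leaves nothing to run at normal speed.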

How the Splunk platform builds report acceleration summaries

After you enable acceleration for an eligible report, Splunk software determines whether it will build a summary for the report. A summary for an eligible report is generated only when the number of events in the hot bucket covered by the chosen Summary Range is equal to or greater than 100,000. For more information, see the subtopic below titled "Conditions under which the Splunk platform cannot build or update a summary."

When Splunk software determines that it will build a summary for the report, it begins running the report to populate the summary with data. When the summary is complete, the report is run every ten minutes to keep the summary up to date. Each update ensures that the entire configured time range is covered without a significant gap in data. This method of summary building also ensures that late-arriving data will be summarized without complication.

Report acceleration summaries can take time to build and maintain

It can take some time to build a report summary. The creation time depends on the number of events involved, the overall summary range, and the length of the summary timespans (chunks) in the summary.

You can track progress toward summary completion on the Report Acceleration Summaries page in Settings. On the main page you can check the Summary Status to see what percentage of the summary is complete.

Note: Just like ordinary scheduled reports, the reports that automatically populate report acceleration summaries on a regular schedule are managed by the report scheduler. By default, the report scheduler is allowed to allocate up to 25% of its total search bandwidth for report acceleration summary creation.

The report scheduler also runs reports that populate report acceleration summaries at the lowest priority. If these "auto-summarization" reports have a scheduling conflict with user-defined alerts, summary-index reports, or regular scheduled reports, the user-defined reports always run first. This means that you may run into situations where a summary is not created or updated because reports with a higher priority are running.

For more information about the search scheduler, see the topic "Configure the priority of scheduled reports," in the Reporting Manual.

Use parallel summarization to speed up creation and maintenance of report summaries

If you feel that the summaries for some of your accelerated reports are building or updating too slowly, you can turn on parallel summarization for those reports to speed the process up. To do this you add a parameter in savedsearches.conf for the report or reports in question.

Under parallel summarization, multiple search jobs run concurrently to build a report acceleration summary. The same number of concurrent searches also runs on a 10-minute schedule to maintain those summary files. Parallel summarization decreases the amount of time it takes for report acceleration summaries to be built and maintained.

There is a cost for this improvement in summarization search performance. The concurrent searches count against the total number of concurrent search jobs that your Splunk deployment can run, which means that they can cause increased indexer resource usage.

1. Open the savedsearches.conf file that contains the report that you want to update summarization settings for.

2. Locate the stanza for the report.

3. Add auto_summarize.max_concurrent = 2 if that parameter is not present in the stanza.

4. Save your changes.
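If you manage many reports, you might script step 3. The following is a minimal Python sketch using the standard-library `configparser` module, under these assumptions: the stanza consists of simple `key = value` lines, and you accept that `configparser` drops comments and does not understand Splunk-specific syntax such as backslash line continuations. `enable_parallel_summarization` and the sample stanza are hypothetical. Editing the file by hand, as in the steps above, remains the documented approach.

```python
import configparser
import io


def enable_parallel_summarization(conf_text: str, report: str, value: int = 2) -> str:
    """Return conf_text with auto_summarize.max_concurrent added to [report].

    An existing auto_summarize.max_concurrent value is left unchanged,
    matching step 3 ("if that parameter is not present in the stanza").
    """
    # Splunk .conf files use "=" as the key/value delimiter, so ":" is not
    # treated as one here; interpolation is off so "%" in searches is literal.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep key case exactly as written
    parser.read_string(conf_text)
    if not parser.has_section(report):
        raise KeyError(f"no stanza named [{report}]")
    stanza = parser[report]
    if "auto_summarize.max_concurrent" not in stanza:
        stanza["auto_summarize.max_concurrent"] = str(value)
    out = io.StringIO()
    parser.write(out)  # note: comments from the input are not preserved
    return out.getvalue()


# Hypothetical stanza for illustration only.
SAMPLE = """[Errors by sourcetype]
search = index=_internal log_level=ERROR | stats count by sourcetype
auto_summarize = 1
"""

print(enable_parallel_summarization(SAMPLE, "Errors by sourcetype"))
```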

If you turn on parallel summarization for some reports and find that your overall search performance is impacted, either because you have too many searches running at once or your concurrent search limit is reached, you can easily restore the auto_summarize.max_concurrent value of your accelerated reports back to 1.

In general we do not recommend increasing auto_summarize.max_concurrent to a value higher than 2. However, if your Splunk deployment has the capacity for a large amount of search concurrency, you can try setting auto_summarize.max_concurrent to 3 or higher for selected accelerated reports.

See:

- "Accommodate many simultaneous searches" in the Capacity Planning Manual for information about the impact of concurrent searches on search performance.

- "Configure the priority of scheduled reports" for more information about how the concurrent search limit for your implementation is determined.

Summary data is divided into chunks with regular timespans

As Splunk software builds and maintains the summary, it breaks the data up into chunks to ensure statistical accuracy, according to a "timespan" determined automatically, based on the overall summary range. For example, when the summary range for a report is 1 month, a timespan of 1d (one day) might be selected.

A summary timespan represents the smallest time range for which the summary contains statistically accurate data. If you are running a report against a summary that has a one-hour timespan, the time range you choose for the report should be evenly divisible by that timespan if you want the report to use the summarized data. When you are dealing with a 1h timespan, a report that runs over the past 24 hours would work fine, but a report running over the past 90 minutes might not be able to use the summarized data.
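The divisibility rule in the previous paragraph can be expressed as a quick check. This sketch assumes that divisibility is the only factor, which is a simplification; `range_fits_timespan` is a hypothetical name.

```python
from datetime import timedelta


def range_fits_timespan(report_range: timedelta, summary_timespan: timedelta) -> bool:
    """True if report_range is a whole multiple of summary_timespan.

    Simplification: real searches also depend on where the range starts and
    ends relative to timespan boundaries, which this check ignores.
    """
    if summary_timespan <= timedelta(0):
        raise ValueError("summary timespan must be positive")
    return report_range % summary_timespan == timedelta(0)


one_hour = timedelta(hours=1)
print(range_fits_timespan(timedelta(hours=24), one_hour))    # True
print(range_fits_timespan(timedelta(minutes=90), one_hour))  # False
```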

Summaries can have multiple timespans

Report acceleration summaries might be assigned multiple timespans if necessary to make them as searchable as possible. For example, a summary with a summary range of 3 months can have timespans of 1mon and 1d. In addition, extra timespans might be assigned when the summary spans more than one index bucket and the buckets cover very different amounts of time. For example, if a summary spans two buckets, and the first bucket spans two months and the next bucket spans 40 minutes, the summary will have chunks with 1d and 1m timespans.

You can manually set summary timespans (but we don't recommend it)

You can set summary timespans manually at the report level in savedsearches.conf by changing the value of the auto_summarize.timespan parameter. If you do set your summary timespans manually, keep in mind that very small timespans can result in extremely slow summary creation times, especially if the summary range is long. On the other hand, large timespans can result in quick-building summaries that cannot be used by reports with short time ranges. In almost all cases, for optimal performance and usability it's best to let Splunk software determine summary timespans.

The way that Splunk software gathers data for accelerated reports can result in a lot of files over a very short amount of time

Because report acceleration summaries gather information for multiple timespans, many files can be created for the same summary over a short amount of time. If file and folder management is an issue for you, this is something to be aware of.

For every accelerated report and search head combination in your system, you get:

- 2 files (data + info) for each 1-day span

- 2 files (data + info) for each 1-hour span

- 2 files (data + info) for each 10-minute span

- SOMETIMES: 2 files (data + info) for each 1-minute span

So if you have an accelerated report with a 30-day range and a 10-minute granularity, the result is:

(30x1 + 30x24 + 30x144)x2 = 10,140 files

If you switch to a 1-minute granularity, the result is:

(30x1 + 30x24 + 30x144 + 30x1440)x2 = 96,540 files
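The file counts above follow from counting the chunks for each span and multiplying by two (one data file and one info file). A small Python sketch reproduces them; the span table and `summary_file_count` are illustrative, not Splunk internals. The last line multiplies by the 12 Deployment Monitor reports mentioned below.

```python
# Length in minutes of each span that can produce summary files.
SPAN_MINUTES = {"1d": 1440, "1h": 60, "10m": 10, "1m": 1}
FILES_PER_CHUNK = 2  # one data file plus one info file


def summary_file_count(range_days: int, spans: list[str]) -> int:
    """Estimate files for one accelerated report and search head combination."""
    minutes_in_range = range_days * 1440
    chunks = sum(minutes_in_range // SPAN_MINUTES[span] for span in spans)
    return chunks * FILES_PER_CHUNK


ten_minute = summary_file_count(30, ["1d", "1h", "10m"])
one_minute = summary_file_count(30, ["1d", "1h", "10m", "1m"])
print(ten_minute, one_minute)            # 10140 96540
# 12 accelerated reports, as with Deployment Monitor:
print(12 * ten_minute, 12 * one_minute)  # 121680 1158480
```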

If you use Deployment Monitor, which ships with 12 accelerated reports by default, an immediate backfill could generate between 122k and 1.2 million files on each indexer in $SPLUNK_HOME/var/lib/splunk/_internaldb/summary, for each search head on which it is enabled.

Where report acceleration summaries are created and stored

The Splunk software creates report acceleration summaries on the indexer, parallel to the bucket or buckets that cover the range of time over which the summary spans. For example, for the "index1" index, they reside under $SPLUNK_HOME/var/lib/splunk/index1/summary.

Data model acceleration summaries are stored in the same manner, but in directories labeled datamodel_summary instead of summary.

By default, indexer clusters do not replicate report acceleration and data model acceleration summaries. This means that only primary bucket copies will have associated summaries.

If your peer nodes are running version 6.4 or higher, you can configure the cluster master node so that your indexer clusters replicate report acceleration summaries. All searchable bucket copies will then have associated summaries. This is the recommended behavior.

See How indexer clusters handle report and data model acceleration summaries in the Managing Indexers and Clusters of Indexers manual.

Configure size-based retention for report acceleration summaries

Do you set size-based retention limits for your indexes so they do not take up too much disk storage space? By default, report acceleration summaries can theoretically take up an unlimited amount of disk space. This can be a problem if you're also locking down the maximum data size of your indexes or index volumes. The good news is that you can optionally configure similar retention limits for your report acceleration summaries.

Note: Although report acceleration summaries are unbounded in size by default, they are tied to raw data in your warm and hot index buckets and will age along with it. When events pass out of the hot/warm buckets into cold buckets, they are likewise removed from the related summaries.

Important: Before attempting to configure size-based retention for your report acceleration summaries, you should first understand how to use volumes to configure limits on index size across indexes, as many of the principles are the same. For more information, see "Configure index size" in Managing Indexers and Clusters.

By default, report acceleration summaries live alongside the hot and warm buckets in your index at homePath/../summary/. In other words, if in indexes.conf the homePath for the hot and warm buckets in your index is:

homePath = /opt/splunk/var/lib/splunk/index1/db

Then summaries that map to buckets in that index will be created at:

summaryHomePath = /opt/splunk/var/lib/splunk/index1/summary
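The default location is the homePath with its last component replaced by summary. As a sketch, assuming POSIX-style paths, Python's `posixpath` module can compute it. Note that `normpath` collapses `..` lexically, so the result can differ from the real location if `db` is a symbolic link. `default_summary_home` is a hypothetical helper.

```python
import posixpath


def default_summary_home(home_path: str) -> str:
    """Resolve homePath/../summary to a normalized path (POSIX paths assumed)."""
    return posixpath.normpath(posixpath.join(home_path, "..", "summary"))


print(default_summary_home("/opt/splunk/var/lib/splunk/index1/db"))
# /opt/splunk/var/lib/splunk/index1/summary
```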

Here are the steps you take to set up size-based retention for the summaries in that index. All of the configurations described are made within indexes.conf.

1. Review your volume definitions and identify a volume (or volumes) that will be the home for your report acceleration summary data.

- If the right volume doesn't exist, create it.

- If you want to piggyback on a preexisting volume that controls your indexed raw data, you might have that volume reference the filesystem that hosts your hot and warm bucket directories, because your report acceleration summaries will live alongside it.

- However, you could also place your report acceleration summaries in their own filesystem if you want. The only rule here is: You can only reference one filesystem per volume, but you can reference multiple volumes per filesystem.

2. For the volume that will be the home for your report acceleration data, add the maxVolumeDataSizeMB parameter to set the volume's maximum size.

- This lets you manage size-based retention for report acceleration summary data across your indexes.

3. Update your index definitions.

- Set the summaryHomePath for each index that deals with summary data. Ensure that the path is referencing the summary data volume that you identified in Step 1.

summaryHomePath overrides the default path for the summaries. Its value should complement the homePath for the hot and warm buckets in the indexes. For example, here's the summaryHomePath that complements the homePath value identified above:

summaryHomePath = /opt/splunk/var/lib/splunk/index1/summary

This example configuration shows data size limits being set up on a global, per-volume, and per-index basis.

#########################
# Global settings
#########################

# Inheritable by all indexes: the hot/warm storage (homePath) of an index
# cannot exceed 1 TB. Individual indexes can override this setting. The global
# summaryHomePath setting indicates that all indexes that do not explicitly
# define a summaryHomePath value will write report acceleration summaries
# to the small_indexes volume.
[global]
homePath.maxDataSizeMB = 1000000
summaryHomePath = volume:small_indexes/$_index_name/summary

#########################
# Volume definitions
#########################

# This volume is designed to contain up to 100GB of summary data and other
# low-volume information.
[volume:small_indexes]
path = /mnt/small_indexes
maxVolumeDataSizeMB = 100000

# This volume handles everything else. It can contain up to 50
# terabytes of data.
[volume:large_indexes]
path = /mnt/large_indexes
maxVolumeDataSizeMB = 50000000

#########################
# Index definitions
#########################

# The report_acceleration and rare_data indexes together are limited to 100GB,
# per the small_indexes volume.
[report_acceleration]
homePath = volume:small_indexes/report_acceleration/db
coldPath = volume:small_indexes/report_acceleration/colddb
thawedPath = $SPLUNK_DB/summary/thaweddb
summaryHomePath = volume:small_indexes/report_acceleration/summary
maxHotBuckets = 2

[rare_data]
homePath = volume:small_indexes/rare_data/db
coldPath = volume:small_indexes/rare_data/colddb
thawedPath = $SPLUNK_DB/rare_data/thaweddb
summaryHomePath = volume:small_indexes/rare_data/summary
maxHotBuckets = 2

# Splunk constrains the main index and any other large volume indexes that
# share the large_indexes volume to 50TB, separately from the 100GB of the
# small_indexes volume. Note that the main index uses summaryHomePath to
# direct its summary data to the small_indexes volume.
[main]
homePath = volume:large_indexes/main/db
coldPath = volume:large_indexes/main/colddb
thawedPath = $SPLUNK_DB/main/thaweddb
summaryHomePath = volume:small_indexes/main/summary
maxDataSize = auto_high_volume
maxHotBuckets = 10

# Some indexes reference the large_indexes volume with summaryHomePath,
# which means their summaries are created in that volume. Others do not
# explicitly reference a summaryHomePath, which means that the Splunk platform
# directs their summaries to the small_indexes volume, per the [global] stanza.
[idx1_large_vol]
homePath = volume:large_indexes/idx1_large_vol/db
coldPath = volume:large_indexes/idx1_large_vol/colddb
thawedPath = $SPLUNK_DB/idx1_large/thaweddb
summaryHomePath = volume:large_indexes/idx1_large_vol/summary
maxDataSize = auto_high_volume
maxHotBuckets = 10
frozenTimePeriodInSecs = 2592000

[other_data]
homePath = volume:large_indexes/other_data/db
coldPath = volume:large_indexes/other_data/colddb
thawedPath = $SPLUNK_DB/other_data/thaweddb
maxDataSize = auto_high_volume
maxHotBuckets = 10
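The comments in this example describe an inheritance rule: an index uses its own summaryHomePath if it sets one, otherwise the [global] value, with $_index_name replaced by the index name. The Python sketch below models only that rule for three of the example indexes. It is an illustration, not how Splunk software layers its configuration files, and the dictionaries are hand-copied subsets of the stanzas in the example.

```python
GLOBAL = {"summaryHomePath": "volume:small_indexes/$_index_name/summary"}

# Subsets of the example index stanzas, reduced to the one key of interest.
INDEXES = {
    "main": {"summaryHomePath": "volume:small_indexes/main/summary"},
    "idx1_large_vol": {"summaryHomePath": "volume:large_indexes/idx1_large_vol/summary"},
    "other_data": {},  # no explicit summaryHomePath
}


def effective_summary_home(index: str) -> str:
    stanza = INDEXES[index]
    # An explicit per-index setting wins; otherwise inherit from [global].
    value = stanza.get("summaryHomePath", GLOBAL["summaryHomePath"])
    # $_index_name stands for the name of the index being configured.
    return value.replace("$_index_name", index)


def volume_of(path: str) -> str:
    # "volume:<name>/..." -> "<name>"
    return path.split(":", 1)[1].split("/", 1)[0]


for name in INDEXES:
    path = effective_summary_home(name)
    print(f"{name}: {path} (volume {volume_of(path)})")
```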

When a report acceleration summary volume reaches its size limit, the Splunk volume manager removes the oldest summary in the volume to make room. When the volume manager removes a summary, it places a marker file inside its corresponding bucket. This marker file tells the summary generator not to rebuild the summary.
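The eviction behavior in the previous paragraph amounts to oldest-first removal until the volume fits. This Python sketch is a toy model under stated assumptions: each summary is a record with a creation time and a size, and a boolean flag stands in for the marker file. The volume manager's real bookkeeping is not described in this topic, so treat every name here as hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    bucket: str                   # hypothetical bucket identifier
    created: int                  # creation time, e.g. epoch seconds
    size_mb: int
    do_not_rebuild: bool = False  # stands in for the marker file


def enforce_volume_limit(summaries, max_volume_mb):
    """Evict oldest summaries until the volume fits; return (kept, removed)."""
    total = sum(s.size_mb for s in summaries)
    removed = []
    for s in sorted(summaries, key=lambda s: s.created):
        if total <= max_volume_mb:
            break
        total -= s.size_mb
        s.do_not_rebuild = True  # the summary generator will not rebuild it
        removed.append(s)
    removed_ids = {id(s) for s in removed}
    kept = [s for s in summaries if id(s) not in removed_ids]
    return kept, removed


volume = [Summary("b1", 100, 40), Summary("b2", 200, 30), Summary("b3", 300, 50)]
kept, removed = enforce_volume_limit(volume, max_volume_mb=100)
print([s.bucket for s in kept], [s.bucket for s in removed])  # ['b2', 'b3'] ['b1']
```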

Data model acceleration summaries have a default volume called _splunk_summaries that is referenced by all indexes for the purpose of data model acceleration summary size-based retention. By default this volume has no maxVolumeDataSizeMB setting, meaning it has infinite retention.

You can use this preexisting volume to manage data model acceleration summaries and report acceleration summaries in one place. You would need to:

- Have the summaryHomePath for your report acceleration summaries reference the _splunk_summaries volume.

- Set a maxVolumeDataSizeMB value for _splunk_summaries.

For more information about size-based retention for data model acceleration summaries, see "Accelerate data models" in this manual.

Multiple reports for a single summary

A single report summary can be associated with multiple searches when the searches meet the following two conditions.

- The searches are identical up to and including the first reporting command.

- The searches are associated with the same app.

Searches that meet the first condition, but which belong to different apps, cannot share the same summary.

For example, these two reports use the same report acceleration summary.

sourcetype=access_* status=2* | stats count by price

sourcetype=access_* status=2* | stats count by price | eval discount = price/2

These two reports use different report acceleration summaries.

sourcetype=access_* status=2* | stats count by price

sourcetype=access_* status=2* | timechart by price

Two reports that are identical except for syntax differences that do not cause one to output different results than the other can also use the same summary.

These two searches use the same report acceleration summary.

sourcetype = access_* status=2* | fields - clientip, bytes | stats count by price

sourcetype = access_* status=2* | fields - bytes, clientip | stats count by price
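To make the sharing rule concrete, here is a rough Python sketch that builds a key from the app and the search text up to and including the first transforming command, with whitespace normalized. It is deliberately simplistic: it splits on every `|` (so a pipe inside a quoted string would confuse it), uses a partial, assumed list of transforming commands, and does not recognize equivalent rewrites such as the reordered `fields - bytes, clientip` example. `summary_key` and `can_share_summary` are hypothetical.

```python
from typing import Optional, Tuple

# Partial list for illustration only; Splunk has more transforming commands.
TRANSFORMING = {"chart", "timechart", "stats", "top", "rare"}


def summary_key(search: str, app: str) -> Optional[Tuple[str, str]]:
    """(app, normalized search through the first transforming command), or None."""
    segments = [" ".join(seg.split()) for seg in search.split("|")]
    for i, seg in enumerate(segments):
        command = seg.split(" ", 1)[0]
        if command in TRANSFORMING:
            return app, " | ".join(segments[: i + 1])
    return None  # no transforming command: the search does not qualify


def can_share_summary(a: Tuple[str, str], b: Tuple[str, str]) -> bool:
    """a and b are (search, app) pairs."""
    key_a, key_b = summary_key(*a), summary_key(*b)
    return key_a is not None and key_a == key_b


base = "sourcetype=access_* status=2* | stats count by price"
print(can_share_summary((base, "search"), (base + " | eval discount = price/2", "search")))  # True
print(can_share_summary((base, "search"), ("sourcetype=access_* status=2* | timechart by price", "search")))  # False
print(can_share_summary((base, "search"), (base, "other_app")))  # False
```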

You can also run non-saved searches against the summary, as long as the basic search matches the populating saved search up to the first reporting command and the search time range fits within the summary span.

You can see which searches are associated with your summaries by navigating to Settings > Report Acceleration Summaries. See "Use the Report Acceleration Summaries page" in this topic.

Conditions under which the Splunk platform cannot build or update a summary

Splunk software cannot build a summary for a report when either of the following conditions exist.

- The number of events in the hot bucket covered by the chosen Summary Range is fewer than 100,000. When this condition exists, you see a Summary Status warning that says Not enough data to summarize.

- Splunk software estimates that the completed summary will exceed 10% of the total bucket size in your deployment. When it makes this estimation, it suspends the summary for 24 hours. You will see a Summary Status of Suspended.

You can see the Summary Status for a summary in Settings > Report Acceleration Summaries.

If you define a summary and the Splunk software does not create it because these conditions exist, the software checks periodically to see if conditions improve. When these conditions are resolved, Splunk software begins creating or updating the summary.
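The two blocking conditions can be summarized as a decision function. This is a hypothetical sketch that uses the thresholds stated above (100,000 hot-bucket events and 10% of bucket size); the order in which Splunk software evaluates the conditions is an assumption.

```python
MIN_HOT_EVENTS = 100_000  # fewer hot-bucket events than this: nothing is built
MAX_SIZE_RATIO = 0.10     # projected summary above 10% of bucket size: suspended


def summary_status(hot_events: int, projected_summary_mb: float, bucket_mb: float) -> str:
    """Hypothetical decision helper; the real checks run inside Splunk software."""
    if hot_events < MIN_HOT_EVENTS:
        return "Not enough data to summarize"
    if projected_summary_mb > MAX_SIZE_RATIO * bucket_mb:
        return "Suspended"  # rechecked after the suspend period (24 hours by default)
    return "Building summary"


print(summary_status(50_000, 5, 1_000))     # Not enough data to summarize
print(summary_status(250_000, 150, 1_000))  # Suspended
print(summary_status(250_000, 40, 1_000))   # Building summary
```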

How can you tell if a report is using its summary?

The obvious clue that a report is using its summary is if you run it and find that its report performance has improved (it completes faster than it did before).

But if that's not enough, or if you aren't sure whether there's a performance improvement, you can check the search job properties for a debug message that indicates whether the report is using a specific report acceleration summary. See View search job properties in the Search Manual. Here's an example:

DEBUG: [thething] Using summaries for search, summary_id=246B0E5B-A8A2-484E-840C-78CB43595A84_search_admin_b7a7b033b6a72b45, maxtimespan=

In this example, the last string of characters, b7a7b033b6a72b45, corresponds to the Summary ID displayed on the Report Acceleration Summaries page.
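If you collect job properties in bulk, you might extract that value programmatically. A minimal Python sketch, assuming the message keeps the `summary_id=...,` layout shown in the example and that the Summary ID is the last underscore-separated field; `summary_id_from_debug` is a hypothetical helper.

```python
import re
from typing import Optional


def summary_id_from_debug(line: str) -> Optional[str]:
    """Return the last underscore-separated field of summary_id, or None."""
    match = re.search(r"summary_id=([^,\s]+)", line)
    if match is None:
        return None
    return match.group(1).rsplit("_", 1)[-1]


DEBUG_LINE = (
    "DEBUG: [thething] Using summaries for search, "
    "summary_id=246B0E5B-A8A2-484E-840C-78CB43595A84_search_admin_b7a7b033b6a72b45, "
    "maxtimespan="
)
print(summary_id_from_debug(DEBUG_LINE))  # b7a7b033b6a72b45
```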

Use the Report Acceleration Summaries page

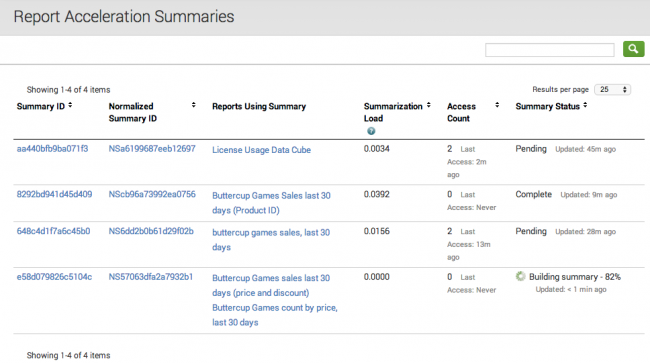

You can review your report acceleration summaries and even manage various aspects of them with the Report Acceleration Summaries page in Settings. Go to Settings > Report Acceleration Summaries.

The main Report Acceleration Summaries page enables you to see basic information about the summaries that you have permission to view.

The Summary ID and Normalized Summary ID columns display the unique hashes that are assigned to those summaries. The IDs are derived from the remote search string for the report. They are used as part of the directory name that is created for the summary files. Click a summary ID or normalized summary ID to view summary details and perform summary management actions. For more information about this detail view, see the subtopic "Review summary details," below.

The Reports Using Summary column lists the saved reports that are associated with each of your summaries. It indicates that each report associated with a particular summary will get report acceleration benefits from that summary. Click on a report title to drill down to the detail page for that report.

Check Summarization Load to get an idea of the effort that Splunk software has to put into updating the summary. It's calculated by dividing the number of seconds it takes to run the populating report by the interval of the populating report. So if the report runs every 10 minutes (600 seconds) and takes 30 seconds to run, the summarization load is 0.05. If the summarization load is high and the Access Count for the summary shows that the summary is rarely used or hasn't been used in a long time, you might consider deleting the summary to reduce the strain on your system.
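The Summarization Load calculation is a single division. A trivial Python version with the worked example from this paragraph; `summarization_load` is a hypothetical name.

```python
def summarization_load(run_seconds: float, interval_seconds: float) -> float:
    """Seconds the populating report takes, divided by its run interval."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    return run_seconds / interval_seconds


# Report runs every 10 minutes (600 seconds) and takes 30 seconds.
print(summarization_load(30, 600))  # 0.05
```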

The Summary Status column reports on the general state of the summary and tells you when it was last updated with new data. Possible status values are Summarization not started, Pending, Building summary, Complete, Suspended, and Not enough data to summarize. The Pending and Building summary statuses can display the percentage of the summary that is complete at the moment. If you want to update a summary to the present moment, click its summary ID to go to its detail page and click Update to run a new summary-populating report.

If the Summary Status is Pending it means that the summary may be slightly outdated and the search head is about to schedule a new update job for it.

If the Summary Status is Suspended it means that the report is not worth summarizing because it creates a summary that is too large. Splunk software projects the size of the summary that a report can create. If it determines that a summary will be larger than 10% of the index buckets it spans, it suspends that summary for 24 hours. There's no point in creating a summary, for example, if the summary contains 90% of the data in the full index.

You cannot override summary suspension, but you can adjust the length of time that summaries are suspended by changing the value of the auto_summarize.suspend_period attribute in savedsearches.conf.

If the Summary Status reads Not enough data to summarize, it means that Splunk software is not currently generating or updating a summary because the reports associated with it are returning fewer than 100,000 events from the hot buckets covered by the summary range. For more information, see the subtopic above titled "Conditions under which the Splunk platform cannot build or update a summary."

Review summary details

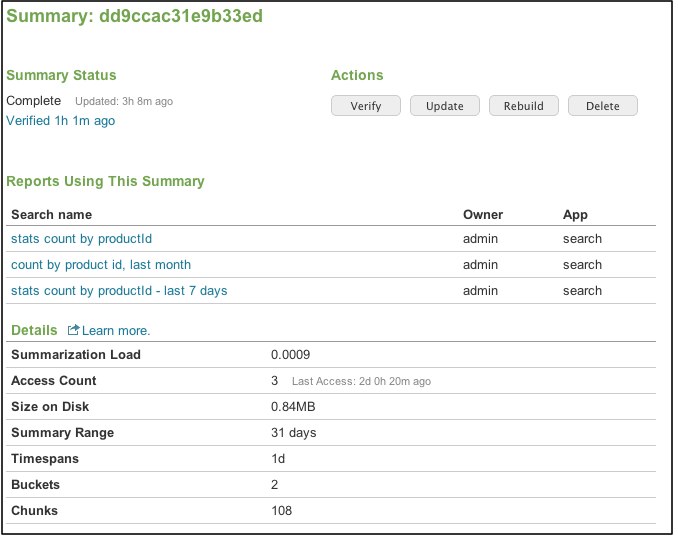

Use the summary details page to view detailed information about a specific summary and to initiate actions for that summary. To get to this page, click a Summary ID on the Report Acceleration Summaries page in Settings.

Summary Status

Under Summary Status you'll see basic status information for the summary. It mirrors the Summary Status listed on the Report Acceleration Summaries page (see above) but also provides information about the verification status of the summary.

If you want to update a summary to the present moment, click the Update button under Actions to kick off a new summary-populating report.

No verification status appears here if you've never initiated verification for the summary. While a verification is in progress, this status shows the percentage of the verification that is complete. Otherwise, it shows the result of the most recent verification attempt, either Verified or Failed to verify, along with an indication of how long ago that attempt took place.

For more information about summary verification, see "Verify a summary," below.

Reports using the summary

The Reports Using This Summary section lists the reports that are associated with the summary, along with their owner and home app. Click on a report title to drill down to the detail page for that report. Similar reports (reports with search strings that all transform the same root search with different transforming commands, for example) can use the same summary.

Summary details

The Details section provides a set of metrics about the summary.

Summarization Load and Access Count are mirrored from the main Report Acceleration Summaries page. See the subtopic "Use the Report Acceleration Summaries page," above, for more information.

Size on Disk shows you how much space the summary takes up in terms of storage. You can use this metric along with the Summarization Load and Access Count to determine which summaries ought to be deleted.

Note: If the Size value stays at 0.00MB, Splunk software is not currently generating this summary. Either the reports associated with it don't have enough events (at least 100,000 hot bucket events are required), or the projected summary size is over 10% of the index buckets that the report spans. Splunk software periodically checks this report and automatically creates a summary when the report meets the criteria for summary creation.

Summary range is the range of time spanned by the summary, always relative to the present moment. You set this up when you define the report that populates the summary. For more information, see the subtopic "Set report acceleration summary time ranges," above.

Timespans displays the size of the data chunks that make up the summary. A summary timespan represents the smallest time range for which the summary contains statistically accurate data. If you run a report against a summary that has a one-hour timespan, the time range you choose for the report should be evenly divisible by that timespan if you want to get good results. For example, with a 1h timespan, a report over the past 24 hours works fine, but a report over the past 90 minutes might be problematic. See the subsection "How the Splunk platform builds summaries," above, for more information.
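The divisibility rule can be checked with Python's `timedelta` arithmetic. This is an illustrative helper (`fits_timespan` is a hypothetical name, not part of Splunk software); it checks only that the report's time range is a whole multiple of the summary timespan, which is the rule stated above.

```python
from datetime import timedelta


def fits_timespan(report_range: timedelta, summary_timespan: timedelta) -> bool:
    """True if the report's time range is a whole multiple of the summary timespan."""
    if summary_timespan <= timedelta(0):
        raise ValueError("summary_timespan must be positive")
    # timedelta % timedelta returns the leftover duration.
    return report_range % summary_timespan == timedelta(0)


one_hour = timedelta(hours=1)
print(fits_timespan(timedelta(hours=24), one_hour))    # True
print(fits_timespan(timedelta(minutes=90), one_hour))  # False (30 minutes left over)
```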

Buckets shows you how many index buckets the summary spans, and Chunks tells you how many data chunks make up the summary. Both of these metrics are mostly informational, though they can help you troubleshoot issues with your summary.

Verify a summary

At some point you may find that an accelerated report seems to be returning results that don't fit with the results the report returned when it was first created. This can happen when certain aspects of the report change without your knowledge, such as a change in the definition of a tag, event type, or field extraction rule used by the report.

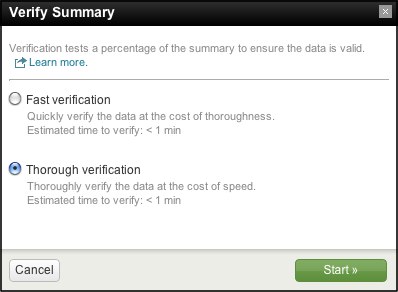

If you suspect that this has happened with one of your accelerated reports, go to the detail page for the summary with which the report is associated. You can run a verification process that examines a subset of the summary and verifies that all of the examined data is consistent. If it finds that the data is inconsistent, it notifies you that the verification has failed.

For example, say you have a report that uses an event type, netsecurity, which is associated with a specific kind of network security event. You enable acceleration for this report, and Splunk software builds a summary for it. At some later point, the definition of the event type netsecurity is changed so that it finds an entirely different set of events, which means your summary is now being populated by a different set of data than it was before. You notice that the accelerated report seems to return different results, so you open its summary from the Report Acceleration Summaries page in Settings and run the verification process. The summary fails verification, so you begin investigating the root report to find out what happened.

Ideally the verification process should only have to look at a subset of the summary data in order to save time; a full verification of the entire summary will take as long to complete as the building of the summary itself. But in some cases a more thorough verification is required.

Clicking Verify opens a Verify Summary dialog box. Verify Summary provides two verification options:

- A Fast verification, which is set to quickly verify a small subset of the summary data at the cost of thoroughness.

- A Thorough verification, which is set to thoroughly review the summary data at the cost of speed.

In both cases, the estimated verification time is provided.

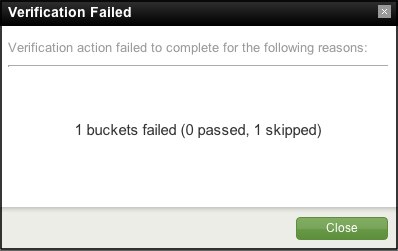

After you click Start to kick off the verification process, you can follow its progress on the detail page for your summary under Summary Status. When the verification process completes, this is where you'll be notified whether it succeeded or failed. Either way, you can click the verification status to see details about what happened.

When verification fails, the Verification Failed dialog can tell you what went wrong.

During the verification process, hot buckets and buckets that are in the process of building are skipped.
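The verification behavior described in this subtopic (examine a subset of the summary, skip hot and still-building buckets, and fail on any inconsistency) can be sketched as follows. This is a hypothetical model, not Splunk's implementation: the `Bucket` record, the dictionary comparison, and random sampling are assumptions made for illustration. A small `sample_fraction` plays the role of a Fast verification, and `1.0` plays the role of a Thorough one.

```python
import random
from dataclasses import dataclass


@dataclass
class Bucket:
    # Hypothetical view of one index bucket covered by a summary.
    bucket_id: str
    state: str             # assumed values: "hot", "building", or "warm"
    summary_result: dict   # what the summary holds for this bucket
    raw_result: dict       # what rerunning the report on raw events returns


def verify_summary(buckets, sample_fraction, seed=None):
    """Compare summary and raw results on a sample of eligible buckets.

    Returns (verified, mismatched_bucket_ids).
    """
    # Hot buckets and buckets still being built are skipped.
    eligible = [b for b in buckets if b.state not in ("hot", "building")]
    if not eligible:
        return True, []  # nothing checkable; treated as passing in this sketch
    rng = random.Random(seed)
    k = min(len(eligible), max(1, round(len(eligible) * sample_fraction)))
    sample = rng.sample(eligible, k)
    mismatched = [b.bucket_id for b in sample if b.summary_result != b.raw_result]
    return not mismatched, mismatched


buckets = [
    Bucket("h1", "hot", {"count": 1}, {"count": 2}),   # skipped despite mismatch
    Bucket("w1", "warm", {"count": 5}, {"count": 5}),
    Bucket("w2", "warm", {"count": 7}, {"count": 9}),  # inconsistent data
]
print(verify_summary(buckets, sample_fraction=1.0))  # (False, ['w2'])
```

The trade-off mirrors the text: a larger sample finds more inconsistencies but takes longer, and a full check costs about as much as building the summary.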

When a summary fails verification, you can review the root search string (or strings) to see if it can be fixed to provide correct results. Once the report is working, click Rebuild to rebuild the summary so it is entirely consistent. Or, if you're fine with the report as-is, just rebuild the summary. And if you'd rather start over from scratch, delete the summary and start over with an entirely new report.

Update, rebuild, and delete summaries

Click Update if the Summary Status shows that the summary has not been updated in some time and you would like to make it current. Update kicks off a standard summary-update report that pulls in recent events, so that the summary is not missing, for example, the last few hours of data.

Note: When a summary's Summary Status is Suspended, you cannot use Update to bring it current.

Click Rebuild to rebuild the summary from scratch. You may want to do this if you suspect there has been data loss due to a system crash or similar mishap, or if the summary failed verification and you have either fixed the underlying reports or decided that the summary is acceptable with the data it currently brings in.

Click Delete to remove the summary from the system (and not regenerate summaries in the future). You may want to do this if the summary is used infrequently and is taking up space that could better be used for something else. You can use the Searches and Reports page in Settings to re-enable report acceleration for the report or reports associated with the summary.


This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13, 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
