What happens when a peer node goes down

A peer node can go down either intentionally (by invoking the CLI offline command, as described in Take a peer offline) or unintentionally (for example, by a server crashing).

No matter how a peer goes down, the manager coordinates remedial activities to recreate a full complement of bucket copies. This process is called bucket fixing. The manager keeps track of which bucket copies are on each node and what their states are (primacy, searchability). When a peer goes down, the manager can therefore instruct the remaining peers to fix the cluster's set of buckets, with the aim of returning to the state where the cluster has:

- Exactly one primary copy of each bucket (the valid state). In a multisite cluster, the valid state means that there is a primary copy on each site that supports search affinity, based on the site_search_factor.

- A full set of searchable copies for each bucket, matching the search factor. In the case of a multisite cluster, the number of searchable bucket copies must also fulfill the site-specific requirements for the search factor.

- A full set of copies (searchable and non-searchable combined) for each bucket, matching the replication factor (the complete state). In the case of a multisite cluster, the number of bucket copies must also fulfill the site-specific requirements for the replication factor.

Barring catastrophic failure of multiple nodes, the manager can usually recreate a valid cluster. If the cluster (or the site in a multisite cluster) has a search factor of at least 2, it can do so almost immediately. Whether or not a manager can also recreate a complete cluster depends on the number of peers still standing compared to the replication factor. At least replication factor number of peers must remain standing for a cluster to be made complete. In the case of a multisite cluster, each site must have available at least the number of peers specified for it in the site replication factor.
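The peer-count condition for completeness can be sketched as a simple comparison. This is only an illustration of the rule stated above, not manager code; the function names and the per-site dictionaries are hypothetical, and the multisite check ignores any additional total-count requirement that a site replication factor might carry.

```python
def can_become_complete(standing_peers, replication_factor):
    """Single-site rule: at least replication_factor peers must remain standing."""
    return standing_peers >= replication_factor


def multisite_can_become_complete(standing_by_site, required_by_site):
    """Multisite rule (simplified): every site needs at least as many standing
    peers as the number specified for it in the site replication factor.

    Both arguments are hypothetical dicts mapping site name -> peer count.
    """
    return all(
        standing_by_site.get(site, 0) >= needed
        for site, needed in required_by_site.items()
    )


print(can_become_complete(standing_peers=4, replication_factor=3))  # True
print(can_become_complete(standing_peers=2, replication_factor=3))  # False
```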

The time that the cluster takes to return to a complete state can be significant, because it must first stream buckets from one peer to another and make non-searchable bucket copies searchable. See Estimate the cluster recovery time when a peer gets decommissioned for more information.

Besides the remedial steps to fix the buckets, a few other key events happen when a node goes down:

- The manager rolls the generation and creates a new generation ID, which it communicates to both peers and search heads when needed.

- Any peers with copies of the downed node's hot buckets roll those copies to warm.

When a peer node gets taken offline intentionally

The splunk offline command removes a peer from the cluster and then stops the peer. It takes the peer down gracefully, allowing any in-progress searches to complete while quickly returning the cluster to a fully searchable state.

There are two versions of the splunk offline command:

- splunk offline: This is the fast version of the splunk offline command. The peer goes down quickly, after a maximum of five minutes, even if searches or remedial activities are still in progress.

- splunk offline --enforce-counts: This is the enforce-counts version of the command, which is designed to validate that the cluster has returned to the complete state. If you invoke the enforce-counts flag, the peer does not go down until all remedial activities are complete.

For detailed information on running the splunk offline command, see Take a peer offline.

The fast offline command

The fast version of the splunk offline command has this syntax:

splunk offline

This version of the command causes the following sequence of actions to occur:

1. Partial shutdown. The peer immediately undergoes a partial shutdown. The peer stops accepting both external inputs and replicated data. It continues to participate in searches for the time being.

2. Primacy reassignment. The manager reassigns primacy from any primary bucket copies on the peer to available searchable copies of those buckets on other peers (on the same site, if a multisite cluster). At the end of this step, which should take just a few moments if the cluster (or the cluster site) has a search factor of at least 2, the cluster returns to the valid state.

During this step, the peer's status is ReassigningPrimaries.

3. Generation ID rolling. The manager rolls the cluster's generation ID. At the end of this step, the peer no longer joins in new searches, but it continues to participate in any in-progress searches.

4. Full shutdown. The peer shuts down completely after a maximum of five minutes, or when in-progress searches and primacy reassignment activities complete, whichever comes first. It no longer sends heartbeats to the manager. For details on the conditions that precede full shutdown, see The fast offline process.

5. Restart count. After the peer shuts down, the manager waits the length of the restart_timeout attribute (60 seconds by default), set in server.conf. If the peer comes back online within this period, the manager rebalances the cluster's set of primary bucket copies but no further remedial activities occur.

During this step, the peer's status is Restarting. If the peer does not come back up within the timeout period, its status changes to Down.

6. Remedial activities. If the peer does not restart within the restart_timeout period, the manager initiates actions to fix the cluster buckets and return the cluster to a complete state. It tells the remaining peers to replicate copies of the buckets on the offline peer to other peers. It also compensates for any searchable copies of buckets on the offline peer by instructing other peers to make non-searchable copies of those buckets searchable. At the end of this step, the cluster returns to the complete state.

If taking the peer offline leaves fewer remaining peers than the replication factor, the cluster cannot finish this step and cannot return to the complete state. For details on how bucket-fixing works, see Bucket-fixing scenarios.

In the case of a multisite cluster, remedial activities take place within the same site as the offline peer, if possible. For details, see Bucket-fixing in multisite clusters.
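The restart-count decision in steps 5 and 6 can be pictured as a small timing check. The sketch below is a toy model, not Splunk code: the function names are hypothetical, the 60-second default is the value quoted above for restart_timeout, and treating a return at exactly the timeout as "in time" is an assumption.

```python
DEFAULT_RESTART_TIMEOUT = 60  # seconds; the default quoted above for restart_timeout


def peer_status_while_waiting(elapsed_seconds, restart_timeout=DEFAULT_RESTART_TIMEOUT):
    """Status of a peer that has shut down and has not yet come back."""
    return "Restarting" if elapsed_seconds <= restart_timeout else "Down"


def manager_action(seconds_until_return, restart_timeout=DEFAULT_RESTART_TIMEOUT):
    """Pick the manager's follow-up once the restart window resolves.

    seconds_until_return is None when the peer never comes back.
    """
    if seconds_until_return is not None and seconds_until_return <= restart_timeout:
        # Peer returned in time: only primaries are rebalanced.
        return "rebalance primaries"
    # Peer stayed away: the manager starts bucket fixing.
    return "fix buckets"


print(manager_action(20))    # rebalance primaries
print(manager_action(None))  # fix buckets
```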

The enforce-counts offline command

The enforce-counts version of the splunk offline command has this syntax:

splunk offline --enforce-counts

This version of the splunk offline command runs only if certain conditions are met. In particular, it will not run if taking the peer offline would leave fewer peers in the cluster than the replication factor. See The enforce-counts offline process for the set of conditions necessary to run this command.

This version of the command initiates a process called decommissioning, during which the following sequence of actions occurs:

1. Partial shutdown. The peer immediately undergoes a partial shutdown. The peer stops accepting both external inputs and replicated data. It continues to participate in searches for the time being.

2. Primacy reassignment. The manager reassigns primacy from any primary bucket copies on the peer to available searchable copies of those buckets on other peers (on the same site, if a multisite cluster). At the end of this step, which should take just a few moments if the cluster (or the cluster site) has a search factor of at least 2, the cluster returns to the valid state.

During this step, the peer's status is ReassigningPrimaries.

3. Generation ID rolling. The manager rolls the cluster's generation ID. At the end of this step, the peer no longer joins in new searches, but it continues to participate in any in-progress searches.

4. Remedial activities. The manager initiates actions to fix the cluster buckets so that the cluster returns to a complete state. It tells the remaining peers to replicate copies of the buckets on the offline peer to other peers. It also compensates for any searchable copies of buckets on the offline peer by instructing other peers to make non-searchable copies of those buckets searchable. At the end of this step, the cluster returns to the complete state.

During this step, the peer's status is Decommissioning. For details on how bucket-fixing works, see Bucket-fixing scenarios.

5. Full shutdown. The peer shuts down when the cluster returns to the complete state. Once it shuts down, the peer no longer sends heartbeats to the manager. At this point, the peer's status changes to GracefulShutdown. For details on the conditions that precede full shutdown, see The enforce-counts offline process.
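The two versions of the command run largely the same steps in a different order: the fast version shuts the peer down before remedial activities, while the enforce-counts version shuts it down after them. The following sketch lists both sequences side by side. The function and constant names are hypothetical, and the precondition check models only the one condition named above (enough peers remaining); the real command checks further conditions.

```python
FAST_STEPS = [
    "partial shutdown",
    "primacy reassignment",
    "generation ID rolling",
    "full shutdown",
    "restart count",
    "remedial activities",
]

ENFORCE_COUNTS_STEPS = [
    "partial shutdown",
    "primacy reassignment",
    "generation ID rolling",
    "remedial activities",
    "full shutdown",
]


def offline_steps(peer_count, replication_factor, enforce_counts):
    """Return the ordered steps for taking one peer offline."""
    remaining = peer_count - 1
    if enforce_counts:
        if remaining < replication_factor:
            # Simplified precondition: enforce-counts refuses to run.
            raise ValueError("too few peers would remain to satisfy the replication factor")
        return list(ENFORCE_COUNTS_STEPS)
    return list(FAST_STEPS)


print(offline_steps(5, 3, enforce_counts=True)[-1])   # full shutdown
print(offline_steps(3, 3, enforce_counts=False)[-1])  # remedial activities
```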

When a peer node goes down unintentionally

When a peer node goes down for any reason besides the offline command, it stops sending the periodic heartbeat to the manager. This causes the manager to detect the loss and initiate remedial action. The manager coordinates essentially the same actions as when the peer gets taken offline intentionally, except for the following:

- The downed peer does not continue to participate in ongoing searches.

- The manager waits only for the length of the heartbeat timeout (by default, 60 seconds) before reassigning primacy and initiating bucket-fixing actions.

Searches can continue across the cluster after a node goes down; however, searches will provide only partial results until the cluster regains its valid state.

In the case of a multisite cluster, when a peer goes down on one site, the site loses its search affinity, if any, until it regains its valid state. During that time, searches continue to provide full results through involvement of remote peers.
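Heartbeat-based detection can be illustrated with a timestamp comparison. This is a minimal sketch under stated assumptions: the function name and the dictionary of last-seen timestamps are hypothetical, and the 60-second value is the default heartbeat timeout quoted above.

```python
HEARTBEAT_TIMEOUT = 60.0  # seconds; the default heartbeat timeout quoted above


def peers_presumed_down(last_heartbeat, now, timeout=HEARTBEAT_TIMEOUT):
    """Return peers whose most recent heartbeat is older than the timeout.

    last_heartbeat maps peer name -> timestamp (seconds) of its last heartbeat.
    """
    return sorted(
        peer for peer, seen in last_heartbeat.items() if now - seen > timeout
    )


last_seen = {"peer1": 1000.0, "peer2": 1055.0, "peer3": 930.0}
print(peers_presumed_down(last_seen, now=1060.0))  # ['peer3']
```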

Bucket-fixing scenarios

To replace bucket copies on a downed peer, the manager coordinates bucket-fixing activities among the peers. Besides replacing all the bucket copies, the cluster must ensure that it has a full complement of primary and searchable copies.

Note: The bucket-fixing process varies somewhat for clusters with SmartStore indexes. See Indexer cluster operations and SmartStore.

Bucket fixing involves three activities:

- Compensating for any primary copies on the downed peer by assigning primary status to searchable copies of those buckets on other peers.

- Compensating for any searchable copies by converting non-searchable copies of those buckets on other peers to searchable.

- Replacing all bucket copies (searchable and non-searchable) by streaming a copy of each bucket to a peer that doesn't already have a copy of that bucket.

For example, assume that the downed peer had 10 bucket copies, and five of those were searchable, and two of the searchable copies were primary. The cluster must:

- Reassign primary status to two searchable copies on other peers.

- Convert five non-searchable bucket copies on other peers to searchable.

- Stream 10 bucket copies from one standing peer to another.
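The arithmetic in this example generalizes directly: each count on the downed peer maps to one of the three activities. The sketch below encodes that mapping; the class and function names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class DownedPeerInventory:
    total_copies: int       # searchable and non-searchable combined
    searchable_copies: int  # includes the primary copies
    primary_copies: int


def fixing_workload(inv):
    """Map the downed peer's bucket counts to the three bucket-fixing activities."""
    if not 0 <= inv.primary_copies <= inv.searchable_copies <= inv.total_copies:
        raise ValueError("expected primary <= searchable <= total")
    return {
        "reassign_primary": inv.primary_copies,
        "make_searchable": inv.searchable_copies,
        "stream_copies": inv.total_copies,
    }


print(fixing_workload(DownedPeerInventory(10, 5, 2)))
# {'reassign_primary': 2, 'make_searchable': 5, 'stream_copies': 10}
```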

The first activity - converting a searchable copy of a bucket from non-primary to primary - happens very quickly, because searchable bucket copies already have index files and so there's virtually no processing involved. (This assumes that there is a spare searchable copy available, which requires the search factor to be at least 2. If not, the cluster has to first make a non-searchable copy searchable before it can assign primary status to the copy.)

The second activity - converting a non-searchable copy of a bucket to searchable - takes some time, because the peer must copy the bucket's index files from a searchable copy on another peer (or, if there's no other searchable copy of that bucket, then the peer must rebuild the bucket's index files from the rawdata file). For help estimating the time needed to make non-searchable copies searchable, read Estimate the cluster recovery time when a peer gets decommissioned.

The third activity - streaming copies from one peer to another - can also take a significant amount of time (depending on the amount of data to be streamed), as described in Estimate the cluster recovery time when a peer gets decommissioned.

The two examples below illustrate how a manager 1) recreates a valid and complete cluster and 2) creates a valid but incomplete cluster when insufficient nodes remain standing. The process operates the same whether the peer goes down intentionally or unintentionally.

Remember these points:

- A cluster is valid when there is one primary searchable copy of every bucket. Any search across a valid cluster provides a full set of search results.

- A cluster is complete when the cluster has replication factor number of copies of buckets with search factor number of searchable copies.

- A cluster can be valid but incomplete if there are searchable copies of all buckets, but there are fewer copies of buckets than the replication factor requires. So, if a cluster with a replication factor of 3 has exactly three peer nodes and one of those peers goes down, the cluster can be made valid but it cannot be made complete, since, with just two standing nodes, it is not possible to fulfill the replication factor by maintaining three sets of buckets.
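The definitions of valid and complete can be expressed as two checks over a table of bucket copies. This is a simplified single-site model, not how the manager stores its state: the Copy class and both function names are hypothetical, and using "at least" rather than "exactly" for the copy counts is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    peer: str
    searchable: bool = False
    primary: bool = False


def is_valid(buckets):
    """Valid: every bucket has exactly one primary copy, and it is searchable."""
    for copies in buckets.values():
        primaries = [c for c in copies if c.primary]
        if len(primaries) != 1 or not primaries[0].searchable:
            return False
    return True


def is_complete(buckets, replication_factor, search_factor):
    """Complete: every bucket meets the replication factor and the search factor."""
    return all(
        len(copies) >= replication_factor
        and sum(1 for c in copies if c.searchable) >= search_factor
        for copies in buckets.values()
    )


# Replication factor 3, three peers, one peer down: two copies remain.
buckets = {
    "bucket_a": [Copy("peer1", searchable=True, primary=True), Copy("peer2")],
}
print(is_valid(buckets))           # True
print(is_complete(buckets, 3, 1))  # False
```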

Example: Fixing buckets to create a valid and complete cluster

Assume:

- The peer went down unintentionally (that is, not in response to an offline command).

- The downed peer is part of a cluster with these characteristics:

  - 5 peers, including the downed peer

  - replication factor = 3

  - search factor = 2

- The downed peer holds these bucket copies:

  - 3 primary copies of buckets

  - 10 searchable copies (including the primary copies)

  - 20 total bucket copies (searchable and non-searchable combined)

When the peer goes down, the manager sends messages to several of the remaining peers as follows:

1. For each of the three primary bucket copies on the downed peer, the manager identifies a peer with another searchable copy of that bucket and tells that peer to mark the copy as primary.

When this step finishes, the cluster regains its valid state, and any subsequent searches provide a full set of results.

2. For each of the 10 searchable bucket copies on the downed peer, the manager identifies 1) a peer with a searchable copy of that bucket and 2) a peer with a non-searchable copy of the same bucket. It then tells the peer with the searchable copy to stream the bucket's index files to the second peer. When the index files have been copied over, the non-searchable copy becomes searchable.

3. For each of the 20 total bucket copies on the downed peer, the manager identifies 1) a peer with a copy of that bucket and 2) a peer that does not have a copy of the bucket. It then tells the peer with the copy to stream the bucket's rawdata to the second peer, resulting in a new, non-searchable copy of that bucket.

When this last step finishes, the cluster regains its complete state.

Example: Fixing buckets to create a valid but incomplete cluster

Assume:

- The peer went down unintentionally (that is, not in response to an offline command).

- The downed peer is part of a cluster with these characteristics:

  - 3 peers, including the downed peer

  - replication factor = 3

  - search factor = 1

- The downed peer holds these bucket copies:

  - 5 primary copies of buckets

  - 5 searchable copies (the same as the number of primary copies; because the search factor = 1, all searchable copies must also be primary.)

  - 20 total bucket copies (searchable and non-searchable combined)

Since the cluster has just three peers and a replication factor of 3, the downed peer means that the cluster can no longer fulfill the replication factor and therefore cannot be made complete.

When the peer goes down, the manager sends messages to several of the remaining peers as follows:

1. For each of the five searchable, primary bucket copies on the downed node, the manager first identifies a peer with a non-searchable copy and tells the peer to make the copy searchable. The peer then begins building index files for that copy. (Because the search factor is 1, there are no other searchable copies of those buckets on the remaining nodes. Therefore, it is not possible for the remaining peers to make non-searchable bucket copies searchable by streaming index files from another searchable copy. Rather, they must employ the slower process of creating index files from the rawdata file of a non-searchable copy.)

2. The manager then tells the peers from step 1 to mark the five newly searchable copies as primary. Unlike the previous example, the step of designating other bucket copies as primary cannot occur until non-searchable copies have been made searchable. Because the cluster's search factor = 1, there are no standby searchable copies.

Once step 2 completes, the cluster regains its valid state. Any subsequent searches provide a full set of results.

3. For the 20 bucket copies on the downed node, the manager cannot initiate any action to make replacement copies (so that the cluster will again have the three copies of each bucket, as specified by the replication factor), because there are too few peers remaining to hold the copies.

Since the cluster cannot recreate a full set of replication factor number of copies of buckets, the cluster remains in an incomplete state.
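The two examples above differ mainly in the order of actions and in whether replacement copies can be placed. The following sketch plans the fix for a single bucket and reproduces both orderings. It is a toy planner under stated assumptions, not the manager's algorithm: the function name, the action labels, and the rule of picking peers in alphabetical order are all hypothetical.

```python
def plan_bucket_fix(surviving, standing_peers, lost_primary,
                    replication_factor, search_factor):
    """Plan the fix for one bucket after a peer goes down.

    surviving maps peer -> True if its copy is searchable, False if not.
    Returns an ordered list of action tuples.
    """
    copies = dict(surviving)
    actions = []

    def searchable_peers():
        return sorted(p for p, s in copies.items() if s)

    # Activity 1: hand primacy to a spare searchable copy, if one exists.
    primary_assigned = not lost_primary
    if not primary_assigned and searchable_peers():
        actions.append(("assign_primary", searchable_peers()[0]))
        primary_assigned = True

    # Activity 2: make non-searchable copies searchable until the search factor is met.
    while len(searchable_peers()) < search_factor:
        candidates = sorted(p for p, s in copies.items() if not s)
        if not candidates:
            break
        target = candidates[0]
        sources = searchable_peers()
        if sources:
            actions.append(("copy_index_files", sources[0], target))
        else:
            # No searchable copy left: rebuild index files from rawdata (slower).
            actions.append(("rebuild_from_rawdata", target))
        copies[target] = True
        if not primary_assigned:
            actions.append(("assign_primary", target))
            primary_assigned = True

    # Activity 3: stream new non-searchable copies to peers that lack one.
    while copies and len(copies) < replication_factor:
        free = sorted(p for p in standing_peers if p not in copies)
        if not free:
            break  # too few peers: the bucket stays short of the replication factor
        actions.append(("stream_rawdata", sorted(copies)[0], free[0]))
        copies[free[0]] = False

    return actions


# First example: 5 peers, replication factor 3, search factor 2, peer1 down.
print(plan_bucket_fix({"peer2": True, "peer3": False},
                      ["peer2", "peer3", "peer4", "peer5"], True, 3, 2))
# [('assign_primary', 'peer2'), ('copy_index_files', 'peer2', 'peer3'), ('stream_rawdata', 'peer2', 'peer4')]

# Second example: 3 peers, replication factor 3, search factor 1, peer1 down.
print(plan_bucket_fix({"peer2": False, "peer3": False},
                      ["peer2", "peer3"], True, 3, 1))
# [('rebuild_from_rawdata', 'peer2'), ('assign_primary', 'peer2')]
```

In the second call the plan contains no streaming action, which mirrors the text: with only two standing peers, a third copy has nowhere to go.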

Pictures, too

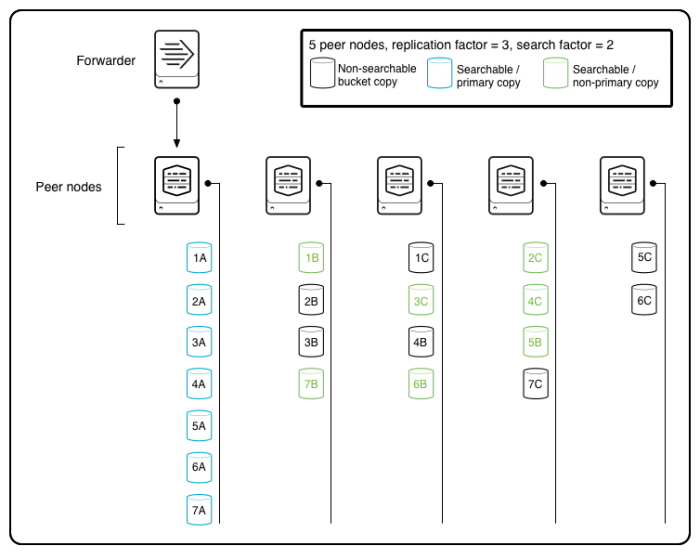

The following diagram shows a cluster of five peers, with a replication factor of 3 and a search factor of 2. The primary bucket copies reside on the source peer receiving data from a forwarder, with searchable and non-searchable copies of that data spread across the other peers.

Note: This is a highly simplified diagram. To reduce complexity, it shows only the buckets for data originating on one peer. In a real-life scenario, most, if not all, of the other peers would also be originating data and replicating it to other peers on the cluster.

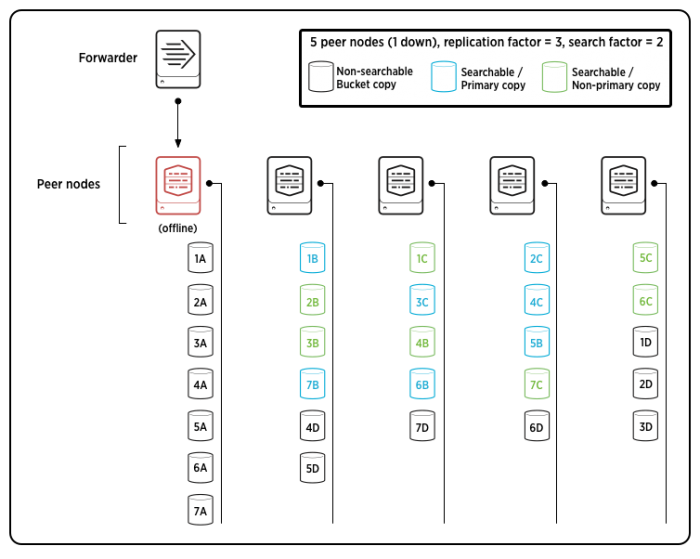

The next diagram shows the same simplified version of the cluster, after the source node holding all the primary copies has gone down and the manager has finished directing the remaining peers in fixing the buckets:

The manager directed the cluster in a number of activities to recover from the downed peer:

1. The manager reassigned primacy from bucket copies on the downed peer to searchable copies on the remaining peers. When this step finished, it rolled the generation ID.

2. It directed the peers in making a set of non-searchable copies searchable, to make up for the missing set of searchable copies.

3. It directed the replication of a new set of non-searchable copies (1D, 2D, etc.), spread among the remaining peers.

Even though the source node went down, the cluster was able to fully recover both its complete and valid states, with replication factor number of total buckets, search factor number of searchable bucket copies, and exactly one primary copy of each bucket. This represents a different generation from the previous diagram, because primary copies have moved to different peers.
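One way to picture why the generation changes is to treat a generation as a record of which peer holds the primary copy of each bucket. The sketch below is a conceptual model only: the Generation class, the roll_generation function, and the idea that the ID increases by one per roll are assumptions made for illustration, not a description of the manager's internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Generation:
    gen_id: int
    primaries: dict  # bucket id -> peer holding the primary copy


def roll_generation(current, downed_peer, searchable_on):
    """Build the next generation after primaries move off a downed peer.

    searchable_on maps bucket id -> standing peers with a searchable copy.
    """
    new_primaries = {}
    for bucket, peer in current.primaries.items():
        if peer == downed_peer:
            # Primacy moves to a searchable copy on a standing peer.
            new_primaries[bucket] = searchable_on[bucket][0]
        else:
            new_primaries[bucket] = peer
    return Generation(current.gen_id + 1, new_primaries)


gen0 = Generation(0, {"bucket1": "peer1", "bucket2": "peer1"})
gen1 = roll_generation(gen0, "peer1", {"bucket1": ["peer2"], "bucket2": ["peer3"]})
print(gen1.gen_id, gen1.primaries)
# 1 {'bucket1': 'peer2', 'bucket2': 'peer3'}
```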

Bucket-fixing in multisite clusters

The processes that multisite clusters use to handle node failure have some significant differences from single-site clusters. See Multisite clusters and node failure.

View bucket-fixing status

You can view the status of bucket fixing from the manager node dashboard. See View the manager node dashboard.


This documentation applies to the following versions of Splunk® Enterprise: 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
