Search head clustering architecture

A search head cluster is a group of Splunk Enterprise search heads that serves as a central resource for searching.

Parts of a search head cluster

A search head cluster consists of a group of search heads that share configurations, job scheduling, and search artifacts. The search heads are known as the cluster members.

One cluster member has the role of captain, which means that it coordinates job scheduling and replication activities among all the members. It also serves as a search head like any other member, running search jobs, serving results, and so on. Over time, the role of captain can shift among the cluster members.

In addition to the set of search head members that constitute the actual cluster, a functioning cluster requires several other components:

- The deployer. This is a Splunk Enterprise instance that distributes apps and other configurations to the cluster members. It stands outside the cluster and cannot run on the same instance as a cluster member. It can, however, under some circumstances, reside on the same instance as some other Splunk Enterprise components, such as a deployment server or an indexer cluster manager node. See Use the deployer to distribute apps and configuration updates.

- Search peers. These are the indexers that cluster members run their searches across. The search peers can be either independent indexers or nodes in an indexer cluster. See Connect the search heads in clusters to search peers.

- Load balancer. This is third-party software or hardware optionally residing between the users and the cluster members. With a load balancer in place, users can access the set of search heads through a single interface, without needing to specify a particular search head. See Use a load balancer with search head clustering.

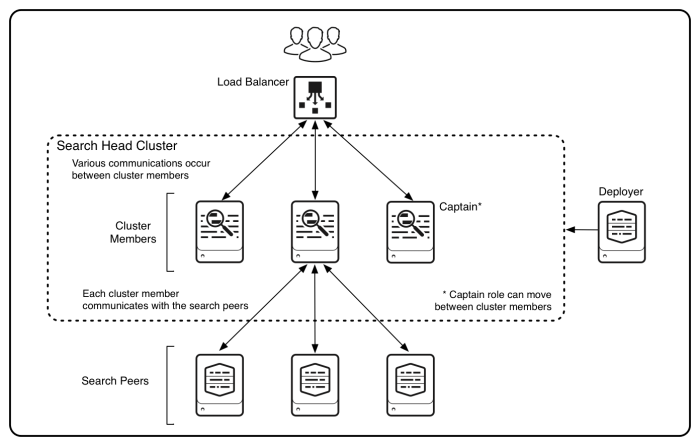

Here is a diagram of a small search head cluster, consisting of three members:

This diagram shows the key cluster-related components and interactions:

- One member serves as the captain, directing various activities within the cluster.

- The members communicate among themselves to schedule jobs, replicate artifacts, update configurations, and coordinate other activities within the cluster.

- The members communicate with search peers to fulfill search requests.

- Users can optionally access the search heads through a third-party load balancer.

- A deployer sits outside the cluster and distributes updates to the cluster members.

Note: This diagram is a highly simplified representation of a set of complex interactions between components. For example, each cluster member sends search requests directly to the set of search peers. On the other hand, only the captain sends the knowledge bundle to the search peers. Similarly, the diagram does not attempt to illustrate the messaging that occurs between cluster members. Read the text of this topic for the details of all these interactions.

Search head cluster captain

The captain is a cluster member with additional responsibilities, beyond the search activities common to all cluster members. It serves to coordinate the activities of the cluster. Any member can perform the role of captain, but the cluster has just one captain at any time. Over time, if failures occur, the captain changes and a new member gets elected to the role.

The elected captain is known as a dynamic captain, because it can change over time. A cluster that is functioning normally uses a dynamic captain. You can deploy a static captain as a temporary workaround during disaster recovery, if the cluster is not able to elect a dynamic captain.

Role of the captain

The captain is a cluster member and in that capacity it performs the search activities typical of any cluster member, servicing both ad hoc and scheduled searches. If necessary, you can limit the captain's search activities so that it performs only ad hoc searches and not scheduled searches. See Configure the captain to run ad hoc searches only.

The captain also coordinates activities among all cluster members. Its responsibilities include:

- Scheduling jobs. It assigns jobs to members, including itself, based on relative current loads.

- Coordinating alerts and alert suppressions across the cluster. The captain tracks each alert, but the member that runs the initiating search fires it.

- Pushing the knowledge bundle to search peers.

- Coordinating artifact replication. The captain ensures that search artifacts get replicated as necessary to fulfill the replication factor. See Choose the replication factor for the search head cluster.

- Replicating configuration updates. The captain replicates any runtime changes to knowledge objects on one cluster member to all other members. This includes, for example, changes or additions to saved searches, lookup tables, and dashboards. See Configuration updates that the cluster replicates.
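As a rough illustration of load-based job assignment, the following sketch hands each job to the member with the lowest current load. It is a minimal model, not the captain's actual scheduling algorithm: the names `pick_member` and `assign_jobs`, the use of a running-job count as the load measure, and the alphabetical tie-break are all assumptions made for the example.

```python
from typing import Dict, List


def pick_member(loads: Dict[str, int]) -> str:
    """Return the member with the lowest load; ties go to the first name alphabetically."""
    if not loads:
        raise ValueError("no members available")
    return min(sorted(loads), key=loads.get)


def assign_jobs(jobs: List[str], loads: Dict[str, int]) -> Dict[str, str]:
    """Assign each job to the least-loaded member, updating a private copy of the loads."""
    current = dict(loads)  # hypothetical load measure: number of running jobs
    assignment = {}
    for job in jobs:
        member = pick_member(current)
        assignment[job] = member
        current[member] += 1  # the assigned member is now busier
    return assignment
```

For example, with loads of 2, 0, and 1 on three members, the first two jobs go to the idle member and the third goes to the member that started with a load of 1. The captain is simply one of the entries in `loads`, which reflects that it assigns jobs to itself as well.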

Captain election

A search head cluster normally uses a dynamic captain. This means that the member serving as captain can change over the life of the cluster. Any member has the ability to function as captain. When necessary, the cluster holds an election, which can result in a new member taking over the role of captain.

Captain election occurs when:

- The current captain fails or restarts.

- A network partition occurs, causing one or more members to get cut from the rest of the search head cluster. Subsequent healing of the network partition triggers another, separate captain election.

- The current captain steps down, because it does not detect that a majority of members are participating in the cluster.

Note: The mere failure or restart of a non-captain cluster member, without an associated network partition, does not trigger captain election.

To become captain, a member needs to win a majority vote of all members. For example, in a seven-member cluster, election requires four votes. Similarly, a six-member cluster also requires four votes.

The majority must be a majority of all members, not just of the members currently running. So, if four members of a seven-member cluster fail, the cluster cannot elect a new captain, because the remaining three members are fewer than the required majority of four.
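The majority arithmetic above can be sketched in a few lines. The function names `votes_needed` and `can_elect_captain` are hypothetical and exist only for this illustration.

```python
def votes_needed(total_members: int) -> int:
    """Smallest number of votes that is more than half of all members."""
    if total_members < 1:
        raise ValueError("a cluster needs at least one member")
    return total_members // 2 + 1


def can_elect_captain(total_members: int, running_members: int) -> bool:
    """True when the running members alone can supply a majority of all members."""
    return running_members >= votes_needed(total_members)
```

Here `votes_needed(7)` and `votes_needed(6)` both evaluate to 4, and `can_elect_captain(7, 3)` is false, matching the seven-member example in which four members have failed.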

The election process involves timers set randomly on all the members. The member whose timer runs out first stands for election and asks the other members to vote for it. Usually, the other members comply and that member becomes the new captain.

It typically takes one to two minutes after a triggering event occurs to elect a new captain. During that time, there is no functioning captain, and the search heads are aware only of their local environment. The election takes this amount of time because each member waits for a minimum timeout period before trying to become captain. These timeouts are configurable.
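The randomized-timer idea can be modeled with a short simulation. This is a simplified sketch under stated assumptions, not the cluster's actual protocol: it assumes every running member grants its vote to the first candidate, it ignores network delays and retries, and the function name `run_election` and the timeout bounds are made up for the example.

```python
import random
from typing import List, Optional


def run_election(running: List[str], total_members: int,
                 rng: random.Random,
                 min_timeout_ms: int = 1000,
                 max_timeout_ms: int = 2000) -> Optional[str]:
    """Return the member that wins the election, or None if no majority is possible.

    running: members that are up and able to vote.
    total_members: size of the whole cluster, including members that are down.
    The timeout bounds are illustration values, not Splunk defaults.
    """
    needed = total_members // 2 + 1
    if len(running) < needed:
        return None  # the running members cannot supply a majority of all members
    # Each member waits for its own randomly chosen timeout.
    timers = {name: rng.uniform(min_timeout_ms, max_timeout_ms) for name in running}
    # The member whose timer expires first stands for election; in this model
    # all running members vote for it, so it wins.
    return min(timers, key=timers.get)
```

Calling `run_election(["sh1", "sh2", "sh3"], 3, random.Random())` returns one of the three names, with no bias toward any of them, while `run_election(["sh1"], 3, random.Random())` returns `None` because one member is not a majority of three.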

The cluster might re-elect the member that was the previous captain, if that member is still running. There is no bias either for or against this occurring.

Once a member is elected as captain, it takes over the duties of captaincy.

Important: A majority of members must be running and participating in the cluster at all times. If the captain does not detect a majority of members, it steps down, relinquishing its authority. An election for a new captain will subsequently occur, but without a majority of participating members, it will not succeed. If you lose majority on a cluster, a temporary workaround is to deploy a static captain, in place of the dynamic captain. Static captains are designated by the administrator, not elected by the members. See Use static captain to recover from loss of majority.

For details of your cluster's captain election process, view the Search Head Clustering: Status and Configuration dashboard in the monitoring console. See Use the monitoring console to view search head cluster status.

Control of captaincy

You have some control over which members become captain. In particular, you can:

- Set captaincy preference on a member-by-member basis. The cluster attempts to elect as captain a member designated as a preferred captain.

- Transfer captaincy from one member to another.

- Prevent an out-of-sync member from becoming captain. An out-of-sync member is a member that cannot sync its own set of replicated configurations with the common baseline set of replicated configurations maintained by the current or most recent captain. By default, the cluster attempts not to elect as captain an out-of-sync member.

For details on these captaincy control capabilities, see Control captaincy.

Consequences of a non-functioning cluster

If the cluster lacks a majority of members and therefore cannot elect a captain, the members will continue to function as independent search heads. However, they will only be able to service ad hoc searches. Scheduled reports and alerts will not run, because, in a cluster, the scheduling function belongs to the captain. In addition, configurations and search artifacts will not be replicated during this time.

To remedy this situation, you can temporarily deploy a static captain. See Use static captain to recover from loss of majority.

Recovering from a non-functioning cluster

If you do not deploy a static captain during the time that the cluster lacks a majority, the cluster will not function again until a majority of members rejoin the cluster. When a majority is attained, the members elect a captain, and the cluster starts to function.

There are two key aspects to recovery:

- Runtime configurations

- Scheduled reports

Once the cluster starts functioning, it attempts to sync the runtime configurations of the members. Since the members were able to operate independently during the time that their cluster was not functioning, it is likely that each member developed its own unique set of configuration changes during that time. For example, a user might have created a new saved search or added a new panel to a dashboard. These changes must now be reconciled and replicated across the cluster. To accomplish this, each member reports its set of changes to the captain, which then coordinates the replication of all changes, including its own, to all members. At the end of this process, all members should have the same set of configurations.

Caution: This process can only proceed automatically if the captain and each member still share a common commit in their change history. Otherwise, it will be necessary to manually resync the non-captain member against the captain's current set of configurations, causing that member to lose all of its intervening changes. Configurable purge limits control the change history. For details of purge limits and the resync process, see Replication synchronization issues.
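The common-commit requirement can be pictured with a toy model in which each change history is a list of commit identifiers, ordered oldest to newest, and purging drops entries from the old end. The representation and the function names below are assumptions for illustration only; they do not describe how the cluster actually stores its change history.

```python
from typing import List, Optional


def latest_common_commit(captain_history: List[str],
                         member_history: List[str]) -> Optional[str]:
    """Return the newest commit in the captain's history that the member also has."""
    member_commits = set(member_history)
    for commit in reversed(captain_history):
        if commit in member_commits:
            return commit
    return None


def can_auto_sync(captain_history: List[str], member_history: List[str]) -> bool:
    """Automatic sync is possible only if the two histories still share a commit."""
    return latest_common_commit(captain_history, member_history) is not None
```

In this model, a captain history of `["c3", "c4", "c5"]` and a member history of `["c1", "c2", "c3", "m1"]` still share `c3`, so automatic sync can proceed. If the captain's history has been purged down to `["c4", "c5"]`, there is no shared commit and the member would need a manual resync.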

The recovered cluster also begins handling scheduled reports again. As for whether it attempts to run reports that were skipped while the cluster was down, that depends on the type of scheduled report. For the most part, it will just pick up the reports at their next scheduled run time. However, the scheduler will run reports employed by report acceleration and data model acceleration from the point when they were last run before the cluster stopped functioning. For detailed information on how the scheduler handles various types of reports, see Configure the priority of scheduled reports in the Reporting Manual.

Captain election process has deployment implications

The need for a majority vote for a successful election has these deployment implications:

- A cluster must consist of a minimum of three members. A two-member cluster cannot tolerate any node failure. Failure of either member will prevent the cluster from electing a captain and continuing to function. Captain election requires the assent of a majority (more than half) of all members, which, in the case of a two-member cluster, means that both nodes must be running. You therefore forfeit the high availability benefits of a search head cluster if you limit the cluster to one or two members.

Note: As an interim measure, when first deploying a search head cluster, you can bring up a single-member cluster. This approach allows you to start with a small distributed search deployment and later scale to a larger cluster. However, a single-member cluster does not provide high availability search, which is the main benefit of a search head cluster. To fulfill that benefit, the cluster must comprise at least three members. See Deploy a single-member search head cluster.

- If you are deploying the cluster across two sites, your primary site must contain a majority of the nodes. If there is a network disruption between the sites, only the site with a majority can elect a new captain. See Important considerations when deploying a search head cluster across multiple sites.
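These sizing implications follow directly from the majority rule, as the short sketch below shows. The function names `failures_tolerated` and `site_can_elect` are hypothetical, chosen only for this example.

```python
def failures_tolerated(total_members: int) -> int:
    """Number of members that can fail while a majority of all members remains."""
    if total_members < 1:
        raise ValueError("a cluster needs at least one member")
    majority = total_members // 2 + 1
    return total_members - majority


def site_can_elect(site_members: int, total_members: int) -> bool:
    """True if one site, cut off from the others, still holds a majority of all members."""
    return site_members >= total_members // 2 + 1
```

A one-member or two-member cluster tolerates zero failures, while a three-member cluster tolerates one. For a five-member cluster split three and two across two sites, only the three-member site can elect a captain during a network disruption between the sites.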

How the cluster handles search artifacts

The cluster replicates most search artifacts, also known as search results, to multiple cluster members. If a member needs to access an artifact, it accesses a local copy, if possible. Otherwise, it uses proxying to access the artifact.

Artifact replication

The cluster maintains multiple copies of search artifacts resulting from scheduled saved searches. The replication factor determines the number of copies that the cluster maintains of each artifact. For example, if the replication factor is three, the cluster maintains three copies of each artifact: one on the member that originated the artifact, and two on other members.

The captain coordinates the replication of artifacts to cluster members. As with any search head, clustered or not, when a search is complete, its search artifact is placed in the dispatch directory of the member originating the search. The captain then directs the artifact's replication process, in which copies stream between members until copies exist on the replication factor number of members, including the originating member.

The set of members receiving copies can change from artifact to artifact. That is, two artifacts from the same originating member might have their replicated copies on different members.

The captain maintains the artifact registry, with information on the locations of copies of each artifact. When the registry changes, the captain sends the delta to each member.

If a member goes down, thus causing the cluster to lose some artifact copies, the captain coordinates fix-up activities, with the goal of returning the cluster to a state where each artifact has the replication factor number of copies.
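The fix-up goal can be sketched as a planning step over a registry that maps each artifact to the set of members holding a copy. This is a minimal model under stated assumptions: the registry shape, the function name `plan_fix_up`, and the alphabetical choice of target members are invented for illustration and are not the captain's actual selection policy.

```python
from typing import Dict, List, Set


def plan_fix_up(registry: Dict[str, Set[str]],
                live_members: Set[str],
                replication_factor: int) -> Dict[str, List[str]]:
    """Return {artifact_id: [members that should receive a new copy]}."""
    plan = {}
    for artifact_id, holders in registry.items():
        surviving = holders & live_members
        if not surviving:
            continue  # no live copy remains to replicate from
        missing = replication_factor - len(surviving)
        if missing <= 0:
            continue  # already at or above the replication factor
        # Hypothetical policy: pick live members without a copy, in name order.
        candidates = sorted(live_members - surviving)
        targets = candidates[:missing]
        if targets:
            plan[artifact_id] = targets
    return plan
```

With a replication factor of three, if artifact `a1` lives on `sh1`, `sh2`, and `sh3` and member `sh3` goes down in a four-member cluster, the plan schedules one new copy of `a1` on `sh4`.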

Search artifacts are contained in the dispatch directory, located under $SPLUNK_HOME/var/run/splunk/dispatch. Each dispatch subdirectory contains one search artifact. It is these subdirectories that the cluster replicates.

Replicated search artifacts can be identified by the prefix rsa_. The original artifacts do not have this prefix.
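As a small illustration of the naming rule, the following sketch separates dispatch subdirectory names into original and replicated artifacts based on the rsa_ prefix. The helper names are hypothetical, and the sketch assumes that the prefix is the only distinguishing signal you need.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

REPLICA_PREFIX = "rsa_"


def split_by_origin(names: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split artifact directory names into (originals, replicas) by prefix."""
    originals, replicas = [], []
    for name in names:
        if name.startswith(REPLICA_PREFIX):
            replicas.append(name)
        else:
            originals.append(name)
    return originals, replicas


def dispatch_subdirectories(dispatch_dir: str) -> List[str]:
    """List the subdirectory names of a dispatch directory, sorted by name."""
    return sorted(p.name for p in Path(dispatch_dir).iterdir() if p.is_dir())
```

To inspect a member, you could pass the expanded path of $SPLUNK_HOME/var/run/splunk/dispatch to `dispatch_subdirectories` and feed the result to `split_by_origin`.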

For details of your cluster's artifact replication process, view the Search Head Clustering: Artifact Replication dashboard in the monitoring console. See Use the monitoring console to view search head cluster status.

Artifact proxying

The cluster replicates only search artifacts resulting from scheduled saved searches. It does not replicate results from these other search types:

- Scheduled real-time searches

- Ad hoc searches of any kind (real-time or historical)

Instead, the cluster proxies these results, if they are requested by a non-originating search head. They appear on the requesting member after a short delay.

In addition, if a member needs an artifact from a scheduled saved search but does not itself have a local copy of that artifact, it proxies the results from a member that does have a copy. At the same time, the cluster replicates a copy of that artifact to the requesting member, so that it has a local copy for any future requests. Because of this process, some artifacts might have more than the replication factor number of copies.
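The local-copy-or-proxy decision can be sketched as follows. This is a toy model, not the cluster's implementation: the registry layout, the `replicable` flag (true only for artifacts from scheduled saved searches), the function name `fetch_artifact`, and the choice of the alphabetically first holder as the proxy source are all assumptions for the example.

```python
from typing import Dict, Tuple


def fetch_artifact(artifact_id: str, requester: str,
                   registry: Dict[str, dict]) -> Tuple[str, str]:
    """Return ("local", member) or ("proxy", member) for a requested artifact.

    registry maps artifact_id to {"holders": set of member names,
    "replicable": bool}. The registry is updated in place when the
    cluster would replicate a copy to the requester.
    """
    entry = registry[artifact_id]
    holders = entry["holders"]
    if requester in holders:
        return "local", requester
    source = sorted(holders)[0]  # hypothetical choice of which holder to proxy from
    if entry["replicable"]:
        # A copy is also replicated to the requester for future requests,
        # so the copy count can exceed the replication factor.
        holders.add(requester)
    return "proxy", source
```

In this model, the first request from a member without a copy of a scheduled saved search artifact is proxied and the next one is served locally, whereas requests for ad hoc results from a non-originating member are proxied every time.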

Distribution of configuration changes

With a few exceptions, all cluster members must use the same set of configurations. For example, if a user edits a dashboard on one member, the updates must somehow propagate to all the other members. Similarly, if you distribute an app, you must distribute it to all members. Search head clustering has methods to ensure that configurations stay in sync across the cluster.

There are two types of configuration changes, based on how they are distributed to cluster members:

- Replicated changes. The cluster automatically replicates any runtime knowledge object changes on one member to all other members.

- Deployed changes. The cluster relies on an external instance, the deployer, to push apps and other non-runtime configuration changes to the set of members. You must initiate each push of changes from the deployer.

See How configuration changes propagate across the search head cluster.

Job scheduling

See Control search concurrency on search head clusters.

How the cluster handles concurrent search quotas

See Control search concurrency on search head clusters.

Search head clustering and KV store

KV store can reside on a search head cluster. However, the search head cluster does not coordinate replication of KV store data or otherwise involve itself in the operation of KV store. For information on KV store, see About KV store in the Admin Manual.


This documentation applies to the following versions of Splunk® Enterprise: 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
