Design data models

In Splunk Web, you use the Data Model Editor to design new data models and edit existing models. This topic shows you how to use the Data Model Editor to:

- Build out data model dataset hierarchies by adding root datasets and child datasets to data models.

- Define datasets (by providing constraints, search strings, or transaction definitions).

- Rename datasets.

- Delete datasets.

You can also use the Data Model Editor to create and edit dataset fields. For more information, see Define dataset fields.

Note: This topic does not explain basic data model concepts in depth. If you have not worked with Splunk data models, review the topic About data models first. It provides background on what data models and data model datasets are and how they work.

For information about creating and managing new data models, see Manage data models in this manual. In addition to creating new data models through the Data Models management page, that topic shows you how to manage data model permissions and acceleration.

The Data Model Editor

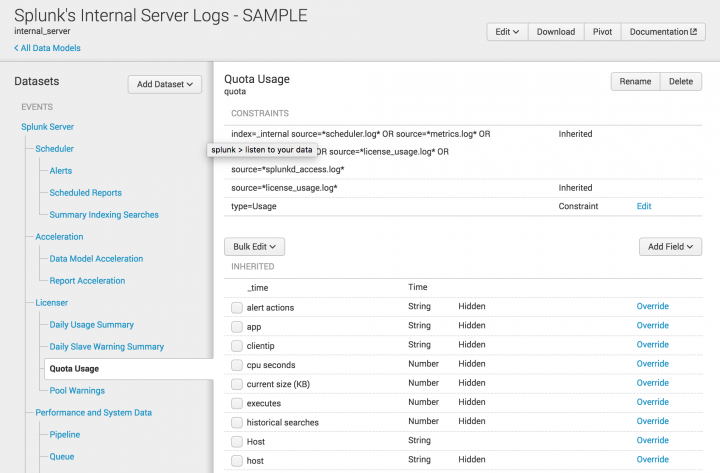

Data models are collections of data model datasets arranged in hierarchical structures. To design a new data model or redesign an existing data model, you go to the Data Model Editor. In the Data Model Editor, you can create datasets for your data model, define their constraints and fields, arrange them in logical dataset hierarchies, and maintain them.

You can only edit a specific data model if your permissions enable you to do so.

To open the Data Model Editor for an existing data model, choose one of the following options.

| Option | Additional steps for this option |
| --- | --- |
| From the Data Models page in Settings | Find the data model you want to edit and select Edit > Edit Datasets. |
| From the Datasets listing page | Find a data model dataset that you want to edit and select Manage > Edit data model. |
| From the Pivot Editor | Click Edit dataset to edit the data model dataset that the Pivot Editor is displaying. |

Add a root event dataset to a data model

Data models are composed chiefly of dataset hierarchies built on root event datasets. Each root event dataset represents a set of data that is defined by a constraint: a simple search that filters out events that aren't relevant to the dataset. Constraints look like the first part of a search, before pipe characters and additional search commands are added.

Constraints for root event datasets are usually designed to return a fairly wide range of data. A large dataset gives you something to work with when you associate child event datasets with the root event dataset, as each child event dataset adds an additional constraint to the ones it inherits from its ancestor datasets, narrowing down the dataset that it represents.

For more information on how constraints work to narrow down datasets in a dataset hierarchy, see dataset constraints.

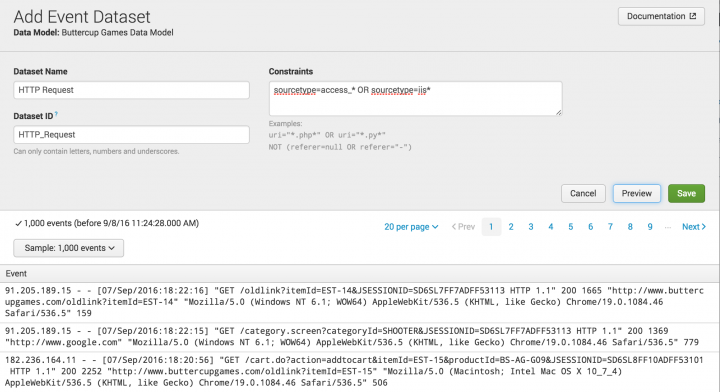

To add a root event dataset to your data model, click Add Dataset in the Data Model Editor and select Root Event. This takes you to the Add Event Dataset page.

Give the root event dataset a Dataset Name, Dataset ID, and one or more Constraints.

The Dataset Name field can accept any character except asterisks. It can also accept blank spaces between characters. It's what you'll see on the Choose a Dataset page and other places where data model datasets are listed.

The Dataset ID must be a unique identifier. It can contain only alphanumeric characters, underscores, or hyphens (a-z, A-Z, 0-9, _, or -). It cannot include spaces between characters. After you save a Dataset ID value, you cannot edit it.
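The naming rules above can be checked before you type values into the editor. The following is a minimal sketch of those two rules, not the validation that Splunk Web itself performs. The function names are hypothetical, rejecting an empty name is an assumption, and the sketch does not check that the ID is unique within the data model.

```python
import re

# Dataset ID rule from the text: only a-z, A-Z, 0-9, underscore, or hyphen.
# Spaces are not in the character class, so they are rejected as well.
_DATASET_ID = re.compile(r"[A-Za-z0-9_-]+")


def is_valid_dataset_id(dataset_id: str) -> bool:
    """Return True if every character is alphanumeric, '_' or '-'."""
    return _DATASET_ID.fullmatch(dataset_id) is not None


def is_valid_dataset_name(name: str) -> bool:
    """Return True if the name has no asterisks; spaces are allowed.

    Rejecting an empty name is an assumption, not a documented rule.
    """
    return name != "" and "*" not in name


print(is_valid_dataset_id("HTTP_Request"))    # valid ID
print(is_valid_dataset_id("HTTP Request"))    # invalid: contains a space
print(is_valid_dataset_name("HTTP Request"))  # valid name
```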

After you provide Constraints for the root event dataset you can click Preview to test whether the constraints you have supplied return the kinds of events you want.

Add a root search dataset to a data model

Root search datasets enable you to create dataset hierarchies where the base dataset is the result of an arbitrary search. You can use any SPL in the search string that defines a root search dataset.

You cannot accelerate root search datasets that use transforming searches. A transforming search uses transforming commands to define a base dataset where one or more fields aggregate over the entire dataset.

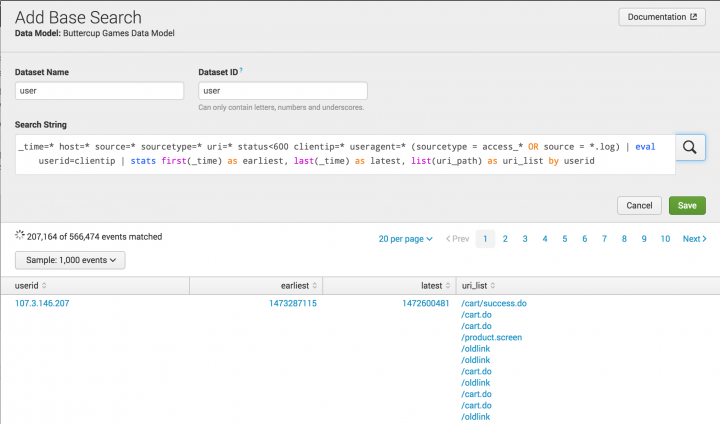

To add a root search dataset to your data model, click Add Dataset in the Data Model Editor and select Root Search. This takes you to the Add Search Dataset page.

Give the root search dataset a Dataset Name, Dataset ID, and search string. To preview the results of the search in the section at the bottom of the page, click the magnifying glass icon to run the search, or just hit return on your keyboard while your cursor is in the search bar.

The Dataset Name field can accept any character except asterisks. It can also accept blank spaces between characters. It's what you'll see on the Choose a Dataset page and other places where data model datasets are listed.

The Dataset ID must be a unique identifier for the dataset. It can contain only alphanumeric characters, underscores, or hyphens (a-z, A-Z, 0-9, _, or -), and it cannot contain spaces. After you save the Dataset ID value, you can't edit it.

For more information about designing search strings, see the Search Manual.

Don't create a root search dataset for your search if the search is a simple transaction search. Set it up as a root transaction dataset.

Using transforming searches in a root search dataset

You can create root search datasets for searches that do not map directly to Splunk events, as long as you understand that they cannot be accelerated. These are searches that involve input or output that is not in the format of an event. This includes searches that:

- Make use of transforming commands such as stats, chart, and timechart. Transforming commands organize the data they return into tables rather than event lists.

- Use other commands that do not return events.

- Pull in data from external non-Splunk sources using a command other than lookup. This data cannot be guaranteed to have default fields like host, source, sourcetype, or _time and therefore might not be event-mappable. An example would be using the inputcsv command to get information from an external .csv file.
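As a rough pre-check for the first case in the list above, you can scan a search string for the transforming commands that the list names. This is a deliberately naive sketch: the function name is hypothetical, the command set is limited to the three commands mentioned above and is not exhaustive, and splitting on pipe characters ignores quoting and subsearches.

```python
# Transforming commands named in the text above. This is not a complete list.
TRANSFORMING_COMMANDS = {"stats", "chart", "timechart"}


def uses_transforming_command(search: str) -> bool:
    """Return True if any piped stage starts with a known transforming command.

    Naive: splits on '|' without regard to quoted strings or subsearches.
    """
    # Element 0 is the base search before the first pipe, so skip it.
    stages = search.split("|")[1:]
    for stage in stages:
        words = stage.split()
        if words and words[0].lower() in TRANSFORMING_COMMANDS:
            return True
    return False


print(uses_transforming_command("sourcetype=access_* | stats count by status"))
print(uses_transforming_command("sourcetype=access_* | eval ok=1"))
```

A search that this check flags is a candidate for a root search dataset that cannot be accelerated. A search that passes might still fall under the other two cases in the list.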

Add a root transaction dataset to a data model

Root transaction datasets enable you to create dataset hierarchies that are based on a dataset made up of transaction events. A transaction event is actually a collection of conceptually-related events that spans time, such as all website access events related to a single customer hotel reservation session, or all events related to a firewall intrusion incident. When you define a root transaction dataset, you define the transaction that pulls out a set of transaction events.

Read up on transactions and the transaction command if you're unfamiliar with how they work. Get started at About transactions, in the Search Manual. Get detailed information on the transaction command at its entry in the Search Reference.

Root transaction datasets and their children do not benefit from data model acceleration.

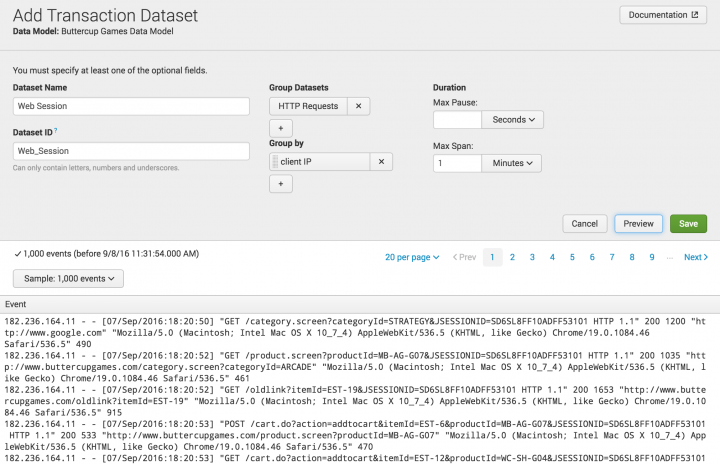

To add a root transaction dataset to your data model, click Add Dataset in the Data Model Editor and select Root Transaction. This takes you to the Add Transaction Dataset page.

Root transaction dataset definitions require a Dataset Name and Dataset ID and at least one Group Dataset.

The Group by, Max Pause, and Max Span fields are optional, but the transaction definition is incomplete until at least one of those three fields is defined. See Identify and group events into transactions in the Search Manual for more information about how the Group by, Max Pause, and Max Span fields work.

The Dataset Name field can accept any character except asterisks. It can also accept blank spaces between characters. It's what you'll see on the Choose a Dataset page and other places where data model datasets are listed.

The Dataset ID must be a unique identifier for the dataset. It can contain only alphanumeric characters, underscores, or hyphens (a-z, A-Z, 0-9, _, or -), and it cannot contain spaces. After you save the Dataset ID value, you can't edit it.

All root transaction dataset definitions require one or more Group Dataset names to define the pool of data from which the transaction dataset will derive its transactions. There are restrictions on what you can add under Group Dataset, however. Group Dataset can contain one of the following three options:

- One or more event datasets (either root event datasets or child event datasets)

- One transaction dataset (either root or child)

- One search dataset (either root or child)

In addition, you are restricted to datasets that exist within the currently selected data model.

If you're familiar with how the transaction command works, you can think of the Group Datasets as the way we provide the portion of the search string that appears before the transaction command. Take the example presented in the preceding screenshot, where we've added the Apache Access Search dataset to the definition of the root transaction dataset Web Session. Apache Access Search represents a set of successful webserver access events--its two constraints are status < 600 and sourcetype = access_* OR source = *.log. So the start of the transaction search that this root transaction dataset represents would be:

status < 600 sourcetype=access_* OR source=*.log | transaction...

Now we only have to define the rest of the transaction argument.
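To make the relationship between the form fields and the resulting search concrete, here is a small sketch that assembles a transaction search from Group Dataset constraints and the three optional fields. It assumes that Max Pause and Max Span correspond to the maxpause and maxspan options of the transaction command and that Group by supplies its field list. The function name and the clientip field are hypothetical, and the exact search string that Splunk generates may differ.

```python
from typing import Optional, Sequence


def build_transaction_search(
    group_constraints: Sequence[str],
    group_by: Sequence[str] = (),
    max_pause: Optional[str] = None,
    max_span: Optional[str] = None,
) -> str:
    """Join the Group Dataset constraints with a transaction command."""
    # The definition is incomplete until at least one of the three is set.
    if not group_by and max_pause is None and max_span is None:
        raise ValueError("define at least one of Group by, Max Pause, or Max Span")
    parts = ["transaction", *group_by]
    if max_pause is not None:
        parts.append(f"maxpause={max_pause}")
    if max_span is not None:
        parts.append(f"maxspan={max_span}")
    return f"{' '.join(group_constraints)} | {' '.join(parts)}"


search = build_transaction_search(
    ["status < 600", "sourcetype=access_* OR source=*.log"],
    group_by=["clientip"],  # hypothetical Group by field
    max_pause="30m",
)
print(search)
# status < 600 sourcetype=access_* OR source=*.log | transaction clientip maxpause=30m
```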

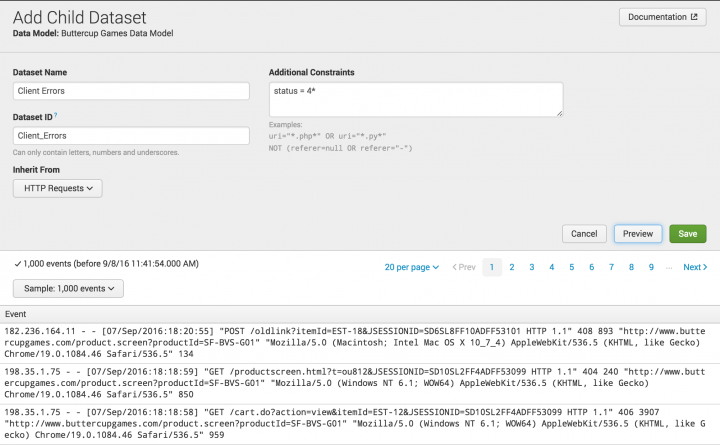

Add a child dataset to a data model

You can add child data model datasets to root data model datasets and other child data model datasets. A child dataset inherits all of the constraints and fields that belong to its parent dataset. A single dataset can be associated with multiple child datasets.

When you define a new child dataset, you give it one or more additional constraints, to further focus the dataset that the dataset represents. For example, if your Web Intelligence data model has a root event dataset called HTTP Request that captures all webserver access events, you could give it three child event datasets: HTTP Success, HTTP Error, and HTTP Redirect. Each child event dataset focuses on a specific subset of the HTTP Request dataset:

- The child event dataset HTTP Success uses the additional constraint status = 2* to focus on successful webserver access events.

- HTTP Error uses the additional constraint status = 4* to focus on failed webserver access events.

- HTTP Redirect uses the additional constraint status = 3* to focus on redirected webserver access events.
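The way a child dataset narrows its parent can be pictured as a chain of constraints collected from the root down. The sketch below models that idea only. The Dataset class is hypothetical, the root constraint sourcetype=access_* is an assumed placeholder for HTTP Request, and the parenthesized string is an illustration rather than the search that Splunk builds internally.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Dataset:
    name: str
    constraint: str
    parent: Optional["Dataset"] = None

    def effective_constraints(self) -> List[str]:
        """Constraints inherited from all ancestors, followed by this dataset's own."""
        inherited = self.parent.effective_constraints() if self.parent else []
        return inherited + [self.constraint]

    def effective_search(self) -> str:
        """All constraints combined; adjacent terms are implicitly ANDed."""
        return " ".join(f"({c})" for c in self.effective_constraints())


# Assumed root constraint; the text does not state the one HTTP Request uses.
http_request = Dataset("HTTP Request", "sourcetype=access_*")
http_success = Dataset("HTTP Success", "status = 2*", parent=http_request)

print(http_success.effective_search())
# (sourcetype=access_*) (status = 2*)
```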

Child dataset constraints cannot include search macros. Searches that reference child datasets with search macros in their constraints will fail.

The addition of fields beyond those that are inherited from the parent dataset is optional. For more information about field definition, see Manage Dataset fields with the Data Model Editor.

To add a child dataset to your data model, select the parent dataset in the left-hand dataset hierarchy, click Add Dataset in the Data Model Editor, and select Child. This takes you to the Add Child Dataset page.

Give the child dataset a Dataset Name and Dataset ID.

The Dataset Name field can accept any character except asterisks. It can also accept blank spaces between characters. It's what you'll see on the Choose a Dataset page and other places where data model datasets are listed.

The Dataset ID must be a unique identifier for the dataset. It can contain only alphanumeric characters, underscores, or hyphens (a-z, A-Z, 0-9, _, or -), and it cannot contain spaces. After you save the Dataset ID value, you can't edit it.

After you define a Constraint for the child dataset, you can click Preview to test whether the constraints you've supplied return the kinds of events you want.

Disable strict field filtering for data model searches

When you search the contents of a data model using the datamodel command in conjunction with a <dm-search-mode> such as search, flat, or search_string, by default the search returns a strictly-filtered set of fields. It returns only default fields and fields that are explicitly identified in the constraint search that defines the data model.

You can disable this field filtering behavior. When it is disabled, the data model search returns all fields related to the data model, including fields inherited from parent data models, fields extracted at search time, calculated fields, and fields derived from lookups.

Disable data model field filtering for an individual search

When you write a datamodel search, you can add strict_fields=false as an argument for the datamodel command. This disables field filtering for the search. When you run the search, it returns all fields related to the data model.

See the datamodel topic in the Search Reference.

Disable field filtering for all searches of a specific data model

If you have .conf file access for your deployment, you can add strict_fields=false as a setting to the stanza for a specific data model. After you do this, your searches of the data model with the datamodel or from commands return all fields related to the data model.

If you want to enable field filtering for a data model that has strict_fields=false set in datamodels.conf, add strict_fields=true to your SPL when you search it with the datamodel command.

When you disable field filtering for a data model in datamodels.conf, field filtering is also disabled for | from searches of the data model.
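The interaction between the per-search argument and the datamodels.conf setting can be summarized as a small precedence rule. The sketch below encodes only what this section states: filtering is strict by default, the .conf setting applies to both datamodel and from searches, and a strict_fields argument on the datamodel command takes priority over the .conf setting. The function name is hypothetical, and treating the argument as datamodel-only is an assumption, because this section does not describe a strict_fields argument for from.

```python
from typing import Optional


def effective_strict_fields(
    command: str,
    conf_value: Optional[bool] = None,
    search_arg: Optional[bool] = None,
) -> bool:
    """Return True if the search returns the strictly filtered field set.

    command: "datamodel" or "from".
    conf_value: strict_fields in the data model's datamodels.conf stanza, if set.
    search_arg: strict_fields passed in the SPL, if any.
    """
    # Assumption: only the datamodel command honors the per-search argument.
    if command == "datamodel" and search_arg is not None:
        return search_arg
    if conf_value is not None:
        return conf_value
    return True  # strict filtering is the default


print(effective_strict_fields("datamodel"))                                     # True
print(effective_strict_fields("from", conf_value=False))                        # False
print(effective_strict_fields("datamodel", conf_value=False, search_arg=True))  # True
```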

Set a tag whitelist for better data model search performance

When the Splunk software processes a search that includes tags, it loads all of the tags defined in tags.conf by design. This means that data model searches that use tags can suffer from reduced performance.

Configure a tag whitelist for the following types of data models:

- Data models that include tags in their constraint searches.

- Data models that are commonly referenced in searches that also include tags in their search strings.

Set up tag whitelists for data models

If you have .conf file access you define tag whitelists for your data models by setting tags_whitelist in their datamodels.conf stanzas.

Configure tags_whitelist with a comma-separated list of all of the tags that you want the Splunk software to process. The list must include all of the tags in the constraint searches for the data model and any additional tags that you commonly use in searches that reference the data model.

If you use the Splunk Common Information Model Add-on

Use the setup page for the Common Information Model (CIM) Add-on to edit the tag whitelists of CIM data models. See Set up the Splunk Common Information Model Add-on in Common Information Model Add-on Manual.

How tag whitelists work

When you run a search that references a data model with a tag whitelist, the Splunk software only loads the tags identified in that whitelist. This improves the performance of the search, because the tag whitelist prevents the search from loading all the tags in tags.conf.

The tags_whitelist setting does not validate to ensure that the tags it lists are present in the data model it is associated with.

If you do not configure a tag whitelist for a data model, the Splunk software attempts to optimize out unnecessary tags when you use that data model in searches. Data models that do not have tag fields in their constraint searches cannot use the tags_whitelist setting.

A tag whitelist example

You have a data model named Network_Traffic whose constraint searches include the network and communicate tags. When you run a search against the Network_Traffic data model, the Splunk software processes both of those tags, but it also processes the other 234 tags that are configured in the tags.conf file for your deployment.

In addition, your most common searches against Network_Traffic include the destination tag. For example, a typical Network_Traffic search might be as follows:

| datamodel network_traffic search | search tag=destination | stats count

In this case, you can set tags_whitelist = network, communicate, destination for the Network_Traffic stanza in datamodels.conf.

After you do this, any search you run against the Network_Traffic data model only loads the network, communicate, and destination tags. The rest of the tags in tags.conf are not factored into the search. This optimization should cause the search to complete faster than it would if you had not set tags_whitelist for Network_Traffic.
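The whitelist in this example is the union of the tags in the constraint searches and the tags you commonly add in searches. A short sketch of that assembly follows, along with the stanza text it would produce for datamodels.conf. The helper names are hypothetical, and the stanza layout assumes only what the example states: a stanza named for the data model that contains a tags_whitelist setting.

```python
from typing import Iterable, List


def build_tags_whitelist(
    constraint_tags: Iterable[str],
    common_search_tags: Iterable[str],
) -> str:
    """Combine both tag sets into a comma-separated list without duplicates."""
    ordered: List[str] = []
    for tag in [*constraint_tags, *common_search_tags]:
        if tag not in ordered:
            ordered.append(tag)
    return ", ".join(ordered)


def render_stanza(data_model: str, whitelist: str) -> str:
    """Render the datamodels.conf stanza text for one data model."""
    return f"[{data_model}]\ntags_whitelist = {whitelist}\n"


whitelist = build_tags_whitelist(["network", "communicate"], ["destination"])
print(render_stanza("Network_Traffic", whitelist))
# [Network_Traffic]
# tags_whitelist = network, communicate, destination
```

Because tags_whitelist is not validated against the data model, a helper like this does not catch a misspelled tag. It only keeps the two sources of tags in one place.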

Some best practices for data model design

It can take some trial and error to determine the data model designs that work for you. Here are some tips that can get you off to a running start.

- Use root event datasets and root search datasets that only use streaming commands. This takes advantage of the benefits of data model acceleration.

- When you define constraints for a root event dataset or define a search for a root search dataset that you will accelerate, include the index or indexes it is selecting from. Data model acceleration efficiency and accuracy is improved when the data model is directed to search a specific index or set of indexes. If you do not specify indexes, the data model searches over all available indexes.

- Avoid circular dependencies in your dataset definitions. Circular dependencies occur when a dataset constraint definition includes fields that are not auto-extracted or inherited from a parent dataset. Circular dependencies can lead to a variety of problems including, but not limited to, invalid search results.

- Minimize dataset hierarchy depth. Constraint-based filtering is less efficient deeper down the tree.

- Use field flags to selectively expose small groups of fields for each dataset. You can expose and hide different fields for different datasets. A child dataset can expose an entirely different set of fields than those exposed by its parent. Your Pivot users benefit from this selection because they do not have to deal with a bewildering array of fields whenever they set out to make a pivot chart or table. Instead, they see only those fields that make sense in the context of the dataset they've chosen.

- Reverse-engineer your existing dashboards and searches into data models. This can be a way to quickly get started with data models. Dashboards built with pivot-derived panels are easier to maintain.

- When designing a new data model, first try to understand what your Pivot users hope to be able to do with it. Work backwards from there. The structure of your model should be determined by your users' needs and expectations.


This documentation applies to the following versions of Splunk® Enterprise: 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
