Overview of parallel reduce search processing

High-cardinality searches are searches that must match, filter, and aggregate extremely large numbers of unique field values. User IDs, session IDs, and telephone numbers are examples of fields that tend to be high in cardinality. Searches that compute aggregates over high-cardinality fields can be slow to complete. Parallel reduce search processing helps these high-cardinality searches in your Splunk platform deployment complete faster.

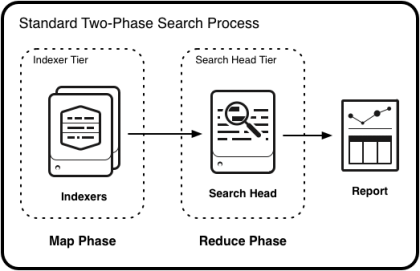

In a typical distributed search process, there are two broad search processing phases:

A map phase

- The map phase takes place across the indexers in your deployment. In the map phase, the indexers locate event data that matches the search query and sort it into field-value pairs. When the map phase is complete, indexers send the results to the search head for the reduce phase.

A reduce phase

- The reduce phase follows the map phase and takes place on the search head. During the reduce phase, the search head processes the results through the commands in your search and aggregates them to produce a final result set.

The following diagram illustrates the standard two-phase distributed search process.

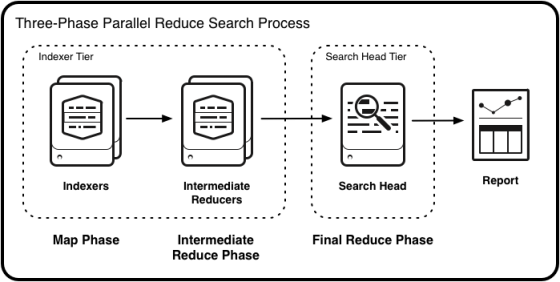

The parallel reduce process inserts an intermediate reduce phase into the map-reduce paradigm, making it a three-phase map-reduce-reduce operation. In this intermediate reduce phase, a subset of your indexers serves as intermediate reducers. The intermediate reducers divide the mapped results among themselves and perform reduce operations on those results for certain supported search commands. When the intermediate reducers complete their work, they send their results to the search head, where the final reduction and aggregation operations take place. Because reduction work that the search head would otherwise do alone is spread across several indexers in parallel, high-cardinality searches that aggregate large numbers of search results can complete faster.
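The following Python sketch is a conceptual illustration of the difference between the two-phase and three-phase processes. It is not how the Splunk platform implements parallel reduce. The event data, the function names, the choice of a count aggregation, and the hash-based assignment of field values to reducers are all assumptions made for illustration.

```python
from collections import Counter
from zlib import crc32

# Hypothetical events held by three indexers (illustrative data only).
indexer_events = [
    [{"user_id": "u1"}, {"user_id": "u2"}, {"user_id": "u1"}],
    [{"user_id": "u2"}, {"user_id": "u3"}],
    [{"user_id": "u1"}, {"user_id": "u3"}, {"user_id": "u3"}],
]


def map_phase(events, field):
    """Map phase (each indexer): count matching events per field value."""
    return Counter(event[field] for event in events if field in event)


def intermediate_reduce(mapped, num_reducers):
    """Intermediate reduce phase (a subset of indexers).

    Each reducer merges the partial counts for the field values assigned
    to it. The crc32-based assignment is an assumption of this sketch.
    """
    reducers = [Counter() for _ in range(num_reducers)]
    for partial in mapped:
        for value, count in partial.items():
            # A stable hash sends a given field value to the same reducer.
            reducers[crc32(value.encode()) % num_reducers][value] += count
    return reducers


def final_reduce(partials):
    """Final reduce phase (search head): merge partial results."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return dict(total)


mapped = [map_phase(events, "user_id") for events in indexer_events]

# Two-phase process: the search head merges every mapped result itself.
two_phase = final_reduce(mapped)

# Three-phase process: intermediate reducers pre-merge disjoint sets of
# field values, so the search head only combines their outputs.
reduced = intermediate_reduce(mapped, num_reducers=2)
three_phase = final_reduce(reduced)

assert two_phase == three_phase
```

In this sketch, both paths produce the same counts. The difference is where the merging work happens: in the three-phase path, each intermediate reducer handles a disjoint set of field values, so the search head receives results that are already partly aggregated.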

The following diagram illustrates the three-phase parallel reduce search process.

Performance considerations

Parallel reduce search processes typically add some processing load to the indexers while reducing the load on the search head. If you run parallel reduce searches on an indexer cluster that is already overloaded, the searches might perform more slowly than expected.

Parallel reduce prerequisites

To enable parallel reduce search processing, you need the following prerequisites in place:

| Prerequisite | Details | For more information |
|---|---|---|
| A distributed search environment. | Parallel reduce search processing requires a distributed search deployment architecture. | See About distributed search. |
| An environment where the indexers are at a single site. | Parallel reduce search processing is not site-aware. Do not use it if your indexers are in a multisite indexer cluster, or if you have non-clustered indexers spread across several sites. | |
| Splunk platform version 8.2.0 or later for all participating machines. | Upgrade all Splunk instances that participate in the parallel reduce process to version 8.2.0 or later. Participating instances include all indexers and search heads. | See How to upgrade Splunk Enterprise in the Installation Manual. |
| Internal search head data forwarded to the indexer layer. | The parallel reduce search process ignores all data on the search head. If you plan to run parallel reduce searches, the best practice is to forward all search head data to the indexer layer. | See Best Practice: Forward search head data to the indexer layer. |
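If you track the versions of your instances in an inventory, you can check the version prerequisite with a short script. The following Python sketch assumes a hypothetical inventory that maps instance names to version strings. The instance names, the versions, and the helper functions are made up for illustration and are not part of any Splunk tool. The sketch compares versions as tuples of integers because plain string comparison would order 9.0.10 before 9.0.9.

```python
def parse_version(text):
    """Turn a dotted version string such as '8.2.0' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Minimum version named in the prerequisites table.
MINIMUM_VERSION = parse_version("8.2.0")

# Hypothetical inventory of participating search heads and indexers.
instances = {
    "sh1": "9.0.10",
    "idx1": "8.2.0",
    "idx2": "8.1.5",
}


def below_minimum(inventory, minimum=MINIMUM_VERSION):
    """Return the names of instances that still need an upgrade."""
    return sorted(
        name
        for name, version in inventory.items()
        if parse_version(version) < minimum
    )


outdated = below_minimum(instances)
if outdated:
    print("Upgrade before enabling parallel reduce:", ", ".join(outdated))
```

With this sample inventory, only the hypothetical indexer idx2 is reported, because every participating indexer and search head must run version 8.2.0 or later.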

Next steps

Learn how to configure your deployment for parallel reduce search processing. See Configure parallel reduce search processing.


This documentation applies to the following versions of Splunk® Enterprise: 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.4.0, 9.4.1
