Identify the Amazon S3 data that you want to search

To get started with Federated Search for Amazon S3, you must have data that you want to search in an Amazon S3 location. An Amazon S3 location can be an Amazon S3 bucket or a folder at the end of a file path within an Amazon S3 bucket.

For more information about getting data into Amazon S3, search for "Creating, configuring, and working with Amazon S3 buckets" and "Uploading, downloading, and working with objects in Amazon S3" in the Amazon Simple Storage Service User Guide.

If you want to search several distinct datasets, set up a separate Amazon S3 location for each dataset.

Keep track of the Amazon S3 locations you identify. You need them when you create AWS Glue Data Catalog tables, and again when you define your federated provider for Federated Search for Amazon S3.

Amazon S3 locations are file paths

Amazon S3 locations are Amazon S3 file paths. To be valid for Federated Search for Amazon S3, your Amazon S3 locations must end in a folder. File paths that end in file objects are not valid for Federated Search for Amazon S3.

For example, this is a valid Amazon S3 location, as long as all of the data it contains shares the same data format and compression type.

s3://bucket1/path1/my_csv_data/

This Amazon S3 location, on the other hand, is invalid, because it terminates in a file object.

s3://bucket1/path1/my_csv_data/my_csv.csv
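If you have many candidate locations, a quick string check can catch paths that end in a file object before you build AWS Glue tables. The following is a minimal sketch under an assumption of this example, not a rule published by AWS or Splunk: a location "ends in a folder" when its path ends with "/" or with the "/*" wildcard form used elsewhere on this page. Amazon S3 has no true folders, so the hypothetical is_valid_s3_location function only inspects the URL and makes no AWS calls.

```python
from urllib.parse import urlparse


def is_valid_s3_location(location: str) -> bool:
    """Heuristically check that an S3 URL points at a folder, not a file object.

    Assumption: a folder-style location ends with "/" or "/*". A bare bucket
    URL such as "s3://my-bucket" is treated as the bucket root.
    """
    parsed = urlparse(location)
    # Require the s3:// scheme and a bucket name.
    if parsed.scheme != "s3" or not parsed.netloc:
        return False
    path = parsed.path or "/"
    return path.endswith("/") or path.endswith("/*")
```

With the two example paths above, the first returns True and the second returns False.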

Amazon S3 location data requirements

To be searchable by Federated Search for Amazon S3, an Amazon S3 location must contain file objects that have the same data format and compression type.

The following table lists the data types and formats supported by AWS Glue tables.

| Data type | Data formats |
|---|---|
| Structured data | |
| Semi-structured and unstructured data | |

For more information about supported compression types for Federated Search for Amazon S3, see About Federated Search for Amazon S3.

What is new-line JSON?

If you want to search an Amazon S3 location that contains JSON data, that JSON data must use new-line formatting to be valid for Federated Search for Amazon S3. AWS Glue cannot create searchable AWS Glue tables from locations that contain array-formatted JSON.

| JSON type | Notes | Example |
|---|---|---|
| New-line JSON | | {"date":"2023-01-01", "status":"404"} \n {"date":"2023-01-02", "status":"200"} \n {"date":"2023-01-03", "status":"200"} \n ... |
| Array-formatted JSON | | [ {"date":"2023-01-01", "status":"200"}, {"date":"2023-01-02", "status":"401"}, ... ] |
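If some of your data is array-formatted, you can rewrite it as new-line JSON before you upload it. The following minimal sketch uses only the Python standard library. It assumes that each element of the top-level array is one JSON object (one record) and that the whole file fits in memory. The function names are hypothetical, and the sketch does not handle compression, so decompress and recompress files such as GZIP archives separately.

```python
import json


def array_json_to_ndjson(text: str) -> str:
    """Convert an array-formatted JSON document into new-line JSON.

    Each element of the top-level array becomes one line that holds a single
    JSON value. Raises ValueError if the input is not a JSON array.
    """
    data = json.loads(text)
    if not isinstance(data, list):
        raise ValueError("expected a top-level JSON array")
    # Compact separators keep each record on one line.
    return "".join(json.dumps(record, separators=(",", ":")) + "\n" for record in data)


def is_ndjson(text: str) -> bool:
    """Return True if every non-empty line parses as a standalone JSON object."""
    for line in text.splitlines():
        if not line.strip():
            continue
        try:
            value = json.loads(line)
        except json.JSONDecodeError:
            return False
        if not isinstance(value, dict):
            return False
    return True
```

For example, converting '[{"date":"2023-01-01", "status":"200"}, {"date":"2023-01-02", "status":"401"}]' yields two lines, one object per line, and is_ndjson returns True for the result but False for the original array.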

If you have data generated by an outdated Parquet API

Federated Search for Amazon S3 does not support Parquet data generated with a Parquet-format API version lower than 2.5.0. Federated searches over AWS Glue tables that refer to Amazon S3 locations containing such Parquet data fail with an error message that includes the phrase "hive_cursor_error: failed to read parquet file".
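To find suspect files before a search fails, you can inspect the "created_by" field that Parquet writers record in file metadata. The following is a heuristic sketch, not an official compatibility check. It assumes that files written by parquet-mr carry a string such as "parquet-mr version 1.10.1 (build ...)", and it uses the Parquet-mr 1.11.1 threshold that this page recommends for regenerated data. The mapping from parquet-mr versions to Parquet-format versions is not stated here, so treat the "hive_cursor_error" message as the definitive signal. The function name is hypothetical.

```python
import re
from typing import Optional

# Threshold taken from this page's recommendation to regenerate data
# with Parquet-mr 1.11.1 or higher.
MIN_PARQUET_MR = (1, 11, 1)
_PARQUET_MR = re.compile(r"parquet-mr version (\d+)\.(\d+)\.(\d+)")


def parquet_mr_is_outdated(created_by: str) -> Optional[bool]:
    """Classify a Parquet file's created_by string.

    Returns True for parquet-mr versions below 1.11.1, False for 1.11.1 or
    later, and None when the writer is not parquet-mr (unknown).
    """
    match = _PARQUET_MR.search(created_by)
    if match is None:
        return None
    version = tuple(int(part) for part in match.groups())
    return version < MIN_PARQUET_MR
```

If you have the pyarrow library, the string is available as pyarrow.parquet.ParquetFile(path).metadata.created_by for a local copy of a file.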

If you must search Parquet data that has been generated by an outdated Parquet-format API, you have two options: add a SerDe parameter to the AWS Glue table that references the incompatible Parquet data, or regenerate your Parquet data with an up-to-date Parquet API.

| Method | Details | Result |

|---|---|---|

| Add a SerDe parameter | In the AWS Glue console, find the AWS Glue table that references Parquet data generated with an outdated API, and open its table details. In the Serde properties section, add a Key of parquet.ignore.statistics and a Value of true. | After you apply the parameter, searches over an AWS Glue table based on Parquet data generated by an outdated API run without errors. However, they might complete more slowly than identical searches over AWS Glue tables based on Parquet data generated by an up-to-date API. |

| Regenerate Parquet data | Work with your AWS admin to regenerate your Parquet data with the Parquet-mr API version 1.11.1 or higher. Then create an AWS Glue table that refers to the regenerated dataset. | With the corrected Parquet data you can run federated searches over the AWS Glue table without complications. These searches might complete faster than similar searches over AWS Glue tables based on Parquet data generated by an outdated API. |
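If you manage many AWS Glue tables, you might prefer to apply the SerDe parameter from a script instead of the console. The following is a minimal sketch, assuming the boto3 AWS SDK for Python and permission to call glue.get_table and glue.update_table. The helper names are hypothetical. The key set is an assumption based on the AWS Glue TableInput shape: get_table returns read-only fields, such as DatabaseName and CreateTime, that update_table does not accept, so the sketch copies only input fields. Verify the key list against the current AWS Glue API reference before you rely on it.

```python
import copy

# Assumed subset of TableInput fields accepted by glue.update_table.
TABLE_INPUT_KEYS = {
    "Name", "Description", "Owner", "LastAccessTime", "LastAnalyzedTime",
    "Retention", "StorageDescriptor", "PartitionKeys", "ViewOriginalText",
    "ViewExpandedText", "TableType", "Parameters", "TargetTable",
}


def with_ignore_statistics(table: dict) -> dict:
    """Build a TableInput dict from a get_table()["Table"] response and add
    parquet.ignore.statistics=true to the SerDe parameters.

    The input dict is deep-copied, so the caller's data is not modified.
    """
    table_input = {k: copy.deepcopy(v) for k, v in table.items() if k in TABLE_INPUT_KEYS}
    serde = table_input.setdefault("StorageDescriptor", {}).setdefault("SerdeInfo", {})
    serde.setdefault("Parameters", {})["parquet.ignore.statistics"] = "true"
    return table_input


def apply_ignore_statistics(database: str, table_name: str) -> None:
    """Fetch one AWS Glue table, add the SerDe parameter, and write it back."""
    import boto3  # Imported here so the pure helper above works without boto3.

    glue = boto3.client("glue")
    table = glue.get_table(DatabaseName=database, Name=table_name)["Table"]
    glue.update_table(DatabaseName=database, TableInput=with_ignore_statistics(table))
```

Keeping the dictionary transformation separate from the AWS calls lets you inspect the TableInput that would be sent before you change a production table.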

For more information about these methods, review the AWS Glue documentation or the documentation of the Parquet APIs.

Guidelines for Amazon S3 location selection

A good Amazon S3 location for Federated Search for Amazon S3 meets the following conditions:

- The Amazon S3 location contains only data that you want to search.

- All data at the Amazon S3 location has the same data format and compression type.

- If the dataset that you want to search in an Amazon S3 bucket has partitions, the Amazon S3 location contains all of the partitions for that dataset.
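To check the second guideline against a candidate location, you can tally the apparent data format and compression type of every object key under it. The following minimal sketch guesses both from file suffixes only. The suffix tables and function names are hypothetical, so extend them to match your data. Compression inside Parquet files is not visible from the file name, so the sketch reports such files as uncompressed.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical suffix tables; adjust them for your data.
COMPRESSION_SUFFIXES = {".gz": "gzip", ".bz2": "bzip2"}
FORMAT_SUFFIXES = {".csv": "csv", ".tsv": "tsv", ".json": "json", ".parquet": "parquet"}


def classify(key: str) -> Tuple[str, str]:
    """Guess (format, compression) for an object key from its file suffixes."""
    name = key.rsplit("/", 1)[-1].lower()
    compression = "none"
    for suffix, codec in COMPRESSION_SUFFIXES.items():
        if name.endswith(suffix):
            compression = codec
            name = name[: -len(suffix)]  # Strip the compression suffix.
            break
    fmt = "unknown"
    for suffix, label in FORMAT_SUFFIXES.items():
        if name.endswith(suffix):
            fmt = label
            break
    return fmt, compression


def location_mix(keys: Iterable[str]) -> Counter:
    """Count (format, compression) pairs across object keys, skipping
    folder placeholder keys. A location that meets the guideline yields
    exactly one pair."""
    return Counter(classify(k) for k in keys if not k.endswith("/"))
```

To collect the keys, you could page through the results of the boto3 list_objects_v2 paginator for the location's bucket and prefix and pass each item's "Key" value to location_mix.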

In some cases, the base directory of an Amazon S3 bucket works well as an Amazon S3 location. For example, an Amazon S3 location for an AWS CloudTrail dataset might look like this:

s3://buttercupgames-cloudtrail-sample/

However, Amazon S3 buckets often contain data you don't need to search, or data that doesn't meet the data format and compression type requirements for Federated Search for Amazon S3. In such cases, you must identify the directories within your Amazon S3 buckets that meet your needs.

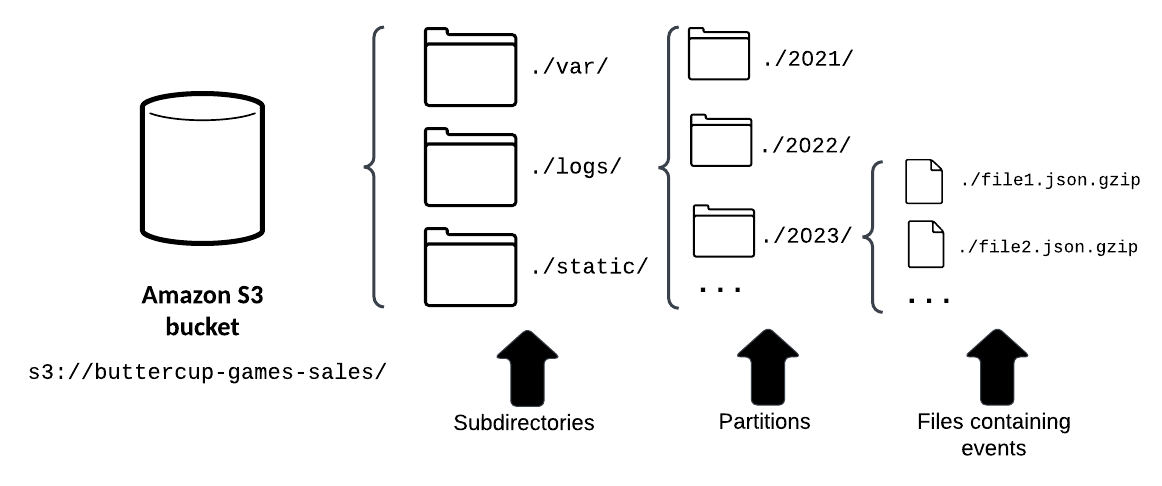

In the following example, you must identify an Amazon S3 location that you can use to search online sales log data for your company, Buttercup Games.


Don't select the base directory of s3://buttercup-games-sales as your location, because the base directory includes subdirectories of data that you have no interest in searching, such as ./var/ and ./static/. You want to search only log data, so you focus on the ./logs/ subdirectory. Each folder in the ./logs/ subdirectory contains log data stored in JSON-formatted files with GZIP compression.

When you inspect the ./logs/ subdirectory you see that the dataset it contains is divided into partitions by year. Do not cut your dataset into unnecessarily small slices by selecting a location path that ends in a specific partition folder, such as s3://buttercup-games-sales/logs/2023/*.

It's beneficial if your location contains data that is fully partitioned by time or other partition keys. Searches that filter data by partition keys are more efficient and can consume less of your data scan entitlement than searches that must run over the entire dataset.
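The following toy model illustrates why a partition filter can reduce the amount of data a search reads. It is a simplified sketch, not how Federated Search for Amazon S3 or AWS Glue actually plans or meters a search. It assumes keys laid out like the Buttercup Games example, with the year as the first folder under logs/. The function name and the sizes are hypothetical.

```python
from typing import Dict, Optional, Set


def scanned_bytes(objects: Dict[str, int], years: Optional[Set[str]] = None) -> int:
    """Sum the sizes of the objects that a search would read.

    objects maps keys such as "logs/2023/part-0.json.gz" to sizes in bytes.
    Assumption: the second path segment is the year partition. With
    years=None, the whole dataset is read; otherwise only matching partitions.
    """
    total = 0
    for key, size in objects.items():
        parts = key.split("/")
        year = parts[1] if len(parts) > 2 else None
        if years is None or year in years:
            total += size
    return total
```

With objects of 100 bytes in the 2022 partition and 250 bytes in the 2023 partition, an unfiltered search reads 350 bytes, while a search filtered to 2023 reads 250. Selecting s3://buttercup-games-sales/logs/* keeps every year available and lets the filter, rather than the location, narrow the scan.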

For this example, set the following URL as the Amazon S3 location for your Buttercup Games log data: s3://buttercup-games-sales/logs/*

This location captures all of the partitions and Buttercup Games log files that you might want to search.

Locations for common Amazon source types

Common Amazon source types like AWS CloudTrail and Amazon VPC Flow Logs have uniform log data organization in Amazon S3 buckets, which means their Amazon S3 location URLs follow predictable patterns. The following table provides the formats of the top-level Amazon S3 location URLs for these source types.

| Source type | Amazon S3 location URL format |
|---|---|
| AWS CloudTrail Logs | s3://<your_cloudtrail_logs>/AWSLogs/<your_AWS_account_number>/CloudTrail/* |
| Amazon VPC Flow Logs | s3://<your_vpcflow_logs>/AWSLogs/<your_AWS_account_number>/vpcflowlogs/* |
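Because these URLs follow fixed patterns, you can generate them instead of typing them by hand. The following minimal sketch fills in the two formats from the table above. The function and dictionary names are hypothetical. The bucket argument stands for whatever replaces <your_cloudtrail_logs> or <your_vpcflow_logs> in your environment. The only validation is that AWS account numbers are 12 digits.

```python
# URL formats copied from the table above.
LOCATION_FORMATS = {
    "cloudtrail": "s3://{bucket}/AWSLogs/{account}/CloudTrail/*",
    "vpcflowlogs": "s3://{bucket}/AWSLogs/{account}/vpcflowlogs/*",
}


def location_url(source_type: str, bucket: str, account: str) -> str:
    """Return the top-level Amazon S3 location URL for a common source type.

    Raises KeyError for an unknown source type and ValueError if the
    account number is not 12 digits.
    """
    if not (account.isdigit() and len(account) == 12):
        raise ValueError("AWS account numbers are 12 digits")
    return LOCATION_FORMATS[source_type].format(bucket=bucket, account=account)
```

For example, location_url("cloudtrail", "my-trail-bucket", "123456789012") returns s3://my-trail-bucket/AWSLogs/123456789012/CloudTrail/*.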

Create an AWS Glue table

After you identify the Amazon S3 locations that you want to search, you can create AWS Glue tables that reference the data in those locations. You create a separate AWS Glue table for each location.


This documentation applies to the following versions of Splunk Cloud Platform™: 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406
