Process a copy of data using Ingest Processor

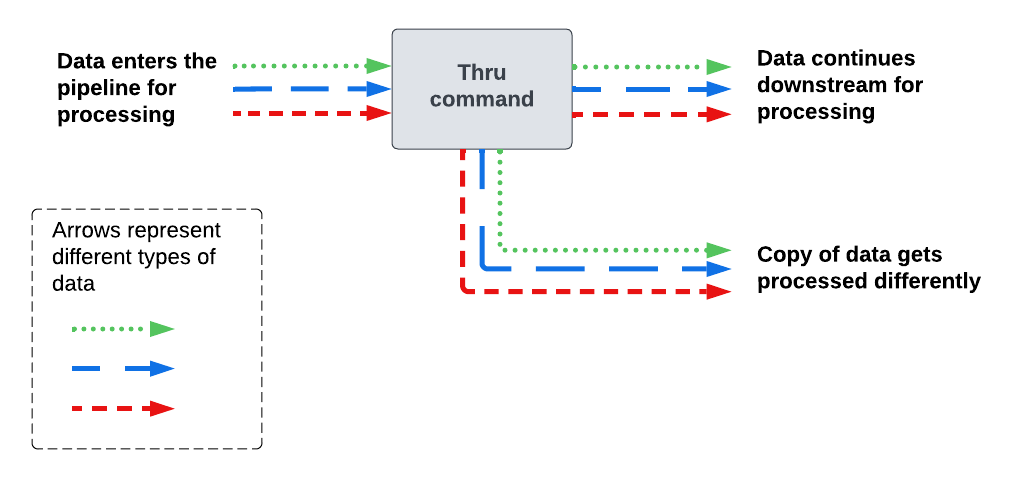

Use the thru command when you want to process or route the same set of data in two distinct ways.

The thru command does the following:

- Creates an additional path in the pipeline.

- Copies all of the incoming data in the pipeline.

- Sends the copied data into the newly created path.
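In SPL2 terms, this behavior can be sketched as the following minimal pipeline, which is the complete example at the end of this page with its processing commands removed. The names $source, $destination, and $destination2 are the variable names used in that example; the comments are added here for illustration and assume the SPL2 // line-comment syntax is accepted in your editor.

```spl2
$pipeline = | from $source
| thru [
    // New path: receives a copy of all incoming data.
    | into $destination2
]
// Original path: the same data continues to the first destination.
| into $destination;
```

Because no commands appear between the opening square bracket and the into command, both destinations receive identical data.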

The following diagram shows how the thru command sends data into different paths in the pipeline.

Add the thru command to a pipeline

- In the Actions section of the pipeline builder, select the plus icon and then select Clone and route data.

  The pipeline builder adds the following commands to your pipeline:

  | thru [ | into $destination2 ]

- (Optional) In the SPL2 editor, in the area between the opening square bracket ( [ ) and the | into $destination2 command, enter one or more SPL2 commands for processing the copied data in the newly created pipeline path. Each command must be delimited by a pipe ( | ). If you don't want to make any changes to the copied data, you can skip this step.

- In the Actions section, select Send data to $destination2.

- Select the destination that you want to send the data to, and then select Apply.

- (Optional) Select the Preview Pipeline icon to generate a preview that shows what your data looks like when it passes through the pipeline. In the preview results panel, use the drop-down list to select the pipeline path that you want to preview.

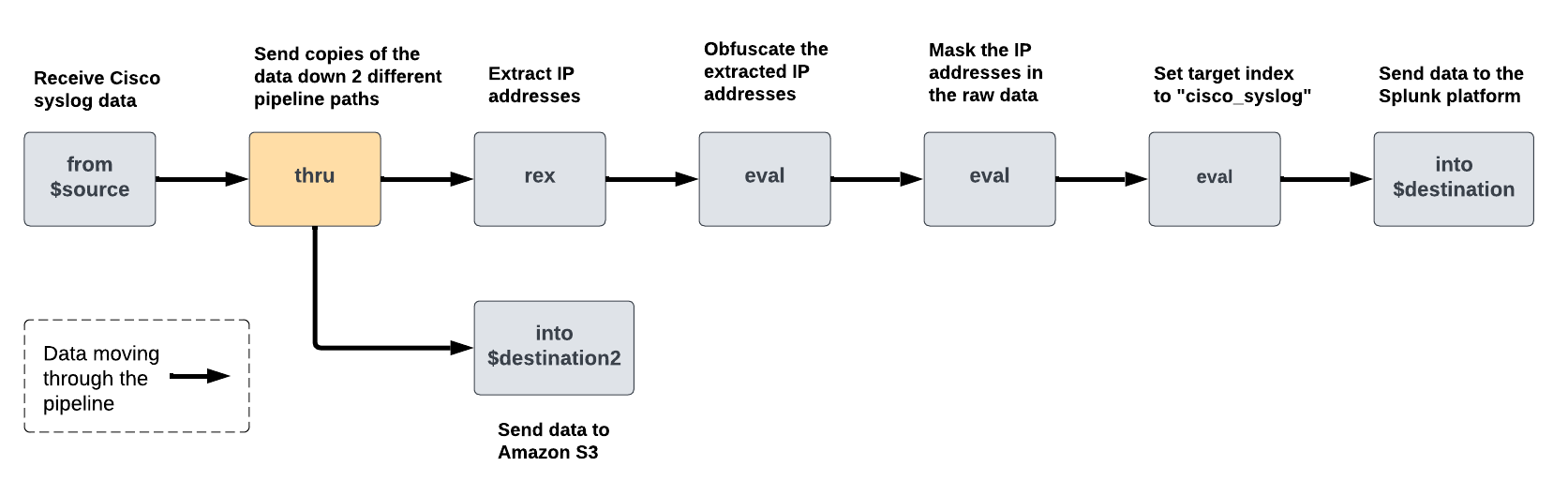
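As a sketch of the optional processing step in the procedure above, the following pipeline changes only the copied data. The regular expression /password=[^ ]*/ and the replacement text are hypothetical values chosen for illustration; the eval command and replace function are used the same way as in the example later on this page. The comments assume the SPL2 // line-comment syntax is accepted in your editor.

```spl2
$pipeline = | from $source
| thru [
    // Runs only on the copied data. Pattern and replacement are illustrative.
    | eval _raw = replace(_raw, /password=[^ ]*/, "password=****")
    | into $destination2
]
// The original path is not affected by the commands inside the brackets.
| into $destination;
```

In this arrangement, $destination2 would receive the masked copy while $destination receives the data as it arrived.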

Example: Create backup copies of logs before processing them

In this example, the Ingest Processor is receiving Cisco syslog data such as the following:

<13>Jan 09 01:54:40 10.10.232.91 : %ASA-3-505016: Module Sample_prod_id3 in slot 1 application changed from: sample_app2 version 1.1.0 state Sample_state1 to: Sample_server_name2 1.1.0 state Sample_state1.

<19>Jan 09 01:54:40 10.10.136.129 : %ASA-1-505015: Module Sample_prod_id2 in slot 1 , application up sample_app2 , version 1.1.0

<91>Jan 09 01:54:40 10.10.144.67 : %FTD-5-505012: Module Sample_prod_id1 in slot 1 , application stopped sample_app1 , version 2.2.1

<101>Jan 09 01:54:40 10.10.219.51 : %FTD-1-505011: Module 10.10.94.98 , data channel communication is UP.

Assume that you want to do the following:

- Make an unaltered backup copy of this data and send it to an Amazon S3 bucket.

- In the other copy of the data, obfuscate the IP addresses so that they aren't directly human-readable.

- Send the obfuscated logs to a Splunk index named cisco_syslog.

You can achieve this by creating a pipeline that uses the thru command to immediately send a copy of the received data to an Amazon S3 destination, and then obfuscates the IP addresses and assigns an appropriate index value to the logs. The following diagram shows the commands that this pipeline would contain and how the data would get processed as it moves through the pipeline:

To create this pipeline, do the following:

- On the Pipelines page, select New pipeline. Follow the on-screen instructions to define a partition, optionally enter sample data, and select data destinations for metrics and non-metrics data. Set the non-metrics data destination to the Splunk platform deployment that you want to send obfuscated logs to.

  After you complete the on-screen instructions, the pipeline builder displays the SPL2 statement for your pipeline.

- Add a thru command to send an unaltered copy of the received data to an Amazon S3 bucket.

- (Optional) Select the Preview Pipeline icon to generate a preview that shows what your data looks like when it passes through the pipeline. In the preview results panel, confirm that you are able to choose between $destination and $destination2 in the drop-down list, and that the same data is displayed in both cases.

- Extract the IP addresses from the logs into a field named ip_address.

  - In the Actions section, select the plus icon and then select Extract fields from _raw.

  - Select Insert from library, and then select IPv4WithOptionalPort.

  - In the Regular expression field, change the name of the capture group from IPv4WithOptionalPort to ip_address. The updated regular expression looks like this:

    (?P<ip_address>(((?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)(?:\.(?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)){3}))(?::(?:[1-9][0-9]*))?)

  - Select Apply.

  The pipeline builder adds the following rex command to your pipeline:

  | rex field=_raw /(?P<ip_address>(((?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)(?:\.(?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)){3}))(?::(?:[1-9][0-9]*))?)/

- Obfuscate the IP addresses.

  - In the Actions section, select the plus icon and then select Compute hash of.

  - In the Compute hash of a field dialog box, configure the following options and then select Apply.

    | Option name | Enter or select the following |
    | --- | --- |
    | Source field | ip_address |
    | Hashing algorithm | SHA-256 |
    | Target field | ip_address |

  The pipeline builder adds the following eval command to your pipeline:

  | eval ip_address = sha256(ip_address)

  The values in the ip_address field are now obfuscated, but the original IP addresses are still visible in the _raw field.

- Mask the IP addresses in the _raw field.

  - In the Actions section, select the plus icon and then select Mask values in _raw.

  - In the Mask using regular expression dialog box, configure the following options and then select Apply.

  The pipeline builder adds the following eval command to your pipeline:

  | eval _raw=replace(_raw, /(((?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)(?:\.(?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)){3}))(?::(?:[1-9][0-9]*))?/, "x.x.x.x")

  The IP addresses in the _raw field are replaced by x.x.x.x.

- Send these processed logs to an index named cisco_syslog.

  - In the Actions section, select the plus icon and then select Target index.

  - Select Specify index for all events.

  - In the Index name field, enter cisco_syslog.

  - Select Apply.

  The pipeline builder adds the following eval command to your pipeline:

  | eval index="cisco_syslog"

You now have a pipeline that sends an unaltered copy of the data to an Amazon S3 bucket, and then sends a processed copy of the data to an index. The complete SPL2 statement of the pipeline looks like this:

$pipeline = | from $source | thru [

| into $destination2

]

| rex field=_raw /(?P<ip_address>(((?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)(?:\.(?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)){3}))(?::(?:[1-9][0-9]*))?)/

| eval ip_address = sha256(ip_address)

| eval _raw=replace(_raw, /(((?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)(?:\.(?:2(?:5[0-5]|[0-4][0-9])|[0-1][0-9][0-9]|[0-9][0-9]?)){3}))(?::(?:[1-9][0-9]*))?/, "x.x.x.x")

| eval index = "cisco_syslog"

| into $destination;

See also

For information about other ways to route data, see Routing data in the same Ingest Processor pipeline to different actions and destinations.


This documentation applies to the following versions of Splunk Cloud Platform™: 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408 (latest FedRAMP release), 9.3.2411
