Accelerate data models

Data model acceleration is a tool that you can use to speed up data models that represent extremely large datasets. After acceleration, pivots based on accelerated data model datasets complete more quickly than they did before, as do reports and dashboard panels that are based on those pivots.

Data model acceleration does this with the help of the High Performance Analytics Store functionality, which builds data summaries behind the scenes in a manner similar to that of report acceleration. Like report acceleration summaries, data model acceleration summaries are easy to turn on and turn off, and are stored on your indexers parallel to the index buckets that contain the events that are being summarized.

This topic covers:

- The differences between data model acceleration, report acceleration, and summary indexing.

- How you turn on persistent acceleration for data models.

- How Splunk software builds data model acceleration summaries.

- How you can search data model acceleration summaries with the tstats command.

- Advanced configurations for persistently accelerated data models.

This topic also explains ad hoc data model acceleration. Splunk software applies ad hoc data model acceleration whenever you build a pivot with an unaccelerated dataset. It is even applied to transaction-based datasets and search-based datasets that use transforming commands, which can't be accelerated in a persistent fashion. However, any acceleration benefits you obtain are lost the moment you leave the Pivot Editor or switch datasets during a session with the Pivot Editor. These disadvantages do not apply to "persistently" accelerated datasets, which will always load with acceleration whenever they're accessed via Pivot. In addition, unlike "persistent" data model acceleration, ad hoc acceleration is not applied to reports or dashboard panels built with Pivot.

How data model acceleration differs from report acceleration and summary indexing

This is how data model acceleration differs from report acceleration and summary indexing:

- Report acceleration and summary indexing speed up individual searches, on a report by report basis. They do this by building collections of precomputed search result aggregates.

- Data model acceleration speeds up reporting for the entire set of fields that you define in a data model and which you and your Pivot users want to report on. In effect it accelerates the dataset represented by that collection of fields rather than a particular search against that dataset.

What is a high-performance analytics store?

Data model acceleration summaries are composed of multiple time-series index files, which have the .tsidx file extension. Each .tsidx file contains records of the indexed field::value combos in the selected dataset and all of the index locations of those field::value combos. It's these .tsidx files that make up the high-performance analytics store. Collectively, the .tsidx files are optimized to accelerate a range of analytical searches involving the set of fields defined in the accelerated data model.

An accelerated data model's high-performance analytics store spans a summary range. This is a range of time that you select when you activate acceleration for the data model. When you run a pivot on an accelerated dataset, the pivot's time range must fall at least partly within this summary range in order to get an acceleration benefit. For example, if you have a data model that accelerates the last month of data but you create a pivot using one of this data model's datasets that runs over the past year, the pivot will initially only get acceleration benefits for the portion of the search that runs over the past month.

The .tsidx files that make up a high-performance analytics store for a single data model are always distributed across one or more of your indexers. This is because Splunk software creates .tsidx files on the indexer, parallel to the buckets that contain the events referenced in the file and which cover the range of time that the summary spans.

The high-performance analytics store created through persistent data model acceleration is different from the summaries created through ad hoc data model acceleration. Ad hoc summaries are always created in a dispatch directory at the search head.

See About ad hoc data model acceleration.

Turn on persistent acceleration for a data model

See Managing Data Models to learn how to activate data model acceleration.

Data model acceleration caveats

There are a number of restrictions on the kinds of data model datasets that can be accelerated.

- Datasets can only be accelerated if they contain at least one root event hierarchy or one root search hierarchy that only includes streaming commands. Dataset hierarchies based on root search datasets that include nonstreaming commands and root transaction datasets are not accelerated.

- Pivots that use unaccelerated datasets fall back to _raw data, which means that they initially run more slowly. However, they can receive some acceleration benefit from ad hoc data model acceleration. See About ad hoc data model acceleration.

- Data model acceleration is most efficient if the root event datasets and root search datasets being accelerated include in their initial constraint search the index(es) that Splunk software should search over. A single high-performance analytics store can span across several indexes in multiple indexers. If you know that all of the data that you want to pivot on resides in a particular index or set of indexes, you can speed things up by telling Splunk software where to look. Otherwise the Splunk software wastes time accelerating data that is not of use to you.

After you turn on acceleration for a data model

After you activate persistent acceleration for your data model, the Splunk software begins building a data model acceleration summary for the data model that spans the summary range that you've specified. Splunk software creates the .tsidx files for the summary in indexes that contain events that have the fields specified in the data model. It stores the .tsidx files parallel to their corresponding index buckets in a manner identical to that of report acceleration summaries.

After the Splunk software builds the data model acceleration summary, it runs scheduled searches on a 5 minute interval to keep it updated. Every 30 minutes, the Splunk software removes old, outdated .tsidx summary files. You can adjust these intervals in datamodels.conf and limits.conf, respectively.

A few facts about data model acceleration summaries:

- Each bucket in each index in a Splunk deployment can have one or more data model acceleration summary .tsidx files, one for each accelerated data model for which it has relevant data. These summaries are created as data is collected.

- Summaries are restricted to a particular search head (or search head cluster GUID) to account for different extractions that may produce different results for the same search string, unless you have set up your Splunk platform deployment to share data model acceleration summaries among search heads or search head clusters. See Share data model acceleration summaries among search heads.

- You can only accelerate data models that you have shared to all users of an app or shared globally to all users of your Splunk deployment. You cannot accelerate data models that are private. This prevents individual users from taking up disk space with private data model acceleration summaries.

If necessary, you can configure the location of data model acceleration summaries via indexes.conf.

About the summary range

Data model acceleration summary ranges span an approximate range of time. At times, a data model acceleration summary can have a store of data that slightly exceeds its summary range, but the summary never fails to meet that range, except during the period when it is first being built.

When Splunk software finishes building a data model acceleration summary, its data model summarization process ensures that the summary always covers its summary range. The process periodically removes older summary data that passes out of the summary range.

If you have a pivot that is associated with an accelerated data model dataset, that pivot completes fastest when you run it over a time range that falls within the summary range of the data model. The pivot runs against the data model acceleration summary rather than the source index _raw data. The summary has far less data than the source index, which means that the pivot completes faster than it does on its initial run.

If you run the same pivot over a time range that is only partially covered by the summary range, the pivot is slower to complete. Splunk software has to run at least part of the pivot search over the source index _raw data in the index, which means it must parse through a larger set of events. So it is best to set the Summary Range for a data model wide enough that it captures all of the searches you plan to run against it.

Note: There are advanced settings related to Summary Range that you can use if you have a large Splunk deployment that involves multi-terabyte datasets. In such deployments, the search required to build the initial data model acceleration summary can run too long or be resource intensive. For more information, see the subtopic Advanced configurations for persistently accelerated data models.

Summary range example

You create a data model and accelerate it with a Summary Range of 7 days. Splunk software builds a summary for your data model that approximately spans the past 7 days and then maintains it over time, periodically updating it with new data and removing data that is older than seven days.

You run a pivot over a time range that falls within the last week, and it should complete fairly quickly. But if you run the same pivot over the last 3 to 10 days it will not complete as quickly, even though this search also covers 7 days of data. Only the part of the search that runs over the last 3 to 7 days benefits by running against the data model acceleration summary. The portion of the search that runs over the last 8 to 10 days runs over raw data and is not accelerated. In cases like this, Splunk software returns the accelerated results from summaries first, and then fills in the gaps at a slower speed.

Keep this in mind when you set the Summary Range value. If you always plan to run a report over time ranges that exceed the past 7 days, but don't extend further out than 30 days, you should select a Summary Range of 1 month when you set up data model acceleration for that report.
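The arithmetic in the example above can be sketched in a few lines of Python. This is only an illustrative model, not how Splunk software computes anything internally: the function name `split_pivot_range` is hypothetical, and time is measured in whole or fractional days back from now.

```python
from typing import Tuple


def split_pivot_range(earliest_days_ago: float, latest_days_ago: float,
                      summary_range_days: float) -> Tuple[float, float]:
    """Return (accelerated_days, raw_days) for a pivot time range.

    Hypothetical helper: the pivot covers the window from
    `earliest_days_ago` to `latest_days_ago`, both counted back from now.
    """
    if earliest_days_ago < latest_days_ago:
        raise ValueError("earliest_days_ago must be >= latest_days_ago")
    # Only data that is at most `summary_range_days` old is in the summary.
    covered_until = min(earliest_days_ago, summary_range_days)
    accelerated = max(0.0, float(covered_until - latest_days_ago))
    total = float(earliest_days_ago - latest_days_ago)
    return accelerated, total - accelerated


# The pivot from the example: last 3 to 10 days, Summary Range of 7 days.
accelerated, raw = split_pivot_range(10, 3, 7)
print(f"accelerated: {accelerated} days, raw: {raw} days")
```

Under this model, a pivot over the last 7 days is fully covered by a 7 day Summary Range, while the 3 to 10 day pivot is only partly covered even though both span 7 days of data.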

How the Splunk platform builds data model acceleration summaries

When you activate acceleration for a data model, Splunk software builds the initial set of .tsidx file summaries for the data model and then runs scheduled searches in the background every 5 minutes to keep those summaries up to date. Each update ensures that the entire configured time range is covered without a significant gap in data. This method of summary building also ensures that late-arriving data is summarized without complication.

Parallel summarization

Data model acceleration summaries utilize parallel summarization by default. This means that by default, Splunk software can potentially run up to three concurrent search jobs to build and maintain .tsidx summary files instead of one. Parallel summarization is useful when the time that summarization searches take to complete is longer than the schedule on which new summarization searches are launched. Because parallel summarization allows summarization searches to run concurrently rather than sequentially, this feature can reduce the amount of time it might otherwise take to build and maintain data model summaries.

There is a cost for this improvement in summarization search performance. The concurrent searches count against the total number of concurrent search jobs that your Splunk platform deployment can run. If you have several data models that require parallel summarization you may reach this concurrent search job limit.

Each data model summarization search works on a single bucket at a time before moving on to the next bucket. Summarization searches do not utilize thread level parallelization to summarize multiple buckets at once.

Manage parallel summarization with the Max Summarization Search Time, Maximum Concurrent Summarization Searches, and Summarization Period settings, which you can find under Advanced Settings when you manage data model acceleration in Splunk Web.

Prerequisites

- For more information about setting cron expressions, see Use cron expressions for alert scheduling in the Alerting Manual

- For more information about concurrent searches and concurrent search limits, see Configure the priority of scheduled reports in the Reporting Manual

Steps

- On your Splunk platform deployment, in Splunk Web, go to Settings > Data Models.

- Select Edit > Edit Acceleration for an accelerated data model that you want to edit.

- Open Advanced Settings.

- Review the Max Summarization Search Time, Maximum Concurrent Summarization Searches, and Summarization Period settings and adjust them as appropriate. See the following table for more information about these settings.

| Setting | Description | Default |
|---|---|---|
| Max Summarization Search Time | Set the maximum run time for searches that create or update the data model acceleration summary. Max Summarization Search Time is an approximate limit that causes a given summarization search to cease so that a new summarization search can begin. If a given data model acceleration search is in the middle of summarizing a bucket when it stops, the subsequent search will pick up summarizing that bucket where the first search left off. If you find that you are experiencing lags that are slowing down summarization and are making it harder to search on recent events, consider reducing the Max Summarization Search Time value. See When summary populating searches take too long to run. Reducing the Max Summarization Search Time to a value below the average runtime of your data model acceleration searches will not cause your data model acceleration summary to be created faster. | 1 hour |
| Summarization Period | Set the cron expression that the search scheduler uses to launch searches that create or update the data model acceleration summary on a regular interval, such as every five minutes or at the start of each hour. | */5 * * * * (every five minutes) |
| Maximum Concurrent Summarization Searches | Set the maximum number of summarization searches that Splunk software can run concurrently. Searches might run concurrently when the time it takes for summarization searches to complete exceeds the Summarization Period for those searches. Be careful about setting Maximum Concurrent Summarization Searches higher than 3. If you have too many summarization searches running concurrently, there can be performance impacts to the system in terms of memory, CPU, and disk read/write operations. In addition, when a significant number of data models have Maximum Concurrent Summarization Searches set to 3 or higher, automatic summarization search quota limits might be reached, which might cause data model summarization searches to be skipped. | 3 |

- Select Save to save your changes.

If your Splunk platform implementation does not use search head clustering and you find that the searches that build and maintain your data model acceleration summaries cause your implementation to reach or exceed concurrent search limits, consider lowering the Maximum Concurrent Summarization Searches setting.

Example of parallel summarization

Say there is an accelerated data model named DM1. Data model DM1 has a Summarization Period that causes the search scheduler to launch a new summary update search for DM1 every 5 minutes. DM1 has a Max Summarization Search Time of 600 seconds, or 10 minutes. For DM1, Maximum Concurrent Summarization Searches is set to 3.

| Time: Now | Time: Now + 5 minutes | Time: Now + 10 minutes |
|---|---|---|
| Currently no summarization searches are running for data model DM1. Operating on DM1's Summarization Period, the search scheduler launches a summarization search for DM1. We'll call this search Summarization Search 1. | Five minutes later, Summarization Search 1 is still running because the Max Summarization Search Time for data model DM1 is 600 seconds, or 10 minutes. The search scheduler checks to see if it can start another summarization search for data model DM1 even though one is currently in progress. The search scheduler finds that Maximum Concurrent Summarization Searches is set to 3, and only one search is currently running, so the scheduler launches a second search that we will name Summarization Search 2. | Ten minutes later, Summarization Search 1 has completed after running for 423 seconds, or just over 7 minutes. Summarization Search 2 is still running, and the search scheduler launches Summarization Search 3. |
| Summarization searches running for data model DM1: Summarization Search 1 | Summarization searches running for data model DM1: Summarization Search 1 and Summarization Search 2 | Summarization searches running for data model DM1: Summarization Search 2 and Summarization Search 3 |

As you can see, data model DM1 never quite reaches the Maximum Concurrent Summarization Searches limit, and it probably won't unless the summarization searches take much longer to complete and the administrator for DM1 raises the Max Summarization Search Time to accommodate them. However, if that is happening, the administrator might want to investigate whether there are ways to improve the scalability of data model DM1.
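The DM1 timeline can be replayed with a small simulation. This is a simplified sketch, not the scheduler's actual logic: `simulate_summarization` is a hypothetical function, and it assumes that every search after Summarization Search 1 also needs 423 seconds, which the example does not state.

```python
from typing import Dict, List


def simulate_summarization(period_s: int, max_time_s: int, max_concurrent: int,
                           run_times_s: List[int], ticks: int) -> List[List[int]]:
    """Return the search numbers running right after each scheduler tick."""
    running: Dict[int, int] = {}  # search number -> end time in seconds
    timeline: List[List[int]] = []
    next_id = 1
    for tick in range(ticks):
        now = tick * period_s
        # Drop searches that have finished by this tick.
        running = {sid: end for sid, end in running.items() if end > now}
        # Launch one new search if the concurrency limit allows it.
        if len(running) < max_concurrent and next_id <= len(run_times_s):
            # A search never runs past Max Summarization Search Time.
            duration = min(run_times_s[next_id - 1], max_time_s)
            running[next_id] = now + duration
            next_id += 1
        timeline.append(sorted(running))
    return timeline


# DM1: 5 minute period, 600 second limit, up to 3 concurrent searches.
# Assumed run time of 423 seconds for every search.
print(simulate_summarization(300, 600, 3, [423, 423, 423], ticks=3))
```

In this model the limit of 3 is reached only when searches run for more than two summarization periods, for example with a run time and Max Summarization Search Time of 1200 seconds.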

Review summary creation metrics

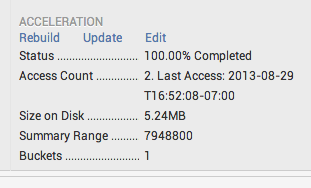

The speed of summary creation depends on the number of events involved and the size of the summary range. You can track progress towards summary completion on the Data Models management page. Find the accelerated data model that you want to inspect, expand its row, and review the information that appears under ACCELERATION.

Status tells you whether the acceleration summary for the data model is complete. If it is in Building status it will tell you what percentage of the summary is complete. Data model acceleration summaries are constantly updating with new data. A summary that is "complete" now will return to "building" status later when it updates with new data.

When the Splunk software calculates the acceleration status for a data model, it bases its calculations on the Schedule Window that you have set for the data model. However, if you have set a backfill relative time range for the data model, that time range is used to calculate acceleration status.

You might set up a backfill time range for a data model when the search that populates the data model acceleration summaries takes an especially long time to run. See Advanced configurations for persistently accelerated data models.

Verify that the Splunk platform is scheduling summary update searches

You can verify that Splunk software is scheduling searches to update your data model acceleration summaries. In log.cfg, set category.SavedSplunker=DEBUG and then watch scheduler.log for events like:

04-24-2013 11:12:02.357 -0700 DEBUG SavedSplunker - Added 1 scheduled searches for accelerated datamodels to the end of ready-to-run list

When the data model definition changes and your summaries have not been updated to match it

When you change the definition of an accelerated data model, it takes time for Splunk software to update its summaries so that they reflect this change. In the meantime, when you run Pivot searches (or tstats searches) that use the data model, it does not use the summaries that are older than the new definition, by default. This ensures that the output you get from Pivot for the data model always reflects your current configuration.

If you know that the old data is "good enough" you can take advantage of an advanced performance feature that lets the data model return summary data that has not yet been updated to match the current definition of the data model, using a setting called allow_old_summaries, which is set to false by default.

- On a search by search basis: When running tstats searches that select from an accelerated data model, set the argument allow_old_summaries=t.

- For your entire Splunk deployment: Go to limits.conf and change the allow_old_summaries parameter to true.

Data model acceleration summary size on disk

You can use the data model metrics on the Data Models management page to track the total size of a data model's summary on disk. Summaries do take up space, and sometimes a significant amount of it, so it's important that you avoid overuse of data model acceleration. For example, you may want to reserve data model acceleration for data models whose pivots are heavily used in dashboard panels.

The amount of space that a data model takes up is related to the number of events that you are collecting for the summary range you have chosen. It can also be negatively affected if the data model includes fields with high cardinality (that have a large set of unique values), such as a Name field.

If you are particularly size constrained you may want to test the amount of space a data model acceleration summary will take up by enabling acceleration for a small Summary Range first, and then moving to a larger range if you think you can afford it.

Where the Splunk platform creates and stores data model acceleration summaries

By default, Splunk software creates each data model acceleration summary on the indexer, parallel to the bucket or buckets that cover the range of time over which the summary spans, whether the buckets that fall within that range are hot, warm, or cold. If a bucket within the summary range moves to frozen status, Splunk software removes the summary information that corresponds with the bucket when it deletes or archives the data within the bucket.

By default, data model acceleration summaries reside in a predefined volume titled _splunk_summaries at the following path:

$SPLUNK_DB/<index_name>/datamodel_summary/<bucket_id>/<search_head_or_pool_id>/DM_<datamodel_app>_<datamodel_name>

This volume initially has no maximum size specification, which means that it has infinite retention.

Also by default, the tstatsHomePath parameter is specified only once as a global setting in indexes.conf. Its path is inherited by all indexes. In etc/system/default/indexes.conf:

    [global]
    [....]
    tstatsHomePath = volume:_splunk_summaries/$_index_name/datamodel_summary
    [....]

You can optionally:

- Override this default file path by providing an alternate volume and file path as a value for the tstatsHomePath parameter.

- Set different tstatsHomePath values for specific indexes.

- Add size limits to any volume (including _splunk_summaries) by setting a maxVolumeDataSizeMB parameter in the volume configuration.

See the size-based retention example at Configure size-based retention for data model acceleration summaries.

For more information about index buckets and their aging process, see How the indexer stores indexes in the Managing Indexers and Clusters of Indexers manual.

How clusters handle data model acceleration summaries

By default, indexer clusters do not replicate data model acceleration summaries. This means that only primary bucket copies have associated summaries. Under this default setup, if primacy gets reassigned from the original copy of a bucket to another (for example, because the peer holding the primary copy fails), the data model summary does not move to the peer with the new primary copy. Therefore, it becomes unavailable. It does not become available again until the next time Splunk software attempts to update the data model summary, finds that it is missing, and regenerates it.

If your peer nodes are running version 6.4 or higher, you can configure the cluster manager node so that your indexer clusters replicate data model acceleration summaries. All searchable bucket copies will then have associated summaries. This is the recommended behavior.

See How indexer clusters handle report and data model acceleration summaries, in the Managing Indexers and Clusters of Indexers manual.

Configure size-based retention for data model acceleration summaries

Do you set size-based retention limits for your indexes so they do not take up too much disk storage space? By default, data model acceleration summaries can take up an unlimited amount of disk space. This can be a problem if you are also locking down the maximum data size of your indexes or index volumes. However, you can optionally configure similar retention limits for your data model acceleration summaries.

Although data model acceleration summaries are unbounded in size by default, they are tied to raw data in your index buckets and age along with it. When summarized events pass out of cold buckets into frozen buckets, those events are removed from the related summaries.

Important: Before you attempt to configure size-based retention for your data model acceleration summaries, you should understand how to use volumes to configure limits on index size across indexes. For more information, see "Configure index size" in the Managing Indexers and Clusters of Indexers manual.

Here are the steps you take to set up size-based retention for data model acceleration summaries. All of the configurations described are made within indexes.conf.

- (Optional) If you want to have data model acceleration summary results go into volumes other than _splunk_summaries, create them.

  - If you want to use a preexisting volume that controls your indexed raw data, have that volume reference the filesystem that hosts your bucket directories, because your data model acceleration summaries will live alongside it.

  - You can also place your data model acceleration summaries in their own filesystem if you want. You can only reference one filesystem per volume, but you can reference multiple volumes per filesystem.

- Add maxVolumeDataSizeMB parameters to the volume or volumes that will be the home for your data model acceleration summary data, such as _splunk_summaries.

  - This lets you manage size-based retention for data model acceleration summary data across your indexes. When a data model acceleration summary volume reaches its maximum size, the Splunk software volume manager removes the oldest summary in the volume to make room. It leaves a "done" file behind. The presence of this "done" file prevents Splunk software from rebuilding the summary.

- Update your index definitions.

  - Set a tstatsHomePath for each index that deals with data model acceleration summary data. If you selected a volume other than _splunk_summaries in the first step, ensure that the path references that volume.

  - If you defined multiple volumes for your data model acceleration summaries, make sure that the tstatsHomePath settings for your indexes point to the appropriate volumes.
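The effect of a maxVolumeDataSizeMB limit can be pictured with a short Python model. This is a rough sketch under stated assumptions, not the volume manager's real algorithm: `trim_volume` is a hypothetical function, each summary is reduced to a name, a timestamp, and a size, and "oldest" is assumed to mean the smallest timestamp.

```python
from typing import List, Tuple

Summary = Tuple[str, int, int]  # (name, newest event time as epoch, size in MB)


def trim_volume(summaries: List[Summary], max_volume_mb: int) -> Tuple[List[str], List[str]]:
    """Remove the oldest summaries until the volume fits under its limit.

    Returns (kept_names, removed_names). Illustrative model only.
    """
    kept = sorted(summaries, key=lambda s: s[1])  # oldest first
    removed: List[str] = []
    while kept and sum(size for _, _, size in kept) > max_volume_mb:
        oldest_name = kept.pop(0)[0]
        removed.append(oldest_name)
    return [name for name, _, _ in kept], removed


# Hypothetical summaries totalling 120 MB in a volume limited to 100 MB.
volume = [("bucket_a", 1000, 40), ("bucket_b", 2000, 40), ("bucket_c", 3000, 40)]
kept, removed = trim_volume(volume, max_volume_mb=100)
print("kept:", kept, "removed:", removed)
```

Because the removed summaries are not rebuilt, searches over that older time span fall back to raw data, which is the trade-off to weigh when you pick a volume size.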

Example configuration for data model acceleration size-based retention

This example configuration sets up data size limits for data model acceleration summaries on the _splunk_summaries volume, on a default, per-volume, and per-index basis.

    ########################
    # Default settings
    ########################
    # When you do not provide the tstatsHomePath value for an index,
    # the index inherits the default volume, which gives the index a data
    # size limit of 1TB.
    [default]
    maxTotalDataSizeMB = 1000000
    tstatsHomePath = volume:_splunk_summaries/$_index_name/datamodel_summary

    #########################
    # Volume definitions
    #########################
    # Indexes with tstatsHomePath values pointing at this partition have
    # a data size limit of 100GB.
    [volume:_splunk_summaries]
    path = $SPLUNK_DB
    maxVolumeDataSizeMB = 100000

    #########################
    # Index definitions
    #########################
    [main]
    homePath = $SPLUNK_DB/defaultdb/db
    coldPath = $SPLUNK_DB/defaultdb/colddb
    thawedPath = $SPLUNK_DB/defaultdb/thaweddb
    maxMemMB = 20
    maxConcurrentOptimizes = 6
    maxHotIdleSecs = 86400
    maxHotBuckets = 10
    maxDataSize = auto_high_volume

    [history]
    homePath = $SPLUNK_DB/historydb/db
    coldPath = $SPLUNK_DB/historydb/colddb
    thawedPath = $SPLUNK_DB/historydb/thaweddb
    tstatsHomePath = volume:_splunk_summaries/historydb/datamodel_summary
    maxDataSize = 10
    frozenTimePeriodInSecs = 604800

    [dm_acceleration]
    homePath = $SPLUNK_DB/dm_accelerationdb/db
    coldPath = $SPLUNK_DB/dm_accelerationdb/colddb
    thawedPath = $SPLUNK_DB/dm_accelerationdb/thaweddb

    [_internal]
    homePath = $SPLUNK_DB/_internaldb/db
    coldPath = $SPLUNK_DB/_internaldb/colddb
    thawedPath = $SPLUNK_DB/_internaldb/thaweddb
    tstatsHomePath = volume:_splunk_summaries/_internaldb/datamodel_summary
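To see which indexes in a configuration like this one inherit the default tstatsHomePath and which override it, you could parse the file with Python's standard configparser module. The sketch below is an approximation, not how Splunk software resolves settings: `effective_tstats_home_paths` is a hypothetical helper, the embedded text is a trimmed copy of the example, and replacing `$_index_name` with the stanza name is an assumption about how that variable expands.

```python
import configparser
from typing import Dict

# Trimmed copy of the example configuration (only the relevant settings).
INDEXES_CONF = """
[default]
maxTotalDataSizeMB = 1000000
tstatsHomePath = volume:_splunk_summaries/$_index_name/datamodel_summary

[volume:_splunk_summaries]
path = $SPLUNK_DB
maxVolumeDataSizeMB = 100000

[main]
homePath = $SPLUNK_DB/defaultdb/db

[history]
homePath = $SPLUNK_DB/historydb/db
tstatsHomePath = volume:_splunk_summaries/historydb/datamodel_summary
"""


def effective_tstats_home_paths(conf_text: str) -> Dict[str, str]:
    """Map each index stanza to the tstatsHomePath it ends up with."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep setting names case-sensitive
    parser.read_string(conf_text)
    default_path = parser["default"]["tstatsHomePath"]
    paths: Dict[str, str] = {}
    for section in parser.sections():
        # Skip stanzas that are not index definitions.
        if section == "default" or section.startswith("volume:"):
            continue
        path = parser[section].get("tstatsHomePath", default_path)
        # Assumption: $_index_name expands to the name of the index stanza.
        paths[section] = path.replace("$_index_name", section)
    return paths


for index, path in effective_tstats_home_paths(INDEXES_CONF).items():
    print(f"{index}: {path}")
```

Either way, every index here lands on the _splunk_summaries volume, so the 100GB maxVolumeDataSizeMB limit applies to all of their summaries together.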

Search data model acceleration summaries

You can search the summary for a specific accelerated data model in Search with the tstats command.

tstats can sort through the full set of .tsidx file summaries that belong to your accelerated data model even when they are distributed among multiple indexes.

This can be a way to quickly run a stats-based search against a particular data model just to see if it's capturing the data you expect for the summary range you've selected.

To do this, you identify the data model using FROM datamodel=<datamodel-name>:

| tstats avg(foo) FROM datamodel=buttercup_games WHERE bar=value2 baz>5

This search returns the average of the field foo in the "Buttercup Games" data model acceleration summaries, specifically where bar is value2 and the value of baz is greater than 5.

Note: You don't have to specify the app of the data model as Splunk software takes this from the search context (the app you are in). However you cannot search an accelerated data model in App B from App A unless the data model in App B is shared globally.

Using the summariesonly argument

When you run a tstats search on an accelerated data model where the search has a time range that extends past the summarization time range of the data model, the search will generate results from the summarized data within that time range and from the unsummarized data that falls outside of that time range. This means that the search will be only partially accelerated. It can quickly pull results from the data model acceleration summary, but it slows down when it has to pull results from the "raw" unsummarized data outside of the summary.

If you do not want your tstats search to spend time pulling results from unsummarized data, use the summariesonly argument. This tstats argument ensures that the search generates results only from the TSIDX data in the data model acceleration summary. Non-summarized results are not provided.

For more about the tstats command, see the entry for tstats in the Search Reference.
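If you generate tstats searches from a script, the argument placement described in this topic can be captured in a small string builder. This is a minimal sketch: `build_tstats_search` is a hypothetical helper, it only assembles the search text (it does not run anything against Splunk software), and it assumes that the summariesonly and allow_old_summaries arguments go directly after the command name, ahead of the aggregation.

```python
def build_tstats_search(aggregation: str, datamodel: str, where: str = "",
                        summariesonly: bool = False,
                        allow_old_summaries: bool = False) -> str:
    """Assemble a tstats search string against an accelerated data model."""
    options = []
    if summariesonly:
        # Return results only from the data model acceleration summary.
        options.append("summariesonly=t")
    if allow_old_summaries:
        # Accept summaries built before the latest data model definition.
        options.append("allow_old_summaries=t")
    parts = ["| tstats", *options, aggregation, f"FROM datamodel={datamodel}"]
    if where:
        parts.append(f"WHERE {where}")
    return " ".join(parts)


# The Buttercup Games search from this topic, restricted to summarized data.
print(build_tstats_search("avg(foo)", "buttercup_games",
                          where="bar=value2 baz>5", summariesonly=True))
```

Leaving both flags at their defaults reproduces the plain search shown earlier in this section.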

Turn on multi-eval to improve data model acceleration

Searches against root-event datasets within data models iterate through many eval commands, which can be an expensive operation to complete during data model acceleration. You can improve the data model search efficiency by enabling multi-eval calculations for search in limits.conf.

    enable_datamodel_meval = <bool>
    * Allow concatenation of successively occurring evals into a single comma-separated eval during the generation of data model searches.
    * default: true

If you turned off automatic rebuilds for any accelerated data model, you will need to rebuild that data model manually after enabling multi-eval calculations. For more information about rebuilding data models, see Manage data models.

Advanced configurations for persistently accelerated data models

There are a few situations that may require you to set up advanced configurations for your persistently accelerated data models.

When summary-populating searches take too long to run

If your Splunk deployment processes an extremely large amount of data on a regular basis you may find that the initial creation of persistent data model acceleration summaries is resource intensive. The searches that build these summaries may run too long, causing them to fail to summarize incoming events. To deal with this situation, Splunk software gives you two configuration parameters that you can set through Splunk Web. These parameters are Max Summarization Search Time and Backfill Range.

You can find both of these settings by opening the Edit Acceleration window for a data model and selecting Advanced Settings.

Change the maximum period of time that a summary-populating search can run

Max Summarization Search Time causes summary-populating searches to quit after a specified amount of time has passed. After a summary-populating search stops, Splunk software runs a search to catch all of the events that have come in since the initial summary-populating search began, and then it continues updating the summary where the last summary-populating search left off.

For example, let's say you have activated acceleration for a data model, and you want its summary to retain events for the past three months. Because your organization indexes large amounts of data, the search that initially creates this summary should take about four hours to complete. Unfortunately, you can't let the search run uninterrupted for that amount of time because it might fail to summarize some of the new events that come in while that four-hour search is in process.

Max Summarization Search Time stops the first search after an hour, and a second search takes its place. This second search pulls in the new events that have come in over the past hour. It then continues running to add more events from the last three months to the summary. This second search also stops after an hour and the process repeats until the summary of the last three months of data is complete.

If you find that your summarization searches have data collection lags that make it difficult to search on recent events, adjust your summarization settings to remove those lags. Continuing with our example, let's say your data model has the default acceleration settings:

| Setting | Value |
|---|---|
| Summarization Period | Every 5 minutes |
| Maximum Concurrent Summarization Searches | 3 searches |
| Max Summarization Search Time | 3600 seconds (1 hour) - default setting |

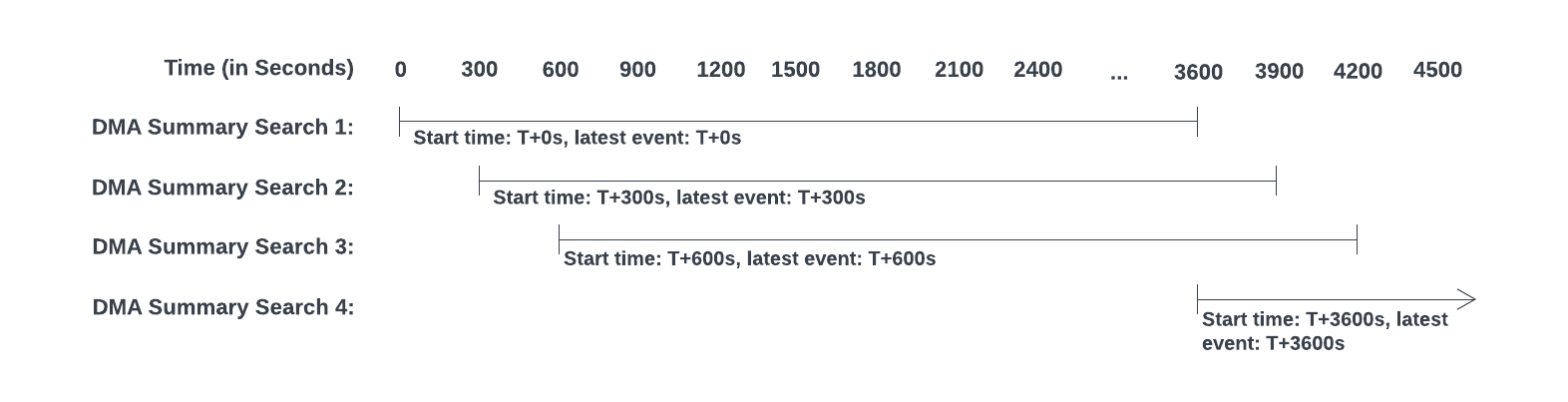

With these settings, if the data model summarization searches each run as long as or longer than the default Max Summarization Search Time of one hour, that data model would likely experience periodic lags in data collection.

The following diagram illustrates that there will be a lag of 45 minutes between the start of search 3 and the start of search 4. This is due to two facts: only 3 searches can run concurrently, and each search takes about an hour to complete. Search 4 must collect the new data that comes in during that 45-minute lag, and it can't add this new data to the summary until it completes.

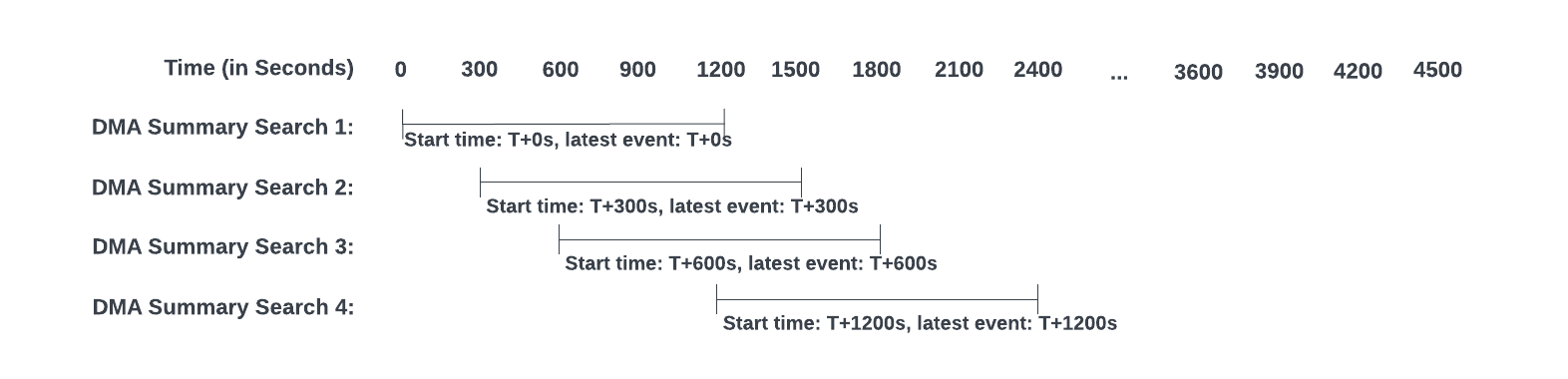

To reduce this lag, you must change the settings. You might increase Maximum Concurrent Summarization Searches, or reduce the Summarization Period, or reduce the Max Summarization Search Time.

Here is what might happen if you reduce the Max Summarization Search Time to 1200 seconds (20 minutes). The data lag is removed, making it easier to search recent data.

Note that when you reduce the data collection lag by lowering the Max Summarization Search Time to 1200, you do not have much capacity to increase the Maximum Concurrent Summarization Searches value above 3.

For more information about Max Summarization Search Time, see Parallel summarization.

Set a backfill time range that is shorter than the summary time range

If you are indexing a tremendous amount of data with your Splunk deployment and you don't want to adjust the Max Summarization Search Time setting for a slow-running summary-populating search, you have an alternative option: the Backfill Range setting.

Backfill Range creates a second "backfill time range" that you set within the summary range. Splunk software builds a partial summary that initially only covers this shorter time range. After that, the summary expands with each new event summarized until it reaches the limit of the larger summary time range. At that point the full summary is complete and events that age out of the summary range are no longer retained.

For example, say you want to set your Summary Range to 1 Month but you know that your system would be taxed by a search that built a summary for that time range. To deal with this, you set the Backfill Range to -7d to run a search that creates a partial summary that initially just covers the past week. After that limit is reached, Splunk software only adds new events to the summary, causing the range of time covered by the summary to expand. But the full summary still retains events only for one month. Once the partial summary expands to the full Summary Range of the past month, it starts dropping its oldest events, just like an ordinary data model acceleration summary does.
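The way the summary's coverage grows in this example can be sketched with a little arithmetic. The following Python sketch is an illustrative model only, not Splunk code. The function name and its parameters are hypothetical, "1 Month" is approximated as 30 days, and the model assumes that the initial backfill search finishes immediately and that new events arrive continuously.

```python
def summary_coverage_days(days_since_enabled, backfill_days=7, summary_range_days=30):
    """Approximate span of time (in days) covered by the summary.

    Hypothetical model: the summary starts out covering the backfill
    range, grows by one day for each day of newly summarized events,
    and is capped at the full summary range, after which the oldest
    events age out.
    """
    if days_since_enabled < 0:
        raise ValueError("days_since_enabled must be non-negative")
    return min(backfill_days + days_since_enabled, summary_range_days)


# Coverage on the day acceleration is turned on, then 10, 23, and 60 days later.
for day in (0, 10, 23, 60):
    print(day, summary_coverage_days(day))
```

Under these assumptions the summary covers 7 days at first, reaches the full 30-day Summary Range 23 days later, and stays at 30 days from then on.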

When you do not want persistently accelerated data models to be rebuilt automatically

By default, Splunk software automatically rebuilds persistently accelerated data models whenever it finds that those models are outdated. Data models can become outdated when the current data model search does not match the version of the data model search that was stored when the data model was created.

This can happen if the JSON file for an accelerated model is edited on disk without first disabling the model's acceleration. It can also happen when changes are made to knowledge objects that are interdependent with the data model search. For example, if the data model constraint search references an event type, and the definition of that event type changes, the constraint search will return different results than it did before the change. When the Splunk software detects this change, it rebuilds the data model.

Accelerated data models that have Automatic Rebuilds turned on might experience unbounded memory growth due to extensive search expansions, resulting in Out of Memory errors. In addition, the work of rebuilding large data model acceleration summaries each time a referenced knowledge object changes might be a burden on the system resources of your Splunk platform deployment. Turn off Automatic Rebuilds for accelerated data models that have large summaries on disk.

To turn off automatic rebuilds for a specific persistently accelerated data model, open the Edit Acceleration window for the data model and deselect Automatic Rebuilds.

When Automatic Rebuilds is turned off for an accelerated data model, you must manually initiate rebuilds of that data model. You can manually rebuild an accelerated data model through the Data Model Manager page, by expanding the row for the affected data model and selecting Rebuild.

See Manage data models.

You can search an out-of-date data model acceleration summary by adding allow_old_summaries=true to a tstats search of that summary. For more information about the allow_old_summaries argument, see the tstats reference topic in the Search Reference.

About ad hoc data model acceleration

Even when you're building a pivot that is based on a data model dataset that is not accelerated in a persistent fashion, that pivot can benefit from what we call "ad hoc" data model acceleration. In these cases, Splunk software builds a summary in a search head dispatch directory when you work with a dataset to build a pivot in the Pivot Editor.

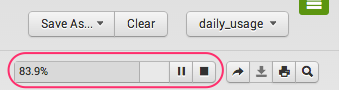

The search head begins building the ad-hoc data model acceleration summary after you select a dataset and enter the pivot editor. You can follow the progress of the ad hoc summary construction with the progress bar:

When the progress bar reads Complete, the ad hoc summary is built, and the search head uses it to return pivot results faster going forward. But this summary only lasts while you work with the dataset in the Pivot Editor. If you leave the editor and return, or switch to another dataset and then return to the first one, the search head will need to rebuild the ad hoc summary.

Ad hoc data model acceleration summaries complete faster when they collect data for a shorter range of time. You can change this range for root datasets and their children by resetting the time Filter in the Pivot Editor. See "About ad hoc data model acceleration summary time ranges," below, for more information.

Ad hoc data model acceleration works for all dataset types, including root search datasets that include transforming commands and root transaction datasets. Its main disadvantage compared with persistent data model acceleration is that the ad hoc summary is temporary. With persistent data model acceleration, the summary is always there, keeping pivot performance speedy, until acceleration is deactivated for the data model. With ad hoc data model acceleration, you have to wait for the summary to be rebuilt each time you enter the Pivot Editor.

About ad hoc data model acceleration summary time ranges

The search head always tries to make ad hoc data model acceleration summaries fit the range set by the time Filter in the Pivot Editor. When you first enter the Pivot Editor for a dataset, the pivot time range is set to All Time. If the dataset is large, this can mean that the initial pivot completes slowly as it builds the ad hoc summary behind the scenes.

When you give the pivot a time range other than All Time, the search head builds an ad hoc summary that fits that range as efficiently as possible. For any given data model dataset, the search head completes an ad hoc summary for a pivot with a short time range more quickly than it does when that same pivot has a longer time range.

The search head only rebuilds the ad hoc summary from start to finish if you replace the current time range with a new time range that has a different "latest" time. This is because the search head builds each ad hoc summary backwards, from its latest time to its earliest time. If you keep the latest time the same but change the earliest time, the search head at most collects any extra data that is required.
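The rebuild rule described above can be expressed as a small decision function. This is a minimal Python sketch of the rule as stated in this topic, not Splunk's implementation. The function name, the return labels, and the representation of a time range as an (earliest, latest) pair of epoch seconds are all assumptions made for illustration.

```python
def adhoc_summary_action(current_range, new_range):
    """Classify the work needed when the pivot time range changes.

    Each range is an (earliest, latest) tuple of comparable values,
    such as epoch seconds. Hypothetical model of the documented rule.
    """
    cur_earliest, cur_latest = current_range
    new_earliest, new_latest = new_range
    if new_latest != cur_latest:
        # The summary is built backwards from the latest time, so a
        # different latest time means starting over.
        return "full rebuild"
    if new_earliest < cur_earliest:
        # Same latest time, earlier start: only the extra span is collected.
        return "collect extra data"
    # Same latest time, and the new range lies within the existing summary.
    return "reuse existing summary"


print(adhoc_summary_action((1000, 2000), (1000, 2500)))
print(adhoc_summary_action((1000, 2000), (500, 2000)))
print(adhoc_summary_action((1000, 2000), (1500, 2000)))
```

The three calls correspond to moving the latest time, extending the earliest time further back, and narrowing the range while keeping the same latest time.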

Root search datasets and their child datasets are a special case here as they do not have time range filters in Pivot (they do not extract _time as a field). Pivots based on these datasets always build summaries for all of the events returned by the search. However, you can design the root search dataset's search string so it includes "earliest" and "latest" dates, which restricts the dataset represented by the root search dataset and its children.

How ad hoc data model acceleration differs from persistent data model acceleration

Here's a summary of the ways in which ad hoc data model acceleration differs from persistent data model acceleration:

- Ad hoc data model acceleration takes place on the search head rather than the indexer. Locating the data model acceleration process on the search head allows it to accelerate all three dataset types (event, search, and transaction).

- Splunk software creates ad hoc data model acceleration summaries in dispatch directories at the search head. It creates and stores persistent data model acceleration summaries in your indexes alongside index buckets.

- Splunk software deletes ad hoc data model acceleration summaries when you leave the Pivot Editor or change the dataset you are working on while you are in the Pivot Editor. When you return to the Pivot Editor for the same dataset, the search head must rebuild the ad hoc summary. You cannot preserve ad hoc data model acceleration summaries for later use.

- Pivot job IDs are retained in the pivot URL, so if you quickly use the back button after leaving Pivot (or return to the pivot job with a permalink) you may be able to use the ad hoc summary for that job without waiting for a rebuild. The search head deletes ad hoc data model acceleration summaries from the dispatch directory a few minutes after you leave Pivot or switch to a different model within Pivot.

- Ad hoc acceleration does not apply to reports or dashboard panels that are based on pivots. If you want pivot-based reports and dashboard panels to benefit from data model acceleration, base them on datasets from persistently accelerated event dataset hierarchies.

- Ad hoc data model acceleration can potentially create more load on your search head than persistent data model acceleration creates on your indexers. This is because the search head creates a separate ad hoc data model acceleration summary for each user that accesses a specific data model dataset in Pivot that is not persistently accelerated. On the other hand, summaries for persistently accelerated data model datasets are shared by each user of the associated data model. This data model acceleration summary reuse results in less work for your indexers.


This documentation applies to the following versions of Splunk Cloud Platform™: 9.3.2411 (latest FedRAMP release), 9.0.2208, 9.0.2209, 8.2.2112, 8.2.2201, 8.2.2202, 8.2.2203, 9.0.2205, 9.0.2303, 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408