timechart

Description

Creates a time series chart with corresponding table of statistics.

A timechart is a statistical aggregation applied to a field to produce a chart, with time used as the X-axis. You can specify a split-by field, where each distinct value of the split-by field becomes a series in the chart. If you use an eval expression, the split-by clause is required. With the limit and agg options, you can specify series filtering. These options are ignored if you specify an explicit where-clause. If you set limit=0, no series filtering occurs.

Syntax

timechart [sep=<string>] [format=<string>] [partial=<bool>] [cont=<bool>] [limit=<int>] [agg=<stats-agg-term>] [<bin-options>... ] ( (<single-agg> [BY <split-by-clause>] ) | (<eval-expression>) BY <split-by-clause> )

Required arguments

When specifying timechart command arguments, either <single-agg> or <eval-expression> BY <split-by-clause> is required.

- eval-expression

- Syntax: <math-exp> | <concat-exp> | <compare-exp> | <bool-exp> | <function-call>

- Description: A combination of literals, fields, operators, and functions that represent the value of your destination field. For these evaluations to work, your values need to be valid for the type of operation. For example, with the exception of addition, arithmetic operations might not produce valid results if the values are not numerical. Additionally, the search can concatenate the two operands if they are both strings. When concatenating values with a period '.' the search treats both values as strings, regardless of their actual data type.

- single-agg

- Syntax: count | <stats-func>(<field>)

- Description: A single aggregation applied to a single field, including an evaluated field. For <stats-func>, see Stats function options. No wildcards are allowed. The field must be specified, except when using the count function, which applies to events as a whole.

- split-by-clause

- Syntax: <field> (<tc-options>)... [<where-clause>]

- Description: Specifies a field to split the results by. If the field is numerical, default discretization is applied. Discretization is defined with the tc-options. Use the <where-clause> to specify the number of columns to include. See the tc options and the where clause sections in this topic.

Optional arguments

- agg=<stats-agg-term>

- Syntax: agg=( <stats-func> ( <evaled-field> | <wc-field> ) [AS <wc-field>] )

- Description: A statistical aggregation function. See Stats function options. The function can be applied to an eval expression, or to a field or set of fields. Use the AS clause to place the result into a new field with a name that you specify. You can use wildcard characters in field names.

- bin-options

- Syntax: bins | minspan | span | <start-end>

- Description: Options that you can use to specify discrete bins, or groups, to organize the information. The bin-options set the maximum number of bins, not the target number of bins. See the Bin options section in this topic.

- Default: bins=100

- cont

- Syntax: cont=<bool>

- Description: Specifies whether the search results table should display a set of continuous time values, even if those values are zero (0). The default, cont=true, fills any gaps in the time values in the results table. As a result, your chart visualization will show these 0 values. Set cont=false to remove the time gaps and zero values from your results table and chart.

- Default: true

- fixedrange

- Syntax: fixedrange=<bool>

- Description: Specify whether or not to enforce the earliest and latest times of the search. Setting fixedrange=false allows the timechart command to constrict to just the time range with valid data.

- Default: true

- format

- Syntax: format=<string>

- Description: Used to construct output field names when multiple data series are used in conjunction with a split-by field. format takes precedence over sep and allows you to specify a parameterized expression with the stats aggregator and function ($AGG$) and the value of the split-by field ($VAL$).

- limit

- Syntax: limit=<int>

- Description: Specifies a limit for the number of distinct values of the split-by field to return. If set to limit=0, all distinct values are used. Setting limit=N keeps the N highest scoring distinct values of the split-by field. All other values are grouped into 'OTHER', as long as useother is not set to false. The scoring is determined as follows:

- If a single aggregation is specified, the score is based on the sum of the values in the aggregation for that split-by value. For example, for timechart avg(foo) BY <field>, the avg(foo) values are added up for each value of <field> to determine the scores.

- If multiple aggregations are specified, the score is based on the frequency of each value of <field>. For example, for timechart avg(foo) max(bar) BY <field>, the top scoring values for <field> are the most common values of <field>.

- Ties in scoring are broken lexicographically, based on the value of the split-by field. For example, 'BAR' takes precedence over 'bar', which takes precedence over 'foo'. See Usage.

- partial

- Syntax: partial=<bool>

- Description: Controls if partial time bins should be retained or not. Only the first and last bin can be partial.

- Default: True. Partial time bins are retained.

- sep

- Syntax: sep=<string>

- Description: Used to construct output field names when multiple data series are used in conjunction with a split-by field. This is equivalent to setting format to $AGG$<sep>$VAL$.
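The relationship between sep and format can be pictured as simple template substitution. The following Python sketch is only an illustration of the documented rule that format takes precedence over sep; the helper name `series_field_name`, the sample values, and the fallback separator are assumptions made for this example and do not describe Splunk internals.

```python
def series_field_name(agg, value, sep=None, fmt=None):
    """Build an output field name for one data series.

    Hypothetical helper: `fmt` wins over `sep`, and `sep` behaves
    like the format string "$AGG$<sep>$VAL$".
    """
    if fmt is None:
        # The fallback separator is an assumption for this sketch only.
        fmt = "$AGG$" + (sep if sep is not None else ": ") + "$VAL$"
    return fmt.replace("$AGG$", agg).replace("$VAL$", value)


# sep alone: aggregator, separator, split-by value.
print(series_field_name("avg(CPU)", "web01", sep="-"))
# format overrides sep, so the separator is ignored here.
print(series_field_name("avg(CPU)", "web01", sep="-", fmt="$VAL$:::$AGG$"))
```

With these sample inputs, the first call yields `avg(CPU)-web01` and the second yields `web01:::avg(CPU)`.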

Stats function options

- stats-func

- Syntax: The syntax depends on the function that you use. Refer to the table below.

- Description: Statistical functions that you can use with the timechart command. Each time you invoke the timechart command, you can use one or more functions. However, you can only use one BY clause. See Usage.

- The following table lists the supported functions by type of function. Use the links in the table to see descriptions and examples for each function. For an overview about using functions with commands, see Statistical and charting functions.

| Type of function | Supported functions and syntax |
|---|---|
| Aggregate functions | avg(), count(), distinct_count(), estdc(), estdc_error(), exactperc<int>(), max(), median(), min(), mode(), perc<int>(), range(), stdev(), stdevp(), sum(), sumsq(), upperperc<int>(), var(), varp() |
| Event order functions | earliest(), first(), last(), latest() |
| Multivalue stats and chart functions | list(X), values(X) |
| Time functions | per_day(), per_hour(), per_minute(), per_second() |

Bin options

- bins

- Syntax: bins=<int>

- Description: Sets the maximum number of bins to discretize into. This does not set the target number of bins. It finds the smallest bin size that results in no more than N distinct bins. Even though you specify a number such as 300, the resulting number of bins might be much lower.

- Default: 100

- minspan

- Syntax: minspan=<span-length>

- Description: Specifies the smallest span granularity to use when automatically inferring the span from the data time range.

- span

- Syntax: span=<log-span> | span=<span-length> | span=<snap-to-time>

- Description: Sets the size of each bin, using either a log-based span, a span length based on time, or a span that snaps to a specific time. For descriptions of each of these options, see Span options.

- <start-end>

- Syntax: end=<num> | start=<num>

- Description: Sets the minimum and maximum extents for numerical bins. Data outside of the [start, end] range is discarded.

Span options

- <log-span>

- Syntax: [<num>]log[<num>]

- Description: Sets a log-based span. The first number is a coefficient. The second number is the base. If the first number is supplied, it must be a real number >= 1.0 and < base. The base, if supplied, must be a real number > 1.0 (strictly greater than 1).

- span-length

- Syntax: <int>[<timescale>]

- Description: A span of each bin, based on time. If the timescale is provided, this is used as a time range. If not, this is an absolute bin length.

- <timescale>

- Syntax: <sec> | <min> | <hr> | <day> | <week> | <month> | <subseconds>

- Description: Time scale units.

| Time scale | Syntax | Description |
|---|---|---|
| <sec> | s \| sec \| secs \| second \| seconds | Time scale in seconds. |
| <min> | m \| min \| mins \| minute \| minutes | Time scale in minutes. |
| <hr> | h \| hr \| hrs \| hour \| hours | Time scale in hours. |
| <day> | d \| day \| days | Time scale in days. |
| <month> | mon \| month \| months | Time scale in months. |
| <subseconds> | us \| ms \| cs \| ds | Time scale in microseconds (us), milliseconds (ms), centiseconds (cs), or deciseconds (ds). |

- <snap-to-time>

- Syntax: [+|-] [<time_integer>] <relative_time_unit>@<snap_to_time_unit>

- Description: A span of each bin, based on a relative time unit and a snap to time unit. The <snap-to-time> must include a relative_time_unit, the @ symbol, and a snap_to_time_unit. The offset, represented by the plus (+) or minus (-) is optional. If the <time_integer> is not specified, 1 is the default. For example if you specify w as the relative_time_unit, 1 week is assumed.

The <snap-to-time> option is used only with week time units. It cannot be used with other time units such as minutes or quarters.

tc options

The <tc-option> is part of the <split-by-clause>.

- tc-option

- Syntax: <bin-options> | usenull=<bool> | useother=<bool> | nullstr=<string> | otherstr=<string>

- Description: Options for controlling the behavior of splitting by a field.

- bin-options

- See the Bin options section in this topic.

- nullstr

- Syntax: nullstr=<string>

- Description: If usenull=true, specifies the label for the series that is created for events that do not contain the split-by field.

- Default: NULL

- otherstr

- Syntax: otherstr=<string>

- Description: If useother=true, specifies the label for the series that is created in the table and the graph.

- Default: OTHER

- usenull

- Syntax: usenull=<bool>

- Description: Controls whether or not a series is created for events that do not contain the split-by field. The label for the series is controlled by the nullstr option.

- Default: true

- useother

- Syntax: useother=<bool>

- Description: You specify which series to include in the results table by using the <agg>, <limit>, and <where-clause> options. The useother option specifies whether to merge all of the series not included in the results table into a single new series. If useother=true, the label for the series is controlled by the otherstr option.

- Default: true

where clause

The <where-clause> is part of the <split-by-clause>. See Where clause Examples.

- where clause

- Syntax: <single-agg> <where-comp>

- Description: Specifies the criteria for including particular data series when a field is given in the tc-by-clause. The most common use of this option is to select for spikes rather than overall mass of distribution in series selection. The default value finds the top ten series by area under the curve. Alternatively, you can replace sum with max to find the series with the ten highest spikes. This has no relation to the where command.

- <where-comp>

- Syntax: <wherein-comp> | <wherethresh-comp>

- Description: A criterion for the where clause.

- <wherein-comp>

- Syntax: (in | notin) (top | bottom)<int>

- Description: A where-clause criterion that requires the aggregated series value to be in or not in some top or bottom grouping.

- <wherethresh-comp>

- Syntax: (< | >)( )?<num>

- Description: A where-clause criterion that requires the aggregated series value to be greater than or less than some numeric threshold.

Usage

The timechart command is a transforming command. See Command types.

bins and span arguments

The timechart command accepts either the bins argument OR the span argument. If you do not specify either bins or span, the timechart command uses the default bins=100.

Default time spans

If you use the predefined time ranges in the time range picker, and do not specify the span argument, the following table shows the default span that is used.

| Time range | Default span |
|---|---|
| Last 15 minutes | 10 seconds |
| Last 60 minutes | 1 minute |
| Last 4 hours | 5 minutes |
| Last 24 hours | 30 minutes |
| Last 7 days | 1 day |
| Last 30 days | 1 day |
| Previous year | 1 month |

(Thanks to Splunk users MuS and Martin Mueller for their help in compiling this default time span information.)
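One way to read the table above is as the result of the bins rule: pick the smallest span that produces no more than bins=100 buckets for the search time range. The Python sketch below illustrates that reading only. The ladder of candidate spans is hypothetical (this topic does not list the spans that Splunk software actually considers), a month is approximated as 30 days, and bin alignment is ignored.

```python
import math

# Hypothetical ladder of candidate spans, in seconds. Chosen for this
# sketch; not taken from Splunk software. A month is treated as 30 days.
CANDIDATE_SPANS = [
    ("1s", 1), ("5s", 5), ("10s", 10), ("30s", 30),
    ("1m", 60), ("5m", 300), ("10m", 600), ("30m", 1800),
    ("1h", 3600), ("1d", 86400), ("1mon", 30 * 86400),
]


def pick_span(range_seconds, max_bins=100):
    """Return the smallest candidate span that yields <= max_bins bins."""
    for label, seconds in CANDIDATE_SPANS:
        if math.ceil(range_seconds / seconds) <= max_bins:
            return label
    # Fall back to the largest span if nothing fits.
    return CANDIDATE_SPANS[-1][0]


ranges = [
    ("Last 15 minutes", 15 * 60),
    ("Last 24 hours", 24 * 3600),
    ("Last 7 days", 7 * 86400),
]
for name, seconds in ranges:
    print(name, "->", pick_span(seconds))
```

With this assumed ladder, the sketch returns 10s, 30m, and 1d for the three ranges shown, which agrees with the corresponding rows of the table. A different ladder would give different answers, so treat the match as illustrative rather than as a description of the product's algorithm.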

Bin time spans versus per_* functions

The per_day(), per_hour(), per_minute(), and per_second() functions are aggregator functions and do not set the time span of the resulting chart. These functions are used to get a consistent scale for the data when an explicit span is not provided. The resulting span can depend on the search time range.

For example, per_hour() converts the field value so that it is a rate per hour, or sum()/<hours in the span>. If your chart span ends up being 30m, it is sum()*2.

If you want the span to be 1h, you still have to specify the argument span=1h in your search.

Note: You can do per_hour() on one field and per_minute() (or any combination of the functions) on a different field in the same search.
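The scaling described in this section is plain arithmetic. The following Python sketch restates it; the function names mirror the per_* functions for readability, but the code is an illustration with made-up numbers, not the implementation.

```python
def per_hour(bin_sum, span_seconds):
    """Scale the sum for one bin to an hourly rate: sum / <hours in the span>."""
    return bin_sum / (span_seconds / 3600)


def per_minute(bin_sum, span_seconds):
    """Scale the sum for one bin to a per-minute rate."""
    return bin_sum / (span_seconds / 60)


# A bin that covers 30 minutes and sums to 50 is reported as 50 * 2.
print(per_hour(50, 30 * 60))
# With span=1h the hourly rate is the same as the sum for the bin.
print(per_hour(50, 60 * 60))
# The same 30-minute bin expressed per minute.
print(per_minute(60, 30 * 60))
```

This is why a per_* function and an explicit span answer different questions: the function fixes the unit of the values, while the span fixes the width of each bin.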

Split-by fields

If you specify a split-by field, ensure that you specify the bins and span arguments before the split-by field. If you specify these arguments after the split-by field, Splunk software assumes that you want to control the bins on the split-by field, not on the time axis.

If you use chart or timechart, you cannot use a field that you specify in a function as your split-by field as well. For example, you will not be able to run:

... | chart sum(A) by A span=log2

However, you can work around this with an eval expression, for example:

... | eval A1=A | chart sum(A) by A1 span=log2

Functions and memory usage

Some functions are inherently more expensive, from a memory standpoint, than other functions. For example, the distinct_count function requires far more memory than the count function. The values and list functions also can consume a lot of memory.

If you are using the distinct_count function without a split-by field or with a low-cardinality split-by field, consider replacing the distinct_count function with the estdc function (estimated distinct count). The estdc function might result in significantly lower memory usage and run times.

Lexicographical order

Lexicographical order sorts items based on the values used to encode the items in computer memory. In Splunk software, this is almost always UTF-8 encoding, which is a superset of ASCII.

- Numbers are sorted before letters. Numbers are sorted based on the first digit. For example, the numbers 10, 9, 70, 100 are sorted lexicographically as 10, 100, 70, 9.

- Uppercase letters are sorted before lowercase letters.

- Symbols are not standard. Some symbols are sorted before numeric values. Other symbols are sorted before or after letters.
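The ordering rules above can be reproduced by comparing the UTF-8 bytes of each value. The Python sketch below does that, and also shows how such an ordering could break a tie between equally scored split-by values. The helper name and the scores are invented for the example.

```python
def lexicographic(values):
    """Sort strings by their UTF-8 byte encoding."""
    return sorted(values, key=lambda v: v.encode("utf-8"))


# Numbers are compared digit by digit, not by magnitude.
print(lexicographic(["10", "9", "70", "100"]))  # ['10', '100', '70', '9']

# Uppercase letters sort before lowercase letters.
print(lexicographic(["foo", "bar", "BAR"]))     # ['BAR', 'bar', 'foo']

# Hypothetical scores: highest score first, ties broken lexicographically.
scores = {"foo": 5, "bar": 5, "BAR": 5, "baz": 9}
ranked = sorted(scores, key=lambda name: (-scores[name], name.encode("utf-8")))
print(ranked)                                   # ['baz', 'BAR', 'bar', 'foo']
```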

Basic Examples

1. Chart the product of the average "CPU" and average "MEM" for each "host"

For each minute, compute the product of the average "CPU" and average "MEM" for each "host".

... | timechart span=1m eval(avg(CPU) * avg(MEM)) BY host

2. Chart the average of cpu_seconds by processor

Create a timechart of the average of cpu_seconds by processor, rounded to 2 decimal places.

... | timechart eval(round(avg(cpu_seconds),2)) BY processor

3. Chart the average of "CPU" for each "host"

For each minute, calculate the average value of "CPU" for each "host".

... | timechart span=1m avg(CPU) BY host

4. Chart the average "cpu_seconds" by "host" and remove outlier values

Calculate the average "cpu_seconds" by "host". Remove outlying values that might distort the timechart axis.

... | timechart avg(cpu_seconds) BY host | outlier action=tf

5. Chart the average "thruput" of hosts over time

... | timechart span=5m avg(thruput) BY host

6. Chart the eventtypes by "source_ip" where the count is greater than 10

For each minute, count the eventtypes by "source_ip", where the count is greater than 10.

sshd failed OR failure | timechart span=1m count(eventtype) BY source_ip usenull=f WHERE count>10

Extended Examples

1. Chart revenue for the different products that were purchased yesterday

This example uses the sample dataset from the Search Tutorial and a field lookup to add more information to the event data. To try this example for yourself:

The original data set includes a |

Chart revenue for the different products that were purchased yesterday.

sourcetype=access_* action=purchase | timechart per_hour(price) by productName usenull=f useother=f

This example searches for all purchase events (defined by the action=purchase) and pipes those results into the timechart command. The per_hour() function sums up the values of the price field for each item (productName) and scales that sum to a rate per hour for each time bin.

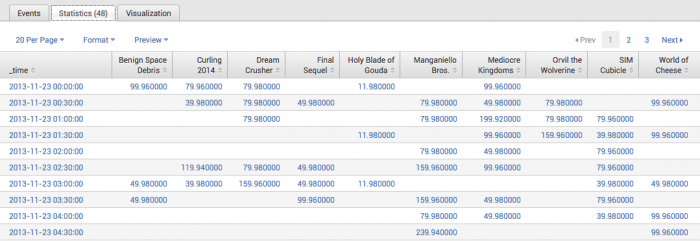

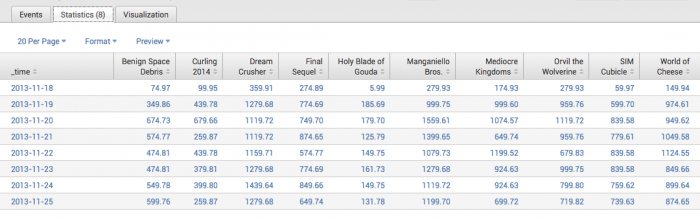

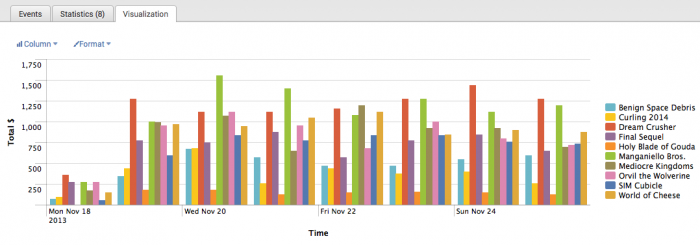

This produces the following table of results in the Statistics tab.

View and format the report in the Visualizations tab. Here, it's formatted as a stacked column chart over time.

After you create this chart, you can position your mouse pointer over each section to view more metrics for the product purchased at that hour of the day. Notice that the chart does not display the data in hourly spans. Because a span is not provided (such as span=1hr), the per_hour() function converts the value so that it is a sum per hours in the time range (which in this example is 24 hours).

2. Chart the number of purchases made daily for each type of product

This example uses the sample dataset from the Search Tutorial and a field lookup to add more information to the event data. Before you run this example, download the data set from this topic in the Search Tutorial and follow the instructions to upload it to your Splunk deployment.

Chart the number of purchases made daily for each type of product.

sourcetype=access_* action=purchase | timechart span=1d count by categoryId usenull=f

This example searches for all purchase events (defined by the action=purchase) and pipes those results into the timechart command. The span=1d argument buckets the count of purchases over the week into daily chunks. The usenull=f argument means "ignore any events that contain a NULL value for categoryId."

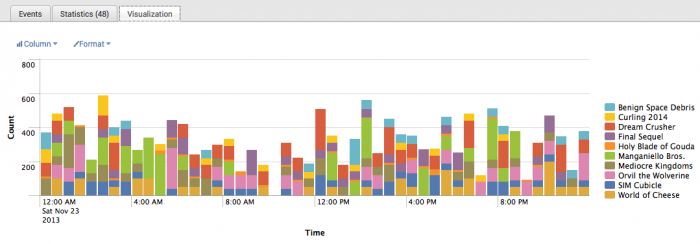

This produces the following table of results in the Statistics tab.

View and format the report in the Visualizations tab. Here, it's formatted as a column chart over time.

Compare the number of different items purchased each day and over the course of the week. It looks like day-to-day, the number of purchases for each item does not vary significantly.

3. Display results in 1 week intervals

This search uses recent earthquake data downloaded from the USGS Earthquakes website. The data is a comma separated ASCII text file that contains magnitude (mag), coordinates (latitude, longitude), region (place), etc., for each earthquake recorded.

You can download a current CSV file from the USGS Earthquake Feeds and upload the file to your Splunk instance. This example uses the All Earthquakes data from the past 30 days.

This search counts the number of earthquakes in Alaska where the magnitude is greater than or equal to 3.5. The results are organized in spans of 1 week, where the week begins on Monday.

source=all_month.csv place=*alaska* mag>=3.5 | timechart span=w@w1 count BY mag

- The <by-clause> is used to group the earthquakes by magnitude.

- You can only use week spans with the snap-to span argument in the timechart command. For more information, see Specify a snap to time unit.

The results appear on the Statistics tab and look something like this:

| _time | 3.5 | 3.6 | 3.7 | 3.8 | 4 | 4.1 | 4.1 | 4.3 | 4.4 | 4.5 | OTHER |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2018-03-26 | 3 | 3 | 2 | 2 | 3 | 1 | 0 | 2 | 1 | 1 | 1 |
| 2018-04-02 | 5 | 7 | 2 | 0 | 3 | 2 | 1 | 0 | 0 | 1 | 1 |
| 2018-04-09 | 2 | 3 | 1 | 2 | 0 | 2 | 1 | 1 | 0 | 1 | 2 |
| 2018-04-16 | 6 | 5 | 0 | 1 | 2 | 2 | 2 | 0 | 0 | 2 | 1 |
| 2018-04-23 | 2 | 0 | 0 | 0 | 0 | 2 | 1 | 2 | 2 | 0 | 1 |

4. Count the total revenue made for each item over time

This example uses the sample dataset from the Search Tutorial and a field lookup to add more information to the event data. Before you run this example:

The original data set includes a |

Count the total revenue made for each item sold at the shop over the course of the week. This example shows two ways to do this.

1. This first search uses the span argument to bucket the times of the search results into 1 day increments. It then uses the sum() function to add the price for each product_name.

sourcetype=access_* action=purchase | timechart span=1d sum(price) by product_name usenull=f

2. This second search uses the per_day() function to calculate the total of the price values for each day.

sourcetype=access_* action=purchase | timechart per_day(price) by product_name usenull=f

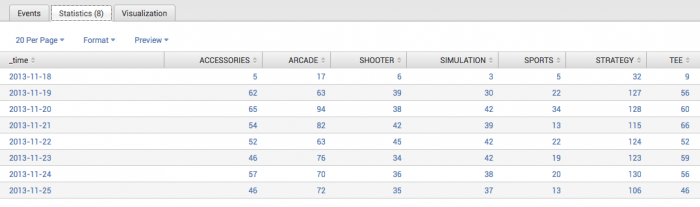

Both searches produce the following results table in the Statistics tab.

View and format the report in the Visualizations tab. Here, it's formatted as a column chart over time.

Now you can compare the total revenue made for items purchased each day and over the course of the week.

5. Chart product views and purchases for a single day

This example uses the sample dataset from the Search Tutorial. Download the data set from this topic in the Search Tutorial and follow the instructions to upload it to your Splunk deployment. Then, run this search using the Preset time range "Yesterday" or "Last 24 hours".

Chart a single day's views and purchases at the Buttercup Games online store.

sourcetype=access_* | timechart per_hour(eval(method="GET")) AS Views, per_hour(eval(action="purchase")) AS Purchases

This search uses the per_hour() function and eval expressions to search for page views (method=GET) and purchases (action=purchase). The results of the eval expressions are renamed as Views and Purchases, respectively.

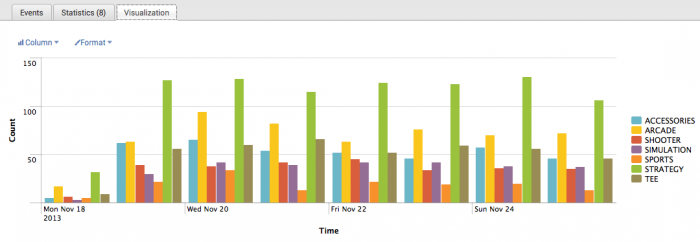

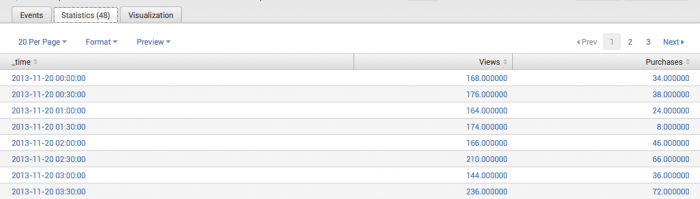

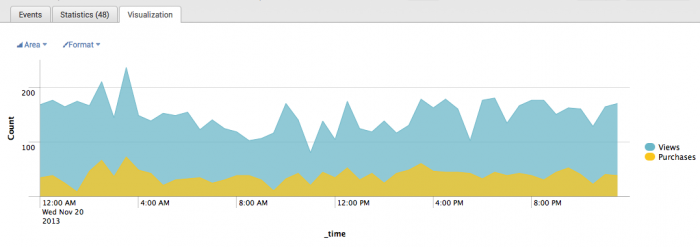

This produces the following results table in the Statistics tab.

View and format the report in the Visualizations tab. Here, it's formatted as an area chart.

The difference between the two areas indicates that not all views led to purchases. If every view led to a purchase, you would expect the areas to overlay each other completely so that there is no difference between the two areas.

Where clause Examples

These examples use the where clause to control the number of series values returned in the time-series chart.

Example 1: Show the 5 most rare series based on the minimum count values. All other series values will be labeled as "other".

index=_internal | timechart span=1h count by source WHERE min in bottom5

Example 2: Show the 5 most frequent series based on the maximum values. All other series values will be labeled as "other".

index=_internal | timechart span=1h count by source WHERE max in top5

These two searches return six data series: the five top or bottom series specified and the series labeled other. To hide the "other" series, specify the argument useother=f.

Example 3: Show the source series count of INFO events, but only where the total number of events is larger than 100. All other series values will be labeled as "other".

index=_internal | timechart span=1h sum(eval(if(log_level=="INFO",1,0))) by source WHERE sum > 100

Example 4: Using the where clause with the count function measures the total number of events over the period. This yields results similar to using the sum function.

The following two searches return the source series with a total count of events greater than 100. All other series values will be labeled as "other".

index=_internal | timechart span=1h count by source WHERE count > 100

index=_internal | timechart span=1h count by source WHERE sum > 100
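To make the selection logic in these examples concrete, the Python sketch below applies a where-style filter to a small, made-up set of hourly counts. The function names, the sample data, and the way the remaining series are folded into OTHER are assumptions for illustration; in particular, summing the leftover series is only meaningful here because the aggregation is a count.

```python
AGGS = {"sum": sum, "max": max, "min": min}


def where_in(series, agg, group, n):
    """Names kept by a clause such as `WHERE max in top5`."""
    ranked = sorted(series, key=lambda name: AGGS[agg](series[name]),
                    reverse=(group == "top"))
    return set(ranked[:n])


def where_thresh(series, agg, op, threshold):
    """Names kept by a clause such as `WHERE sum > 100`."""
    keep = set()
    for name, values in series.items():
        score = AGGS[agg](values)
        if (op == ">" and score > threshold) or (op == "<" and score < threshold):
            keep.add(name)
    return keep


def merge_other(series, keep, label="OTHER"):
    """Fold every series that was not kept into one series (counts only)."""
    result = {name: vals for name, vals in series.items() if name in keep}
    rest = [vals for name, vals in series.items() if name not in keep]
    if rest:
        result[label] = [sum(column) for column in zip(*rest)]
    return result


# Hypothetical hourly counts per source.
counts = {"a.log": [80, 80, 80], "b.log": [1, 200, 2], "c.log": [5, 5, 5]}

print(where_in(counts, "sum", "top", 1))  # largest overall mass: a.log
print(where_in(counts, "max", "top", 1))  # highest spike: b.log
print(merge_other(counts, where_thresh(counts, "sum", ">", 100)))
```

In this sample data, selecting by sum favors the steady series while selecting by max favors the series with a single spike, which is the distinction the where clause description draws.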

See also

Answers

Have questions? Visit Splunk Answers and see what questions and answers the Splunk community has using the timechart command.


This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13
