Ingest Processor is currently released as a preview only and is not officially supported. See Splunk General Terms for more information. For any questions on this preview, please reach out to ingestprocessor@splunk.com. Complete the preview application on the Voice of the Customer portal to get access to a demo for a tenant.

How the Ingest Processor solution works

Ingest Processor combines Splunk-managed cloud services and the Search Processing Language, version 2 (SPL2) to support processing of data that has been ingested into your Splunk Platform deployment. The Ingest Processor solution consists of the following main components:

| Component | Description | Usage |
|---|---|---|
| Ingest Processor service | A cloud service that provides a centralized console for managing Ingest Processor pipelines. | Splunk hosts the Ingest Processor service as part of Splunk Cloud Platform. The Ingest Processor service provides a cloud control plane that lets you deploy configurations, monitor the status of your Ingest Processor pipelines, and gain visibility into the amount of data that is moving through your network. |
| Pipeline | A set of data processing instructions written in SPL2, which is the data search and preparation language used by the Splunk software. | In the Ingest Processor service, you create pipelines to specify what data to process, how to process it, and what destination to send the processed data to. Then, you apply pipelines to configure them to start processing data according to those instructions. |

By using the Ingest Processor solution, you can process, manage and monitor your data ingest ecosystem from a Splunk-managed cloud service.

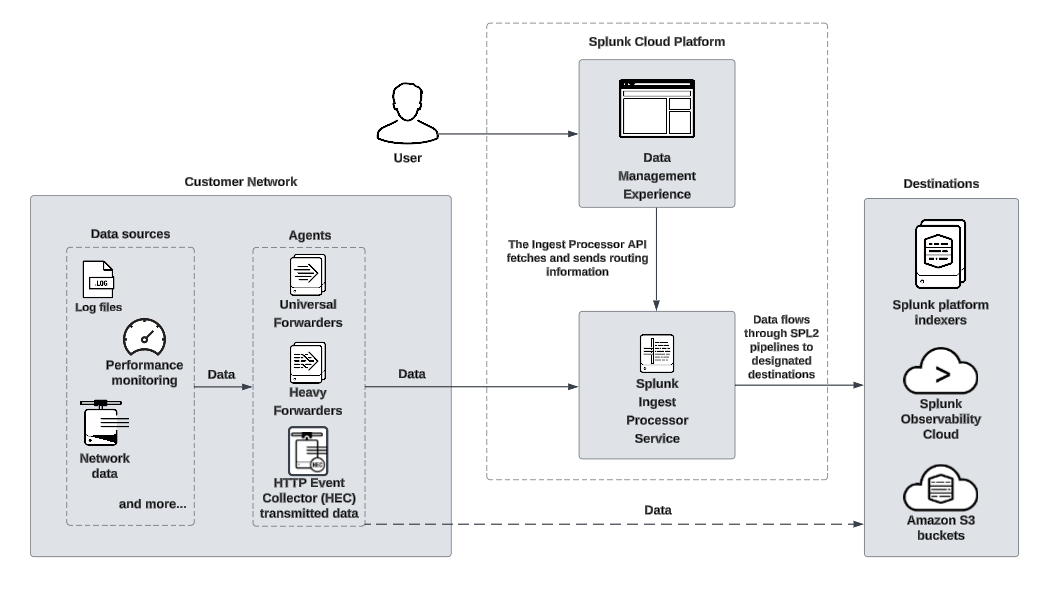

The following diagram provides an overview of these elements:

- The components that comprise the Ingest Processor service, and whether each component is hosted in the Splunk Cloud Platform environment or your local environment. See the System architecture section on this page for more information.

System architecture

The primary components of the Ingest Processor solution are the Ingest Processor service and the SPL2 pipelines that support data processing.

Ingest Processor service

The Ingest Processor service is a cloud service hosted by Splunk. It is part of the data management experience, which is a set of services that fulfill a variety of data ingest and processing use cases.

You can use the Ingest Processor service to do the following:

- Create and apply SPL2 pipelines that determine how each Ingest Processor processes and routes the data that it receives.

- Define source types to identify the kind of data that you want to process and determine how the Ingest Processor breaks and merges that data into distinct events.

- Create connections to the destinations that you want your Ingest Processor to send processed data to.

Pipelines

A pipeline is a set of data processing instructions written in SPL2. When you create a pipeline, you write a specialized SPL2 statement that specifies which data to process, how to process it, and where to send the results. When you apply a pipeline, the Ingest Processor uses those instructions to process all the data that it receives from data sources such as Splunk forwarders, HTTP clients, and logging agents.

Each pipeline selects and works with a subset of all the data that the Ingest Processor receives. For example, you can create a pipeline that selects events with the source type cisco_syslog from the incoming data, and then sends them to a specified index in Splunk Cloud Platform. This subset of selected data is called a partition. For more information, see Partitions.
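As a rough illustration of the partition concept, the following Python sketch models how a pipeline might select its subset of the incoming events. It is not SPL2 and not Ingest Processor code. The `Event` class, the `select_partition` function, and the sample events are hypothetical names and data introduced only for this example.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical stand-in for one event received by the Ingest Processor."""
    sourcetype: str
    raw: str


def select_partition(events, sourcetype):
    """Return only the events whose source type matches the partition condition."""
    return [e for e in events if e.sourcetype == sourcetype]


# Made-up sample data: two events match the partition, one does not.
incoming = [
    Event("cisco_syslog", "%ASA-6-302013: Built outbound TCP connection"),
    Event("linux_audit", "type=SYSCALL msg=audit(1700000000.000:1)"),
    Event("cisco_syslog", "%ASA-6-302014: Teardown TCP connection"),
]

# The partition is the subset that the pipeline goes on to process.
partition = select_partition(incoming, "cisco_syslog")
```

In this sketch, `partition` holds the two `cisco_syslog` events, and the `linux_audit` event stays outside the pipeline.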

The Ingest Processor solution supports only the commands and functions that are part of the IngestProcessor profile. For information about the specific SPL2 commands and functions that you can use to write pipelines for Ingest Processor, see Ingest Processor pipeline syntax. For a summary of how the IngestProcessor profile supports different commands and functions compared to other SPL2 profiles, see the following pages in the SPL2 Search Reference:

- Compatibility Quick Reference for SPL2 commands

- Compatibility Quick Reference for SPL2 evaluation functions

Data pathway

Data moves through Ingest Processor as follows:

- A tool, machine, or piece of software in your network generates data such as event logs or traces.

- An agent, such as a Splunk forwarder, receives the data and then sends it to the Ingest Processor. Alternatively, the device or software that generated the data can send it to the Ingest Processor without using an agent.

- Ingest Processor filters and transforms data in pipelines based on a partition, and then sends the resulting processed data to a specified destination such as a Splunk index.

Ingest Processor routes processed data to destinations based on the pipelines that you configure with a partition and an SPL2 statement and then apply. If there are no applicable pipelines, then unprocessed data is either dropped or routed to the default destination specified in the configuration settings for Ingest Processor. For more information on how data moves through an Ingest Processor, see Partitions.

If you don't specify a default destination, the Ingest Processor drops unprocessed data.

As the Ingest Processor receives and processes data, it measures metrics indicating the volume of data that was received, processed, and sent to a destination. These metrics are stored in the _metrics index of the Splunk Cloud Platform deployment that is connected to your tenant. The Ingest Processor service surfaces the metrics in the dashboard, providing detailed overviews of the amount of data that is moving through the system.
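To make the three volume measurements concrete, the following Python sketch keeps a running tally of received, processed, and sent data. It is a conceptual model only. The `VolumeMetrics` class, its field names, and the choice to measure volume in UTF-8 bytes of the original event are assumptions made for this example, and they do not describe how the service actually computes or stores its metrics.

```python
from dataclasses import dataclass


@dataclass
class VolumeMetrics:
    """Hypothetical running totals, in bytes, for one Ingest Processor."""
    received_bytes: int = 0
    processed_bytes: int = 0
    sent_bytes: int = 0

    def record(self, raw: str, processed: bool, sent: bool) -> None:
        """Add one event to the totals that apply to it."""
        size = len(raw.encode("utf-8"))  # assumption: volume = size of the raw event
        self.received_bytes += size      # every event counts as received
        if processed:
            self.processed_bytes += size
        if sent:
            self.sent_bytes += size


metrics = VolumeMetrics()
metrics.record("abcd", processed=True, sent=True)    # selected by a pipeline and sent
metrics.record("xyz", processed=False, sent=False)   # unprocessed and dropped
```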

Partitions

Ingest Processor merges received data into an internal dataset before processing and routing that data. A partition is a subset of data that you select for processing in your pipeline. Each pipeline that you apply to the Ingest Processor creates a partition. For information about how to specify a partition when creating a pipeline, see Create pipelines for Ingest Processor.

The partitions that you create and the configuration of your Ingest Processor determine how the Ingest Processor routes the received data and whether any data is dropped:

- The data that Ingest Processor receives is defined as processed or unprocessed based on whether there is at least one partition for that data. For example, suppose that your Ingest Processor receives Windows event logs and Linux audit logs, but you applied only one pipeline, with a partition for Windows event logs. The Windows event logs go into your pipeline and are considered to be processed. The Linux audit logs are considered to be unprocessed because they are not included in the partition for that pipeline.

- Each pipeline creates a partition of the incoming data based on specified conditions, and only processes data that meets those conditions. Any data that does not meet those conditions is considered to be unprocessed.

- If you configure your pipeline to filter the processed data in the SPL2 editor, the data that is filtered out gets dropped.

- If you configure your Ingest Processor to have a default destination, any data that does not meet your pipeline's partition conditions is classified as unprocessed data and goes to that default destination.

- If you do not set a default destination, then any unprocessed data is dropped.
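The rules in this list can be summarized in a short Python sketch. It is a conceptual model, not Ingest Processor code. The `Event` and `Pipeline` classes, the `route` function, the outcome labels, and the index names are all hypothetical. The sketch also assumes that an event matched by several partitions is handled by each matching pipeline, which the rules above imply but do not state outright.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Event:
    sourcetype: str
    raw: str


@dataclass
class Pipeline:
    partition: Callable[[Event], bool]  # which events this pipeline selects
    keep: Callable[[Event], bool]       # filter applied inside the pipeline
    destination: str


def route(event: Event, pipelines: list, default_destination: Optional[str] = None):
    """Return a list of (outcome, destination) pairs for one event."""
    matching = [p for p in pipelines if p.partition(event)]
    if not matching:
        # No partition covers this event, so it is unprocessed.
        if default_destination is None:
            return [("dropped_unprocessed", None)]
        return [("unprocessed", default_destination)]
    outcomes = []
    for p in matching:
        if p.keep(event):
            outcomes.append(("processed", p.destination))
        else:
            # Selected by the partition, then filtered out by the pipeline.
            outcomes.append(("filtered_out", None))
    return outcomes


# Made-up pipeline: selects Windows event logs and keeps only logon events.
windows = Pipeline(
    partition=lambda e: e.sourcetype == "WinEventLog",
    keep=lambda e: "EventCode=4624" in e.raw,
    destination="windows_index",
)

win_logon = Event("WinEventLog", "EventCode=4624 An account was successfully logged on")
win_other = Event("WinEventLog", "EventCode=4688 A new process has been created")
linux = Event("linux_audit", "type=SYSCALL msg=audit(1700000000.000:1)")

results = {
    "win_logon": route(win_logon, [windows]),
    "win_other": route(win_other, [windows]),
    "linux_no_default": route(linux, [windows]),
    "linux_with_default": route(linux, [windows], "default_index"),
}
```

Here the logon event reaches `windows_index`, the other Windows event is filtered out and dropped, and the Linux audit event is either dropped or sent to `default_index`, depending on whether a default destination is set.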

See also

For information about how to set up and use specific components of the Ingest Processor solution, see the following resources.

This documentation applies to the following versions of Splunk Cloud Platform™: 9.1.2308 (latest FedRAMP release), 9.1.2312
