All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

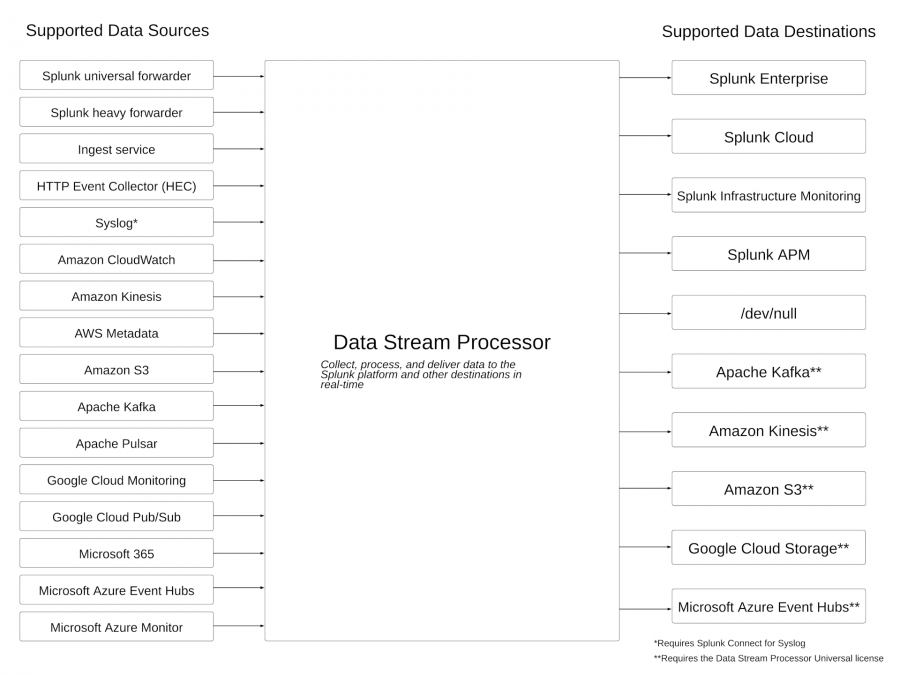

Data sources and destinations

The Splunk Data Stream Processor (DSP) can collect data from and send data to a variety of locations, including databases, monitoring services, and pub/sub messaging systems. The location that DSP collects data from is called a "data source", while the location that DSP sends data to is called a "data destination".

Each type of data source or destination is supported by a specific DSP function. For example, you must use the Splunk forwarders source function to receive data from a Splunk forwarder, and use the Amazon Kinesis Data Streams sink function to write data to a Kinesis stream. Functions that provide read access to data sources are called source functions, while functions that provide write access to data destinations are called sink functions.

Some sink functions are only available if you're using a Universal license for DSP. Specifically, the following sink functions require the Universal license:

- Send to Apache Kafka

- Send to Amazon Kinesis Data Streams

- Send to Amazon S3

- Send to Google Cloud Storage

- Send to Microsoft Azure Event Hubs

See Licensing for the Splunk Data Stream Processor in the Install and administer the Data Stream Processor manual for more information.
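As a rough illustration of the licensing rule above, the following Python sketch flags which planned sink functions would be unavailable without a Universal license. It is not part of DSP or any Splunk API: the helper names `requires_universal_license` and `unavailable_sinks` are hypothetical, and the set of function names is copied from the list above.

```python
# Hypothetical helper, not part of DSP. The names below are copied from
# the list of sink functions that require the Universal license.
UNIVERSAL_ONLY_SINKS = frozenset({
    "Send to Apache Kafka",
    "Send to Amazon Kinesis Data Streams",
    "Send to Amazon S3",
    "Send to Google Cloud Storage",
    "Send to Microsoft Azure Event Hubs",
})


def requires_universal_license(sink_function: str) -> bool:
    """Return True if the named sink function is in the Universal-only list."""
    return sink_function.strip() in UNIVERSAL_ONLY_SINKS


def unavailable_sinks(planned_sinks, has_universal_license: bool):
    """Return the planned sink functions that the current license does not cover."""
    if has_universal_license:
        return []
    return [name for name in planned_sinks if requires_universal_license(name)]


if __name__ == "__main__":
    # "Some other sink" is a placeholder name, not a real DSP function.
    planned = ["Send to Amazon S3", "Some other sink"]
    print(unavailable_sinks(planned, has_universal_license=False))
```

A check like this could be run against a list of planned destinations before building pipelines, assuming you track sink functions by their display names.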

The following diagram summarizes the data sources and destinations that DSP supports, and identifies the data destinations that require a Universal license:

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.2.1, 1.2.2-patch02, 1.2.4, 1.2.5, 1.3.0, 1.3.1
