All DSP releases prior to DSP 1.4.0 use Gravity, a Kubernetes orchestrator, which has been announced end-of-life. We have replaced Gravity with an alternative component in DSP 1.4.0. Therefore, we will no longer provide support for versions of DSP prior to DSP 1.4.0 after July 1, 2023. We advise all of our customers to upgrade to DSP 1.4.0 in order to continue to receive full product support from Splunk.

Send data from a pipeline to multiple destinations

When creating a data pipeline in the Splunk Data Stream Processor (DSP), you can connect the pipeline to multiple data destinations. For example, you can create a single pipeline that sends data to a Splunk index, an Amazon S3 bucket, and Microsoft Azure Event Hubs concurrently. You can send the same set of data to every destination, or filter and route the data so that each destination receives only the data that meets certain filter criteria. See Filtering and routing data in the Splunk Data Stream Processor for more information about the filter-and-route use case.
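As a rough sketch of the filter-and-route case, the following SPL2 reuses the source and sink functions from the example later in this topic and adds a where filter to each branch. The source_type values and the <connection-id> placeholder are assumptions made for illustration, not values from a real deployment. Substitute the filter criteria and connection ID that apply to your environment.

```
$statement_2 = | from forwarders("forwarders:all") | apply_line_breaking linebreak_type="auto" | apply_timestamp_extraction fallback_to_auto=false extraction_type="auto";

| from $statement_2 | where source_type="syslog" | into index("", "main");

| from $statement_2 | where source_type!="syslog" | into into_splunk_enterprise_indexes("<connection-id>", "", "main");
```

In this sketch, the first branch keeps only the records whose source_type is syslog, and the second branch keeps the records with any other source_type value. To send identical copies of the data to both destinations instead, omit the where functions.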

To send data from your pipeline to multiple destinations, branch the pipeline and end each branch with a different sink function as needed. The following steps assume that you've already started building the pipeline and now want to specify the data destinations, and that you are using the Canvas View. See Branch a pipeline using SPL2 for information about using the SPL2 View and the SPL2 Pipeline Builder to branch a pipeline.

1. From the Canvas View of your pipeline, click the + icon beside the last function in your pipeline and add the sink function for one of your desired data destinations.

2. To branch the pipeline and send the data to an additional data destination, click the branch icon beside the function that you want to branch from, and then add another sink function.

3. (Optional) Continue adding transformation functions to the pipeline at the location where you need them.

4. (Optional) Repeat steps 2 and 3 as needed to finish building your pipeline and to create additional pipeline branches that point to other data destinations.

Create a pipeline that sends data to multiple destinations

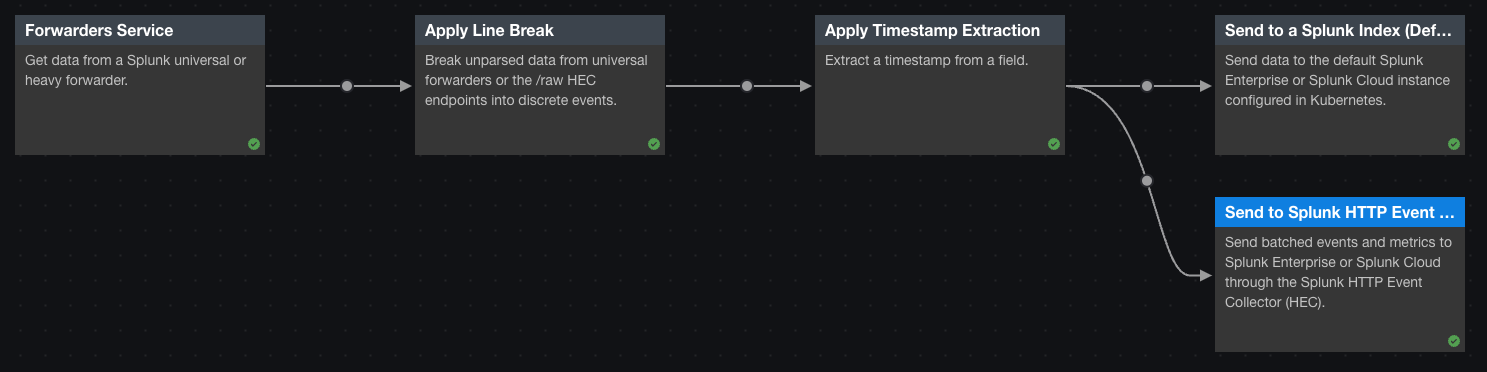

In this example, we create a pipeline that does the following:

- Ingests data from a Splunk universal forwarder.

- Processes the data to ensure that events are correctly grouped into records.

- Sends identical copies of this data to two different Splunk instances.

Prerequisites

- A Splunk universal forwarder that is configured to send data to the Splunk Data Stream Processor. See Create a connection to a Splunk forwarder in the Connect to Data Sources and Destinations with the Splunk Data Stream Processor manual.

- A Splunk Data Stream Processor administrator account, or a user account with the permissions required for using the Forwarders service. See Allow users to use the Forwarders service in the Connect to Data Sources and Destinations with the Splunk Data Stream Processor manual.

- A Splunk instance configured to be the default Splunk environment for the Splunk Data Stream Processor. See Set a default Splunk Enterprise instance for the Send to a Splunk Index (Default for Environment) function in the Install and administer the Splunk Data Stream Processor manual.

- A connection to your non-default Splunk instance. See Create a connection to a Splunk index in the Connect to Data Sources and Destinations with the Splunk Data Stream Processor manual.

Steps

1. From the Templates page, select the Splunk universal forwarder template.

   This template creates a pipeline that reads data from Splunk forwarders, completes the processing required for data that comes from universal forwarders, and then sends the data to the main index of the default Splunk instance associated with the Splunk Data Stream Processor.

2. Branch the pipeline so that it also sends data to the main index of a different Splunk instance:

   1. Click the branch icon beside the Apply Timestamp Extraction function and select the Send to Splunk HTTP Event Collector sink function.

   2. On the View Configurations tab, set connection_id to the connection for your non-default Splunk instance.

   3. In the index field, enter "" (two quotation marks).

   4. In the default_index field, enter "main" (including the quotation marks).

   5. Click the

3. Validate your pipeline to confirm that all of the functions are configured correctly. Click the More Options button located beside the Activate Pipeline button, and then select Validate.

4. Click Save, enter a name for your pipeline, and then click Save again.

5. (Optional) Click Activate to activate your pipeline. If it's the first time activating your pipeline, do not enable any of the optional Activate settings.

You now have a pipeline that receives data from a universal forwarder, processes the data to ensure that events are grouped into records correctly, and then sends all the records to two different Splunk instances.

The following is the complete SPL2 statement for this pipeline:

$statement_2 = | from forwarders("forwarders:all") | apply_line_breaking linebreak_type="auto" | apply_timestamp_extraction fallback_to_auto=false extraction_type="auto";

| from $statement_2 | into index("", "main");

| from $statement_2 | into into_splunk_enterprise_indexes("2f1ce641-baeb-4695-82cc-8f16ae64eb71", "", "main");

See also

- Create a pipeline with multiple data sources

- Back up, restore, and share pipelines

This documentation applies to the following versions of Splunk® Data Stream Processor: 1.3.0, 1.3.1, 1.4.0, 1.4.1, 1.4.2, 1.4.3, 1.4.4, 1.4.5, 1.4.6
