Experiments overview

Introduced in version 3.2 of the Splunk Machine Learning Toolkit (MLTK), the Experiment Management Framework (EMF) brings all aspects of a monitored machine learning pipeline into one interface with automated model versioning and lineage baked in.

Experiment workflow

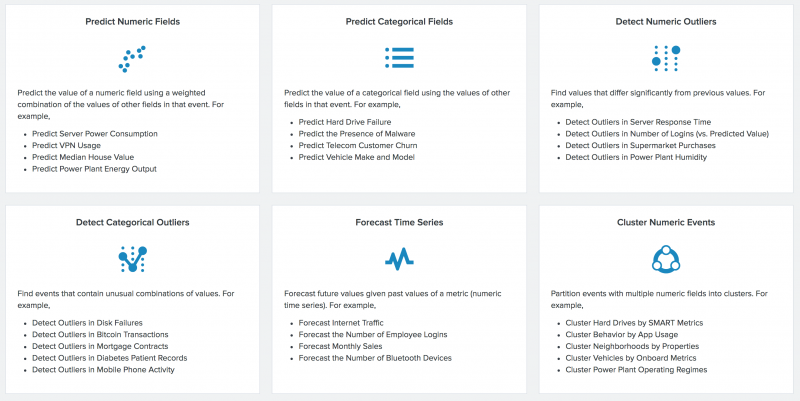

The Experiment workflow begins with the creation of a new machine learning pipeline, based on the selected MLTK guided modeling interface, or Assistant. There are six Experiment Assistants to select from: Predict Numeric Fields, Predict Categorical Fields, Detect Numeric Outliers, Detect Categorical Outliers, Forecast Time Series, and Cluster Numeric Events.

Once you select and apply Experiment parameters to your data and generate results, the workflow continues through the available visualizations and statistical analysis. In this way, Experiments are similar to the Classic Assistant workflow. Through the guided Experiment Assistant you make selections including:

- Specifying your data sources.

- Selecting an algorithm and algorithm parameters.

- Selecting the fields for the algorithm to analyze.

- Setting training/test data splits.
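
To make the training/test split concrete, the following is a minimal Python sketch of the idea, not the MLTK implementation. The function name `split_train_test`, the `train_fraction` parameter, and the sample events are hypothetical and chosen only for illustration.

```python
import random


def split_train_test(events, train_fraction=0.7, seed=42):
    """Shuffle events, then split them into training and test partitions."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(events)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical events: each one has a feature (cpu) and a target (power).
events = [{"cpu": i, "power": 2 * i + 1} for i in range(10)]
train, test = split_train_test(events, train_fraction=0.7)
print(len(train), len(test))
```

The model is trained only on the first partition. The second partition is held back so that validation measures how the model performs on data it has not seen.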

Every step of an Experiment includes tooltips for additional guidance, an option to view the SPL as written by the Experiment with explanations of the commands, and an option to open a clone of the SPL in a new search window for further customization.

Saved Experiments

Once you save an Experiment, a new exclusive knowledge object is created in the Splunk platform that keeps track of all the settings for that pipeline, as well as its affiliated alerts and scheduled trainings.

Save your work prior to scheduling a training job for the Experiment, managing alerts for an Experiment, or deploying an Experiment.

This saved knowledge object enables you to:

- Organize your Experiment around solving a business problem with machine learning.

- Keep all of your modeling history and experimentation in one place.

You can make your persisted models available to other SPL workflows in the Splunk platform through the publish functionality, and you can create alerts for any Experiment saved within the framework.

Experiment composition

Each Experiment contains the following sections. These vary slightly depending on the type of machine learning analytic being performed.

- Create or Detect: Follow the workflow laid out in the Experiment to create a new model or forecast, or detect outliers. The workflow depends on the type of analytic but usually includes performing a lookup on a dataset, selecting a field to predict or analyze, and selecting fields or values to use for performing different types of analysis.

- Raw Data Preview: This section is displayed for predictions and forecasts to show you the data that is being used.

- Validate: Use the tables and visualizations to determine how well the model was fitted, how well outliers were detected, or how well a forecast performed.

- Deploy: Click the buttons beneath the visualizations and tables to see different ways to use the analysis. For example, you can open the search in the Search app, show the SPL, or create an alert. Experiments that persist a model include the option to publish from within the EMF.

- Experiment History tab: Each time you use an Experiment, a history of the settings used is captured. Compare the effects of different searches, algorithms, and parameters to identify the best choices for your use case. As a model learns over time, the Experiment monitors the model to assess whether its accuracy increases or decreases. The average amount of error within the mathematical model is also captured in this history tab.

Experiment commands

Experiment Assistants use machine learning SPL commands. Command use varies depending on which Experiment Assistant is selected:

- Predict Numeric Fields and Predict Categorical Fields use the fit and apply commands

- Forecast Time Series can use the predict command

- Cluster Numeric Events uses the fit and apply commands
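
The division of labor between fit and apply can be sketched in plain Python: one step learns parameters from training data and stores them under a name, and a second step loads that stored model and adds predictions to new data. This is a conceptual analogy only, not MLTK code. The `MODEL_STORE` dictionary, the function signatures, the `predicted_<target>` output field, and the single-feature least-squares model are all assumptions made for illustration.

```python
from statistics import mean

# Hypothetical in-memory store standing in for saved model artifacts.
MODEL_STORE = {}


def fit(rows, target, feature, into):
    """Learn slope and intercept by ordinary least squares; store them by name."""
    xs = [row[feature] for row in rows]
    ys = [row[target] for row in rows]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature has no variance; cannot fit a line")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    MODEL_STORE[into] = {
        "target": target,
        "feature": feature,
        "slope": slope,
        "intercept": y_bar - slope * x_bar,
    }
    return MODEL_STORE[into]


def apply(rows, model_name):
    """Add a predicted_<target> field to each row using the stored model."""
    model = MODEL_STORE[model_name]
    out_field = "predicted_" + model["target"]  # hypothetical field name
    return [
        {**row, out_field: model["intercept"] + model["slope"] * row[model["feature"]]}
        for row in rows
    ]


# Train once on historical rows, then reuse the stored model on new rows.
training_rows = [{"cpu": x, "power": 2 * x + 1} for x in range(5)]
fit(training_rows, target="power", feature="cpu", into="example_model")
scored = apply([{"cpu": 10}], "example_model")
print(scored)
```

Separating the two steps means the model can be trained once, on a schedule or on demand, and then reused by any number of later searches without retraining.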

Choose the Experiment Assistant to suit your needs

Experiments cover six areas: Predict Numeric Fields, Predict Categorical Fields, Detect Numeric Outliers, Detect Categorical Outliers, Forecast Time Series, and Cluster Numeric Events.

- The Predict Numeric Fields Experiment Assistant uses regression algorithms to predict or estimate numeric values. Such models are useful for determining to what extent certain peripheral factors contribute to a particular metric result. After the regression model is computed, use these peripheral values to make a prediction on the metric result.

- The Predict Categorical Fields Experiment Assistant uses a type of learning known as classification. A classification algorithm learns the tendency for data to belong to one category or another based on related data.

- The Detect Numeric Outliers Experiment Assistant determines values that appear to be extraordinarily higher or lower than the rest of the data. Identified outliers are indicative of interesting, unusual, and possibly dangerous events. This Assistant is restricted to one numeric data field.

- The Detect Categorical Outliers Experiment Assistant identifies data that indicate interesting or unusual events. This Assistant allows non-numeric and multi-dimensional data, such as string identifiers and IP addresses. To detect categorical outliers, input your data and select the fields from which to look for unusual combinations or a coincidence of rare values. When multiple fields have rare values, the result is an outlier.

- The Forecast Time Series Experiment Assistant forecasts the next values in a sequence for a single time series. Forecasting makes use of past time series data trends to make a prediction about likely future values. The result includes both the forecasted value and a measure of the uncertainty of that forecast.

- The Cluster Numeric Events Experiment Assistant partitions events into groups based on the values of their numeric fields. As the groupings are not known in advance, this is considered unsupervised learning.
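
As an illustration of numeric outlier detection on a single field, the following Python sketch flags values that fall far from the median, using the median absolute deviation (MAD) as the measure of spread. This is one common thresholding approach, shown under stated assumptions; it is not presented as the method the Assistant uses. The function name `detect_numeric_outliers`, the `multiplier` parameter, and the sample values are hypothetical.

```python
from statistics import median


def detect_numeric_outliers(values, multiplier=3.0):
    """Return the values farther than multiplier * MAD from the median."""
    center = median(values)
    # Median absolute deviation: a spread measure that a few extreme
    # values cannot inflate the way they inflate a standard deviation.
    mad = median(abs(v - center) for v in values)
    lower = center - multiplier * mad
    upper = center + multiplier * mad
    # Note: if mad is 0, every value that differs from the median is flagged.
    return [v for v in values if v < lower or v > upper]


# Hypothetical response times with one extraordinarily high value.
response_times = [10, 12, 11, 13, 12, 11, 95]
outliers = detect_numeric_outliers(response_times)
print(outliers)
```

Raising `multiplier` widens the accepted band and flags fewer values. Lowering it makes the detection more sensitive.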

This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 4.1.0
