Smart Outlier Detection Assistant

The Smart Outlier Detection Assistant enables machine learning outcomes for users with little to no SPL knowledge. Introduced in version 5.0.0 of the Machine Learning Toolkit, this new Assistant is built on the backbone of the Experiment Management Framework (EMF), offering enhanced outlier detection abilities. The Smart Outlier Detection Assistant offers a segmented, guided workflow with an updated user interface. Move through the stages of Define, Learn, Review, and Operationalize to load data, build your model, and put that model into production. Each stage offers a data preview and visualization panel.

This Assistant uses the DensityFunction algorithm. DensityFunction persists a model with the fit command, and you can then use that model with the apply command. DensityFunction creates and stores density functions for use in anomaly detection. It groups the data, then fits and stores a separate density function for each group.
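The per-group idea can be sketched in Python. This is a simplified illustration, not the MLTK implementation. It assumes every group follows a normal distribution, although DensityFunction can fit other distribution types. The helper names `fit_density_functions` and `is_outlier` are hypothetical. A value counts as an outlier when the area in both tails beyond it, under its group's fitted density, is smaller than `threshold`.

```python
from collections import defaultdict
from statistics import NormalDist


def fit_density_functions(rows, field, by):
    """Fit one normal density per group; `by` is a tuple of split-by field names."""
    groups = defaultdict(list)
    for row in rows:
        # The group key combines the values of every split-by field
        key = tuple(row[name] for name in by)
        groups[key].append(row[field])
    # from_samples estimates mean and standard deviation (needs >= 2 values per group)
    return {key: NormalDist.from_samples(values) for key, values in groups.items()}


def is_outlier(dist, value, threshold):
    """True if the two-tailed area beyond `value` is smaller than `threshold`."""
    distance = abs(value - dist.mean)
    tail_area = 2 * (1 - dist.cdf(dist.mean + distance))
    return tail_area < threshold


rows = [{"store": "A", "amount": v} for v in [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]]
rows += [{"store": "B", "amount": v} for v in [100, 102, 98, 101, 99]]
models = fit_density_functions(rows, "amount", by=("store",))

# The same amount is typical for store B but anomalous for store A
print(is_outlier(models[("A",)], 100, 0.01))  # True
print(is_outlier(models[("B",)], 100, 0.01))  # False
```

Fitting one density per group lets the same value be normal in one group and anomalous in another. This is why split by fields can change the results.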

To learn more about the Smart Outlier Detection Assistant algorithm, see DensityFunction algorithm.

The accuracy of the anomaly detection for DensityFunction depends on the quality and the size of the training dataset, how accurately the fitted distribution models the underlying process that generates the data, and the value chosen for the threshold parameter.

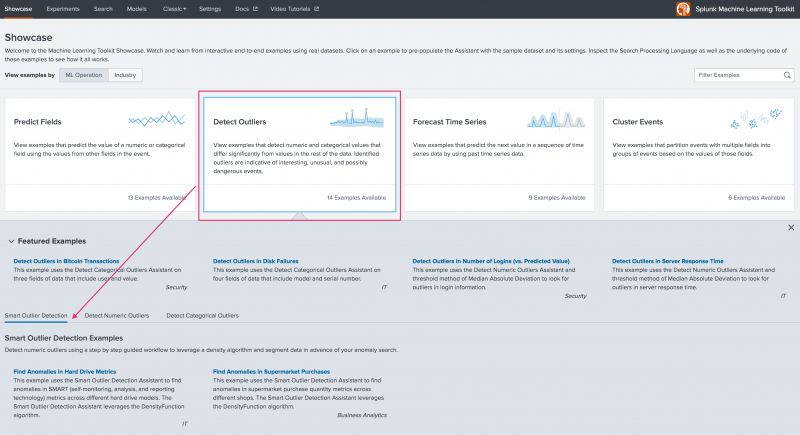

Smart Outlier Detection Assistant Showcase

You can become familiar with this new Assistant through the MLTK Showcase, which you can access under its own tab. The Smart Outlier Detection Showcase examples include:

- Find Anomalies in Hard Drive Metrics

- Find Anomalies in Supermarket Purchases

Click the name of any Smart Outlier Detection Showcase to see this new Assistant and its updated interface using pre-loaded test data and pre-selected outlier detection parameters.

Smart Outlier Detection Assistant Showcases require you to click through to continue the demonstration. Showcases do not include the final stage of the Assistant workflow to Operationalize the model.

Smart Outlier Detection Assistant workflow

Move through the stages of Define, Learn, Review, and Operationalize to draw in data, build your model, and put that model into production.

This example workflow uses the supermarket.csv dataset that ships with the MLTK. You can use this dataset or another of your choice to explore the Smart Outlier Detection Assistant and its features before building a model with your own data.

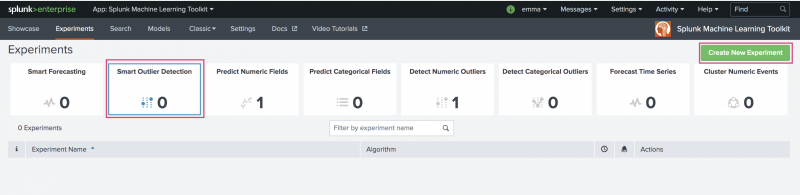

To begin, select Smart Outlier Detection from the Experiments landing page, then click the Create New Experiment button in the top right.

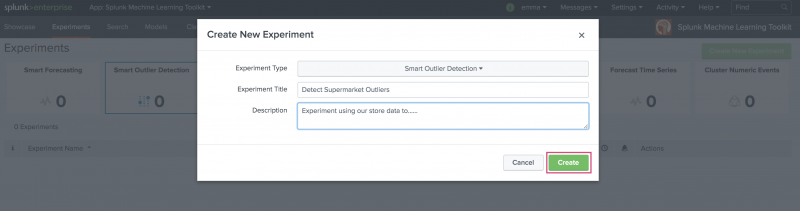

Enter an Experiment Title, and optionally add a Description. Click Create to move into the Assistant interface.

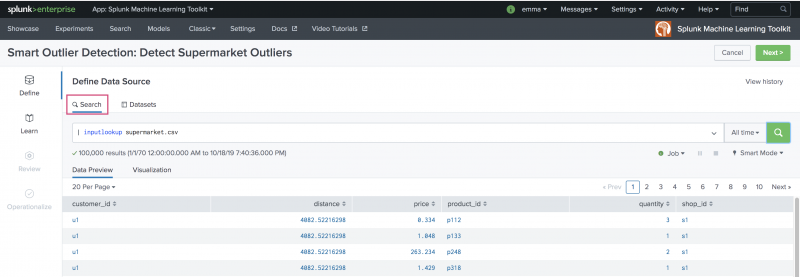

Define

Use the Define stage to select and preview the data you want to use for outlier detection. You can pull in data from anywhere in the Splunk platform. You can use the Search bar to modify your dataset before you use that data in the Learn stage.

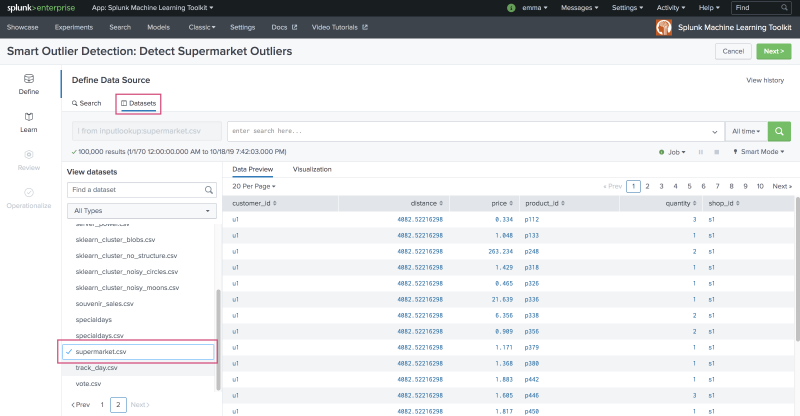

As an alternative to accessing data via Search, you can choose the Datasets option. Under Datasets, you can find any data you have ingested into Splunk, as well as any datasets that ship with Splunk Enterprise and the Machine Learning Toolkit. You can filter by type to find your preferred data faster.

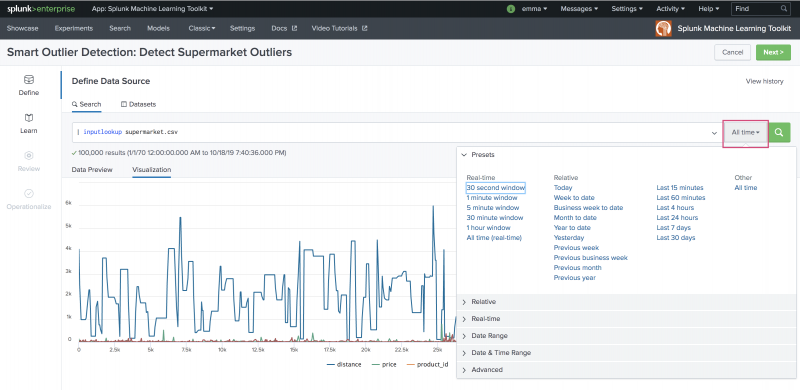

As with other Experiment Assistants, the Smart Outlier Detection Assistant includes a time range picker to narrow the data to a particular date or date range. You can change the default setting of All time to suit your needs. Once data is selected, the Data Preview and Visualization tabs populate.

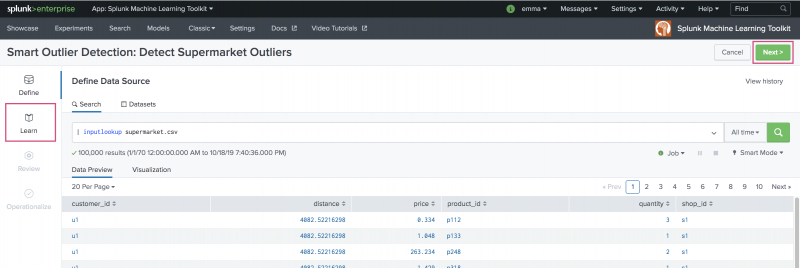

When you are finished selecting your data, click Next in the top right, or Learn from the left-hand menu to move on to the next stage of the Assistant.

Learn

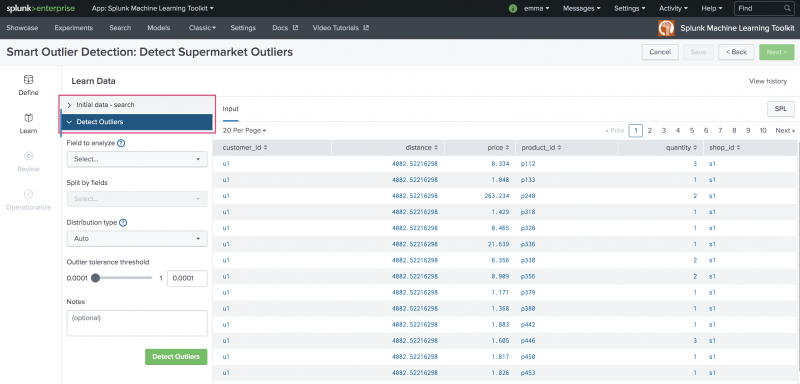

Use the Learn stage to create your outlier detection model. Select from the fields within this stage to customize and complete the outlier detection outcome.

The Learn stage is made up of two sections: Initial data and Detect Outliers. The Initial data section carries over the inputs made in the Define stage. The Detect Outliers section is where you make selections that affect the machine learning results.

Refer to the following table for information on each available field. Hovering over the question mark helper icons beside fields also provides field descriptions.

| Field name | Description |
|---|---|
| Field to analyze | Required field. From the drop-down menu populated from your dataset, select the field on which you want to perform outlier detection. |
| Split by fields | Optional field. Select up to five fields. Use split by fields if the anomaly might differ based on the data in a particular field. A cardinality histogram is not generated unless you select at least one split by field. |
| Distribution type | Required field. Choose the distribution type based on the statistical behavior of the data. If unsure, leave the default selection of Auto. |
| Outlier tolerance threshold | Required field. Adjust as needed based on the number of outliers you expect. |
| Notes | Optional field. Use this free-form text block to track the selections made in the parameter fields. Refer back to your notes to review which parameter combinations yield the best results. |
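This page does not describe how the Auto distribution type makes its choice. As a purely hypothetical illustration, the sketch below compares two candidate distributions, normal and exponential. It keeps whichever one assigns the data the higher log-likelihood. The function `choose_distribution` and this selection rule are assumptions, not documented MLTK behavior.

```python
import math
from statistics import NormalDist, fmean


def normal_log_likelihood(values):
    dist = NormalDist.from_samples(values)
    return sum(math.log(dist.pdf(x)) for x in values)


def exponential_log_likelihood(values):
    # The exponential distribution only covers non-negative values
    if min(values) < 0 or fmean(values) == 0:
        return -math.inf
    rate = 1 / fmean(values)  # maximum-likelihood estimate of the rate
    return sum(math.log(rate) - rate * x for x in values)


def choose_distribution(values):
    """Hypothetical 'Auto' rule: pick the candidate with the higher log-likelihood."""
    scores = {
        "normal": normal_log_likelihood(values),
        "exponential": exponential_log_likelihood(values),
    }
    return max(scores, key=scores.get)


print(choose_distribution([9, 10, 10, 11, 10, 9, 11, 10, 12, 8]))  # normal
print(choose_distribution([0.1, 0.2, 0.3, 0.5, 0.7, 1.0, 1.5, 2.2, 3.5, 6.0]))  # exponential
```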

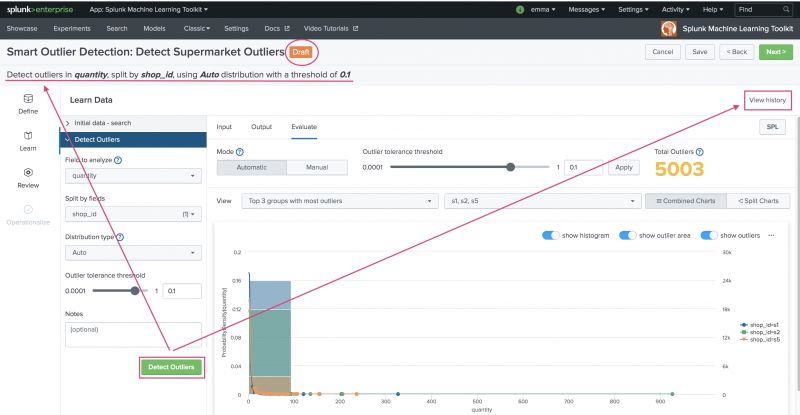

Once you make field selections, click Detect Outliers to view results. Clicking Detect Outliers creates the model using the fit command and produces a written summary of the chosen model parameters at the top of the page. The Experiment is now in a Draft state, and the View History option is available. View History allows you to track any changes you make in the Learn stage.

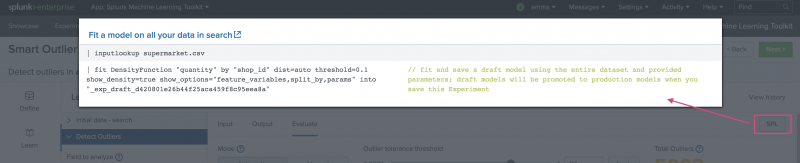

The SPL button lets you review the Search Processing Language (SPL) that the Assistant generates in the background as you work through the workflow.

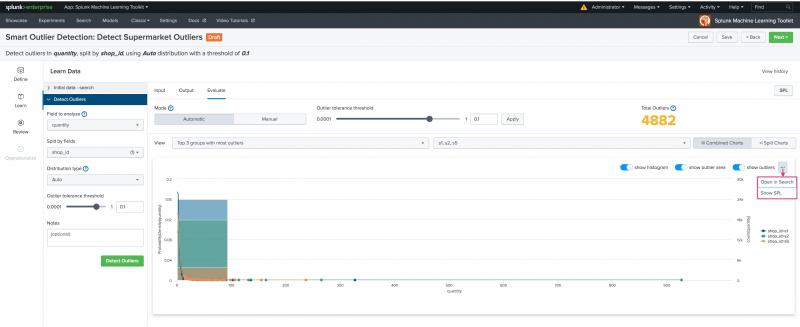

Access the underlying SPL with the SPL button. You can also use the SPL and Open in Search options in the ellipsis menu on the Evaluate tab of the Learn stage.

Changing the fields in the Detect Outliers section and clicking the Detect Outliers button retrains the model using the fit command. This process can be compute intensive. The Smart Outlier Detection Assistant also lets you change some of the available fields outside the Detect Outliers section, on the Evaluate tab. Changes made there tune the model parameters using the apply command. This option can be less compute intensive because you update model settings without retraining the model.
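The difference between retraining with fit and re-scoring with apply can be sketched as a model object. The object learns its parameters once and accepts a new threshold at scoring time. This is a conceptual Python analogy that assumes a single normal distribution. The `OutlierModel` class and its `fit_calls` counter are hypothetical. They only show that changing the threshold does not trigger another fit.

```python
from statistics import NormalDist


class OutlierModel:
    """Hypothetical model: fit() learns parameters, apply() only scores."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.dist = None
        self.fit_calls = 0

    def fit(self, values):
        # The expensive step: estimate the density from the training data
        self.dist = NormalDist.from_samples(values)
        self.fit_calls += 1
        return self

    def apply(self, values, threshold=None):
        # Reuse the stored density; an optional threshold overrides the trained one
        threshold = self.threshold if threshold is None else threshold
        outliers = []
        for x in values:
            distance = abs(x - self.dist.mean)
            tail_area = 2 * (1 - self.dist.cdf(self.dist.mean + distance))
            if tail_area < threshold:
                outliers.append(x)
        return outliers


training = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
model = OutlierModel(threshold=0.1).fit(training)

new_data = [10, 12, 13, 15]
print(model.apply(new_data))                   # [12, 13, 15]
print(model.apply(new_data, threshold=0.001))  # [15]
print(model.fit_calls)                         # 1
```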

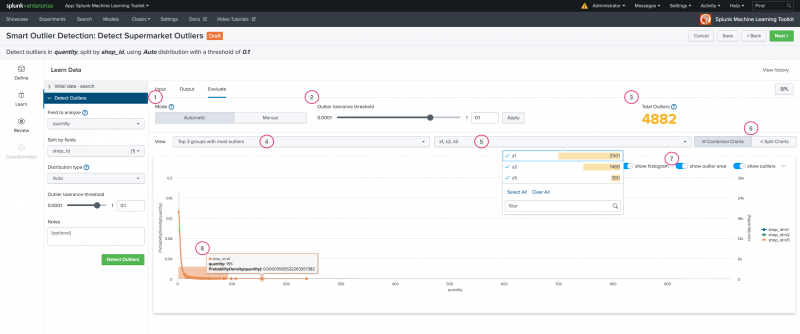

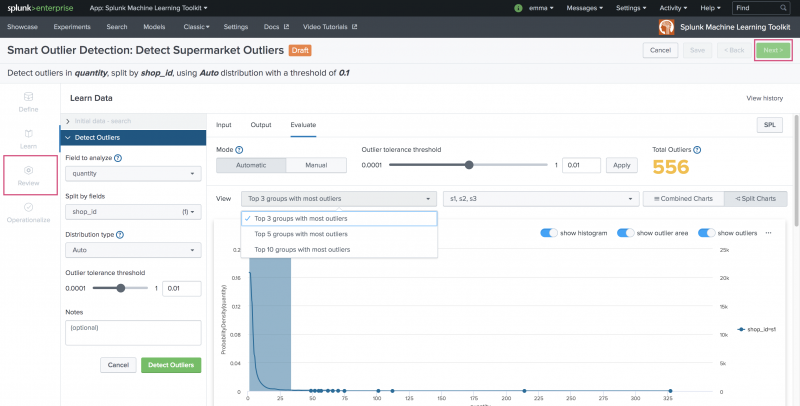

Fields and information views on the Evaluate tab include the following items, numbered to match the screenshot:

1. Toggle the distribution mode between Automatic and Manual.

2. Change the tolerance threshold for outliers. Click Apply to update the model parameters.

3. View the total number of outliers based on current settings.

4. Choose to view the top three, top five, or top ten groups with the most outliers. This option only appears if at least one split by field is selected.

5. Use this drop-down menu for a quick view of the top outlier group values, based on the selection made in item 4.

6. Choose a split or combined chart view. This option only appears if at least one split by field is selected.

7. Show or hide the histogram, outlier area, and outliers. All view options are available in both the combined and split chart views.

8. Hover over points within the visualization for additional details.
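The top three, five, or ten view can be thought of as a count of outliers per split-by group that keeps the groups with the highest counts. Here is a minimal sketch. It assumes each scored record already carries a group key and an `is_outlier` flag, and both field names are hypothetical.

```python
from collections import Counter


def top_outlier_groups(records, n=3):
    """Return the n groups with the most outliers as (group, count) pairs."""
    counts = Counter(r["group"] for r in records if r["is_outlier"])
    return counts.most_common(n)


records = [
    {"group": "store-1", "is_outlier": True},
    {"group": "store-1", "is_outlier": True},
    {"group": "store-2", "is_outlier": True},
    {"group": "store-2", "is_outlier": False},
    {"group": "store-3", "is_outlier": False},
]
print(top_outlier_groups(records, n=3))  # [('store-1', 2), ('store-2', 1)]
```

Groups with no outliers never enter the count, so the result can be shorter than `n`.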

When you are happy with your results, click Next in the top right, or Review from the left-hand menu to move on to the next stage of the Assistant.

Outlier tolerance threshold settings

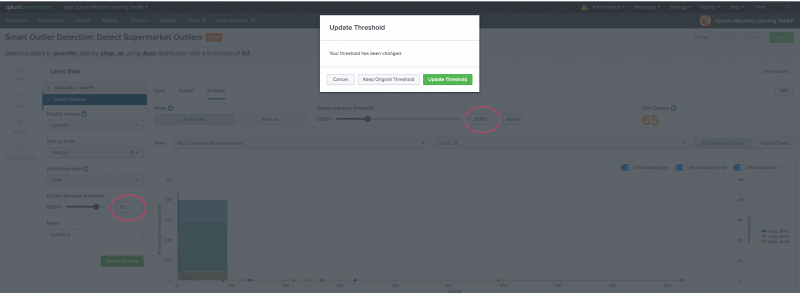

If you change the Outlier tolerance threshold parameter without retraining the model and then try to move on to the Review stage, a modal window appears. In this example, the model was trained with an outlier tolerance threshold of 0.1, but the parameter has since been adjusted to 0.001. Choosing Keep Original Threshold keeps the outlier tolerance threshold of 0.1 and ignores the adjusted setting. Choosing Update Threshold retrains the model using the adjusted setting of 0.001 and updates this setting in the Detect Outliers menu.
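To see why the choice between 0.1 and 0.001 matters, assume a normal density. This is an assumption for illustration only, because the actual distribution depends on the Distribution type setting. The sketch also treats the threshold as the share of the area under the density curve that counts as outlying, split equally between both tails. Under these assumptions, the inverse cumulative distribution function gives the cutoff in standard deviations. The helper name `cutoff_in_std_devs` is hypothetical.

```python
from statistics import NormalDist


def cutoff_in_std_devs(threshold):
    """Distance from the mean, in standard deviations, beyond which points are outliers.

    Assumes a normal density and splits the threshold area equally between both tails.
    """
    return NormalDist().inv_cdf(1 - threshold / 2)


for threshold in (0.1, 0.001):
    print(threshold, round(cutoff_in_std_devs(threshold), 2))
# 0.1 1.64
# 0.001 3.29
```

Under these assumptions, lowering the threshold from 0.1 to 0.001 moves the boundary from about 1.64 to about 3.29 standard deviations, so fewer points are flagged as outliers.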

Review

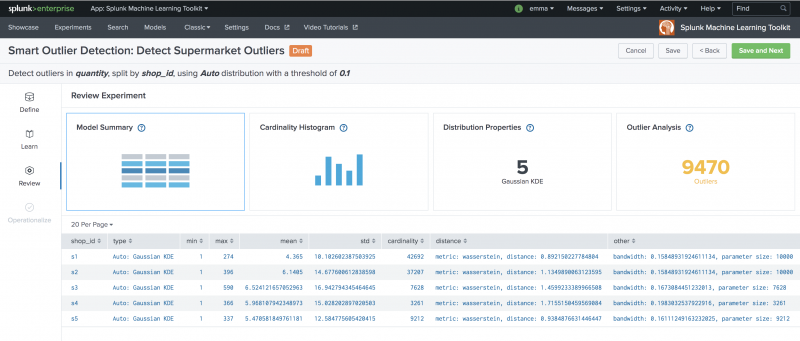

Use the Review stage to explore the resulting model based on the fields selected at the Learn stage. The Review panels give you the opportunity to assess your outlier detection results prior to putting the model into production.

There are four panels in this stage as seen in the following image and described in the table:

| Panel name | Description |
|---|---|
| Model Summary | The default view, showing a summary table based on the selections made in the Learn stage. |
| Cardinality Histogram | View a histogram of groups by number of data points. Groups are based on the fields chosen in the split by fields section of the Learn stage. Fewer groups with a low number of data points can indicate a more reliable model. To see different results, increase the amount of data in the search or change the fields selected in the split by fields section. If no split by fields were selected in the Learn stage, this panel shows no results. |
| Distribution Properties | Use this view to see how groups with a similar type of distribution relate to one another. Sharp, narrow histograms of mean and standard deviation can mean that most of those groups have similar statistical behavior. Two distinct peaks in the histograms can signal two distinct characteristics in the groups that are worth further investigation. If no split by fields were selected in the Learn stage, this panel shows no results. |
| Outlier Analysis | View outliers broken down by the split by fields selected in the Learn stage. Use this breakdown to gain insight into individual dimensions that could require further investigation. If no split by fields were selected in the Learn stage, this panel shows no results. |
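The Cardinality Histogram can be approximated in two steps: count the data points per group, then count how many groups fall into each size bucket. Here is a minimal sketch. The bucket width and the function name `cardinality_histogram` are assumptions for illustration.

```python
from collections import Counter


def cardinality_histogram(group_keys, bucket_width=10):
    """Map each bucket's lower bound to the number of groups whose size falls in it."""
    sizes = Counter(group_keys)  # data points per group
    buckets = Counter((size // bucket_width) * bucket_width for size in sizes.values())
    return dict(sorted(buckets.items()))


# Hypothetical split-by values: one value per data point
keys = ["A"] * 3 + ["B"] * 4 + ["C"] * 25 + ["D"] * 27
print(cardinality_histogram(keys))  # {0: 2, 20: 2}
```

Here two of the four groups have fewer than 10 data points. These are the low-count groups that the panel description suggests keeping to a minimum.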

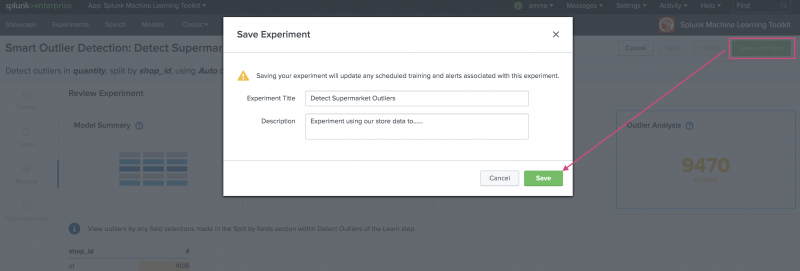

Navigate back to the Learn stage to make outlier detection adjustments, or click Save and Next to continue. Clicking Save and Next generates a modal window that offers the opportunity to update the Experiment name or description. When ready, click Save.

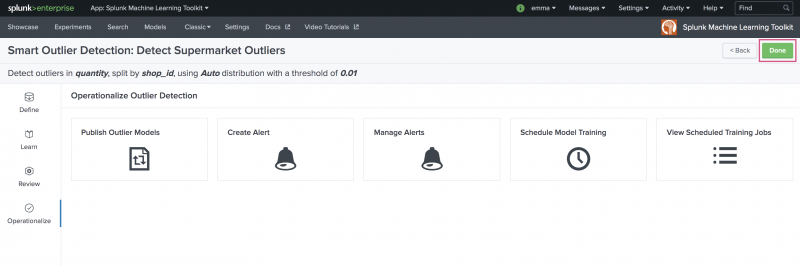

Operationalize

The Operationalize stage provides publishing, alerting, and scheduled training in one place. Click Done to move to the Experiments listings page.

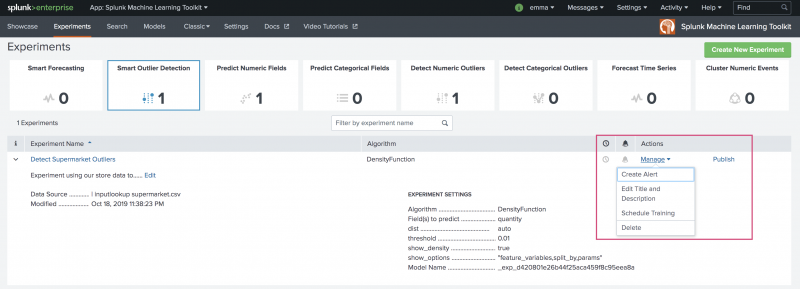

The Experiments listing page provides a place to publish, set up alerts, and schedule training for any of your saved Experiments across all Assistant types including Smart Forecasting.

Learn more

To learn about implementing analytics and data science projects using Splunk's statistics, machine learning, built-in and custom visualization capabilities, see the Splunk for Analytics and Data Science course.


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 4.5.0, 5.0.0, 5.1.0
