Troubleshoot the MLTK

Refer to the following list for some common questions about the Machine Learning Toolkit and how to resolve them. If you don't see the information you need, you can ask your question and get answers through community support at Splunk Answers.

Getting started with MLTK

How do I get started with machine learning in Splunk?

If you have not done so already, we recommend reviewing these introductory documents:

You can refer to the following blogs on machine learning and other related topics:

- Interested in Statistical Forecasts and Anomalies? Check out Part 1, Part 2 and Part 3 of this blog

- If you have ITSI or are interested in predictive analytics, check out this blog on ITSI and Sophisticated Machine Learning

- If you have ITSI or are interested in custom anomaly detection, check out Part 1 and Part 2 of this blog

- If you are hungry for ice cream or anomalies in three flavors, check out Part 1 and Part 2 of this blog

Visit our YouTube channel to see the MLTK in action.

There is also an active GitHub community where you can connect with other MLTK users and share and reuse custom algorithms.

Splunk also offers a course on data science and analytics: Splunk for Data Science and Analytics

Do I have to use .CSV files to load data into the MLTK?

The Splunk platform accepts any type of data. In particular, it works with all IT streaming and historical data. The source of the data can be event logs, web logs, live application logs, network feeds, system metrics, change monitoring, message queues, archive files, data from indexes, third-party data sources, and so on. Basically any data that can be retrieved by a Splunk search can be used by the MLTK.

In general, data sources are grouped into the following categories.

| Data source | Description |
| --- | --- |
| Files and directories | Most data that you might be interested in comes directly from files and directories. |
| Network events | The Splunk software can index remote data from any network port and SNMP events from remote devices. |
| Windows sources | The Windows version of Splunk software accepts a wide range of Windows-specific inputs, including Windows Event Log, Windows Registry, WMI, Active Directory, and Performance monitoring. |
| Other sources | Other input sources are supported, such as FIFO queues and scripted inputs for getting data from APIs, and other remote data interfaces. |

For many types of data, you can add the data directly to your Splunk deployment. If the data that you want to use is not automatically recognized by the Splunk software, you need to provide information about the data before you can add it.

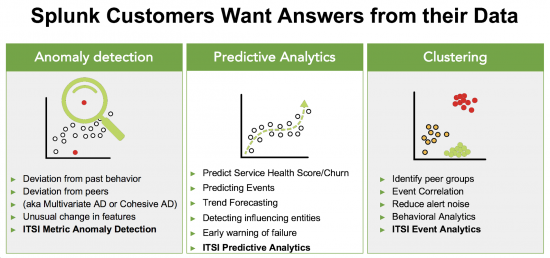

What are the most common use cases for machine learning in Splunk?

The most common use cases are as follows:

- Anomaly detection

- Predictive analytics (forecasting)

- Clustering

What is a Machine Learning Model and how is it different from a Splunk Data Model?

A machine learning model is an encoded lookup file created by a fit search command that uses the into clause. The into clause persists the learned behaviors to a file on disk so that later searches can use the model on net new data with the apply command.

Splunk Data Models are knowledge objects for organizing and accelerating your data in the Splunk platform.

Why do I need a dedicated search head for MLTK app?

You need a dedicated search head for the MLTK if you are freely experimenting and creating large numbers of machine learning models of substantial size. The search load and the machine learning workload can get large and impact your production search environment. For applying machine learning models in production (generally extremely light on resource use), or periodically retraining production models, you should be able to use your normal Splunk infrastructure. Work with your Splunk admin for your specific Splunk deployment.

Why am I seeing the error of "Error in SearchOperator: loadjob"?

You need to configure sticky sessions on your load balancer. For further information, see Use a load balancer with search head clustering.

MLTK version compatibility and associated add-ons

How do I know if I'm using the correct version of MLTK and the PSC add-on?

You can refer to the Machine Learning Toolkit version dependencies document to ensure you are running the correct version of the MLTK, Python, Python for Scientific Computing add-on, and Splunk Enterprise.

How can I assess the performance costs of MLTK searches?

Machine learning requires compute resources and disk space. Each algorithm has a different cost, complicated by the number of input fields you select and the total number of events processed. Model files are lookups and will increase bundle replication costs.

For each algorithm implemented in ML-SPL, we measure run time, CPU utilization, memory utilization, and disk activity when fitting models on up to 1,000,000 search results, and applying models on up to 10,000,000 search results, each with up to 50 fields.

Ensure you know the impact of making changes to the algorithm settings by adding the ML-SPL Performance App for the Machine Learning Toolkit to your setup via Splunkbase.

Why am I seeing an error when installing the PSC add-on?

On some Windows installations, installing the PSC add-on through the Splunk Manage Apps user interface might result in an error. This error can be ignored. In some cases it may be necessary to manually unpack the package in the apps directory to get past the error.

Can I use the MLTK in other apps? How do I do that?

Yes, you can use ML-SPL commands in other apps. You need to make the MLTK global if you want to use the ML-SPL commands across all the apps. Remember that the model files follow all the same rules as Splunk lookup files: permissions, access control, and replication.

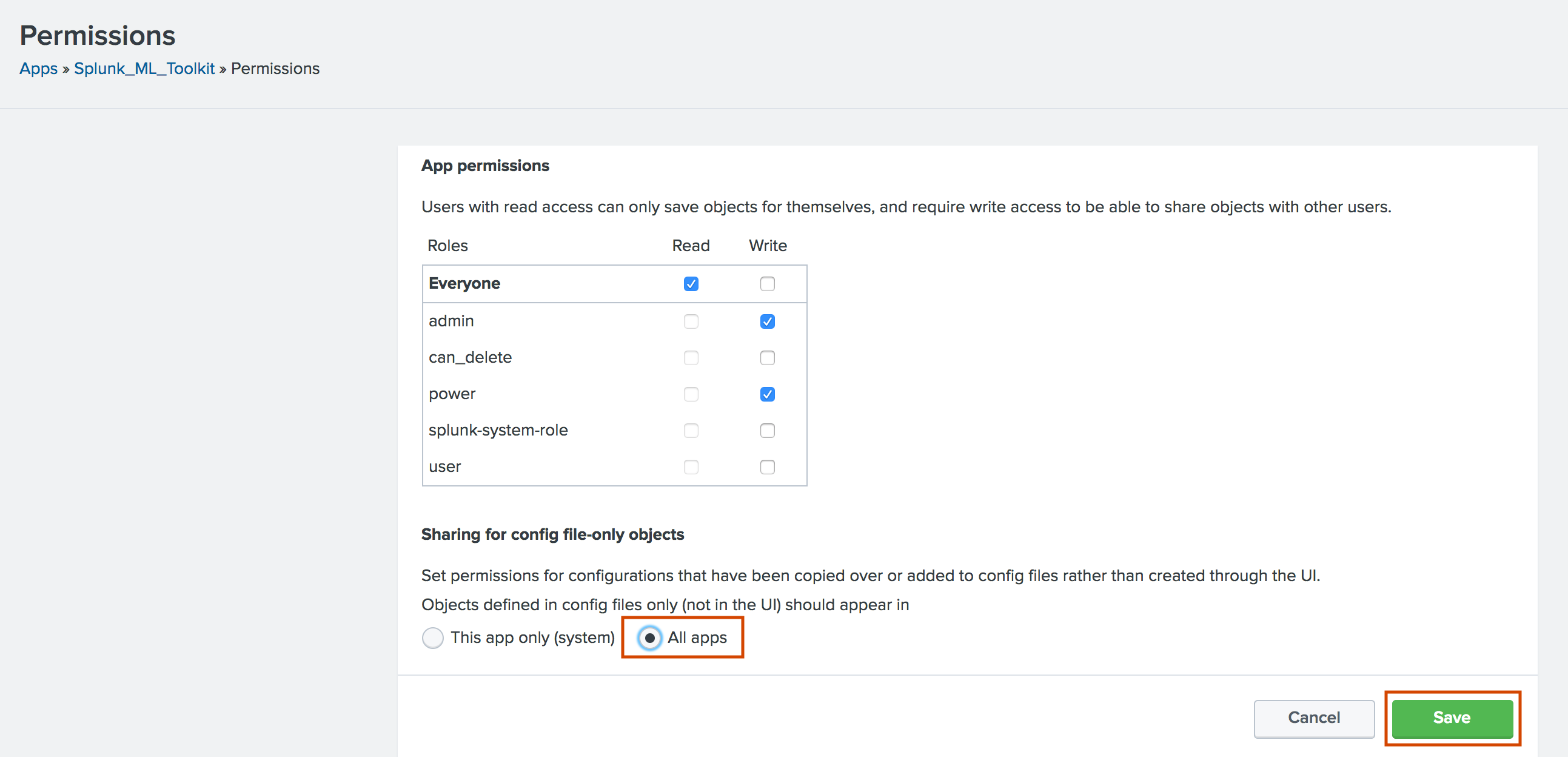

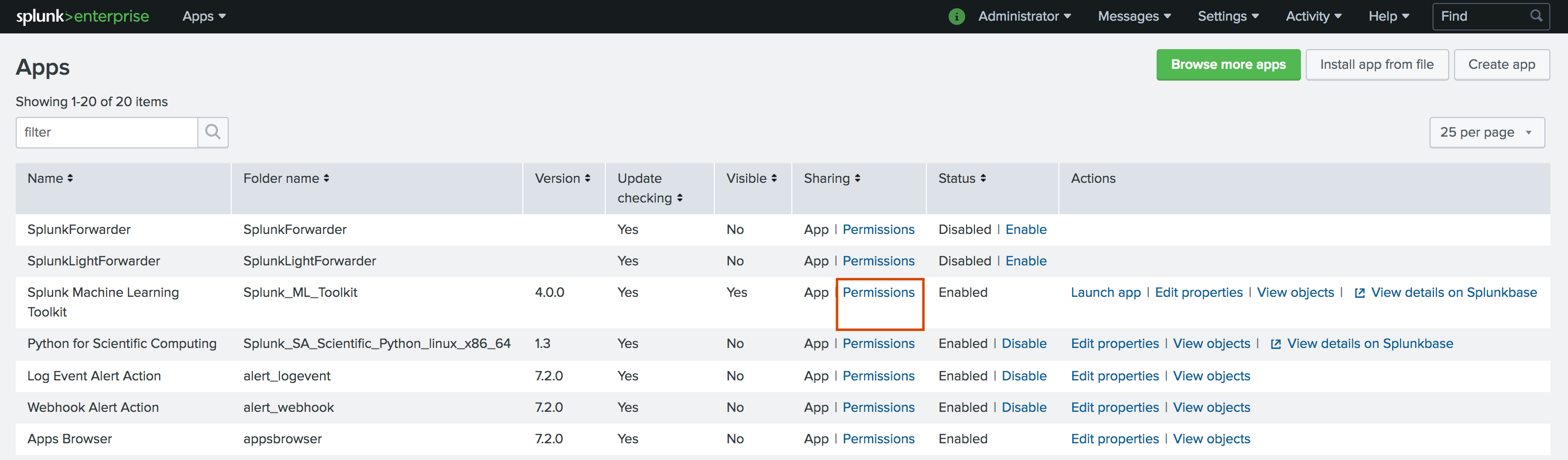

Perform the following steps:

- From the top navigation bar choose Apps ⇒ Manage Apps

- Find the Splunk Machine Learning Toolkit in the list, and click on the Permissions link in the Sharing column.

- Change the Sharing setting to All apps and (optionally) change any role-based permissions as well. Click Save when done.

We're using version 5.0.0 of the MLTK. Can we add Python 3 libraries?

For details on the steps to add Python 3 libraries, see Adding Python 3 libraries in the ML-SPL API Guide.

Support is not offered on the use of or upgrade of any Python 3 libraries added to your Splunk platform instance. Any upgrade to the MLTK or the PSC add-on will overwrite any Python library changes.

MLTK know-how

What is partial fit and how does it work?

If an algorithm supports partial_fit, you can incrementally learn on net new data without loading the entire training history in a single search.

We recommend watching this brief video for details on the ways your machine learning workflows can update and learn through time: How Does the Splunk Machine Learning Toolkit Learn?

As with the fit command, you want a lightweight search. Refer back to this question for more information.

Several of the MLTK algorithms offer the partial_fit option. To find out which algorithms support this option, see Algorithms that support partial_fit. For a detailed list of available algorithms, see Algorithms in the MLTK.
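To make the idea of incremental learning concrete, here is a minimal Python sketch. It is not the MLTK's implementation. It keeps a running mean and variance that are updated one batch at a time, which is the kind of statistic a scaler can maintain without rereading older data. The class name RunningScaler and the sample values are hypothetical.

```python
class RunningScaler:
    """Hypothetical helper: tracks mean and variance one batch at a time."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def partial_fit(self, batch):
        # Welford's update: fold each new value into the running statistics
        for x in batch:
            self.count += 1
            delta = x - self.mean
            self.mean += delta / self.count
            self.m2 += delta * (x - self.mean)
        return self

    @property
    def variance(self):
        # Population variance of everything seen so far
        return self.m2 / self.count if self.count else 0.0


scaler = RunningScaler()
scaler.partial_fit([2.0, 4.0, 4.0, 4.0])  # first batch of training data
scaler.partial_fit([5.0, 5.0, 7.0, 9.0])  # net new data; the first batch is not reloaded
```

After both calls the statistics match what a single pass over all eight values would produce, which is the property that makes incremental fitting useful for scheduled searches over short time windows.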

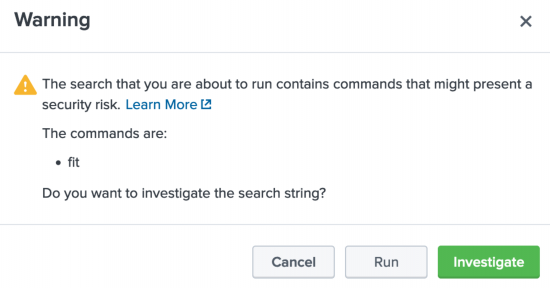

Why am I seeing a security warning when I run the fit command?

There are a handful of scenarios that throw a security warning dialog box when the fit command is called.

The scenarios under which this warning appears include:

- When the fit command is run for the first time after logging into the system with a URL.

- When the user refreshes the page or logs back in with the URL.

- When the user chooses the Open in Search option from within the MLTK.

- When viewing certain Showcase examples.

Users with Write permission can edit the web.conf file to turn off the warning dialog box. For directions, see Turning off the warning dialog box.

What are the automatic sampling and performance settings for the MLTK, and why should I change them?

By default, reservoir sampling is enabled and starts sampling once the number of events that your search passes to the fit command exceeds 100,000.

If you do not want to use reservoir sampling and have resources available on your Splunk machine, you can disable it and change the maximum number of inputs to a preferred number. In an environment set aside for machine learning workloads, where there is no impact on production searches, it is not uncommon to increase the max_inputs setting into the millions.

Ensure you have enough compute and memory resources available before making these changes. You will likely need to change max_memory_usage_mb and other options in Settings as you increase the number of events you want to process.
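As an illustration of how reservoir sampling caps the number of events, the following Python sketch uses the classic single-pass approach, often called Algorithm R. This is an assumption for illustration only, because the MLTK's internal sampling code is not shown in this document. The function name reservoir_sample is hypothetical, and the max_inputs parameter simply mirrors the setting name.

```python
import random


def reservoir_sample(events, max_inputs, seed=None):
    """Keep a uniform random sample of at most max_inputs items from a stream."""
    rng = random.Random(seed)
    reservoir = []
    for i, event in enumerate(events):
        if i < max_inputs:
            # Fill the reservoir until it reaches the limit
            reservoir.append(event)
        else:
            # Keep the new event with probability max_inputs / (i + 1),
            # overwriting a randomly chosen existing entry
            j = rng.randint(0, i)
            if j < max_inputs:
                reservoir[j] = event
    return reservoir


# 250,000 events exceed the limit, so only 100,000 are kept
sample = reservoir_sample(range(250_000), max_inputs=100_000, seed=42)

# 500 events are under the limit, so all of them pass through unchanged
small = reservoir_sample(range(500), max_inputs=100_000, seed=42)
```

The sketch shows why raising the limit costs memory: the reservoir holds up to max_inputs events at once, regardless of how many events the search returns.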

Is there a way to increase the number of fields to forecast when using the Smart Forecasting Assistant?

You can select a maximum of five fields to forecast in the Smart Forecasting Assistant. This limit is in place to make it easy for users to read and view the resulting charts. You can use more than five fields outside of the Assistant environment by using SPL in the search bar.

MLTK algorithms and ML-SPL commands

Do I have options outside of the 30 native algorithms in the MLTK?

Yes! On-prem customers looking for solutions that fall outside of the 30 native algorithms can use GitHub to add more algorithms. Solve custom use cases through sharing and reusing algorithms in the Splunk Community for MLTK on GitHub. Here you can also learn about new machine learning algorithms from the Splunk open source community, and connect with fellow users of the MLTK.

Splunk Cloud Platform customers can also use GitHub to add more algorithms via an app. The Splunk GitHub for Machine learning app provides access to custom algorithms and is based on the Machine Learning Toolkit open source repo. Splunk Cloud Platform customers need to create a support ticket to have this app installed.

To access the Machine Learning Toolkit open source repo, see the MLTK GitHub repo.

The Machine Learning Toolkit and the Python for Scientific Computing add-on must be installed in order for GitHub to work in your Splunk environment.

Which MLTK algorithms support partial_fit?

The BernoulliNB, Birch, GaussianNB, MLPClassifier, StandardScaler, SGDClassifier, SGDRegressor, and StateSpaceForecast algorithms all support partial_fit, also called incremental fit.

To view this information in a table view, along with information on which algorithms support the fit, apply, and summary commands, see Algorithm support of key ML-SPL commands quick reference.

What are the side effects of the fit and apply commands on my data?

Machine learning commands from the MLTK are very powerful and have a number of automation steps built into them. The fit and apply commands have a number of caveats and features to accelerate your success with machine learning in Splunk. See Using the fit and apply commands.

At the highest level, the fit and apply commands perform the following tasks:

- The fit command produces a learned model based on the behavior of a set of events.

- The fit command then applies that model to the current set of search results in the search pipeline.

- The apply command repeats the field selection of the fit command steps.

Steps of the fit command:

- Search results are pulled into memory.

- The fit command transforms the search results in memory through these data preparation actions:

  - Discard fields that are null throughout all the events.

  - Discard non-numeric fields with more than (>) 100 distinct values.

  - Discard events with any null fields.

  - Convert non-numeric fields into "dummy variables" by using one-hot encoding.

- Convert the prepared data into a numeric matrix representation and run the specified machine learning algorithm to create a model.

- Apply the model to the prepared data and produce new (predicted) columns.

- Learned model is encoded and saved as a knowledge object.
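The data preparation actions of the fit command can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the MLTK's code: events are plain dictionaries, None stands for a null value, the function name prepare_for_fit is hypothetical, and the "field=value" naming of dummy variables is an assumed convention. The field names are borrowed from the track_day.csv examples in this document.

```python
def is_numeric(value):
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def prepare_for_fit(events, max_distinct=100):
    """Return (column_names, matrix) for a list of event dicts; None means null."""
    fields = sorted({name for event in events for name in event})

    # Discard fields that are null throughout all the events
    fields = [f for f in fields if any(e.get(f) is not None for e in events)]

    # Discard non-numeric fields with more than max_distinct distinct values
    kept = []
    for f in fields:
        values = {e.get(f) for e in events if e.get(f) is not None}
        numeric = all(is_numeric(v) for v in values)
        if numeric or len(values) <= max_distinct:
            kept.append((f, numeric))

    # Discard events with any null fields
    rows = [e for e in events if all(e.get(f) is not None for f, _ in kept)]

    # Convert non-numeric fields into dummy variables (one-hot encoding)
    columns = []  # (output column name, source field, category or None)
    for f, numeric in kept:
        if numeric:
            columns.append((f, f, None))
        else:
            for category in sorted({r[f] for r in rows}, key=str):
                columns.append((f"{f}={category}", f, category))

    # Build the numeric matrix, one row per remaining event
    matrix = [
        [float(r[f]) if category is None else float(r[f] == category)
         for _, f, category in columns]
        for r in rows
    ]
    return [name for name, _, _ in columns], matrix


events = [
    {"speed": 10, "vehicleType": "car", "note": None},
    {"speed": 20, "vehicleType": "truck", "note": None},
    {"speed": None, "vehicleType": "car", "note": None},
]
names, matrix = prepare_for_fit(events)
```

In this example the note field is dropped because it is always null, the third event is dropped because speed is null, and vehicleType becomes two dummy columns. The sketch makes the main side effect visible: events and fields can silently disappear before the algorithm ever sees them.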

Steps of the apply command:

- Load the learned model.

- The apply command transforms the search results in memory through these data preparation actions:

  - Discard fields that are null throughout all the events.

  - Discard non-numeric fields with more than (>) 100 distinct values.

  - Convert non-numeric fields into "dummy variables" by using one-hot encoding.

  - Discard dummy variables that are not present in the learned model.

  - Fill missing dummy variables with zeros.

- Convert the prepared data into a numeric matrix representation.

- Apply the model to the prepared data and produce new (predicted) columns.
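The two dummy-variable steps of the apply command are the ones that most often surprise users, so here is a minimal Python sketch of them. It is an illustration only, not the MLTK's code. The function name align_to_model is hypothetical, the "field=value" naming of dummy variables is an assumed convention, and the sketch assumes numeric fields are present in every event.

```python
def align_to_model(events, model_columns):
    """Encode events against the columns a model was trained on.

    model_columns lists a plain field name for each numeric field and
    "field=value" for each dummy variable (a naming convention assumed here).
    """
    matrix = []
    for event in events:
        # One-hot encode: each non-numeric value becomes a "field=value" dummy set to 1
        encoded = {}
        for field, value in event.items():
            if isinstance(value, (int, float)):
                encoded[field] = float(value)
            elif value is not None:
                encoded[f"{field}={value}"] = 1.0
        # Looking up only model_columns discards dummies the model never saw;
        # defaulting to 0.0 fills the dummies that are missing from this event
        matrix.append([encoded.get(column, 0.0) for column in model_columns])
    return matrix


model_columns = ["speed", "vehicleType=car", "vehicleType=truck"]
new_events = [
    {"speed": 30, "vehicleType": "car"},
    {"speed": 40, "vehicleType": "bus"},  # "bus" was not seen during training
]
aligned = align_to_model(new_events, model_columns)
```

The second event shows the consequence of a category the model never saw: its dummy variable is discarded and the known dummies are all zero, so the prediction for that event is based on the numeric fields alone.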

How do I use a savedsearch with the apply command?

MLTK does not fully support using the apply command with savedsearch. If you are experiencing issues using apply with savedsearch, you can try rewriting your SPL with the appendcols keyword. The appendcols keyword appends the column that gets returned from the subsearch to the savedsearch columns, instead of assigning the value to a non-existing column in the savedsearch.

To learn more, see Appendcols in the Search Reference manual.

The following example shows the appendcols keyword in action:

- | inputlookup track_day.csv | fit LogisticRegression fit_intercept=true "vehicleType" from "batteryVoltage" "engineCoolantTemperature" "engineSpeed" "lateralGForce" "longitudeGForce" "speed" "verticalGForce" into "example_vehicle_type"

- | makeresults count=50000 (save this search as MLA3950)

- | savedsearch MLA3950 | appendcols [| inputlookup track_day.csv | apply "example_vehicle_type" | table vehicleType]

This appends the vehicleType column from the subsearch to the savedsearch columns.

How do you nest multiple uses of the score command?

For the time being you will need to nest your score commands. Follow a pattern such as in this example with your own data.

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply example_vehicle_type as DT_prediction probabilities=true | multireport [| score confusion_matrix vehicleType against DT_prediction] [| score roc_auc_score vehicleType against "probability(vehicleType=2013 Audi RS5)" pos_label="2013 Audi RS5"]

MLTK model management

How often should I run fit to retrain models?

In general, you are unlikely to need to run a fit search to update a specific set of models in production more often than once a day. When you are exploring and experimenting, you may be running fit more frequently to iteratively create your production machine learning solutions.

You can consider the following factors:

- How often is your data significantly changing its overall behavior?

- How resource-intensive is your base search before the machine learning commands? For example, are you loading 3 billion events with a search over a 30-day window, and does the search take 45 minutes to load before the first fit command is called?

- How computationally intensive are your selected algorithms? Remember to check out the ML-SPL Performance App.

Consider accelerating your base search, perhaps using Data Models or Summary Indexes, to speed up the base search!

How do I manage version control for my model files?

We do not have model history as part of the MLTK today, but we do have Experiment history stored to reload any saved change made to your Experiment.

If you want to version your models, remember they are just Splunk lookup objects and follow all the rules of Splunk knowledge objects. You can rename your models just like any other lookup object.

Renaming of models in this instance refers to models outside of the Experiment Management Framework, not to models created within an Experiment.

How do I move my model files from one Splunk instance to another?

Model files are lookups in Splunk and follow all the rules for lookups. You can find the files on disk and, with command line access, move those lookups to another Splunk instance. To learn more, see the documentation on namespacing and permissions of lookups.

How do I access Classic Assistant history?

In versions of the MLTK including version 3.2.0 you could access data using a Load Existing Settings UI. That UI is not present in more current versions of the MLTK but the data is available. You can try these steps to retrieve and access your older settings.

- Using Search, input | kvstorelookup collection_name=<collection_name>.

- Replace the collection_name value with the correct name for the Assistant used.

- The value of collection_name is one of the following: linear_regression_history, classification_history, categorical_outlier_detection_history, clustering_history, or forecast_history.

How do you feed data from an existing Splunk data model into the Machine Learning Toolkit?

This is done in the same way you search for data in a Data Model anywhere else in Splunk.

For example:

| datamodel network_traffic search | search tag=destination

Remember that any data that can be retrieved by a Splunk search can be used with the Machine Learning Toolkit, including data from indexes or third-party data sources. You simply append the applicable | fit ... or | apply ... command to that search.

Searching in MLTK

Why isn't my search returning any results?

If you are using an example or sample search, that search will likely need tuning to work properly on your data, for example by changing the index, sourcetype, or field names. As with all Splunk searches, you may need to troubleshoot your SPL by iterating through it command by command. Try each command in turn over a short time period to ensure that you are getting results from an index and that you aren't filtering out data with any subsequent commands.

Why does my search keep cutting off at 100k records?

All of the algorithms that ship with the MLTK can be configured to manage processing resources. By default these settings are low, so you may need to change the max_inputs setting for the algorithm you are using to meet your needs. See Configure algorithm performance costs.

Why is my search timing out with an error?

All of the algorithms that ship with the MLTK can be configured to manage processing resources. By default these settings are low, so you may need to change the max_fit_time setting for the algorithm you are using to meet your needs. See Configure algorithm performance costs.

Memory limits are configured the same way. If the error relates to memory use, you may need to change the max_memory_usage_mb setting for the algorithm you are using. See Configure algorithm performance costs.


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 5.3.0, 5.3.1, 5.3.3
