About data models

Data models drive the Pivot tool. Data models enable users of Pivot to create compelling reports and dashboards without designing the searches that generate them. Data models can have other uses, especially for Splunk app developers.

Splunk knowledge managers design and maintain data models. These knowledge managers understand the format and semantics of their indexed data and are familiar with the Splunk search language. In building a typical data model, knowledge managers use knowledge object types such as lookups, transactions, search-time field extractions, and calculated fields.

What is a data model?

A data model is a hierarchically structured search-time mapping of semantic knowledge about one or more datasets. It encodes the domain knowledge necessary to build a variety of specialized searches of those datasets. These specialized searches are used by Splunk software to generate reports for Pivot users.

When a Pivot user designs a pivot report, they select the data model that represents the category of event data that they want to work with, such as Web Intelligence or Email Logs. Then they select a dataset within that data model that represents the specific dataset on which they want to report. Data models are composed of datasets, which can be arranged in hierarchical structures of parent and child datasets. Each child dataset represents a subset of the dataset covered by its parent dataset.

If you are familiar with relational database design, think of data models as analogs to database schemas. When you plug them into the Pivot Editor, they let you generate statistical tables, charts, and visualizations based on column and row configurations that you select.

To create an effective data model, you must understand your data sources and your data semantics. This information can affect your data model architecture, that is, the manner in which the datasets that make up the data model are organized.

For example, if your dataset is based on the contents of a table-based data format, such as a .csv file, the resulting data model is flat, with a single top-level root dataset that encapsulates the fields represented by the columns of the table. The root dataset may have child datasets beneath it, but these child datasets do not contain additional fields beyond the set of fields that they inherit from the root dataset.

Meanwhile, a data model derived from a heterogeneous system log might have several root datasets (events, searches, and transactions). Each of these root datasets can be the first dataset in a hierarchy of datasets with nested parent and child relationships. Each child dataset in a dataset hierarchy can have new fields in addition to the fields it inherits from its ancestor datasets.

Data model datasets can get their fields from custom field extractions that you have defined. Data model datasets can get additional fields at search time through regular-expression-based field extractions, lookups, and eval expressions.

The fields that data models use are divided into several types: auto-extracted, eval expression, regular expression, lookup, and Geo IP. See Dataset field types.

Data models are a category of knowledge object and are fully permissionable. A data model's permissions cover all of its data model datasets.

See Manage data models.

Data models generate searches

When you consider what data models are and how they work, it can also be helpful to think of them as collections of structured information that generate different kinds of searches. Each dataset within a data model can be used to generate a search that returns a particular dataset.

We go into more detail about this relationship between data models, data model datasets, and searches in the following subsections.

- Dataset constraints determine the first part of the search through:

  - Simple search filters (Root event datasets and all child datasets).

  - Complex search strings (Root search datasets).

  - `transaction` definitions (Root transaction datasets).

- When you select a dataset for Pivot, the unhidden fields you define for that dataset comprise the list of fields that you choose from in Pivot when you decide what you want to report on. The fields you select are added to the search that the dataset generates. The fields can include calculated fields, user-defined field extractions, and fields added to your data by lookups.

The last parts of the dataset-generated-search are determined by your Pivot Editor selections. They add transforming commands to the search that aggregate the results as a statistical table. This table is then used by Pivot as the basis for charts and other types of visualizations.
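To make the layering above concrete, here is a minimal Python sketch that assembles a search string from a dataset's constraints, the fields a Pivot user selects, and a transforming command. The function `build_pivot_search` is hypothetical, and using `fields` and `stats` to stand in for "the fields you select" and "transforming commands" is an assumption for illustration; the search that Splunk software actually generates for a pivot has a different form.

```python
def build_pivot_search(constraints, fields, split_by, aggregate="count"):
    """Hypothetical sketch: compose dataset constraints, Pivot-selected fields,
    and a transforming command into one SPL-like search string."""
    # Part 1: the dataset constraints form the start of the search (before any pipe).
    base = " ".join(constraints)
    # Part 2: keep only the fields the Pivot user selected (assumed to map to `fields`).
    field_clause = "| fields " + ", ".join(fields)
    # Part 3: a transforming command aggregates results into a statistical table.
    stats_clause = f"| stats {aggregate} by {split_by}"
    return " ".join([base, field_clause, stats_clause])


search = build_pivot_search(
    ["sourcetype=access_*", "action=purchase"],  # dataset constraints
    ["status", "host"],                           # fields chosen in Pivot
    split_by="status",                            # a row split chosen in Pivot
)
print(search)
# sourcetype=access_* action=purchase | fields status, host | stats count by status
```

The point of the sketch is the ordering: constraints come from the data model, while everything after the first pipe is driven by Pivot Editor selections.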

For more information about how you use the Pivot Editor to create pivot tables, charts, and visualizations that are based on data model datasets, see Introduction to Pivot in the Pivot Manual.

Datasets

Data models are composed of one or more datasets. Here are some basic facts about data model datasets:

- Each data model dataset corresponds to a set of data in an index. You can apply data models to different indexes and get different datasets.

- Datasets break down into four types: event datasets, search datasets, transaction datasets, and child datasets.

- Datasets are hierarchical. Datasets in data models can be arranged hierarchically in parent/child relationships. The top-level event, search, and transaction datasets in data models are collectively referred to as "root datasets."

- Child datasets have inheritance. Data model datasets are defined by characteristics that mostly break down into constraints and fields. Child datasets inherit constraints and fields from their parent datasets and have additional constraints and fields of their own.

- Child datasets provide a way of filtering events from parent datasets. Because a child dataset always provides an additional constraint on top of the constraints it has inherited from its parent dataset, the dataset it represents is always a subset of the dataset that its parent represents.

We'll dive into more detail about these and other aspects of data model datasets in the following subsections.
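The subset relationship between a child dataset and its parent can be modeled in a few lines of Python. This is a sketch under stated assumptions: the `Dataset` class, the predicate-style constraints, and the dataset names "Web Requests" and "Errors" are hypothetical and are not part of any Splunk API. It shows that because a child applies every inherited constraint plus its own, it can only ever select a subset of its parent's events.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Event = Dict[str, object]
Constraint = Callable[[Event], bool]


@dataclass
class Dataset:
    name: str
    constraint: Constraint
    parent: Optional["Dataset"] = None

    def all_constraints(self) -> List[Constraint]:
        # A child inherits every constraint of its ancestors and adds its own.
        inherited = self.parent.all_constraints() if self.parent else []
        return inherited + [self.constraint]

    def select(self, events: List[Event]) -> List[Event]:
        # An event belongs to the dataset only if it passes every constraint.
        return [e for e in events if all(c(e) for c in self.all_constraints())]


events = [
    {"sourcetype": "access_combined", "status": 200},
    {"sourcetype": "access_combined", "status": 404},
    {"sourcetype": "syslog", "status": 200},
]
web = Dataset("Web Requests", lambda e: str(e["sourcetype"]).startswith("access_"))
errors = Dataset("Errors", lambda e: e["status"] >= 400, parent=web)

web_events = web.select(events)
error_events = errors.select(events)
print(len(web_events), len(error_events))            # 2 1
print(all(e in web_events for e in error_events))    # True: child is a subset
```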

Root datasets and data model dataset types

The top-level datasets in data models are called root datasets. Data models can contain multiple root datasets of various types, and each of these root datasets can be a parent to one or more child datasets. This association of root and child datasets is a dataset tree. The overall set of data represented by a dataset tree is selected first by its root dataset and then refined and extended by its child datasets.

Root datasets can be defined by a search constraint, a search, or a transaction:

- Root event datasets are the most commonly used type of root data model dataset. Each root event dataset broadly represents a type of event. For example, an HTTP Access root event dataset could correspond to access log events, while an Error root event dataset could correspond to events with error messages.

Root event datasets are typically defined by a simple constraint. This constraint is what an experienced Splunk user might think of as the first portion of a search, before the pipe character, commands, and arguments are applied. For example, `status > 600` and `sourcetype=access_* OR sourcetype=iis*` are possible event dataset definitions. See Dataset constraints.

- Root search datasets use an arbitrary Splunk search to define the dataset that they represent. If you want to define a base dataset that includes one or more fields that aggregate over the entire dataset, you might need to use a root search dataset that has transforming commands in its search. For example: a system security dataset that has various system intrusion events broken out by category over time.

The constraint for a root search dataset must be an event index search. For example, `datamodel=dmtest_kvstore.test` and `datamodel=dmtest_csv.test` aren't supported. In addition, the root search dataset constraint can't begin with a command other than the `search` command.

- Root transaction datasets let you create data models that represent transactions: groups of related events that span time. Transaction dataset definitions use fields that have already been added to the model through event or search datasets, which means that you can't create data models that are composed only of transaction datasets and their child datasets. Before you create a transaction dataset, you must already have some event or search dataset trees in your model.

Child datasets of all three root dataset types (event, transaction, and search) are defined with simple constraints that narrow down the set of data that they inherit from their ancestor datasets.

Dataset types and data model acceleration

You can optionally use data model acceleration to speed up generation of pivot tables and charts. If you think your users would benefit from data model acceleration, keep in mind that this functionality has restrictions that can affect how you construct your data model.

To accelerate a data model, it must contain at least one root event dataset, or one root search dataset that only uses streaming commands. Acceleration only affects these dataset types and datasets that are children of those root datasets. You cannot accelerate root search datasets that use nonstreaming commands (including transforming commands), root transaction datasets, and children of those datasets. Data models can contain a mixture of accelerated and unaccelerated datasets.
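The acceleration rules above amount to a check on each dataset's root. The following Python sketch encodes them under simplifying assumptions: the `DatasetInfo` class and its `kind` and `streaming_only` attributes are hypothetical stand-ins for what the Data Model Editor knows about a dataset, and deciding whether a search uses only streaming commands is reduced to a boolean flag. The dataset names are borrowed from the Call Detail Records example, except "Intrusions by Category", which is made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DatasetInfo:
    name: str
    kind: str                      # "event", "search", "transaction", or "child"
    streaming_only: bool = True    # only meaningful for root search datasets
    parent: Optional["DatasetInfo"] = None


def root_of(ds: DatasetInfo) -> DatasetInfo:
    # Follow parent links up to the root of the dataset tree.
    while ds.parent is not None:
        ds = ds.parent
    return ds


def is_accelerable(ds: DatasetInfo) -> bool:
    # Acceleration covers root event datasets, root search datasets that use
    # only streaming commands, and the children of those roots.
    root = root_of(ds)
    if root.kind == "event":
        return True
    if root.kind == "search":
        return root.streaming_only
    return False  # root transaction datasets and their children


def model_is_accelerable(datasets: List[DatasetInfo]) -> bool:
    # A data model needs at least one accelerable root dataset.
    return any(is_accelerable(d) for d in datasets if d.parent is None)


calls = DatasetInfo("All Calls", "event")
voice = DatasetInfo("Voice", "child", parent=calls)
intrusions = DatasetInfo("Intrusions by Category", "search", streaming_only=False)
conversations = DatasetInfo("Conversations", "transaction")

for d in (calls, voice, intrusions, conversations):
    print(d.name, is_accelerable(d))
```

A model containing `calls` and `intrusions` is still accelerable, which matches the note that data models can mix accelerated and unaccelerated datasets.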

See Manage data models.

See Command types in the Search Reference for more information about streaming commands and other command types.

Example of data model dataset hierarchies

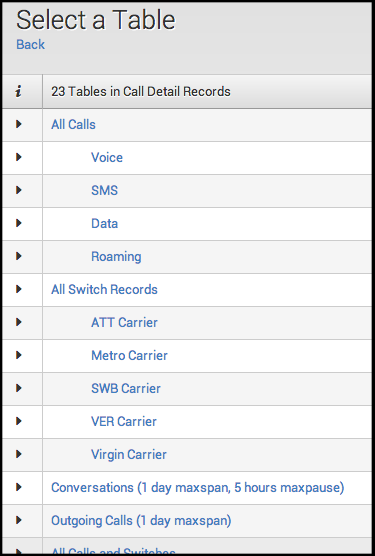

The following example shows the first several datasets in a "Call Detail Records" data model. Four top-level root datasets are displayed: All Calls, All Switch Records, Conversations, and Outgoing Calls.

All Calls and All Switch Records are root event datasets that represent all of the calling records and all of the carrier switch records, respectively. Both of these root event datasets have child datasets that deal with subsets of the data owned by their parents. The All Calls root event dataset has child datasets that break down into different call classifications: Voice, SMS, Data, and Roaming. If you were a Pivot user who only wanted to report on aspects of cellphone data usage, you'd select the Data dataset. But if you wanted to create reports that compare the four call types, you'd choose the All Calls root event dataset instead.

Conversations and Outgoing Calls are root transaction datasets. They both represent transactions: groupings of related events that span a range of time. The "Conversations" dataset only contains call records of conversations between two or more people where the maximum pause between conversation call record events is less than five hours and the total length of the conversation is less than one day.
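The Conversations definition combines a Group By field with Max Pause and Max Span limits. The Python sketch below shows one simplified way such limits can split events into transactions. It is not Splunk's actual `transaction` algorithm: the function `group_transactions`, the `conversation_id` group-by field, and the choice of strict "less than" comparisons are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, List


def group_transactions(events: List[Dict], group_by: str,
                       max_pause: timedelta, max_span: timedelta) -> List[List[Dict]]:
    """Simplified sketch: group events that share a group_by value, starting a new
    transaction when the pause or the total span would reach its limit."""
    by_key: Dict[object, List[Dict]] = defaultdict(list)
    for e in sorted(events, key=lambda e: e["_time"]):
        by_key[e[group_by]].append(e)

    transactions = []
    for evs in by_key.values():
        current = [evs[0]]
        for e in evs[1:]:
            pause = e["_time"] - current[-1]["_time"]  # gap since the previous event
            span = e["_time"] - current[0]["_time"]    # total length if e is added
            if pause < max_pause and span < max_span:
                current.append(e)
            else:
                transactions.append(current)             # close this transaction
                current = [e]
        transactions.append(current)
    return transactions


t0 = datetime(2024, 1, 1, 8, 0)
events = [
    {"_time": t0, "conversation_id": "A"},
    {"_time": t0 + timedelta(hours=1), "conversation_id": "A"},
    {"_time": t0 + timedelta(hours=3), "conversation_id": "A"},
    {"_time": t0 + timedelta(hours=2), "conversation_id": "B"},
    {"_time": t0 + timedelta(hours=10), "conversation_id": "A"},  # 7-hour pause
]
result = group_transactions(events, "conversation_id",
                            max_pause=timedelta(hours=5), max_span=timedelta(days=1))
print([len(t) for t in result])  # [3, 1, 1]
```

The seven-hour gap exceeds the five-hour pause limit, so conversation A splits into two transactions.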

For details about defining different data model dataset types, see Design data models.

Dataset constraints

All data model datasets are defined by sets of constraints. Dataset constraints filter out events that aren't relevant to the dataset.

- For a root event dataset or a child dataset of any type, the constraint looks like a simple search, without additional pipes and search commands. For example, the constraint for HTTP Request, one of the root event datasets of the Web Intelligence data model, is `sourcetype=access_*`.

- For a root search dataset, the constraint is the dataset search string.

- For a root transaction dataset, the constraint is the transaction definition. Transaction dataset definitions must identify a Group Dataset (one or more event datasets, a search dataset, or a transaction dataset) and one or more Group By fields. They can also optionally include Max Pause and Max Span values.

Constraints are inherited by child datasets. Constraint inheritance ensures that each child dataset represents a subset of the data represented by its parent datasets. Your Pivot users can then use these child datasets to design reports with datasets that already have extraneous data prefiltered out.

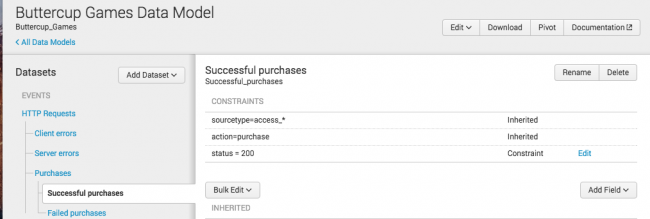

The following example uses a data model called Buttercup Games. Its Successful Purchases dataset descends from the root event dataset HTTP Requests and is designed to contain only those events that represent successful customer purchase actions. Successful Purchases inherits constraints from HTTP Requests and from its parent dataset, Purchases.

- HTTP Requests starts by setting up a search that only finds webserver access events.

  `sourcetype=access_*`

- The Purchases dataset further narrows the focus down to webserver access events that involve purchase actions.

  `action=purchase`

- And finally, Successful Purchases adds a constraint that reduces the dataset event set to web access events that represent successful purchase events.

  `status=200`

When all the constraints are added together, the base search for the Successful Purchases dataset looks like this:

`sourcetype=access_* action=purchase status=200`

A Pivot user might use this dataset for reporting if they know that they only want to report on successful purchase actions.
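The way constraints accumulate down a dataset tree can be sketched in Python by walking from a dataset up to its root and joining the constraints root-first. The `DatasetNode` class and `base_search` function are hypothetical; joining constraints with spaces works here because SPL treats adjacent search terms as an implicit AND.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DatasetNode:
    name: str
    constraint: str
    parent: Optional["DatasetNode"] = None


def base_search(ds: Optional[DatasetNode]) -> str:
    # Collect constraints from the dataset up to its root...
    chain = []
    while ds is not None:
        chain.append(ds.constraint)
        ds = ds.parent
    # ...then emit them root-first, mirroring how constraints are inherited.
    return " ".join(reversed(chain))


http_requests = DatasetNode("HTTP Requests", "sourcetype=access_*")
purchases = DatasetNode("Purchases", "action=purchase", parent=http_requests)
successful = DatasetNode("Successful Purchases", "status=200", parent=purchases)

print(base_search(successful))  # sourcetype=access_* action=purchase status=200
```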

For details about datasets and dataset constraints, see the topic Design data models.

Dataset field types

There are five types of data model dataset fields.

Auto-extracted

A field extracted by the Splunk software at index time or search time. You can only add auto-extracted fields to root datasets. Child datasets can inherit them, but they cannot add new auto-extracted fields of their own. Auto-extracted fields divide into three groups.

| Group | Definition |
|---|---|
| Fields added by automatic key value field extraction | These are fields that the Splunk software extracts automatically, like uri or version. This group includes fields indexed through structured data inputs, such as fields extracted from the headers of indexed CSV files. See Extract fields from files with structured data in Getting Data In. |
| Fields added by knowledge objects | Fields added to search results by field extractions, automatic lookups, and calculated field configurations can all appear in the list of auto-extracted fields. |
| Fields that you have manually added | You can manually add fields to the auto-extracted fields list. They might be rare fields that you do not currently see in the dataset, but may appear in it at some point in the future. This set of fields can include fields added to the dataset by generating commands such as inputcsv or dbinspect. |

Eval Expression

A field derived from an eval expression that you enter in the field definition. Eval expressions often involve one or more extracted fields.

Lookup

A field that is added to the events in the dataset with the help of a lookup that you configure in the field definition. Lookups add fields from external data sources such as CSV files and scripts. When you define a lookup field you can use any lookup object in your system and associate it with any other field that has already been associated with that same dataset.

See About lookups.

Regular Expression

This field type is extracted from the dataset event data using a regular expression that you provide in the field definition. A regular expression field definition can use a regular expression that extracts multiple fields; each field will appear in the dataset field list as a separate regular expression field.
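As a sketch of how one regular expression can yield several fields, the Python example below uses two named capture groups, and each group name becomes a separate field. The regular expression, the `method` and `uri_path` field names, and the sample event are hypothetical. Note the syntax difference: Splunk field extractions use PCRE-style `(?<name>...)` groups, while Python's `re` module spells them `(?P<name>...)`.

```python
import re

# Hypothetical field definition: one regular expression with two named groups.
FIELD_REGEX = re.compile(r"(?P<method>GET|POST) (?P<uri_path>/\S*)")


def extract_fields(raw_event: str) -> dict:
    # Each named group becomes its own field; no match means no fields.
    match = FIELD_REGEX.search(raw_event)
    return match.groupdict() if match else {}


raw = '10.2.1.44 - - [07/Jan/2024:10:12:01] "POST /cart.do HTTP/1.1" 200'
print(extract_fields(raw))  # {'method': 'POST', 'uri_path': '/cart.do'}
```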

Geo IP

A specific type of lookup that adds geographical fields, such as latitude, longitude, country, and city to events in the dataset that have valid IP address fields. Useful for map-related visualizations.

See Design data models.

Field categories

The Data Model Editor groups data model dataset fields into three categories.

| Category | Definition |
|---|---|
| Inherited | All datasets have at least a few inherited fields. Child datasets inherit fields from their parent dataset, and these inherited fields always appear in the Inherited category. Root event, search, and transaction datasets also have default fields that are categorized as inherited. |
| Extracted | Any auto-extracted field that you add to a dataset is listed in the "Extracted" field category. |
| Calculated | The Splunk software derives calculated fields through a calculation, lookup definition, or field-matching regular expression. When you add Eval Expression, Regular Expression, Lookup, and Geo IP field types to a dataset, they all appear in this field category. |

The Data Model Editor lets you arrange the order of calculated fields. This is useful when you have a set of fields that must be processed in a specific order. For example, you can define an Eval Expression that adds a set of fields to events within the dataset. Then you can create a Lookup with a definition that uses one of the fields calculated by the eval expression. The lookup uses this definition to add another set of fields to the same events.
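The eval-then-lookup dependency described above can be sketched in Python as an ordered list of steps applied to each event. Everything here is hypothetical: the `product_id`, `category_code`, and `category_name` fields, the in-memory `CATEGORY_LOOKUP` table standing in for a lookup definition, and the step functions. Reversing the order makes the lookup step fail because its input field does not exist yet, which is why listing order matters.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]

# Hypothetical stand-in for a lookup table: category code -> display name.
CATEGORY_LOOKUP = {"ARCADE": "Arcade Games", "STRATEGY": "Strategy Games"}


def eval_category_code(event: Event) -> None:
    # Eval Expression field: derive category_code from an extracted field.
    event["category_code"] = event["product_id"].split("-")[0].upper()


def lookup_category_name(event: Event) -> None:
    # Lookup field: uses category_code as its input, so it must run after the eval.
    event["category_name"] = CATEGORY_LOOKUP.get(event["category_code"], "unknown")


def apply_calculated_fields(event: Event, steps: List[Callable[[Event], None]]) -> Event:
    # Calculated fields are processed in their listed order.
    for step in steps:
        step(event)
    return event


ordered = [eval_category_code, lookup_category_name]
print(apply_calculated_fields({"product_id": "arcade-101"}, ordered))
# {'product_id': 'arcade-101', 'category_code': 'ARCADE', 'category_name': 'Arcade Games'}
```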

Field inheritance

All data model datasets have inherited fields.

A child dataset will automatically have all of the fields that belong to its parent. All of these inherited fields will appear in the child dataset's "Inherited" category, even if the fields were categorized otherwise in the parent dataset.

You can add additional fields to a child dataset. The Data Model Editor will categorize these fields either as extracted fields or calculated fields depending on their field type.

You can design a relatively simple data model where all of the necessary fields for a dataset tree are defined in its root dataset, meaning that all of the child datasets in the tree have the exact same set of fields as that root dataset. In such a data model, the child datasets would be differentiated from the root dataset and from each other only by their constraints.

Root event, search, and transaction datasets also have inherited fields. These inherited fields are default fields that are extracted from every event, such as _time, host, source, and sourcetype.
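The categorization rules above can be summarized in a small Python sketch: a root dataset starts with the default fields as Inherited, and a child sees every field from above as Inherited, whatever its category was in the parent. The `DS` class, the type labels in `own_fields` (such as `"auto"` and `"eval"`), and the `price_usd` and `product_name` fields are hypothetical; the default field list is taken from the text above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

DEFAULT_FIELDS = ["_time", "host", "source", "sourcetype"]
CALCULATED_TYPES = {"eval", "regex", "lookup", "geoip"}


@dataclass
class DS:
    name: str
    own_fields: Dict[str, str] = field(default_factory=dict)  # field name -> field type
    parent: Optional["DS"] = None


def all_field_names(ds: DS) -> List[str]:
    # Default fields at the root, then each dataset's own fields down the tree.
    inherited = DEFAULT_FIELDS if ds.parent is None else all_field_names(ds.parent)
    return list(inherited) + list(ds.own_fields)


def categorize(ds: DS) -> Dict[str, List[str]]:
    # Everything that comes from above (or the root's default fields) is Inherited,
    # no matter how it was categorized in the parent dataset.
    inherited = DEFAULT_FIELDS if ds.parent is None else all_field_names(ds.parent)
    result = {"Inherited": list(inherited), "Extracted": [], "Calculated": []}
    for name, ftype in ds.own_fields.items():
        result["Calculated" if ftype in CALCULATED_TYPES else "Extracted"].append(name)
    return result


root = DS("HTTP Requests", {"status": "auto", "price_usd": "eval"})
child = DS("Purchases", {"product_name": "lookup"}, parent=root)
print(categorize(root))
print(categorize(child))
```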

You cannot delete inherited fields, and you cannot edit their definitions. The only way to edit or remove an inherited field belonging to a child dataset is to delete or edit the field from the parent dataset it originates from as an extracted or calculated field. If the field originates in a root dataset as an inherited field, you won't be able to delete it or edit it.

You can hide fields from Pivot users as an alternative to field deletion.

You can also determine whether inherited fields are optional for a dataset or required.

Field purposes

Fields serve several purposes for data models.

Their most obvious function is to provide the set of fields that Pivot users use to define and generate a pivot report. The set of fields that a Pivot user has access to is determined by the dataset the user chooses when they enter the Pivot Editor. You might add fields to a child dataset to provide fields to Pivot users that are specific to that dataset.

On the other hand, you can also design calculated fields whose only function is to set up the definition of other fields or constraints. This is why field listing order matters: fields are processed in the order that they are listed in the Data Model Editor, and the Data Model Editor lets you rearrange the listing order of calculated fields.

For example, you could design a chained set of three Eval Expression fields. The first two Eval Expression fields would create what are essentially calculated fields. The third Eval Expression field would use those two calculated fields in its eval expression.

When you define a field you can determine whether it is visible or hidden for Pivot users. This can come in handy if each dataset in your data model has lots of fields but only a few fields per dataset are actually useful for Pivot users.

A field can be visible in some datasets and hidden in others. Hiding a field in a parent dataset does not cause it to be hidden in the child datasets that descend from it.

Fields are visible by default. Fields that have been hidden for a dataset are marked as such in the dataset's field list.

The determination of what fields to include in your model and which fields to expose for a particular dataset is something you do to make your datasets easier to use in Pivot. It's often helpful to your Pivot users if each dataset exposes only the data that is relevant to that dataset, to make it easier to build meaningful reports. This means, for example, that you can add fields to a root dataset that are hidden throughout the model except for one specific dataset elsewhere in the hierarchy, where their visibility makes sense in the context of that dataset and the data it represents.

Consider the example mentioned in the previous subsection, where you have a set of three "chained" Eval Expression fields. You may want to hide the first two Eval Expression fields because they are just there as "inputs" to the third field. You would leave the third field visible because it's the final "output": the field that matters for Pivot purposes.
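Per-dataset visibility for the chained-field case can be sketched in Python as follows. The `PivotDataset` class and the `bytes_in`, `bytes_out`, and `total_kb` fields (with `total_kb` assumed to be computed from the other two) are hypothetical. Each dataset keeps its own hidden set and consults only that set, so hiding the input fields in the root does not hide them in a child, as described earlier in this section.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class PivotDataset:
    name: str
    fields: List[str]                              # fields defined on this dataset
    hidden: Set[str] = field(default_factory=set)  # hidden for THIS dataset only
    parent: Optional["PivotDataset"] = None

    def all_fields(self) -> List[str]:
        # Inherited fields first, then this dataset's own fields.
        inherited = self.parent.all_fields() if self.parent else []
        return inherited + self.fields

    def pivot_fields(self) -> List[str]:
        # Only this dataset's hidden set is consulted; the parent's is not.
        return [f for f in self.all_fields() if f not in self.hidden]


# Chained evals: total_kb is assumed to be computed from bytes_in and bytes_out.
root = PivotDataset("Web Traffic", ["bytes_in", "bytes_out", "total_kb"],
                    hidden={"bytes_in", "bytes_out"})
child = PivotDataset("Large Transfers", [], parent=root)

print(root.pivot_fields())   # ['total_kb']
print(child.pivot_fields())  # ['bytes_in', 'bytes_out', 'total_kb']
```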

Fields can be required or optional for a dataset

During the field design process you can also determine whether a field is required or optional. This can act as a filter for the event set represented by the dataset. If you say a field is required, you're saying that every event represented by the dataset must have that field. If you define a field as optional, the dataset may have events that do not have that field at all.

Note: As with field visibility (see above) a field can be required in some datasets and optional in others. Marking a field as required in a parent dataset will not automatically make that field required in the child datasets that descend from that parent dataset.

Fields are optional by default. Fields that have had their status changed to required for a dataset are marked as such in the dataset's field list.
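The filtering effect of required fields can be sketched in Python as a single predicate over events. The function `filter_by_required` and the sample events are hypothetical, and treating a field as present when it has a non-null value is an assumption of this sketch. Optional fields impose no filter at all, so events that lack them are kept.

```python
from typing import Dict, Iterable, List


def filter_by_required(events: Iterable[Dict], required: Iterable[str]) -> List[Dict]:
    required = list(required)
    # A required field acts as a filter: drop events where it is missing or null.
    return [e for e in events if all(e.get(f) is not None for f in required)]


events = [
    {"status": 200, "product_id": "A-1"},
    {"status": 404},
    {"product_id": "B-2"},
]
print(len(filter_by_required(events, ["product_id"])))            # 2
print(len(filter_by_required(events, [])))                        # 3 (all optional)
print(len(filter_by_required(events, ["status", "product_id"])))  # 1
```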


This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13, 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
