Alerts in the Analytics Workspace

Use alerts to monitor and respond to specific behavior in your data. Analytics Workspace alerts are based on a specific chart. Alerts use a scheduled search of chart data and trigger when search results meet specific conditions.

To create alerts in the workspace, you need specific permissions. See Requirements for the Analytics Workspace for details.

To learn more about alerting in the Splunk platform, see Getting started with alerts in the Alerting Manual.

Parts of an alert

Alerts in the Analytics Workspace consist of alert settings, trigger conditions, and trigger actions.

Alert settings

Configure what you want to monitor in alert settings. Alert settings include:

- Alert title

- Alert description

- Permissions. Whether the alert is private or shared in the workspace.

- Alert Type. Scheduled alerts periodically search for trigger conditions. Streaming alerts continuously search for trigger conditions. Streaming alerts can also reduce search processing load by enabling similar alerts to share the same search process.

- How often you want to check alert conditions. For example, "Evaluate every 10 minutes".

Trigger conditions

Set trigger conditions to manage when an alert triggers. Trigger conditions consist of an aggregation to measure, a threshold value, and a time period to evaluate.

For example, set trigger conditions to "Alert when Avg (over 10-second intervals) cpu.usage is greater than 10k in the last 20 minutes". The alert triggers when the aggregate average for cpu.usage exceeds 10,000 at any point in the last twenty minutes.
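The evaluation described above can be sketched in Python. This is only an illustration of the logic, not how the Splunk software implements it. The function name `should_trigger`, its parameters, and the `(timestamp, value)` sample format are hypothetical.

```python
from statistics import mean

def should_trigger(samples, now, interval_s=10, window_s=20 * 60, threshold=10_000):
    """Hypothetical sketch of "Avg (over 10-second intervals) > 10k in the last 20 minutes".

    samples: iterable of (timestamp_seconds, value) pairs for one metric.
    """
    buckets = {}
    for ts, value in samples:
        # Keep only samples that fall inside the evaluation time period.
        if now - window_s < ts <= now:
            buckets.setdefault(int(ts // interval_s), []).append(value)
    # The alert triggers if the average of any interval exceeds the threshold.
    return any(mean(values) > threshold for values in buckets.values())
```

For example, `should_trigger([(1000, 9000), (1010, 12000)], now=1020)` evaluates two 10-second intervals and triggers because the second interval averages above 10,000.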

An alert does not have to trigger every time conditions are met. Throttle an alert to control how soon the next alert can trigger after an initial alert.
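As a rough illustration of throttling, the following Python sketch suppresses repeat triggers for a fixed period after an alert fires. The `Throttle` class and its `suppress_seconds` parameter are hypothetical names chosen for this example and do not correspond to Splunk settings.

```python
class Throttle:
    """Hypothetical throttle: after an alert fires, suppress further alerts for a period."""

    def __init__(self, suppress_seconds):
        self.suppress_seconds = suppress_seconds
        self.last_fired = None  # time of the most recent alert that was allowed to fire

    def allow(self, now):
        """Return True if an alert may fire at time `now` (in seconds)."""
        if self.last_fired is not None and now - self.last_fired < self.suppress_seconds:
            return False  # conditions are met, but the alert is still throttled
        self.last_fired = now
        return True
```

With `Throttle(600)`, an alert that fires at time 0 suppresses any trigger before 600 seconds have passed, even if the trigger conditions are met again in that period.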

Trigger actions

Configure trigger actions to manage alert responses. By default, you can view detailed information for triggered alerts on the Triggered Alerts page in Splunk. To access the Triggered Alerts page, select Activity > Triggered Alerts from the top-level navigation bar.

Specify a severity level to assign a level of importance to an alert. Severity levels can help you sort or filter alerts on the Triggered Alerts page. Available severity levels include Info, Low, Medium, High, and Critical.

For detailed information about the various actions that can be set up for triggered alerts, see Set up alert actions in the Alerting Manual.

The Alerting Manual also has instructions for configuring mail server settings so Splunk can send email alerts. See Email notification action.

Create an alert

Create an alert in the Analytics Workspace to monitor your data for certain conditions.

- In the main panel, select the chart you want to use for the alert.

- Click the ellipsis (...) icon.

- Click Save as Alert.

- If your chart contains more than one time series, select the time series you want to use for the alert from the Source list.

- Fill in the Settings and Trigger Conditions for your alert.

- (Optional) Under Trigger Actions, click the + Add Actions drop-down list, and select additional actions for when the alert triggers. Triggered alerts are added to the Triggered Alerts page in the Splunk platform by default.

- Click the Severity drop-down list, and select a severity level for the alert.

- Click Save.

Manage alerts

View alerts that were previously created in the Analytics Workspace to monitor and respond to alert activity. Alerts show the same time range and hairline as other charts. Add an alert to the workspace through the Data panel. For more information, see Types of data in the Analytics Workspace.

Alert chart actions

Click the ellipsis (...) icon in the top-right corner of an alert chart to view a list of alert chart actions.

| Action | Description |

|---|---|

| Edit Alert | Modify alert conditions. |

| Open in Search | Show the SPL that drives the alert in the Search & Reporting App. |

| Clone this Panel | Open the alert query in a metrics chart for further analysis. |

| Search Related Events | View a list of related log events. |

Alert details

Select an alert in the Analytics Workspace to view its details. Alert details show in the Analysis panel. These details include the settings, threshold, and severity level configured for the alert. A scheduled alert displays the scheduled alert badge next to the alert title. A streaming alert displays the streaming alert badge next to the alert title.

Show triggered instances to see when alert conditions are met.

- In the main panel, select the alert to show triggered instances.

- In the Analysis panel under Settings, select Show triggered instances.

Triggered instances appear as annotations on the chart.

Triggered instance annotations appear at the end of the evaluation window in which the alert triggers, not at the time the alert threshold is crossed.
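The placement rule above can be sketched as follows. This assumes, for illustration only, that evaluation windows are aligned to multiples of the evaluation interval and are half-open, so a crossing exactly on a boundary belongs to the next window. The function name `annotation_time` is hypothetical.

```python
def annotation_time(crossed_at, evaluate_every_s):
    """Return the end of the evaluation window that contains `crossed_at`.

    Assumes windows cover [k * E, (k + 1) * E) for evaluation interval E, in seconds.
    """
    window_index = crossed_at // evaluate_every_s
    return (window_index + 1) * evaluate_every_s
```

With an alert that evaluates every 10 minutes (600 seconds), a threshold crossing at second 130 is annotated at second 600, the end of its window, rather than at second 130.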

Use alert badges to gauge the alert severity level. To help you monitor alert activity, badge colors are based on the most recent severity level of a triggered alert.

| Severity level | Badge color |

|---|---|

| No trigger | Gray |

| Info | Blue |

| Low | Green |

| Medium | Yellow |

| High | Orange |

| Critical | Red |
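The mapping in the table can be expressed as a small lookup. The following Python sketch is illustrative only. The names `BADGE_COLORS` and `badge_color` are hypothetical, and the input is assumed to be a list of triggered severity levels ordered from oldest to most recent.

```python
# Severity level of the most recent triggered alert -> badge color (from the table above).
BADGE_COLORS = {
    None: "Gray",  # no trigger
    "Info": "Blue",
    "Low": "Green",
    "Medium": "Yellow",
    "High": "Orange",
    "Critical": "Red",
}

def badge_color(triggered_severities):
    """Return the badge color for a list of triggered severities, oldest first."""
    latest = triggered_severities[-1] if triggered_severities else None
    return BADGE_COLORS[latest]
```

Because only the most recent triggered severity counts, `badge_color(["Low", "Critical"])` returns `"Red"`, and an alert that has never triggered returns `"Gray"`.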

Example

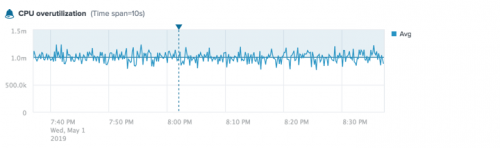

The following alert shows CPU overutilization for the aws.ec2.CPUUtilization metric.

This alert is based on the aggregate average values for the aws.ec2.CPUUtilization metric. The blue alert badge indicates a severity level of Info. The horizontal blue line shows the alert threshold (1.0m). The annotations on the chart show triggered instances for the alert.

Follow up on alerts

Follow up on a triggered alert to perform additional analysis of the underlying data. To investigate a situation highlighted in an alert, open the alert query in a metrics chart.

Analyze a triggered alert in a metrics chart

To perform additional analysis of alert conditions, clone the alert in the Analytics Workspace.

- In the Data panel, search or browse for the alert that you want to investigate.

- Click on the alert name to open the alert in the Analytics Workspace.

- To view a list of alert chart actions, click the ellipsis (...) icon in the top-right corner of the alert chart.

- Click Clone this Panel.

The alert query opens in a new metrics chart in the Analytics Workspace. You can perform additional analytic functions, such as filtering, modifying the time range, and splitting the chart by a dimension, to follow up on the conditions that triggered the alert.

Streaming metrics alert features not available in the Analytics Workspace

A few streaming metric alert features are not available in the Analytics Workspace. To use them, you must be able to edit metric_alerts.conf directly, where streaming metric alert configurations are stored, or use the alerts/metric_alerts REST API endpoint.

| Additional alert feature | Description | Setting |

|---|---|---|

| Set multiple group-by dimensions | You can identify a list of group-by dimensions for an alert. This results in a separate aggregation value for each combination of group-by dimensions, instead of just one aggregation value. The Splunk software evaluates the alert against each of these aggregation values. | groupby |

| Define complex eval expressions for alert conditions | You can set alert conditions that include multiple Boolean operators, eval functions, and metric aggregations. They can also reference dimensions specified in the groupby setting. | condition |

| Adjust lifespan of triggered streaming metric alert records | By default, records of triggered streaming metric alerts live for 24 hours. You can adjust this time on a per-alert basis for streaming metric alerts. | trigger.expires |

| Adjust maximum number of triggered alert records for a given streaming metric alert | By default, only 20 triggered alert records of a given streaming metric alert can exist at any given time. You can raise or lower this limit on a per-alert basis according to your needs. | trigger.max_tracked |

For more information, see the metric_alerts.conf topic in the Admin Manual.

The streaming metric alert settings are also documented in the context of the alerts/metric_alerts endpoint in the REST API Reference Manual.
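To illustrate the group-by behavior described in the table above, the following Python sketch computes one aggregation value per combination of group-by dimensions and evaluates a threshold against each. It is a conceptual model only, not Splunk configuration syntax. The function name `evaluate_by_group`, the data point format, and the use of an average with a simple threshold are assumptions made for this example.

```python
from collections import defaultdict
from statistics import mean

def evaluate_by_group(points, groupby, threshold):
    """Hypothetical sketch: evaluate an average against a threshold per dimension combination.

    points: list of dicts, each with a "value" key plus one key per dimension.
    groupby: list of dimension names to group by.
    """
    groups = defaultdict(list)
    for point in points:
        # One group per unique combination of the group-by dimension values.
        key = tuple(point[dim] for dim in groupby)
        groups[key].append(point["value"])
    # The condition is evaluated separately for each aggregation value.
    return {key: mean(values) > threshold for key, values in groups.items()}
```

Grouping by two dimensions, such as a host and a region, yields a separate result for each host and region pair, so the condition can be met for one pair and not for another.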


This documentation applies to the following versions of Splunk® Enterprise: 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
