The basics of indexer cluster architecture

This topic introduces indexer cluster architecture. It describes the nodes of a single-site cluster and how they work together. It also covers some essential concepts and describes briefly how clusters handle indexing and searching.

Multisite cluster architecture is similar to single-site cluster architecture. There are, however, a few areas of significant difference. For information on multisite cluster architecture and how it differs from single-site cluster architecture, read the topic Multisite indexer cluster architecture.

For a deeper dive into cluster architecture, read the chapter How indexer clusters work.

For information on how cluster architecture differs for SmartStore indexes, see SmartStore architecture overview and Indexer cluster operations and SmartStore.

Cluster nodes

A cluster includes three types of nodes:

- A single manager node to manage the cluster.

- Multiple peer nodes to index and replicate data and to search the data.

- One or more search heads to coordinate searches across all the peer nodes.

In addition, a cluster deployment usually employs forwarders to ingest and forward data to the peers.

Manager nodes, peer nodes, and search heads are all specialized Splunk Enterprise instances. All nodes must reside on separate instances and separate machines. For example, the manager node cannot reside on the same instance or machine as a peer node or a search head.

The manager node and all peer nodes must be specific to a single cluster. A manager node cannot manage multiple clusters. A peer node cannot connect to multiple manager nodes. Search heads, however, can search across multiple clusters.

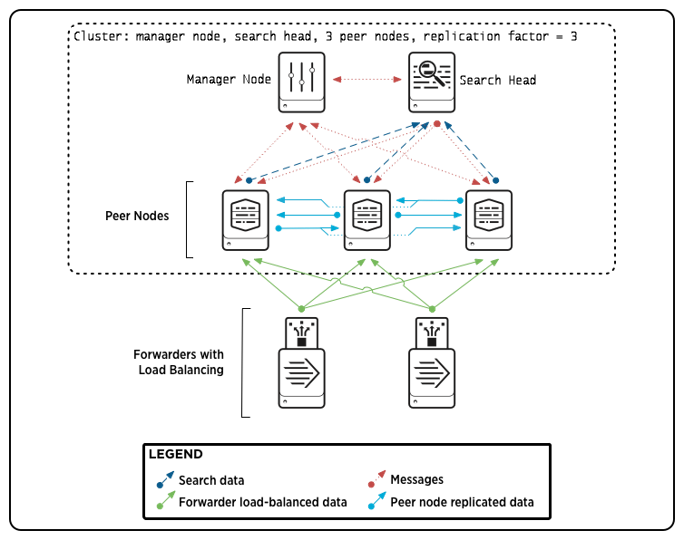

Here is a diagram of a simple single-site cluster, with a few peers and some forwarders sending data to them:

Some of what is happening in this diagram might not make sense yet; read on.

Manager node

The manager node manages the cluster. It coordinates the replicating activities of the peer nodes and tells the search head where to find data. It also helps manage the configuration of peer nodes and orchestrates remedial activities if a peer goes offline.

Unlike the peer nodes, the manager node does not index external data. A cluster has exactly one manager node.

Peer node

Peer nodes perform the indexing function for the cluster. They receive and index incoming data. They also send replicated data to other peer nodes in the cluster and receive replicated data from other peers. A peer node can index its own external data while simultaneously receiving and sending replicated data. Like all indexers, peers also search across their indexed data in response to search requests from the search head.

The number of peer nodes you deploy is dependent on two factors: the cluster replication factor and the indexing load. For example, if you have a replication factor of 3 (which means you intend to store three copies of your data), you need at least three peers. If you have more indexing load than three indexers can handle, you can add more peers to increase capacity.
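The two factors can be combined into a rough lower bound on the peer count. The following is a minimal sketch, not a sizing tool: the function name and the `daily_ingest_gb` and `per_peer_capacity_gb` parameters are hypothetical, and real capacity planning depends on many details that this topic does not cover.

```python
import math


def minimum_peer_count(replication_factor: int,
                       daily_ingest_gb: float,
                       per_peer_capacity_gb: float) -> int:
    """Return the smallest peer count that satisfies both constraints.

    The capacity figures are illustrative assumptions, not Splunk guidance.
    """
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    if per_peer_capacity_gb <= 0:
        raise ValueError("per_peer_capacity_gb must be positive")
    # Peers needed to absorb the indexing load alone.
    peers_for_load = math.ceil(daily_ingest_gb / per_peer_capacity_gb)
    # The cluster needs at least replication-factor peers to hold every copy.
    return max(replication_factor, peers_for_load)


# A light load is bounded by the replication factor; a heavy load by capacity.
print(minimum_peer_count(3, daily_ingest_gb=200, per_peer_capacity_gb=100))
print(minimum_peer_count(3, daily_ingest_gb=500, per_peer_capacity_gb=100))
```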

Search head

The search head manages searches across the set of peer nodes. It distributes search queries to the peers and consolidates the results. You initiate all searches from the search head. A cluster must have at least one search head.

Forwarder

Forwarders function the same as in any Splunk Enterprise deployment. They consume data from external sources and then forward that data to indexers, which, in clusters, are the peer nodes. You are not required to use forwarders to get data into a cluster, but, for most purposes, you will want to. This is because only with forwarders can you enable indexer acknowledgment, which ensures that incoming data gets reliably indexed. In addition, to deal with potential peer node failures, it is advisable to use load-balancing forwarders. That way, if one peer goes down, the forwarder can switch its forwarding to other peers in the load-balanced group. For more information on forwarders in a clustered environment, read Use forwarders to get data into the indexer cluster in this manual.

Important concepts

To understand how a cluster functions, you need to be familiar with a few concepts:

- Replication factor. This determines the number of copies of data the cluster maintains and, therefore, the cluster's fundamental level of failure tolerance.

- Search factor. This determines the number of searchable copies of data the cluster maintains, and therefore how quickly the cluster can recover its searching capability after a peer node goes down.

- Buckets. Buckets are the basic units of index storage. A cluster maintains replication factor number of copies of each bucket.

This section provides a brief introduction to these concepts.

Replication factor

As part of configuring the manager node, you specify the number of copies of data that you want the cluster to maintain. The number of copies is called the cluster's replication factor. The replication factor is a key concept in index replication, because it determines the cluster's failure tolerance: a cluster can tolerate a failure of (replication factor - 1) peer nodes. For example, if you want to ensure that your system can handle the failure of two peer nodes, you must configure a replication factor of 3, which means that the cluster stores three identical copies of your data on separate nodes. If two peers go down, the data is still available on a third peer. The default value for the replication factor is 3.
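The relationship between the replication factor and failure tolerance is simple arithmetic. The sketch below restates it in both directions; the function names are hypothetical and exist only for illustration.

```python
def tolerated_peer_failures(replication_factor: int) -> int:
    """Peer failures the cluster can absorb without losing all copies of any data."""
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    return replication_factor - 1


def required_replication_factor(failures_to_survive: int) -> int:
    """Replication factor needed to survive the given number of peer failures."""
    if failures_to_survive < 0:
        raise ValueError("failures_to_survive cannot be negative")
    return failures_to_survive + 1


# The example from the text: surviving two peer failures requires a factor of 3.
print(required_replication_factor(2))
print(tolerated_peer_failures(3))
```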

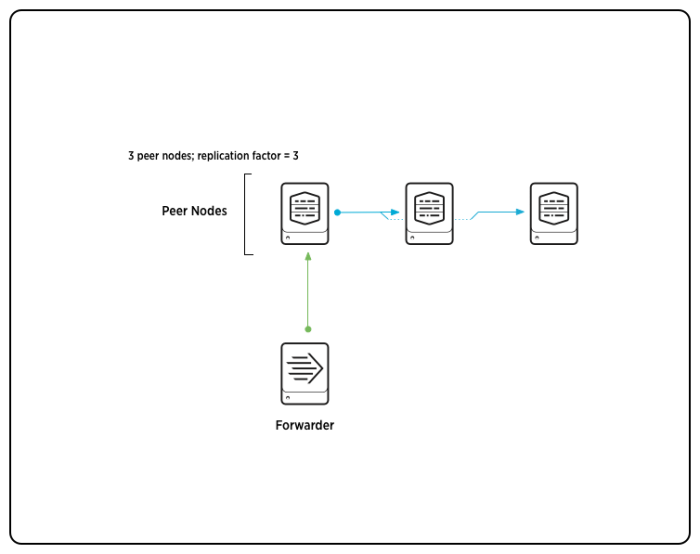

Here is a high-level representation of a cluster with three peers and a replication factor of 3:

In this diagram, one peer is receiving data from a forwarder, which it processes and then streams to two other peers. The cluster will contain three complete copies of the peer's data. This diagram represents a very simplified version of peer replication, where all data is coming into the system through a single peer. In most three-peer clusters, all three peers would be receiving external data from a forwarder, as well as replicated data from other peers.

For a detailed discussion of the replication factor and the trade-offs involved in adjusting its value, see the topic Replication factor.

Important: Multisite clusters use a significantly different version of the replication factor. See Multisite replication and search factors.

Search factor

When you configure the manager node, you also designate a search factor. The search factor determines the number of immediately searchable copies of data the cluster maintains.

Searchable copies of data require more storage space than non-searchable copies, so it is best to limit the size of your search factor to fit your exact needs. For most purposes, use the default value of 2. This allows the cluster to continue searches with little interruption if a single peer node goes down.

The difference between a searchable and a non-searchable copy of some data is this: The searchable copy contains both the data itself and some extensive index files that the cluster uses to search the data. The non-searchable copy contains just the data. Even the data stored in the non-searchable copy, however, has undergone initial processing and is stored in a form that makes it possible to recreate the index files later, if necessary.

For a detailed discussion of the search factor and the trade-offs involved in adjusting its value, see the topic Search factor.

Important: Multisite clusters use a significantly different version of the search factor. See Multisite replication and search factors.

Buckets

Splunk Enterprise stores indexed data in buckets, which are directories containing files of data. An index typically consists of many buckets.

A complete cluster maintains replication factor number of copies of each bucket, with each copy residing on a separate peer node. The bucket copies are either searchable or non-searchable. A complete cluster also has search factor number of searchable copies of each bucket.

Buckets contain two types of files: a rawdata file, which contains the data along with some metadata, and, for searchable copies of buckets, index files into the data.

The cluster replicates data on a bucket-by-bucket basis. The original bucket and its copies on other peer nodes have identical sets of rawdata. Searchable copies also contain the index files.
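One way to picture the "complete" condition is as a check over the copies of a single bucket. The following sketch is an illustrative model only: the `BucketCopy` class and `is_bucket_complete` function are hypothetical names, not part of any Splunk API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class BucketCopy:
    bucket_id: str
    peer: str
    searchable: bool  # True if the copy holds index files as well as rawdata


def is_bucket_complete(copies: Iterable[BucketCopy],
                       replication_factor: int,
                       search_factor: int) -> bool:
    """Check the copies of ONE bucket against the two factors.

    Copies count only once per peer, because each copy must reside
    on a separate peer node.
    """
    copies = list(copies)
    peers_with_copy = {c.peer for c in copies}
    peers_with_searchable = {c.peer for c in copies if c.searchable}
    return (len(peers_with_copy) >= replication_factor
            and len(peers_with_searchable) >= search_factor)


copies = [
    BucketCopy("bucket01", "peer1", searchable=True),
    BucketCopy("bucket01", "peer2", searchable=True),
    BucketCopy("bucket01", "peer3", searchable=False),
]
print(is_bucket_complete(copies, replication_factor=3, search_factor=2))
```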

Each time a peer creates a new bucket, it communicates with the manager node to get a list of peers to stream the bucket's data to. If you have a cluster in which the number of peer nodes exceeds the replication factor, a peer might stream data to a different set of peers each time it creates a new bucket. Eventually, the copies of the peer's original buckets are likely to be spread across a large number of peers, even if the replication factor is only 3.
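The spreading effect can be illustrated with a small simulation. This topic does not describe how the manager node chooses target peers, so the sketch below assumes a uniformly random choice purely for illustration; the function names are hypothetical.

```python
import random


def pick_replication_targets(source_peer, all_peers, replication_factor, rng):
    """Pick (replication factor - 1) target peers for one new bucket.

    Random selection is an assumption made for this sketch; it is not
    the manager node's documented policy.
    """
    candidates = [p for p in all_peers if p != source_peer]
    return rng.sample(candidates, replication_factor - 1)


def peers_holding_copies(source_peer, all_peers, replication_factor,
                         bucket_count, seed=0):
    """Return every peer that ends up holding a copy of the source peer's buckets."""
    rng = random.Random(seed)
    holders = set()
    for _ in range(bucket_count):
        holders.update(
            pick_replication_targets(source_peer, all_peers,
                                     replication_factor, rng))
    return holders


peers = [f"peer{n}" for n in range(1, 9)]
holders = peers_holding_copies("peer1", peers, replication_factor=3,
                               bucket_count=50)
# Each bucket goes to only two other peers, but over many buckets
# the copies tend to land on most of the cluster.
print(len(holders), "of", len(peers) - 1, "other peers hold copies")
```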

You need a good grasp of buckets to understand cluster architecture. For an overview of buckets in general, read How the indexer stores indexes. Then read the topic Buckets and indexer clusters. It provides detailed information on bucket concepts of particular importance for a clustered deployment.

How indexing works

Clustered indexing functions like non-clustered indexing, except that the cluster stores multiple copies of the data. Each peer node receives, processes, and indexes external data, the same as any non-clustered indexer. The key difference is that the peer node also streams, or "replicates", copies of the processed data to other peers in the cluster, which then store those copies in their own buckets. Some of the peers receiving the processed data might also index it. The replication factor determines the number of peers that receive the copies of data. The search factor determines the number of peers that index the data.
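The roles that each peer plays for a single bucket can be sketched as follows. The function is hypothetical, and the choice of which receiving peers also index the data (here, the first ones in the list) is an assumption made only to keep the example deterministic.

```python
def plan_bucket_copies(origin_peer, target_peers, search_factor):
    """Map each peer to the kind of copy it stores for one bucket.

    The originating peer always indexes its own copy. Of the peers that
    receive replicated data, (search factor - 1) also index it.
    """
    plan = {origin_peer: "searchable"}
    for position, peer in enumerate(target_peers):
        # Assumption for illustration: the first targets are the ones that index.
        if position < search_factor - 1:
            plan[peer] = "searchable"
        else:
            plan[peer] = "non-searchable"
    return plan


# Replication factor 3 (two targets) and search factor 2.
print(plan_bucket_copies("peer1", ["peer2", "peer3"], search_factor=2))
```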

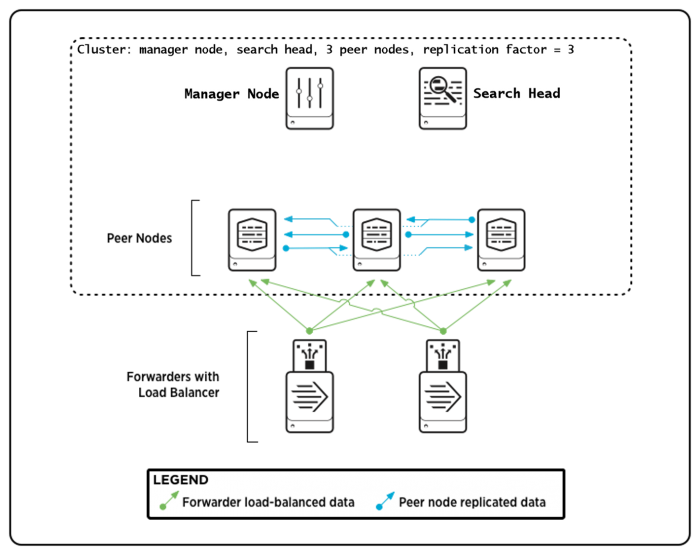

A peer node can be indexing external data while simultaneously storing, and potentially indexing, copies of replicated data sent to it from other peers. For example, if you have a three-peer cluster configured with a replication factor of 3, each peer can be ingesting and indexing external data while also storing copies of replicated data streamed to it by the other peers. If the cluster's search factor is 2, one of the peers receiving a copy of streamed data will also index it. (In addition, the peer that originally ingests the data always indexes its own copy.) This diagram shows the movement of data into peers, both from forwarders and from other peers:

You can set up your cluster so that all the peer nodes ingest external data. This is the most common scenario. You do this simply by configuring inputs on each peer node. However, you can also set up the cluster so that only a subset of the peer nodes ingest data. No matter how you disperse your inputs across the cluster, all the peer nodes can, and likely will, also store replicated data. The manager node determines, on a bucket-by-bucket basis, which peer nodes will get replicated data. You cannot configure this, except in the case of multisite clustering, where you can specify the number of copies of data that each site's set of peers receives.

In addition to replicating indexes of external data, the peers also replicate their internal indexes, such as _audit, _internal, etc.

The manager node manages the peer-to-peer interactions. Most importantly, it tells each peer what peers to stream its data to. Once the manager node has communicated this, the peers then exchange data with each other, without the manager node's involvement, unless a peer node goes down. The manager node also keeps track of which peers have searchable data and ensures that there are always search factor number of copies of searchable data available. When a peer goes down, the manager node coordinates remedial activities.

For detailed information, read the topic How clustered indexing works.

For information on how indexing works in a multisite cluster, read Multisite indexing.

For information on how indexing works with SmartStore indexes, see How indexing works in SmartStore.

How search works

In an indexer cluster, a search head coordinates all searches. The process is similar to how distributed searches work in a non-clustered environment. The main difference is that the search head relies on the manager node to tell it who its search peers are. Also, there are various processes in place to ensure that a search occurs over one and only one copy of each bucket.

To ensure that exactly one copy of each bucket participates in a search, one searchable copy of each bucket in the cluster is designated as primary. Searches occur only across the set of primary copies.

The set of primary copies can change over time, for example, in response to a peer node going down. If some of the bucket copies on the downed node were primary, other searchable copies of those buckets will be made primary to replace them. If there are no other searchable copies (because the cluster has a search factor of 1), non-searchable copies will first have to be made searchable before they can be designated as primary.

The manager node rebalances primaries across the set of peers whenever a peer joins or rejoins the cluster, in an attempt to improve distribution of the search load. See Rebalance the indexer cluster primary buckets.

The manager node keeps track of all bucket copies on all peer nodes, and the peer nodes themselves know the status of their bucket copies. That way, in response to a search request, a peer knows which of its bucket copies to search.

Periodically, the search head gets a list of active search peers from the manager node. To handle searches, it then communicates directly with those peers, as it would for any distributed search, sending search requests and knowledge bundles to the peers and consolidating search results returned from the peers.

For example, assume a cluster of three peers is maintaining 20 buckets that need to be searched to fulfill a particular search request coming from the search head. Primary copies of those 20 buckets could be spread across all three peers, with 10 primaries on the first peer, six on the second, and four on the third. Each peer gets the search request and then determines for itself whether its particular copy of a bucket is primary and therefore needs to participate in the search.
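The 20-bucket example can be modeled in a few lines. This sketch assumes that every peer holds a copy of all 20 buckets (as it would with three peers and a replication factor of 3) and that primary status is a simple per-copy flag; the names are hypothetical.

```python
from collections import Counter


def primaries_on_peer(copies_on_peer):
    """Return the bucket IDs whose copy on this peer is primary."""
    return [bucket_id
            for bucket_id, is_primary in copies_on_peer.items()
            if is_primary]


bucket_ids = [f"bucket{n:02d}" for n in range(20)]


def primary_owner(position):
    """Assign 10 primaries to peer1, six to peer2, and four to peer3."""
    if position < 10:
        return "peer1"
    if position < 16:
        return "peer2"
    return "peer3"


# Each peer maps every bucket ID to whether its local copy is primary.
cluster = {
    peer: {bucket_id: primary_owner(i) == peer
           for i, bucket_id in enumerate(bucket_ids)}
    for peer in ("peer1", "peer2", "peer3")
}

# Every peer receives the same search request and filters locally.
search_counts = Counter(
    bucket_id
    for copies_on_peer in cluster.values()
    for bucket_id in primaries_on_peer(copies_on_peer)
)
print({peer: len(primaries_on_peer(c)) for peer, c in cluster.items()})
```

Because exactly one copy of each bucket is flagged as primary, each bucket contributes to the search results once, no matter how many peers store it.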

For detailed information, read the topic How search works in an indexer cluster.

Important: There are key differences in how searching works in a multisite cluster. For example, each site in the cluster typically has a complete set of primary buckets, so that a search head can perform its searches entirely on data local to its site. For more information, read Multisite searching.

For information on how search works with SmartStore indexes, see How search works in SmartStore.

How clusters deal with peer node failure

If a peer node goes down, the manager node coordinates attempts to reproduce the peer's buckets on other peers. For example, if a downed node was storing 20 copies of buckets, of which 10 were searchable (including three primary bucket copies), the manager node will direct efforts to create copies of those 20 buckets on other nodes. It will likewise attempt to replace the 10 searchable copies with searchable copies of the same buckets on other nodes. And it will replace the primary copies by changing the status of corresponding searchable copies on other peers from non-primary to primary.

Replication factor and node failure

If there are fewer peer nodes remaining than the number specified by the replication factor, the cluster will not be able to replace the 20 missing copies. For example, if you have a three-node cluster with a replication factor of 3, the cluster cannot replace the missing copies when a node goes down, because there is no other node where replacement copies can go.

Except in extreme cases, however, the cluster should be able to replace the missing primary bucket copies by designating searchable copies of those buckets on other peers as primary, so that all the data continues to be accessible to the search head.

The only case in which the cluster cannot maintain a full set of primary copies is if a replication factor number of nodes goes down. For example, if you have a cluster of five peer nodes, with a replication factor of 3, the cluster will still be able to maintain a full set of primary copies if one or two peers go down but not if a third peer goes down.
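The five-peer example can be checked with a small model of bucket placement. The sketch below is illustrative: the placement map and function name are hypothetical. It shows that with fewer failures than the replication factor, every bucket keeps at least one copy, while a replication factor number of failures can remove every copy of a bucket.

```python
def buckets_without_surviving_copy(bucket_peers: dict[str, set[str]],
                                   failed_peers: set[str]) -> list[str]:
    """Return the buckets whose copies all resided on failed peers."""
    return sorted(
        bucket_id
        for bucket_id, peers in bucket_peers.items()
        if peers <= failed_peers  # every copy was on a failed peer
    )


# Five peers, replication factor 3: each bucket lives on three peers.
placement = {
    "bucket01": {"peer1", "peer2", "peer3"},
    "bucket02": {"peer3", "peer4", "peer5"},
}

print(buckets_without_surviving_copy(placement, {"peer1", "peer2"}))
print(buckets_without_surviving_copy(placement, {"peer1", "peer2", "peer3"}))
```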

Search factor and node failure

The search factor determines whether full search capability can quickly resume after a node goes down. To ensure rapid recovery from one downed node, the search factor must be set to at least 2. That allows the manager node to immediately replace primaries on the downed node with existing searchable copies on other nodes.

If instead the search factor is set to 1, that means the cluster is maintaining just a single set of searchable bucket copies. If a peer with some primary copies goes down, the cluster must first convert a corresponding set of non-searchable copies on the remaining peers to searchable before it can designate them as primary to replace the missing primaries. While this time-intensive process is occurring, the cluster has an incomplete set of primary buckets. Searches can continue, but only across the available primary buckets. Eventually, the cluster will replace all the missing primary copies. Searches can then occur across the full set of data.

If, on the other hand, the search factor is at least 2, the cluster can immediately assign primary status to searchable copies on the remaining nodes. The activity to replace the searchable copies from the downed node will still occur, but in the meantime searches can continue uninterrupted across all the cluster's data.
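The difference between the two cases comes down to whether a surviving copy is already searchable. The following sketch models that decision for a single bucket; the function name and return shape are hypothetical, and the real manager node logic is more involved.

```python
def choose_replacement_primary(surviving_copies):
    """Pick a new primary for one bucket after its primary copy is lost.

    surviving_copies is a list of (peer, searchable) pairs. Returns
    (peer, needs_conversion), or None if no copy survives.
    """
    # Prefer a copy that is already searchable: it can become primary at once.
    for peer, searchable in surviving_copies:
        if searchable:
            return peer, False
    # Otherwise a non-searchable copy must first be made searchable,
    # which is the time-intensive path described for a search factor of 1.
    if surviving_copies:
        return surviving_copies[0][0], True
    return None


# Search factor 2: a searchable copy survives, so no conversion is needed.
print(choose_replacement_primary([("peer2", False), ("peer3", True)]))
# Search factor 1: only non-searchable copies remain.
print(choose_replacement_primary([("peer2", False), ("peer3", False)]))
```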

For detailed information on peer failure, read the topic What happens when a peer node goes down.

For information on how a multisite cluster handles peer node failure, read How multisite indexer clusters deal with peer node failure.

How clusters deal with manager node failure

If a manager node goes down, peer nodes can continue to index and replicate data, and the search head can continue to search across the data, for some period of time. Problems eventually will arise, however, particularly if one of the peers goes down. There is no way to recover from peer loss without the manager node, and the search head will then be searching across an incomplete set of data. Generally speaking, the cluster continues as best it can without the manager node, but the system is in an inconsistent state and results cannot be guaranteed.

For detailed information on manager node failure, read the topic What happens when a manager node goes down.


This documentation applies to the following versions of Splunk® Enterprise: 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
