Replication factor

As part of setting up an indexer cluster, you specify the number of copies of data that you want the cluster to maintain. Peer nodes store incoming data in buckets, and the cluster maintains multiple copies of each bucket. The cluster stores each bucket copy on a separate peer node. The number of copies of each bucket that the cluster maintains is the replication factor.

Replication factor and cluster resiliency

The cluster can tolerate a failure of (replication factor - 1) peer nodes. For example, to ensure that your system can tolerate a failure of two peers, you must configure a replication factor of 3, which means that the cluster stores three identical copies of each bucket on separate nodes. With a replication factor of 3, you can be certain that all your data will be available if no more than two peer nodes in the cluster fail. With two nodes down, you still have one complete copy of data available on the remaining peers.

By increasing the replication factor, you can tolerate more peer node failures. With a replication factor of 2, you can tolerate just one node failure; with a replication factor of 3, you can tolerate two concurrent failures; and so on.

The trade-off is that you need to store and process all those copies of data. Although the replicating activity doesn't consume much processing power, still, as the replication factor increases, you need to run more indexers and provision more storage for the indexed data. On the other hand, since data replication itself requires little processing power, you can take advantage of the multiple indexers in a cluster to ingest and index more data. Each indexer in the cluster can function as both originating indexer ("source peer") and replication target ("target peer"). It can index incoming data and also store copies of data from other indexers in the cluster.

Example: Replication factor in action

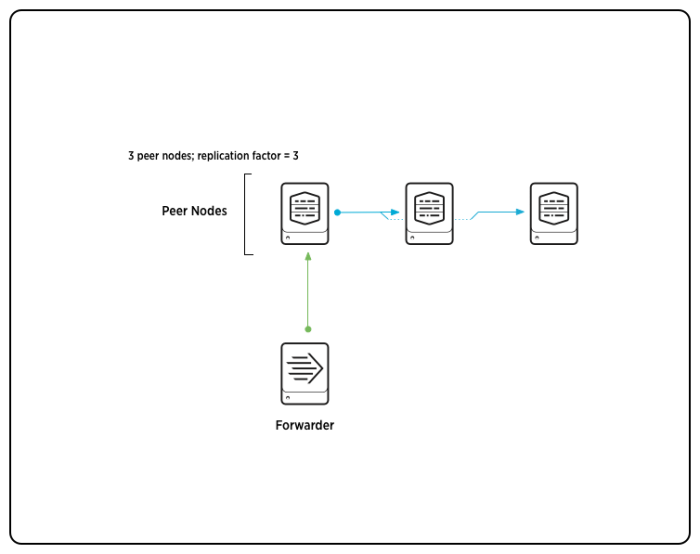

In the following diagram, one peer is receiving data from a forwarder, which it processes and then streams to two other peers.The cluster will contain three complete copies of the peer's data, one copy on each peer.

Note: This diagram represents a highly simplified version of peer replication, where all data is entering the system through a single peer. There are a few issues that add complexity to a real-life scenario:

- In most clusters, each of the peer nodes would be functioning as both source and target peer, receiving external data from a forwarder, as well as replicated data from other peers.

- To accommodate horizontal scaling, a cluster with a replication factor of 3 could consist of many more peers than three. At any given time, each source peer would be streaming copies of its data to two target peers, but each time it started a new hot bucket, its set of target peers could potentially change.

Later topics in this chapter describe in detail how clusters process data.

Replication factor in multisite clusters

A multisite cluster uses a special version of the replication factor, the site replication factor. This determines not only the number of copies that the entire cluster maintains but also the number of copies that each site maintains. For information on the site replication factor, see Configure the site replication factor.

| Basic indexer cluster concepts for advanced users | Search factor |

This documentation applies to the following versions of Splunk® Enterprise: 7.0.0, 7.0.1, 7.0.2, 7.0.3, 7.0.4, 7.0.5, 7.0.6, 7.0.7, 7.0.8, 7.0.9, 7.0.10, 7.0.11, 7.0.13, 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2

Download manual

Download manual

Feedback submitted, thanks!