View search job properties

The Search Job Inspector and the Job Details dashboard are tools that let you take a closer look at what your search is doing and see where the Splunk software is spending most of its time.

This topic discusses how to use the Search Job Inspector to both troubleshoot the performance of a search job and understand the behavior of knowledge objects such as event types, tags, lookups and so on within the search. For more information, see Manage search jobs in this manual.

Here's a Splunk Education video about Using the Splunk Search Job Inspector. This video shows you how to determine whether your search is running efficiently, and it covers event types, searches in a distributed environment, search optimization, and disk usage.

This topic also shows you how to access the Job Details dashboard after you open the Search Job Inspector for a search job. The Job Details dashboard gives you a clear and concise overview of a search job's process and performance. You may want to review the Job Details dashboard before you try to parse the itemized report provided by the Search Job Inspector.

The Job Details dashboard displays basic search job facts and metrics, shows you the search strings that were run in the background to carry out the search, and gives you a concise overview of search costs and indexer usage metrics.

Open the Search Job Inspector

You can access the Search Job Inspector for a search job as long as the search job has not expired, which means that its search artifact still exists. The search does not need to be running for you to access the Search Job Inspector.

1. Run the search.

2. From the Job menu, select Inspect Job.

This opens the Search Job Inspector in a separate window.

Change the search job

After the Search Job Inspector opens in a separate window, you can use the URL for that window to inspect any valid search job artifact if you have its search ID (SID). You can find the SID of a search on the Jobs page (select Activity > Jobs) or listed in the dispatch directory, $SPLUNK_HOME/var/run/splunk/dispatch. For more information about the Jobs page, see Manage search jobs in this manual.
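If you want to collect candidate SIDs from the file system instead of the Jobs page, a short Python sketch like the following could list them. It makes several assumptions. Each search job's artifact is a subdirectory of the dispatch directory named after the job's SID. The script can read that directory. SPLUNK_HOME is set in the environment. The /opt/splunk fallback is only an assumed installation path and does not come from this manual.

```python
import os
from pathlib import Path


def list_dispatch_sids(dispatch_dir: Path) -> list[str]:
    """Return the names of the job artifact subdirectories (assumed to be SIDs)."""
    if not dispatch_dir.is_dir():
        return []
    # Assumption: each search job artifact lives in a subdirectory named after its SID.
    return sorted(entry.name for entry in dispatch_dir.iterdir() if entry.is_dir())


def default_dispatch_dir() -> Path:
    # Assumption: SPLUNK_HOME is set; /opt/splunk is a hypothetical fallback path.
    splunk_home = Path(os.environ.get("SPLUNK_HOME", "/opt/splunk"))
    return splunk_home / "var" / "run" / "splunk" / "dispatch"


if __name__ == "__main__":
    for sid in list_dispatch_sids(default_dispatch_dir()):
        print(sid)
```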

If you look at the URI path for the Search Job Inspector window, you will see something like this at the end of the string:

.../manager/search/job_inspector?sid=1299600721.22

The sid is the SID number. The namespace is the name of the app that the SID is associated with. In this example, the SID is 1299600721.22.

Replace the value after sid= in the URI with the SID of the search artifact that you want to inspect, and press Enter. As long as you have the necessary ownership permissions to view the search, you can inspect it.
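You can also swap the SID programmatically, for example when you build links to several jobs. The following Python sketch uses only the standard urllib.parse module. It replaces the sid query parameter and leaves the rest of the URL untouched. The host in the example is a placeholder, so copy the real URL from your own Search Job Inspector window.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def with_sid(inspector_url: str, sid: str) -> str:
    """Return inspector_url with its sid query parameter set to sid."""
    parts = urlsplit(inspector_url)
    # keep_blank_values preserves any empty parameters already present in the URL.
    query = parse_qs(parts.query, keep_blank_values=True)
    query["sid"] = [sid]
    # doseq=True expands each list value back into key=value pairs.
    return urlunsplit(parts._replace(query=urlencode(query, doseq=True)))


# Placeholder host and path prefix; not a real Splunk Web address.
current = "https://splunk.example.com/manager/search/job_inspector?sid=1299600721.22"
print(with_sid(current, "1299600800.30"))
# https://splunk.example.com/manager/search/job_inspector?sid=1299600800.30
```

Any other query parameters in the URL keep their position. Only the sid value changes.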

What the Search Job Inspector shows you

At the top of the Search Job Inspector window, an information message appears. The message depends on whether the job is paused, running, or finished. For example, if the job is finished the message tells you how many results it found and the time it took to complete the search. Any error messages are also displayed at the top of the window.

Below these messages you should find the SID for the search job and two links:

- The search.log link opens a text file that contains the raw search.log data for this search job. The Search Job Inspector and the Job Details dashboard base their displays on this search.log data.

- The Job Details Dashboard link goes to the Job Details dashboard, which provides a condensed summary of important metrics and facts about the search job you are investigating. You may want to look at this dashboard before you try to do a deep dive into the Search Job Inspector's comprehensive report.

Open the Job Details dashboard to get a concise overview of your search job

After opening the Search Job Inspector for a search, click the Job Details Dashboard link to open the Job Details dashboard.

You can also reach the Job Details dashboard from the Dashboards page.

You can easily reload the dashboard for any search job currently listed on the Jobs page by placing its SID in the provided field and pressing the Return key.

The dashboard presents the essential properties of the search job in a format designed for quick scanning. It is divided into four sections:

- Summary: Presents basic metrics and facts about the search job, including the number of events scanned per second, the result count, and the search mode of the search.

- Search Strings: Shows the search string that was provided to run the search, alongside the optimized version of the search that was actually run behind the scenes to improve search performance. This section also includes the search strings for the map and reduce phases of the search, if applicable.

- Search Costs: Provides simple breakdowns of search costs by command and by phase, as well as the cumulative startup handoff time.

- Indexers: Lists the indexers that processed the search job. The shading of duration values in the Time Spent Running Search Per Indexer table helps you quickly identify indexers that did not process the search as efficiently as their peers. Click an indexer name in that table to see the time that indexer spent on the search, broken out by search process.

The following Job Details dashboard panels will not display results for users with roles that do not have access to the _introspection index:

- Search Costs by Command

- Approximate Time Spent in Reduce Phase

- Map Phase Search Costs by Command

- Reduce Phase Search Costs by Command

Contact your administrator if you require access to this information.

To return to the main Search Job Inspector, right-click on the dashboard and select Back.

Review the specific execution costs and properties of your search job

The key information that the Search Job Inspector displays is the execution costs and the search job properties:

- Execution costs: Lists the components of the search and how much impact each component has on the overall performance of the search.

- Search job properties: Lists other characteristics of the job.

Execution costs

With the information in the Execution costs section, you can troubleshoot the efficiency of your search and narrow down which processing components are affecting search performance. For each search processing component that was used to process your search, this section shows:

- The component duration, in seconds.

- How many times the component was invoked while the search ran.

- The input and output event counts for the component.

The Search Job Inspector lists the components alphabetically. The number of components that you see depends on the search that you run.
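The inspector sorts components by name rather than by cost. When you look for the components that dominate a search, it can help to re-sort them by duration. The following Python sketch assumes that you copied component names and durations from the Execution costs section into a dictionary by hand. The component set and the numbers are hypothetical. Nested components, such as everything under command.search, overlap in time with their parent, so rank them rather than add them up.

```python
def most_expensive(costs: dict[str, float], top: int = 3) -> list[tuple[str, float]]:
    """Return the `top` components with the largest durations, largest first."""
    # sorted() is stable, so components with equal durations keep the order
    # in which they appear in `costs`.
    return sorted(costs.items(), key=lambda item: item[1], reverse=True)[:top]


# Hypothetical durations, in seconds, copied from an inspector window.
costs = {
    "command.dedup": 0.42,
    "command.prededup": 0.87,
    "command.search": 2.31,
    "dispatch.evaluate": 0.04,
    "dispatch.fetch": 2.35,
    "startup.handoff": 0.12,
}

for name, seconds in most_expensive(costs):
    print(f"{name}: {seconds:.2f} s")
```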

The following tables describe the significance of each individual command and distributed component in a typical keyword search.

Execution costs of search commands

In general, for each command that is part of the search job, there is a component named command.<command_name>. Its values represent the time spent processing that command. For example, if your search uses the table command, you will see command.table.

| Search command component name | Description |
| --- | --- |
| command.search | After the Splunk software identifies the events that contain the indexed fields matching your search, the events are analyzed to identify which events match the other search criteria. These are concurrent operations, not consecutive. |

There is a relationship between the type of commands used and the numbers you can expect to see for Invocations, Input count, and Output count. For searches that generate events, you expect the input count to be 0 and the output count to be some number of events X. If the search is both a generating search and a filtering search, the filtering search would have an input (equal to the output of the generating search, X) and an output=X. The total counts would then be input=X, output=2*X, and the invocation count is doubled.
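You can check the arithmetic in the previous paragraph with a small Python sketch. The ComponentCounts type is a hypothetical stand-in for the Invocations, Input count, and Output count columns. X = 500 is an arbitrary example value in which the filtering part keeps every event it receives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentCounts:
    invocations: int
    input_count: int
    output_count: int


def combined_counts(*stages: ComponentCounts) -> ComponentCounts:
    """Sum the counts of stages that are reported under a single component."""
    return ComponentCounts(
        invocations=sum(s.invocations for s in stages),
        input_count=sum(s.input_count for s in stages),
        output_count=sum(s.output_count for s in stages),
    )


x = 500  # hypothetical number of events produced by the generating part
generating = ComponentCounts(invocations=1, input_count=0, output_count=x)
# The filtering part receives the generating output and, here, keeps all of it.
filtering = ComponentCounts(invocations=1, input_count=x, output_count=x)

total = combined_counts(generating, filtering)
print(total)  # ComponentCounts(invocations=2, input_count=500, output_count=1000)
```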

Execution costs of dispatched searches

| Distributed search component name | Description |
| --- | --- |
| dispatch.check_disk_usage | The time spent checking the disk usage of this job. |
| dispatch.createdSearchResultInfrastructure | The time to create and set up the collectors for each peer and execute the HTTP post to each peer. |
| dispatch.earliest_time | Specifies the earliest time for this search. Can be a relative or absolute time. The default is an empty string. |
| dispatch.emit_prereport_files | When running a transforming search, Splunk Enterprise cannot compute the statistical results of the report until the search completes. After it fetches events from the search peers (dispatch.fetch), it writes out the results to local files. dispatch.emit_prereport_files is the time that it takes Splunk Enterprise to write the transforming search results to those local files. |
| dispatch.evaluate | The time spent parsing the search and setting up the data structures needed to run the search. This component also includes the time it takes to evaluate and run subsearches. It is broken down further for each search command that is used. In general, dispatch.evaluate.<command_name> tells you the time spent parsing and evaluating the <command_name> argument. For example, dispatch.evaluate.search indicates the time spent parsing and evaluating the search command argument. |
| dispatch.fetch | The time spent by the search head waiting for or fetching events from search peers. The dispatch.fetch value is different from the command.search value. The command.search value includes time spent by all indexers, which can be greater than the actual elapsed time of the search. If you have only a single node, the dispatch.fetch and command.search values are similar. In a distributed environment, depending on the search, these values can be very different. |
| dispatch.preview | The time spent generating preview results. |
| dispatch.process_remote_timeline | The time spent decoding timeline information generated by search peers. |
| dispatch.reduce | The time spent reducing the intermediate report output. |
| dispatch.stream.local | The time spent by the search head on the streaming part of the search. |
| dispatch.stream.remote | The time spent executing the remote search in a distributed search environment, aggregated across all peers. The time spent executing the remote search on each individual search peer is reported as dispatch.stream.remote.<search_peer_name>. In this case, output_count represents bytes sent rather than events. |
| dispatch.timeline | The time spent generating the timeline and fields sidebar information. |
| dispatch.writeStatus | The time spent periodically updating status.csv and info.csv in the job's dispatch directory. |
| startup.configuration | The time spent generating the startup configuration. |
| startup.handoff | The time elapsed between the forking of a separate search process and the beginning of useful work by the forked search processes. In other words, it is the approximate time it takes to build the search apparatus. This value is cumulative across all involved peers. If it takes a long time, it could indicate I/O issues with .conf files or the dispatch directory. |
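In a distributed environment, the per-peer dispatch.stream.remote.<search_peer_name> entries can point to a search peer that lags behind its peers. The following Python sketch pulls those entries out of a dictionary of component durations and reports the slowest peer. The dictionary is an assumed hand-copied representation of the Execution costs, not a Splunk API. The peer names idx1 through idx3 and all numbers are hypothetical.

```python
from __future__ import annotations

PEER_PREFIX = "dispatch.stream.remote."


def per_peer_durations(costs: dict[str, float]) -> dict[str, float]:
    """Map each search peer name to its dispatch.stream.remote.<peer> duration."""
    # The aggregated "dispatch.stream.remote" entry has no trailing dot,
    # so it is not matched by the prefix.
    return {
        name[len(PEER_PREFIX):]: seconds
        for name, seconds in costs.items()
        if name.startswith(PEER_PREFIX)
    }


def slowest_peer(costs: dict[str, float]) -> tuple[str, float] | None:
    """Return (peer name, seconds) for the peer with the longest remote search time."""
    peers = per_peer_durations(costs)
    if not peers:
        return None
    name = max(peers, key=peers.__getitem__)
    return name, peers[name]


# Hypothetical Execution costs for a search run across three search peers.
costs = {
    "dispatch.fetch": 4.2,
    "dispatch.stream.remote": 11.9,
    "dispatch.stream.remote.idx1": 3.8,
    "dispatch.stream.remote.idx2": 4.0,
    "dispatch.stream.remote.idx3": 4.1,
}

print(slowest_peer(costs))  # ('idx3', 4.1)
# In this made-up example, the per-peer times sum to the aggregated value.
print(round(sum(per_peer_durations(costs).values()), 2))  # 11.9
```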

Search job properties

The Search job properties fields provide information about the search job. The Search job properties fields are listed in alphabetical order.

| Parameter name | Description |
| --- | --- |
| cursorTime | The earliest time from which no events are later scanned. Can be used to indicate progress. See the description for doneProgress. |
| delegate | For saved searches, specifies jobs that were started by the user. Defaults to scheduler. |
| diskUsage | The total amount of disk space used, in bytes. |
| dispatchState | The state of the search. Can be any of the following states: |
| doneProgress | A number between 0 and 1.0 that indicates the approximate progress of the search. |
| dropCount | For real-time searches only, the number of possible events that were dropped due to the rt_queue_size (defaults to 100000). |
| eai:acl | Describes the app and user-level permissions. For example, is the app shared globally, and what users can run or view the search? |
| earliestTime | A time string that sets the earliest (inclusive) time bound for the search. Can be used to indicate progress. See the description for doneProgress. |
| eventAvailableCount | The number of events that are available for export. |
| eventCount | The number of events returned by the search. In other words, this is the subset of scanned events (represented by scanCount) that actually match the search terms. |
| eventFieldCount | The number of fields found in the search results. |
| eventIsStreaming | Indicates if the events of this search are being streamed. |
| eventIsTruncated | Indicates if events of the search have not been stored, and thus are not available from the events endpoint for the search. |
| eventSearch | The subset of the entire search that comes before any transforming commands. The timeline and events endpoints represent the result of this part of the search. |
| eventSorting | Indicates if the events of this search are sorted, and in which order: asc = ascending; desc = descending; none = not sorted. |
| isBatchMode | Indicates whether the search is running in batch mode. This applies only to searches that include transforming commands. |
| isDone | Indicates if the search has completed. |
| isFailed | Indicates if there was a fatal error executing the search. For example, if the search string had invalid syntax. |
| isFinalized | Indicates if the search was finalized (stopped before completion). |
| isPaused | Indicates if the search has been paused. |
| isPreviewEnabled | Indicates if previews are enabled. |
| isRealTimeSearch | Indicates if the search is a real-time search. |
| isRemoteTimeline | Indicates if the remote timeline feature is enabled. |
| isSaved | Indicates that the search job is saved, storing search artifacts on disk for 7 days from the last time that the job was viewed or touched. Add or edit the default_save_ttl value in limits.conf to override the default value of 7 days. |
| isSavedSearch | Indicates if this is a saved search run using the scheduler. |
| isTimeCursored | Specifies whether the cursorTime can be trusted. Typically this parameter is set to true if the first command is search. |
| isZombie | Indicates if the process running the search is dead, but the search has not finished. |
| keywords | All positive keywords used by this search. A positive keyword is a keyword that is not in a NOT clause. |
| label | Custom name created for this search. |
| latestTime | A time string that sets the latest (exclusive) time bound for the search. Can be used to indicate progress. See the description for doneProgress. |
| messages | Errors and debug messages. |
| numPreviews | Number of previews that have been generated so far for this search job. |
| optimizedSearch | The restructured syntax for the search that was run. The built-in optimizers analyze your search and restructure the search syntax, where possible, to improve search performance. The search that you ran is displayed under the search job property. |
| performance | Another representation of the Execution costs. |
| remoteSearch | The search string that is sent to every search peer. |
| reportSearch | If reporting commands are used, the reporting search. |
| request | GET arguments that the search sends to splunkd. |
| resultCount | The total number of results returned by the search. |
| resultIsStreaming | Indicates if the final results of the search are available using streaming (for example, no transforming operations). |
| resultPreviewCount | The number of result rows in the latest preview results. |
| runDuration | Time in seconds that the search took to complete. |
| scanCount | The number of events that are scanned or read off disk. |
| search | The search string. |
| searchCanBeEventType | If the search can be saved as an event type, this is 1; otherwise, 0. Only base searches (no subsearches or pipes) can be saved as event types. |
| searchProviders | A list of all the search peers that were contacted. |
| searchTelemetry | A JSON object that contains search job metadata. The structure of this JSON object is likely to change from release to release. |
| sid | The search ID number. |
| statusBuckets | Maximum number of timeline buckets. |
| ttl | The time to live, or time before the search job expires after it completes. |
| Additional info | Links to further information about your search. These links may not always be available. |
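Because earliestTime is inclusive and latestTime is exclusive, the time bounds of a search form a half-open range. An event stamped exactly at the latest bound falls outside the search. The following Python sketch expresses that rule with the standard datetime module. The function name and the dates are hypothetical and only illustrate the comparison.

```python
from datetime import datetime, timezone


def in_search_window(event_time: datetime, earliest: datetime, latest: datetime) -> bool:
    """Return True if event_time falls in the half-open range [earliest, latest)."""
    # earliestTime is inclusive; latestTime is exclusive.
    return earliest <= event_time < latest


earliest = datetime(2024, 5, 1, tzinfo=timezone.utc)
latest = datetime(2024, 5, 2, tzinfo=timezone.utc)

print(in_search_window(earliest, earliest, latest))  # True: earliest bound included
print(in_search_window(latest, earliest, latest))    # False: latest bound excluded
```

One consequence of the half-open design is that back-to-back searches, where one search's latest bound equals the next search's earliest bound, never count a boundary event twice.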

Note: When troubleshooting search performance, it's important to understand the difference between the scanCount and resultCount values. For dense searches, scanCount and resultCount are similar (scanCount ≈ resultCount). For sparse searches, scanCount is much greater than resultCount (scanCount >> resultCount). For this reason, measure search performance with the scanCount/time rate rather than the resultCount/time rate. Typically, the scanCount/second rate should hover between 10k and 20k events per second for performance to be deemed good.
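To apply this guideline to a specific job, divide scanCount by runDuration from the Search job properties. The following Python sketch does that and compares the result with the 10k to 20k events-per-second band from the note. The looks_sparse heuristic and its 10x ratio are hypothetical choices made for illustration. They are not a Splunk definition of sparse. The job numbers are also made up.

```python
def scan_rate(scan_count: int, run_duration: float) -> float:
    """Events scanned per second: scanCount / runDuration."""
    if run_duration <= 0:
        raise ValueError("runDuration must be positive")
    return scan_count / run_duration


def assess_rate(rate: float, low: float = 10_000, high: float = 20_000) -> str:
    """Compare a scan rate with the rough 10k-20k events/second guideline."""
    if rate < low:
        return "below the typical range"
    if rate > high:
        return "above the typical range"
    return "within the typical range"


def looks_sparse(scan_count: int, result_count: int, ratio: float = 10.0) -> bool:
    """Hypothetical heuristic: sparse if scanCount is at least `ratio` x resultCount."""
    return scan_count >= ratio * max(result_count, 1)


# Hypothetical job: 1,200,000 events scanned in 80 seconds, 3,000 results.
rate = scan_rate(1_200_000, 80.0)
print(rate, assess_rate(rate))         # 15000.0 within the typical range
print(looks_sparse(1_200_000, 3_000))  # True
```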

Debug messages

Configure the Search Job Inspector to display DEBUG messages when there are errors in your search. For example, DEBUG messages can warn you when there are fields missing from your results.

The Search Job Inspector displays DEBUG messages at the top of the Search Job Inspector window, after the search has completed.

By default the Search Job Inspector hides DEBUG messages.

Prerequisites

- Only users with file system access, such as system administrators, can configure the Search Job Inspector to display DEBUG messages.

- Review the steps in How to edit a configuration file in the Splunk Enterprise Admin Manual.

- You can have configuration files with the same name in your default, local, and app directories. Read Where you can place (or find) your modified configuration files in the Splunk Enterprise Admin Manual.

Never change or copy the configuration files in the default directory. The files in the default directory must remain intact and in their original location. Make changes to the files in the local directory.

Steps

1. To configure DEBUG messages to display, open the limits.conf file in the local directory.

2. Set the infocsv_log_level parameter in the [search_info] stanza to DEBUG:

[search_info]
infocsv_log_level = DEBUG
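If you manage configuration files with scripts, you could check a limits.conf file for this setting before you restart or rerun a search. The following Python sketch uses the standard configparser module. This only approximates Splunk's .conf syntax. For example, configparser rejects settings that appear before the first stanza. The sketch also inspects one file's text and does not resolve precedence across the default, local, and app directories. Splunk's btool utility, for example `splunk btool limits list --debug`, is the usual way to see the merged result.

```python
import configparser


def debug_messages_enabled(limits_conf_text: str) -> bool:
    """Return True if [search_info] sets infocsv_log_level to DEBUG."""
    # interpolation=None leaves '%' characters alone; strict=False tolerates
    # repeated keys instead of raising an error.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(limits_conf_text)
    level = parser.get("search_info", "infocsv_log_level", fallback="")
    return level.strip().upper() == "DEBUG"


example = """
[search_info]
infocsv_log_level = DEBUG
"""
print(debug_messages_enabled(example))  # True
```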

Examples of Search Job Inspector output

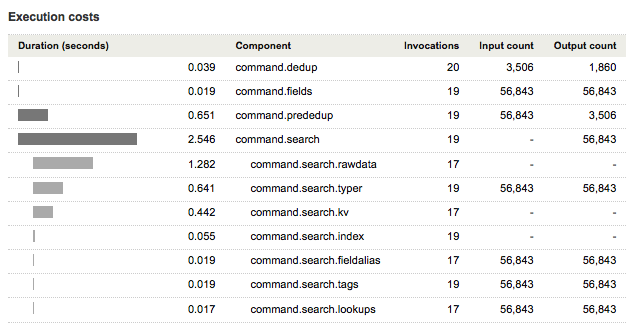

Here's an example of the execution costs for a dedup search, run over All time:

* | dedup punct

The search commands component of the Execution costs panel might look something like this:

The command.search component, and everything under it, gives you the performance impact of the search command portion of your search, which is everything before the pipe character.

The command.prededup component gives you the performance impact of processing the results of the search command before passing them to the dedup command.

- The Input count of command.prededup matches the Output count of command.search.

- The Input count of command.dedup matches the Output count of command.prededup.

In this case, the Output count of command.prededup should match the number of events returned at the completion of the search. This is the value of resultCount, under Search job properties.
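The relationships in this example are simple equality checks, so you can verify them mechanically once you have read the counts from the Execution costs panel. The following Python sketch assumes that you copied the counts into a dictionary by hand as (Input count, Output count) pairs. The numbers are hypothetical and are not taken from a real job.

```python
def dedup_chain_mismatches(counts: dict[str, tuple[int, int]], result_count: int) -> list[str]:
    """Return the relationships from the dedup example that do not hold.

    counts maps a component name to its (input_count, output_count) pair.
    """
    _, search_out = counts["command.search"]
    prededup_in, prededup_out = counts["command.prededup"]
    dedup_in, _ = counts["command.dedup"]

    checks = [
        ("command.prededup input == command.search output", prededup_in == search_out),
        ("command.dedup input == command.prededup output", dedup_in == prededup_out),
        ("command.prededup output == resultCount", prededup_out == result_count),
    ]
    return [label for label, ok in checks if not ok]


# Hypothetical counts for `* | dedup punct`, as (Input count, Output count).
counts = {
    "command.search": (0, 250_000),
    "command.prededup": (250_000, 7_400),
    "command.dedup": (7_400, 7_400),
}
print(dedup_chain_mismatches(counts, result_count=7_400))  # []
```

An empty list means that every relationship described above holds. Each string that is returned names a relationship worth investigating.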

See also

- Manage search jobs

- Dispatch directory and search artifacts

This documentation applies to the following versions of Splunk® Enterprise: 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
