append

Description

Appends the results of a subsearch to the current results.

The append command runs only over historical data and does not produce correct results if used in a real-time search.

By default, subsearches return a maximum of 10,000 results and have a maximum runtime of 60 seconds. If a subsearch runs for more than 60 seconds, its search results are automatically finalized.

For more information about when to use the append command, see the flowchart in the topic About event grouping and correlation in the Search Manual.

If you are familiar with SQL but new to SPL, see Splunk SPL for SQL users.

Syntax

append [<subsearch-options>...] <subsearch>

Required arguments

- subsearch

- Syntax: [subsearch]

- Description: A secondary search where you specify the source of the events that you want to append. The subsearch must be enclosed in square brackets. See About subsearches in the Search Manual.

Optional arguments

- subsearch-options

- Syntax: extendtimerange=<boolean> | maxtime=<int> | maxout=<int>

- Description: Controls how the subsearch is processed.

Subsearch options

- extendtimerange

- Syntax: extendtimerange=<boolean>

- Description: Specifies whether to include the subsearch time range in the time range for the entire search. Use the

extendtimerangeargument when the time range in the subsearch extends beyond the time range for the main search. Use this argument when a transforming command, such as chart, timechart, or stats, follows theappendcommand in the search and the search uses time based bins. - Default: false

- maxtime

- Syntax: maxtime=<int>

- Description: The maximum time, in seconds, to spend on the subsearch before automatically finalizing.

- Default: 60

- maxout

- Syntax: maxout=<int>

- Description: The maximum number of result rows to output from the subsearch.

- Default: 50000

Usage

The append command is a transforming command. See Command types.

Examples

1: Use the append command to add column totals.

| This search uses recent earthquake data downloaded from the USGS Earthquakes website. The data is a comma separated ASCII text file that contains magnitude (mag), coordinates (latitude, longitude), region (place), etc., for each earthquake recorded.

You can download a current CSV file from the USGS Earthquake Feeds and upload the file to your Splunk instance. This example uses the All Earthquakes data from the past 30 days. |

Count the number of earthquakes that occurred in and around California yesterday and then calculate the total number of earthquakes.

source=usgs place=*California* | stats count by magType | append [search index=usgs_* source=usgs place=*California* | stats count]

This example uses a subsearch to count all the earthquakes in the California regions (place="*California"), then uses the main search to count the number of earthquakes based on the magnitude type of the search.

You cannot use the stats command to simultaneously count the total number of events and the number of events for a specified field. The subsearch is used to count the total number of earthquakes that occurred. This count is added to the results of the previous search with the append command.

Because both searches share the count field, the results of the subsearch are listed as the last row in the count column.

The results appear on the Statistics tab and look something like this:

| magType | count |

|---|---|

| H | 123 |

| MbLg | 1 |

| Md | 1565 |

| Me | 2 |

| Ml | 1202 |

| Mw | 6 |

| ml | 10 |

| 2909 |

This search demonstrates how to use the append command in a way that is similar to using the addcoltotals command to add the column totals.

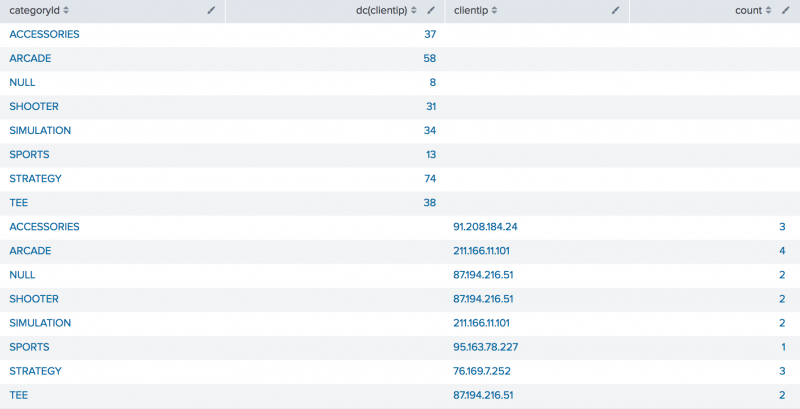

2. Count the number of different customers who purchased items. Append the top purchaser for each type of product.

| This example uses the sample data from the Search Tutorial. To try this example on your own Splunk instance, you must download the sample data and follow the instructions to get the tutorial data into Splunk. Use the time range Yesterday when you run the search. |

Count the number of different customers who purchased something from the Buttercup Games online store yesterday, and break this count down by the type of product (accessories, t-shirts, and type of games) they purchased. Also, list the top purchaser for each type of product and how much that person bought of that product.

sourcetype=access_* action=purchase | stats dc(clientip) BY categoryId | append [search sourcetype=access_* action=purchase | top 1 clientip BY categoryId] | table categoryId, dc(clientip), clientip, count

This example first searches for purchase events (action=purchase). These results are piped into the stats command and the dc(), or distinct_count() function is used to count the number of different users who make purchases. The BY clause is used to break up this number based on the different category of products (categoryId).

This example contains a subsearch as an argument for the append command.

...[search sourcetype=access_* action=purchase | top 1 clientip BY categoryId]

The subsearch is used to search for purchase events and count the top purchaser (based on clientip) for each category of products. These results are added to the results of the previous search using the append command.

Here, the table command is used to display only the category of products (categoryId), the distinct count of users who bought each type of product (dc(clientip)), the actual user who bought the most of a product type (clientip), and the number of each product that user bought (count).

You can see that the append command just tacks on the results of the subsearch to the end of the previous search, even though the results share the same field values. It does not let you manipulate or reformat the output.

3. Use the append command to determine the number of unique IP addresses that accessed the Web server.

Use the append command, along with the stats, count, and top commands to determine the number of unique IP addresses that accessed the Web server. Find the user who accessed the Web server the most for each type of page request.

| This example uses the sample data from the Search Tutorial. To try this example on your own Splunk instance, you must download the sample data and follow the instructions to get the tutorial data into Splunk. Use the time range Yesterday when you run the search. |

Count the number of different IP addresses that accessed the Web server and also find the user who accessed the Web server the most for each type of page request (method).

sourcetype=access_* | stats dc(clientip), count by method | append [search sourcetype=access_* | top 1 clientip by method]

The Web access events are piped into the stats command and the dc() or distinct_count() function is used to count the number of different users who accessed the site. The count() function is used to count the total number of times the site was accessed. These numbers are separated by the page request (method).

The subsearch is used to find the top user for each type of page request (method). The append command is used to add the result of the subsearch to the bottom of the table.

The results appear on the Statistics tab and look something like this:

| method | dc(clientip) | count | clientip | percent |

|---|---|---|---|---|

| GET | 173 | 2666 | ||

| POST | 168 | 1727 | ||

| GET | 83 | 87.194.216.51 | 3.113278 | |

| POST | 64 | 87.194.216.51 | 3.705848 |

The first two rows are the results of the first search. The last two rows are the results of the subsearch. Both result sets share the method and count fields.

4. Specify the maximum time for the subsearch to run and the maximum number of result rows from the subsearch

Use the append command, to determine the number of unique IP addresses that accessed the Web server. Find the user who accessed the Web server the most for each type of page request.

| This example uses the sample dataset from the Search Tutorial but should work with any format of Apache web access log. Download the data set from this topic in the Search Tutorial and follow the instructions to upload it to your Splunk deployment. Use the time range Yesterday when you run this search. |

Count the number of different IP addresses that accessed the Web server and also find the user who accessed the Web server the most for each type of page request (method). Limit the subsearch to 30 seconds and the maximum number of subsearch results to 1000.

sourcetype=access_* | stats dc(clientip), count by method | append maxtime=30 maxout=1000 [search sourcetype=access_* | top 1 clientip by method]

5. Use the extendtimerange argument

Use the extendtimerange argument to ensure that the time range used for the search includes both the time range of the main search and the time range of the subsearch.

index=_internal earliest=11/20/2017:00:00:00 latest=11/30/2017:00:00:00

|append extendtimerange=true

[search index=_audit earliest=11/1/2017:00:00:00 latest=11/25/2017:00:00:00]

|timechart span=1d count

The time range used for the search is from 11/1/2017:00:00:00, the earliest time in the subsearch, to 11/30/2017:00:00:00, the latest time in the main search.

See also

| anomalydetection | appendcols |

This documentation applies to the following versions of Splunk® Enterprise: 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2

Download manual

Download manual

Feedback submitted, thanks!