contingency

Description

In statistics, contingency tables are used to record and analyze the relationship between two or more (usually categorical) variables. Many measures of association or independence, such as the phi coefficient or Cramér's V, can be calculated from contingency tables.

You can use the contingency command to build a contingency table, which in this case is a co-occurrence matrix for the values of two fields in your data. The first column of the table lists the values of the first field, and the first row lists the values of the second field. Each remaining cell contains the count of events in which both the row value and the column value occur.

If a relationship or pattern exists between the two fields, you can spot it easily just by analyzing the information in the table. For example, if the column values vary significantly between rows (or vice versa), there is a contingency between the two fields (they are not independent). If there is no contingency, then the two fields are independent.
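To make the co-occurrence counts described above concrete, the following sketch generates six synthetic events and cross-tabulates two made-up fields. The field names status and host, and their values, are assumptions for this illustration only and do not come from any real data set.

```spl
| makeresults count=6
| streamstats count AS n
| eval status=if(n%2==0, "ok", "error"), host=if(n<=3, "web1", "web2")
| contingency status host
```

In this synthetic data, two events have status=error and host=web1, one has status=error and host=web2, one has status=ok and host=web1, and two have status=ok and host=web2. Those are the counts to expect in the four cells of the table.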

Syntax

contingency [<contingency-options>...] <field1> <field2>

Required arguments

- <field1>

- Syntax: <field>

- Description: Any field. You cannot specify wildcard characters in the field name.

- <field2>

- Syntax: <field>

- Description: Any field. You cannot specify wildcard characters in the field name.

Optional arguments

- contingency-options

- Syntax: <maxopts> | <mincover> | <usetotal> | <totalstr>

- Description: Options for the contingency table.

Contingency options

- maxopts

- Syntax: maxrows=<int> | maxcols=<int>

- Description: Specify the maximum number of rows or columns to display. If the number of distinct values of the field exceeds this maximum, the least common values are ignored. A value of 0 means that the maximum limit on rows or columns applies. This limit comes from the maxvalues setting in the [ctable] stanza in the limits.conf file.

- Default: 1000

- mincover

- Syntax: mincolcover=<num> | minrowcover=<num>

- Description: Specify the proportion of values per column or row that you want represented in the output table, expressed as a ratio (the default of 1.0 represents all values). As the table is constructed, enough rows or columns are included to reach this ratio of displayed values to total values for each row or column. If the maximum number of rows or columns is reached first, that maximum takes precedence.

- Default: 1.0

- usetotal

- Syntax: usetotal=<bool>

- Description: Specify whether or not to add row, column, and complete totals.

- Default: true

- totalstr

- Syntax: totalstr=<field>

- Description: Field name for the totals row and column.

- Default: TOTAL
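The options can be combined in a single search. The following sketch limits the table to at most three rows and five columns. It reuses the log_level and component fields from the _internal index that appear in the examples in this topic, and the specific limits are arbitrary choices for illustration.

```spl
index=_internal
| contingency maxrows=3 maxcols=5 log_level component
```

Because log_level is the first field, its values form the rows, so maxrows limits the log levels shown and maxcols limits the components shown.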

Usage

The contingency command is a transforming command. See Command types.

This command builds a contingency table for two fields. If you have fields with many values, you can restrict the number of rows and columns using the maxrows and maxcols arguments.

Totals

By default, the contingency table displays the row totals, column totals, and a grand total for the counts of events that are represented in the table. If you don't want the totals to appear in the results, include the usetotal=false argument with the contingency command.
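For instance, the first search below suppresses the totals, and the second keeps them but labels the totals row and column ALL instead of TOTAL. Both sketches assume the log_level and component fields from the _internal index used elsewhere in this topic, and the label ALL is an arbitrary choice for illustration.

```spl
index=_internal | contingency usetotal=false log_level component
```

```spl
index=_internal | contingency totalstr=ALL log_level component
```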

Empty values

Values that are empty strings ("") are represented in the results table as EMPTY_STR.
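If you prefer a different label, one option is to replace empty strings yourself before the table is built. The following is a minimal sketch, assuming the component field from the _internal index might contain empty strings; the label "(blank)" is a hypothetical choice. The comparison matches only empty strings, so events where the field is missing entirely are not relabeled.

```spl
index=_internal
| eval component=if(component=="", "(blank)", component)
| contingency log_level component
```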

Limits

The values of maxrows and maxcols are subject to a limit of 1000, which means that no more than 1000 distinct values of either field are used.

Examples

1. Build a contingency table of recent data

This search uses recent earthquake data downloaded from the USGS Earthquakes website. The data is a comma-separated ASCII text file that contains magnitude (mag), coordinates (latitude, longitude), region (place), etc., for each earthquake recorded.

You can download a current CSV file from the USGS Earthquake Feeds and upload the file to your Splunk instance. This example uses the All Earthquakes data from the past 30 days. Use the time range All time when you run the searches.

You want to build a contingency table to look at the relationship between the magnitudes and depths of recent earthquakes. You start with a simple search.

source=all_month.csv | contingency mag depth | sort mag

There is quite a range of values for the mag and depth fields, which results in a very large table. The magnitude values appear in the first column. The depth values appear in the first row. The list is sorted by magnitude.

The results appear on the Statistics tab. The following table shows only a small portion of the table of results returned from the search.

| mag | 10 | 0 | 5 | 35 | 8 | 12 | 15 | 11.9 | 11.8 | 6.4 | 5.4 | 8.2 | 6.5 | 8.1 | 5.6 | 10.1 | 9 | 8.5 | 9.8 | 8.7 | 7.9 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| -0.81 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| -0.59 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| -0.56 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| -0.45 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| -0.43 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

As you can see, earthquakes can have negative magnitudes. A nonzero count appears in a cell only where at least one earthquake has that combination of magnitude and depth.

To build a more usable contingency table, you should reformat the values for the magnitude and depth fields. Group the magnitudes and depths into ranges.

source=all_month.csv
| eval Magnitude=case(mag<=1, "0.0 - 1.0",
    mag>1 AND mag<=2, "1.1 - 2.0",
    mag>2 AND mag<=3, "2.1 - 3.0",
    mag>3 AND mag<=4, "3.1 - 4.0",
    mag>4 AND mag<=5, "4.1 - 5.0",
    mag>5 AND mag<=6, "5.1 - 6.0",
    mag>6 AND mag<=7, "6.1 - 7.0",
    mag>7, "7.0+")
| eval Depth=case(depth<=70, "Shallow",
    depth>70 AND depth<=300, "Mid",
    depth>300 AND depth<=700, "Deep")
| contingency Magnitude Depth
| sort Magnitude

This search uses the eval command with the case() function to redefine the values of Magnitude and Depth, bucketing them into a range of values. For example, the Depth values are redefined as "Shallow", "Mid", or "Deep". Use the sort command to sort the results by magnitude. Otherwise the results are sorted by the row totals.
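As an alternative to hand-written case() ranges, the bin command can bucket numeric fields into fixed-width ranges before the table is built. This is a sketch rather than a replacement for the search above: the span values are arbitrary choices, and the range labels that bin generates differ from the labels defined with case().

```spl
source=all_month.csv
| bin span=1 mag
| bin span=100 depth
| contingency mag depth
```

This approach is quicker to write, but it gives you less control over the bucket boundaries and labels than the case() function does.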

The results appear on the Statistics tab and look something like this:

| Magnitude | Shallow | Mid | Deep | TOTAL |
|---|---|---|---|---|
| 0.0 - 1.0 | 3579 | 33 | 0 | 3612 |
| 1.1 - 2.0 | 3188 | 596 | 0 | 3784 |
| 2.1 - 3.0 | 1236 | 131 | 0 | 1367 |
| 3.1 - 4.0 | 320 | 63 | 1 | 384 |
| 4.1 - 5.0 | 400 | 157 | 43 | 600 |
| 5.1 - 6.0 | 63 | 12 | 3 | 78 |
| 6.1 - 7.0 | 2 | 2 | 1 | 5 |
| TOTAL | 8788 | 994 | 48 | 9830 |

There were a lot of quakes in this month. Do higher magnitude earthquakes have a greater depth than lower magnitude earthquakes? Not really. The table shows that the majority of the recent earthquakes were shallow in every magnitude range except 6.1 - 7.0, which contains only five events. There are significantly fewer earthquakes in the mid-to-deep range. In this data set, the deep-focus quakes all had magnitudes above 3.0, and most of them (43 of 48) fell in the 4.1 - 5.0 range.
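Raw counts can make this comparison hard to read because the magnitude ranges contain very different numbers of events. One way to compare the rows is to convert each row to percentages. The following sketch assumes that the Magnitude and Depth fields from the preceding search already exist. The field names count, row_total, and pct are introduced here for illustration only.

```spl
... | contingency usetotal=false Magnitude Depth
| untable Magnitude Depth count
| eventstats sum(count) AS row_total BY Magnitude
| eval pct=round(100*count/row_total, 1)
| xyseries Magnitude Depth pct
```

The usetotal=false argument keeps the TOTAL row and column out of the calculation. With the counts shown above, for example, 400 of the 600 events in the 4.1 - 5.0 range are shallow, which is about 66.7 percent.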

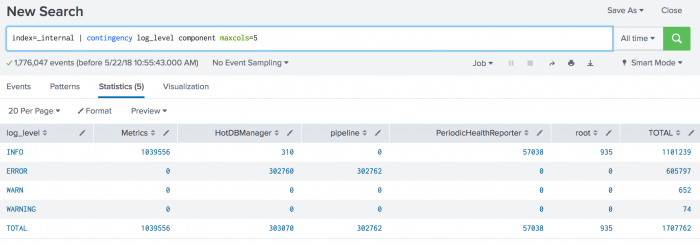

2. Identify potential component issues in the Splunk deployment

Determine if there are any components that might be causing issues in your Splunk deployment.

Build a contingency table to see if there is a relationship between the values of log_level and component.

Run the search using the time range All time and limit the number of columns returned.

index=_internal | contingency maxcols=5 log_level component

Your results should appear something like this:

These results show you any components that might be causing issues in your Splunk deployment. The component field has more than 50 values. In this search, the maxcols argument limits the table to the 5 components with the highest counts.
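To focus on problem events, one option is to filter the events before building the table. The following sketch assumes that WARN and ERROR are among the log_level values in your _internal index. Adjust the filter to match the values in your own data.

```spl
index=_internal (log_level=ERROR OR log_level=WARN)
| contingency maxcols=5 log_level component
```

With the filter in place, the five columns are the components with the most warning and error events rather than the components with the most events overall.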


This documentation applies to the following versions of Splunk® Enterprise: 7.1.0, 7.1.1, 7.1.2, 7.1.3, 7.1.4, 7.1.5, 7.1.6, 7.1.7, 7.1.8, 7.1.9, 7.1.10, 7.2.0, 7.2.1, 7.2.2, 7.2.3, 7.2.4, 7.2.5, 7.2.6, 7.2.7, 7.2.8, 7.2.9, 7.2.10, 7.3.0, 7.3.1, 7.3.2, 7.3.3, 7.3.4, 7.3.5, 7.3.6, 7.3.7, 7.3.8, 7.3.9, 8.0.0, 8.0.1, 8.0.2, 8.0.3, 8.0.4, 8.0.5, 8.0.6, 8.0.7, 8.0.8, 8.0.9, 8.0.10, 8.1.0, 8.1.1, 8.1.2, 8.1.3, 8.1.4, 8.1.5, 8.1.6, 8.1.7, 8.1.8, 8.1.9, 8.1.10, 8.1.11, 8.1.12, 8.1.13, 8.1.14, 8.2.0, 8.2.1, 8.2.2, 8.2.3, 8.2.4, 8.2.5, 8.2.6, 8.2.7, 8.2.8, 8.2.9, 8.2.10, 8.2.11, 8.2.12, 9.0.0, 9.0.1, 9.0.2, 9.0.3, 9.0.4, 9.0.5, 9.0.6, 9.0.7, 9.0.8, 9.0.9, 9.0.10, 9.1.0, 9.1.1, 9.1.2, 9.1.3, 9.1.4, 9.1.5, 9.1.6, 9.1.7, 9.1.8, 9.1.9, 9.2.0, 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5, 9.2.6, 9.3.0, 9.3.1, 9.3.2, 9.3.3, 9.3.4, 9.4.0, 9.4.1, 9.4.2
