Enrich data with lookups using an Edge Processor

You can enrich your data by adding relevant information using a lookup. A lookup is a knowledge object that matches the field-value combinations in your event data with field-value combinations in a lookup table, and then adds the relevant information from the lookup table to your events. By creating and applying a pipeline that uses a lookup, you can configure an Edge Processor to add more information to the received data before sending that data to a destination.

For example, assume that the following events represent purchases from the fictitious Buttercup Games store:

| date | ip_address | product_id |
|---|---|---|
| 2023-11-22 | 107.3.146.207 | WC-SH-G04 |
| 2023-11-22 | 128.241.220.82 | DC-SG-G02 |
| 2023-11-23 | 194.215.205.19 | FS-SG-G03 |

These events contain the date when the purchase happened, the IP address of the customer who made the purchase, and the product ID of the item that was purchased. If you have a lookup table that maps the product IDs to product names, you can use it in an Edge Processor pipeline to add the corresponding product names to these events.

For more information about lookups, see About lookups in the Splunk Cloud Platform Knowledge Manager Manual.
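To make the matching behavior concrete, the following is a minimal Python sketch of what a lookup does conceptually. It is an illustration only, not how an Edge Processor is implemented. The `enrich` function and the in-memory `LOOKUP_TABLE` are hypothetical names, the product names come from the example later on this page, and stopping at the first matching row is a simplifying assumption.

```python
# Hypothetical in-memory stand-in for a lookup table that maps product IDs
# to product names. The values come from the example on this page.
LOOKUP_TABLE = [
    {"productId": "WC-SH-G04", "product_name": "World of Cheese"},
    {"productId": "DC-SG-G02", "product_name": "Dream Crusher"},
    {"productId": "FS-SG-G03", "product_name": "Final Sequel"},
]


def enrich(event, table, lookup_field, event_field, output_fields):
    """Return a copy of the event with output fields added from the first matching row."""
    enriched = dict(event)
    value = event.get(event_field)
    if value is None:
        # Nothing to match on, so the event passes through unchanged.
        return enriched
    for row in table:
        if row.get(lookup_field) == value:
            for name in output_fields:
                enriched[name] = row[name]
            break  # Assumption: only the first matching row is used.
    return enriched


event = {"date": "2023-11-22", "ip_address": "107.3.146.207", "product_id": "WC-SH-G04"}
print(enrich(event, LOOKUP_TABLE, "productId", "product_id", ["product_name"]))
```

An event whose product ID does not appear in the table is returned unchanged in this sketch.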

Limitations

Be aware of the following differences in lookup support between the Edge Processor solution and the Splunk platform:

- Edge Processors support CSV and KV Store lookups only. They do not support geospatial or external lookups.

- Edge Processors do not support the CIDR match type.

- Edge Processors support a maximum size of 200 MB for each lookup table.

- Edge Processors parse all lookup data as strings. In order for you to match a field from your events with a field from a lookup table, the event field must be a string field.

For example, an event field like http_status=200 is an integer field and cannot be matched with lookup fields. However, http_status="200" is a string field that can be matched with lookup fields. For more information about data types in SPL2, see Built-in data types in the SPL2 Search Manual.

- After updating lookup information in Splunk Cloud Platform, you might need to manually refresh the scpbridge connection to update the lookup datasets in the Edge Processor tenant. Additionally, it can take up to 4 hours before the Edge Processor service deploys that updated information to the Edge Processors that are using the lookup datasets. For more information, see Update lookup datasets in this topic.
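The string-matching limitation can be pictured with a short Python sketch. This is only a model of the rule described above, under the assumption that a non-string event value never matches. The `can_match` function is a hypothetical name and is not part of any Splunk API.

```python
def can_match(event_value, lookup_value):
    """Model the rule that lookup data is parsed as strings.

    Only an event value that is itself a string can equal a lookup value.
    """
    return isinstance(event_value, str) and event_value == lookup_value


print(can_match(200, "200"))    # False: http_status=200 is an integer field
print(can_match("200", "200"))  # True: http_status="200" is a string field
```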

Prerequisites

Before starting to configure lookups for Edge Processors, you must have the following:

- An Edge Processor that was created on or after December 8, 2023.

Edge Processors that were created earlier than this date cannot support lookups.

- A lookup table stored in one of the following ways:

- In a CSV file. Make sure that the file meets the restrictions described in About the CSV files in the Splunk Cloud Platform Knowledge Manager Manual.

- In a KV Store collection on the Splunk Cloud Platform deployment that is pair-connected with your Edge Processor tenant. Make sure that the KV Store collection meets the configuration requirements described in Special KV Store collection configuration for federated search in the Splunk Cloud Platform Knowledge Manager Manual.

The pair-connected Splunk Cloud Platform deployment is the deployment that was connected to the Edge Processor service during the first-time setup process for the Edge Processor solution. For more information, see First-time setup instructions for the Edge Processor solution.

The instructions on this page refer to an example scenario using a lookup dataset named prices.csv. If you'd like to follow along with these example configurations, then complete these steps to get the prices.csv file:

- Download the Prices.csv.zip file.

- Uncompress the Prices.csv.zip file. There is only one file in the ZIP file, prices.csv.

After meeting these prerequisites, perform the following steps to configure an Edge Processor to enrich the incoming event data using lookups:

- Create a lookup in the pair-connected Splunk Cloud Platform deployment.

- Confirm the availability of the lookup dataset.

- Create a pipeline.

- Configure your pipeline to enrich event data using a lookup.

- Save and apply your pipeline.

Create a lookup in the pair-connected Splunk Cloud Platform deployment

Start by creating your lookup in the Splunk Cloud Platform deployment that is pair-connected with your Edge Processor tenant. Doing this makes your lookup available as a lookup dataset in the tenant, which you can then import and use in Edge Processor pipelines.

The Edge Processor solution supports CSV lookups and KV Store lookups. To follow along with the example scenario described on this page, create a CSV lookup using the prices.csv file.

Creating CSV lookups for Edge Processors

For detailed instructions on creating a CSV lookup, see Define a CSV lookup in Splunk Web in the Splunk Cloud Platform Knowledge Manager Manual. When creating a CSV lookup for use in an Edge Processor, do the following:

- In Splunk Cloud Platform, upload the .csv or .csv.gz file containing your lookup table.

- (Optional) If you don't want other users to be able to see all of the contents of your lookup table, you can create a restricted view of the table by creating a lookup definition.

- Update the permissions associated with the CSV file or the lookup definition. The file or definition must be available to all apps, your Splunk Cloud Platform user account, and the service account used in the scpbridge connection that connects the Splunk Cloud Platform deployment with the Edge Processor tenant.

- Set the Object should appear in option to All apps (system).

- Make sure that Read permission for the file or definition is available to a role that is associated with your Splunk Cloud Platform user account.

- Make sure that Read permission is also available to the role used by the service account. Typically, the name of this role is scp_user, if you used the role name suggested in Create a role for the service account during the initial setup of the Edge Processor solution.

- Make sure that a role that is associated with your user account and the role used by the service account both have Read permission for the Destination app that is associated with the CSV file or lookup definition.

- Select Apps, then select Manage Apps.

- Find the app that your CSV file or lookup definition is associated with, and then select Permissions.

- Select Read permission for the necessary roles, and then select Save.

Creating KV Store lookups for Edge Processors

For detailed instructions on creating a KV Store lookup, see Define a KV Store lookup in Splunk Web in the Splunk Cloud Platform Knowledge Manager Manual. When creating a KV Store lookup for use in an Edge Processor, do the following:

- Create a lookup definition for your KV Store collection.

- Update the permissions associated with the lookup definition. The definition must be available to all apps, your Splunk Cloud Platform user account, and the service account used in the scpbridge connection that connects the Splunk Cloud Platform deployment with the Edge Processor tenant.

- Set the Object should appear in option to All apps (system).

- Make sure that Read permission for this definition is available to a role that is associated with your Splunk Cloud Platform user account.

- Make sure that Read permission is also available to the role used by the service account. Typically, the name of this role is scp_user, if you used the role name suggested in Create a role for the service account during the initial setup of the Edge Processor solution.

- Make sure that a role that is associated with your user account and the role used by the service account both have Read permission for the Destination app that is associated with the lookup definition.

- Select Apps, then select Manage Apps.

- Find the app that your lookup definition is associated with, and then select Permissions.

- Select Read permission for the necessary roles, and then select Save.

Confirm the availability of the lookup dataset

After creating your lookup in the pair-connected Splunk Cloud Platform deployment, confirm that the lookup is available as a dataset in your Edge Processor tenant.

- Log in to your Edge Processor tenant.

- Refresh the scpbridge connection by doing the following:

- Navigate to the Datasets page and find your lookup dataset. The dataset has the same name as the CSV file or the lookup definition.

If the Datasets page does not show your lookup dataset, then there might be a permissions error preventing the scpbridge connection from accessing the dataset. Verify that the role used by the service account for the scpbridge connection has read permission for your lookup table or definition. See Create a lookup in the pair-connected Splunk Cloud Platform deployment on this page for more information.

- Select your lookup dataset, and then select the Open in Search icon.

- In the Search page, select the Run icon.

If the search results pane displays information from your lookup dataset, then the lookup dataset is available in your tenant and ready to be used in pipelines. If the search results pane displays an error or 0 results, then there might be a permissions error preventing you from fully accessing the lookup dataset. In Splunk Cloud Platform, verify that your user account has read permission for your lookup table or definition. See Create a lookup in the pair-connected Splunk Cloud Platform deployment on this page for more information.

You now have a lookup dataset that you can use to enrich events in an Edge Processor pipeline. Next, start creating the pipeline.

Create a pipeline

- Navigate to the Pipelines page and then select New pipeline.

- Select Blank pipeline and then select Next.

- Specify a subset of the data received by the Edge Processor for this pipeline to process. If you want to use the sample data given in step 4 so that you can follow along with the example configurations described in later sections of this page, skip this step. To define a partition, complete these steps:

- Select the plus icon next to Partition or select the option that matches how you would like to create your partition in the Suggestions section.

- In the Field field, specify the event field that you want the partitioning condition to be based on.

- To specify whether the pipeline includes or excludes the data that meets the criteria, select Keep or Remove.

- In the Operator field, select an operator for the partitioning condition.

- In the Value field, enter the value that your partition should filter by to create the subset.

- Select Apply.

- Once you have defined your partition, select Next.

You can create more conditions for a partition in a pipeline by selecting the plus icon.

- (Optional) Enter or upload sample data for generating previews that show how your pipeline processes data.

The sample data must be in the same format as the actual data that you want to process. See Getting sample data for previewing data transformations for more information.

If you want to follow the configuration examples in the next section, then select CSV and then enter the following sample events, which represent three fictitious purchases made from a store website:

    date,ip_address,product_id
    2023-11-22,107.3.146.207,WC-SH-G04
    2023-11-22,128.241.220.82,DC-SG-G02
    2023-11-23,194.215.205.19,FS-SG-G03

- Select Next to confirm your sample data.

- Select the name of the destination that you want to send data to.

- (Optional) If you selected a Splunk platform S2S or Splunk platform HEC destination, you can configure index routing:

- Select one of the following options in the expanded destinations panel:

| Option | Description |
|---|---|
| Default | The pipeline does not route events to a specific index. If the event metadata already specifies an index, then the event is sent to that index. Otherwise, the event is sent to the default index of the Splunk platform deployment. |
| Specify index for events with no index | The pipeline only routes events to your specified index if the event metadata did not already specify an index. |
| Specify index for all events | The pipeline routes all events to your specified index. |

- If you selected Specify index for events with no index or Specify index for all events, then in the Index name field, select or enter the name of the index that you want to send your data to.

Be aware that the destination index is determined by a precedence order of configurations. See How does an Edge Processor know which index to send data to? for more information.

- Select Done to confirm the data destination.
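The three index routing options in the previous steps can be summarized as a small decision function. The following Python sketch is a simplified model only. The `resolve_index` function, the option keys, and the `main` placeholder for the deployment's default index are all hypothetical, and the sketch ignores the other configurations that take part in the precedence order mentioned above.

```python
def resolve_index(option, event_index, specified_index=None, default_index="main"):
    """Return the index an event would be routed to under each routing option.

    option: "default", "no_index_only", or "all_events" (hypothetical keys).
    event_index: index named in the event metadata, or None if absent.
    specified_index: index entered in the Index name field, if any.
    default_index: placeholder for the deployment's default index.
    """
    if option not in ("default", "no_index_only", "all_events"):
        raise ValueError(f"unknown option: {option}")
    if option == "all_events":
        # Specify index for all events: always use the specified index.
        return specified_index
    if event_index:
        # The event metadata already names an index, so it is kept.
        return event_index
    if option == "no_index_only":
        # Specify index for events with no index.
        return specified_index
    # Default option and no index in the event metadata.
    return default_index


print(resolve_index("no_index_only", None, "sales"))
print(resolve_index("all_events", "web", "sales"))
```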

You now have a simple pipeline that receives data and sends that data to a destination. In the next section, you'll configure this pipeline to enrich your data using information from your lookup dataset.

Configure your pipeline to enrich event data using a lookup

Add an Enrich events with lookup action to your pipeline. Configure this action to specify how the pipeline matches field-value combinations in the incoming event data with field-value combinations in a lookup dataset and adds information from that dataset to the events.

- (Optional) Select the Preview Pipeline icon to generate a preview that shows what the sample data looks like when it passes through the pipeline.

If you used the sample events described in the previous section, then the preview results panel displays the following:

| date | ip_address | product_id |
|---|---|---|
| 2023-11-22 | 107.3.146.207 | WC-SH-G04 |
| 2023-11-22 | 128.241.220.82 | DC-SG-G02 |
| 2023-11-23 | 194.215.205.19 | FS-SG-G03 |

- Select the plus icon in the Actions section, and then select Enrich events with lookup.

- Open the Lookup dataset menu, select the lookup dataset that you want to use, and then select Select lookup dataset.

For example, if you created a lookup dataset using the prices.csv file, then select prices.csv.

The Enrich events with lookups dialog box loads the information from your selected lookup dataset.

- In the Match fields area, define one or more pairs of lookup fields and event fields that you want to match. When these matched fields contain identical values, the pipeline adds data from the lookup dataset to the event.

For example, the product_id field from the sample data and the productId field from the prices.csv lookup dataset both contain product ID values used by the fictitious Buttercup Games store. If you match these fields, then whenever the pipeline receives an event that has WC-SH-G04 as a product_id value, the pipeline will update that event to include data from the lookup dataset row that has WC-SH-G04 as a productId value. To match these fields, configure the following settings in the Match fields area:

| Option name | Enter or select the following |
|---|---|
| Lookup field | productId |
| Event field | product_id |

- In the Output fields area, select the fields from the lookup dataset that you want to add to your events. You can choose to add all the fields from the dataset by selecting Output all fields.

For example, to add product names from the prices.csv lookup dataset to the sample events, select product_name.

- (Optional) Specify the action to take when an incoming event already contains the selected output fields:

- To replace the data in the event with the data from the lookup dataset, select Overwrite existing values in events. This setting is selected by default.

- To leave the existing data in the event unchanged, and only fill in empty or missing fields using data from the lookup dataset, deselect Overwrite existing values in events.

For example, consider a case where the pipeline receives an event that erroneously has WC-SH-G04 as a product_id value and Pony Run as a product_name value. According to the prices.csv dataset, the WC-SH-G04 product ID corresponds to the World of Cheese product name. When Overwrite existing values in events is selected, the pipeline changes the product_name value in the event from Pony Run to World of Cheese. When that option is not selected, the pipeline does not change the product_name value in the event.

- To confirm the configuration of your lookups, select Apply.

The pipeline editor adds an import statement and a lookup command to your pipeline.

- The import command imports the lookup dataset into the pipeline so that the pipeline can use it. See Importing datasets into Edge Processor pipelines for more information.

- The lookup command matches fields from the lookup dataset with fields from the incoming events, and then enriches the events by adding the specified output fields to the event. See lookup command overview in the SPL2 Search Manual for more information.

For example, this pipeline does the following:

- Imports the prices.csv lookup dataset.

- Matches the product_id field in incoming events with the productId field in the prices.csv dataset.

- Adds the corresponding product_name values from the prices.csv dataset into incoming events, overwriting any product_name values that might already exist in the events.

    import 'prices.csv' from /envs.splunk.buttercup.lookups

    $pipeline = | from $source
    | lookup 'prices.csv' productId AS product_id OUTPUT product_name
    | into $destination;

If you preview your pipeline again, the preview results panel displays the following:

| date | ip_address | product_id | product_name |
|---|---|---|---|
| 2023-11-22 | 107.3.146.207 | WC-SH-G04 | World of Cheese |
| 2023-11-22 | 128.241.220.82 | DC-SG-G02 | Dream Crusher |
| 2023-11-23 | 194.215.205.19 | FS-SG-G03 | Final Sequel |
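The effect of the Overwrite existing values in events setting described earlier in this section can be modeled with a few lines of Python. This is a sketch of the documented behavior rather than the actual implementation. The `apply_lookup_row` function is a hypothetical name, and treating both a missing field and an empty string as "empty" is an assumption.

```python
def apply_lookup_row(event, row, output_fields, overwrite=True):
    """Copy output fields from a matched lookup row into a copy of the event.

    With overwrite=True, lookup values replace existing event values.
    With overwrite=False, only missing or empty event fields are filled in.
    """
    enriched = dict(event)
    for name in output_fields:
        existing = enriched.get(name)
        if overwrite or existing is None or existing == "":
            enriched[name] = row[name]
    return enriched


row = {"productId": "WC-SH-G04", "product_name": "World of Cheese"}
event = {"product_id": "WC-SH-G04", "product_name": "Pony Run"}

print(apply_lookup_row(event, row, ["product_name"], overwrite=True)["product_name"])   # World of Cheese
print(apply_lookup_row(event, row, ["product_name"], overwrite=False)["product_name"])  # Pony Run
```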

You now have a pipeline that enriches the incoming data with additional information from a lookup dataset. In the next section, you'll save this pipeline and apply it to an Edge Processor.

Save and apply your pipeline

Be aware that when you apply a pipeline that uses a lookup or add a lookup to an applied pipeline, it can take some time for the Edge Processor to download and start using your lookup table. For example, a 200 MB lookup table takes approximately 10 minutes to download.

This download time does not disrupt data processing when you're adding a lookup to a pipeline that is already applied to an Edge Processor. However, when you initially apply a lookup pipeline to an Edge Processor, that pipeline does not start receiving or processing data until after the download is complete.

- To save your pipeline, do the following:

- Select Save pipeline.

- In the Name field, enter a name for your pipeline.

- (Optional) In the Description field, enter a description for your pipeline.

- Select Save.

If your pipeline is valid, the Edge Processor service prompts you to apply it to an Edge Processor.

- To apply this pipeline to an Edge Processor, do the following:

- In the Apply pipeline prompt, select Yes, apply.

- Select the Edge Processors that you want to apply the pipeline to, and then select Save.

You can only apply pipelines to Edge Processors that are in the Healthy status. Additionally, you must select an Edge Processor that was created on or after December 8, 2023 when applying a pipeline that contains a lookup.

It can take a few minutes for the Edge Processor service to finish applying your pipeline to an Edge Processor. During this time, the affected Edge Processors enter the Pending status. To confirm that the process completed successfully, do the following:

- Navigate to the Edge Processors page. Then, verify that the Instance health column for the affected Edge Processors shows that all instances are back in the Healthy status.

- Navigate to the Pipelines page. Then, verify that the Applied column for the pipeline contains the "The pipeline is applied" icon.

If the Edge Processor service returns this error message, then there might be a permissions problem:

Failed to apply <pipeline_name>. Pipeline must contain valid SPL2 and have valid source and destination datasets.

If you see this message, then verify that your Splunk Cloud Platform user account has read permission for your lookup table or definition. See Create a lookup in the pair-connected Splunk Cloud Platform deployment on this page for more information.

- (Optional) To confirm that the Edge Processor has finished downloading the lookup table and is using your pipeline to receive and process data, do the following:

- Navigate to the Edge Processors page.

- In the row that lists your Edge Processor, select the Actions icon and select View debug logs. The Search page opens.

- Select a time range for your search.

- Select the Run icon to search for Edge Processor log entries.

- Confirm that the search results include a recent log containing the message "finished download of lookup tables, edge processor updates should start". If you do not see this log, wait a few minutes and then run the search and check again.

- In the search results, confirm that after the log described previously, there is an additional log containing the message "otel-collector started successfully". If you do not see this log, wait a few minutes and then run the search and check again.

If you don't see any of these logs, then contact your Splunk representative for assistance.
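If you export the debug log messages, the check described in the previous steps can also be scripted. The following Python sketch assumes that you already have the log messages as a list of strings in chronological order, oldest first. The `lookup_pipeline_ready` function is a hypothetical helper, not part of any Splunk tool. Only the two message strings come from this page.

```python
DOWNLOAD_DONE = "finished download of lookup tables, edge processor updates should start"
COLLECTOR_STARTED = "otel-collector started successfully"


def lookup_pipeline_ready(log_messages):
    """Return True if the download message appears and the collector-start message follows it.

    Assumes log_messages is ordered from oldest to newest.
    """
    download_seen = False
    for message in log_messages:
        if DOWNLOAD_DONE in message:
            download_seen = True
        elif download_seen and COLLECTOR_STARTED in message:
            return True
    return False


logs = [
    "some earlier log entry",
    DOWNLOAD_DONE,
    COLLECTOR_STARTED,
]
print(lookup_pipeline_ready(logs))
```

Because search results are often displayed newest first, you might need to reverse the exported list before passing it to a check like this one.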

The Edge Processor that you applied the pipeline to can now enrich the event data that it receives by adding information from the lookup dataset. For information on how to confirm that your data is being processed and routed as expected, see Verify your Edge Processor and pipeline configurations.

Update lookup datasets

The information in your lookup datasets comes from the pair-connected Splunk Cloud Platform deployment. To update a lookup dataset that your Edge Processor is using to enrich data, start by updating the lookup table or definition in Splunk Cloud Platform. Then, synchronize that updated information between Splunk Cloud Platform, the Edge Processor tenant, and the Edge Processor.

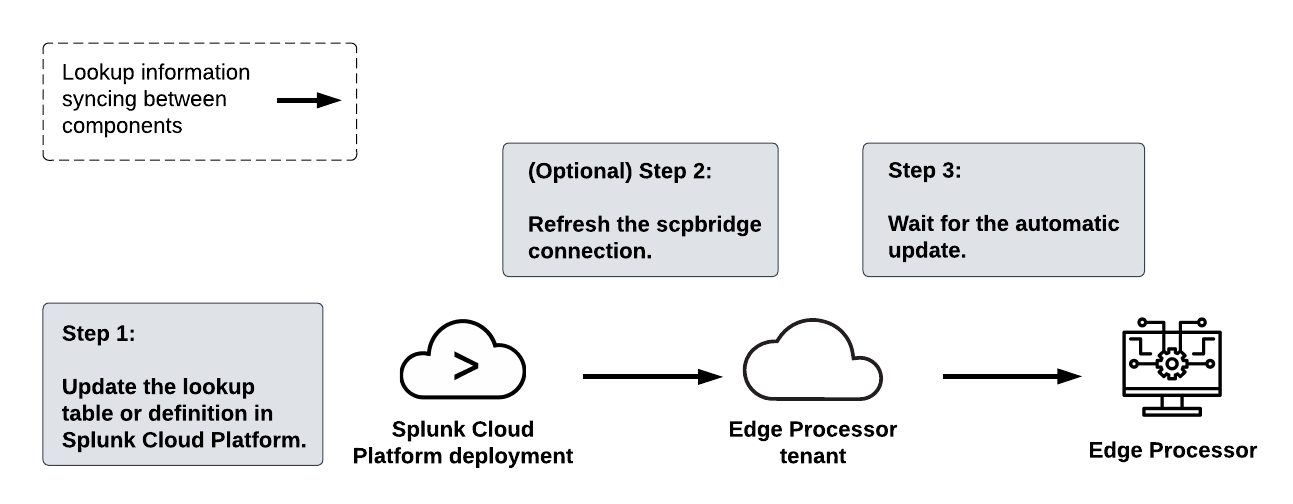

The following diagram summarizes the steps for updating a lookup dataset:

To update a lookup dataset, complete these steps:

- In the pair-connected Splunk Cloud Platform deployment, update the information in the lookup table or definition corresponding to the lookup dataset.

- (Optional) If you added any new columns to the lookup table, and you want to use the Enrich events with lookups interface when configuring the lookup in your pipeline, then you need to refresh the scpbridge connection in order for the interface to display the correct columns. In the Edge Processor tenant, do the following:

- Wait for the Edge Processor tenant to send the updated information to the Edge Processor. This update occurs when one of the following conditions is met:

- 4 hours have passed since the last time the Edge Processor tenant updated the lookup dataset. The tenant automatically updates all the lookup datasets used by Edge Processors every 4 hours.

- A change is made to the configuration of the lookup command or the Enrich events with lookup action in the applied pipeline. After these changes are saved, the Edge Processor will download the latest lookup dataset.
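The two conditions above amount to a simple rule, sketched here in Python. This is a model of the documented timing only. The `edge_processor_gets_update` function and its parameters are hypothetical names, and the sketch assumes that you know when the tenant last updated the lookup dataset.

```python
from datetime import datetime, timedelta

# The tenant updates lookup datasets used by Edge Processors every 4 hours.
REFRESH_INTERVAL = timedelta(hours=4)


def edge_processor_gets_update(last_refresh, now, lookup_config_changed):
    """Return True if either documented condition for an update is met."""
    interval_elapsed = (now - last_refresh) >= REFRESH_INTERVAL
    return lookup_config_changed or interval_elapsed


last_refresh = datetime(2023, 12, 8, 9, 0)
print(edge_processor_gets_update(last_refresh, datetime(2023, 12, 8, 10, 0), False))  # False
print(edge_processor_gets_update(last_refresh, datetime(2023, 12, 8, 13, 0), False))  # True
print(edge_processor_gets_update(last_refresh, datetime(2023, 12, 8, 10, 0), True))   # True
```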

Performance benchmarks for lookups in Edge Processors

These are benchmarks for expected performance but are not guaranteed.

When you apply a pipeline that uses a lookup or add a lookup to an applied pipeline, it can take some time for the Edge Processor to download and start using the lookup table. It takes approximately 10 minutes to download a 200 MB lookup table.

This download time does not disrupt data processing when you're adding a lookup to a pipeline that is already applied to an Edge Processor. However, when you initially apply a lookup pipeline to an Edge Processor, that pipeline does not start receiving or processing data until after the download is complete.

This benchmark was determined based on performance testing with these configurations:

- A pipeline that uses the rex command to extract 1 event, and then uses the lookup command to match 1 existing field and add 1 new field.

- A lookup table that contains 2 columns and 1 million rows. The file size of this table is 200 MB.

This table was used in performance testing specifically. Edge Processors have also been tested using lookup tables that contain up to 20 columns.
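For rough planning, the benchmark can be turned into a back-of-the-envelope estimate. The following Python sketch assumes that download time scales linearly with table size, which this page does not state. Actual times depend on your network and environment, so treat the result as an approximation only. The `estimate_download_minutes` function is a hypothetical helper.

```python
# Benchmark from this page: a 200 MB lookup table takes about 10 minutes to download.
BENCHMARK_SIZE_MB = 200
BENCHMARK_MINUTES = 10


def estimate_download_minutes(table_size_mb):
    """Estimate download time, assuming time scales linearly with table size."""
    if not 0 < table_size_mb <= BENCHMARK_SIZE_MB:
        # 200 MB is the maximum supported size for a lookup table.
        raise ValueError("table size must be greater than 0 and at most 200 MB")
    return table_size_mb * BENCHMARK_MINUTES / BENCHMARK_SIZE_MB


print(estimate_download_minutes(50))   # 2.5
print(estimate_download_minutes(200))  # 10.0
```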


This documentation applies to the following versions of Splunk Cloud Platform™: 9.0.2209, 9.0.2303, 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408, 9.3.2411 (latest FedRAMP release)
