How the Edge Processor solution transforms data

You can use the Edge Processor solution to transform your data in a wide variety of ways, including but not limited to:

- Breaking multiline data into distinct events

- Assigning event timestamps

- Extracting raw data values into top-level event fields

- Masking or updating data values

Some of these transformations can also be applied by other Splunk software that is part of your end-to-end data ingestion pathway, such as forwarders and indexers. When you use an Edge Processor to process and route data, the way that your data is ultimately transformed varies depending on the specific combination of data sources and destinations that are involved. For example, in some cases, the data source assigns the event timestamps. In other cases, the Edge Processor assigns the timestamps, and you might need to configure a pipeline to extract date and time information from the raw data in order to create the event timestamp.

This page explains how and where your data is transformed as it passes through an Edge Processor.

- For a high-level summary of how an Edge Processor transforms various types of data as it receives that data, passes it through pipelines, and then exports the data to a destination, see Data transformation overview.

- For details about how your data is transformed for each specific combination of data sources and destinations that the Edge Processor supports, as well as guidance on how to configure certain transformations, see the following sections:

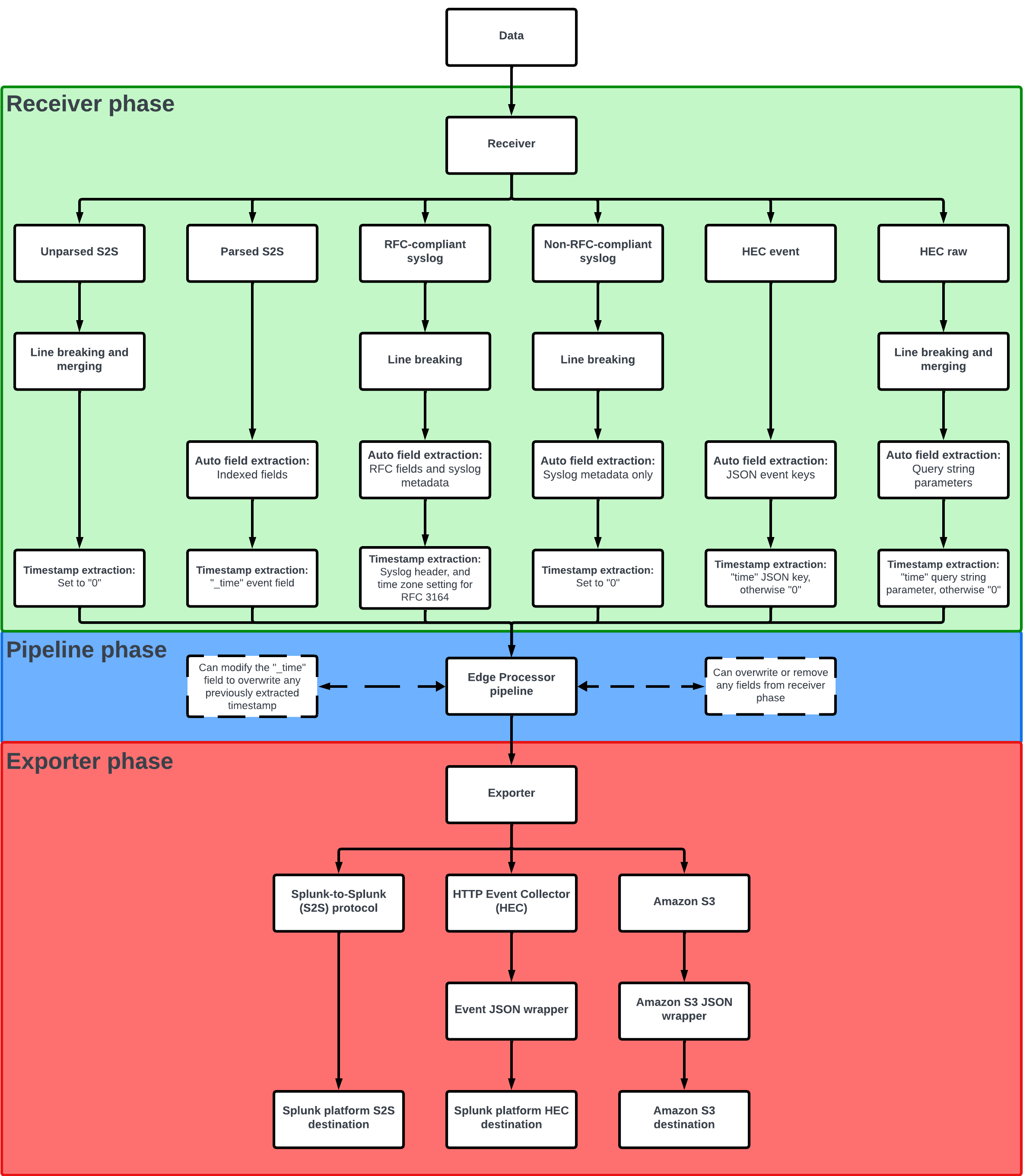

Data transformation overview

When an Edge Processor processes data, the data goes through the following 3 phases of transformations. The exact transformations that take place in each phase vary depending on the nature of the data.

- Receiver phase: The Edge Processor receives the data from a data source, and completes preliminary transformations to prepare the data before passing it to the pipelines.

- Pipeline phase: The pipelines that are applied to the Edge Processor route and transform the data according to SPL2 configurations.

- Exporter phase: The Edge Processor completes finalizing changes to ensure that the data is compatible for storage in the specified destination, and then sends the transformed data out to that destination.

During the receiver phase, the specific transformations that take place are determined by the type of data that the Edge Processor received. The supported data can be categorized into the following types:

- Unparsed S2S: Data that comes from other Splunk software and is not fully parsed. This corresponds to data from a universal forwarder that does not have the INDEXED_EXTRACTIONS property configured.

- Parsed S2S: Data that comes from other Splunk software and is fully parsed. This corresponds to data from a heavy forwarder or a universal forwarder that has the INDEXED_EXTRACTIONS property configured.

- RFC-compliant syslog: Syslog data that is formatted in a way that complies with the specified RFC protocol. Edge Processors can be configured to use RFC 3164, 5424, or 6587.

- Non-RFC-compliant syslog: Syslog data that does not comply with the specified RFC protocol.

- HEC raw: Data sent by an HTTP client to the Edge Processor through the services/collector/raw HEC endpoint.

- HEC event: Data sent by an HTTP client to the Edge Processor through the services/collector HEC endpoint.

The following flowchart outlines the data transformations that take place as data passes through an Edge Processor.

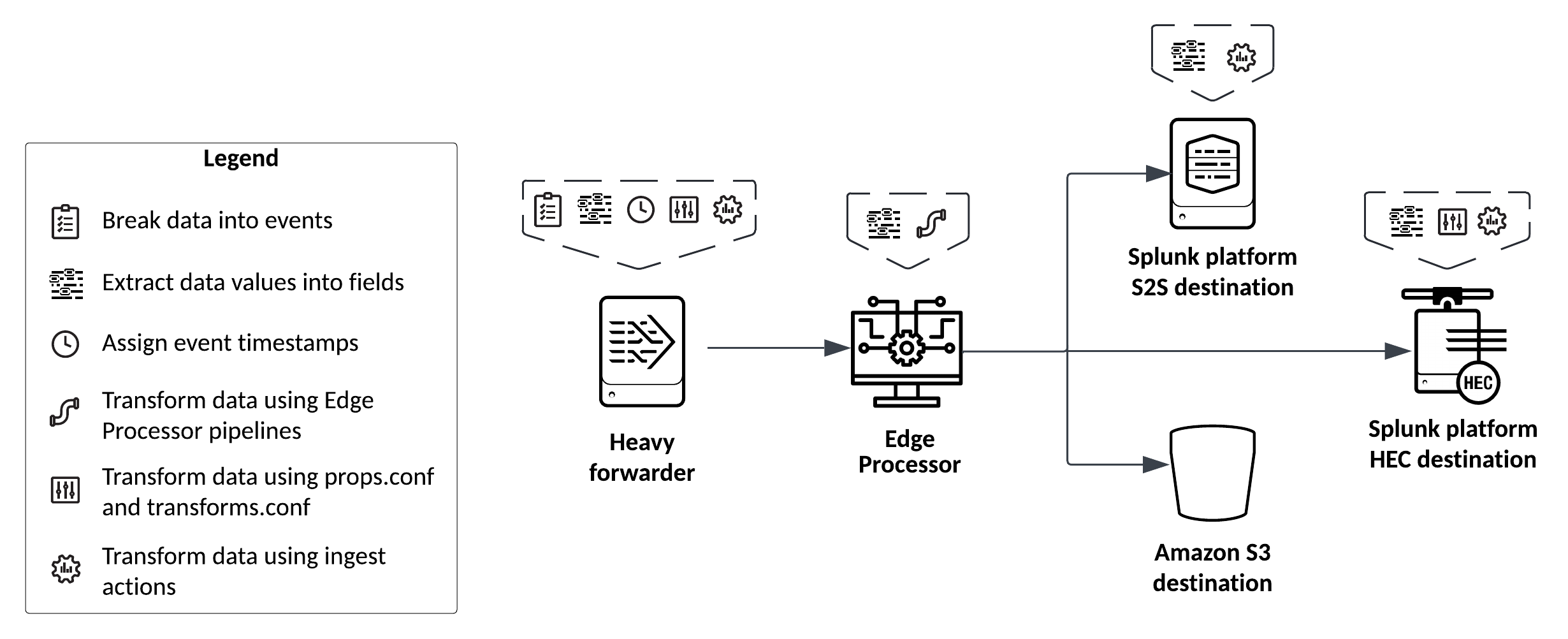

Heavy forwarder

If your Edge Processor receives the data from a heavy forwarder, then your data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | The heavy forwarder breaks the data into events based on the configurations in the props.conf file. | Configure line breaking and merging in the props.conf file of the heavy forwarder. | |
| Extract data values into event fields | First, the heavy forwarder extracts the indexed fields specified by the props.conf and transforms.conf files. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The heavy forwarder assigns event timestamps based on the configurations in the props.conf file. | Configure timestamp assignment in the props.conf file of the heavy forwarder. | |
| Other data transformations | First, the heavy forwarder transforms data based on the configurations in the props.conf and transforms.conf files, including ingest actions. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a heavy forwarder and an Edge Processor before being sent to a destination:

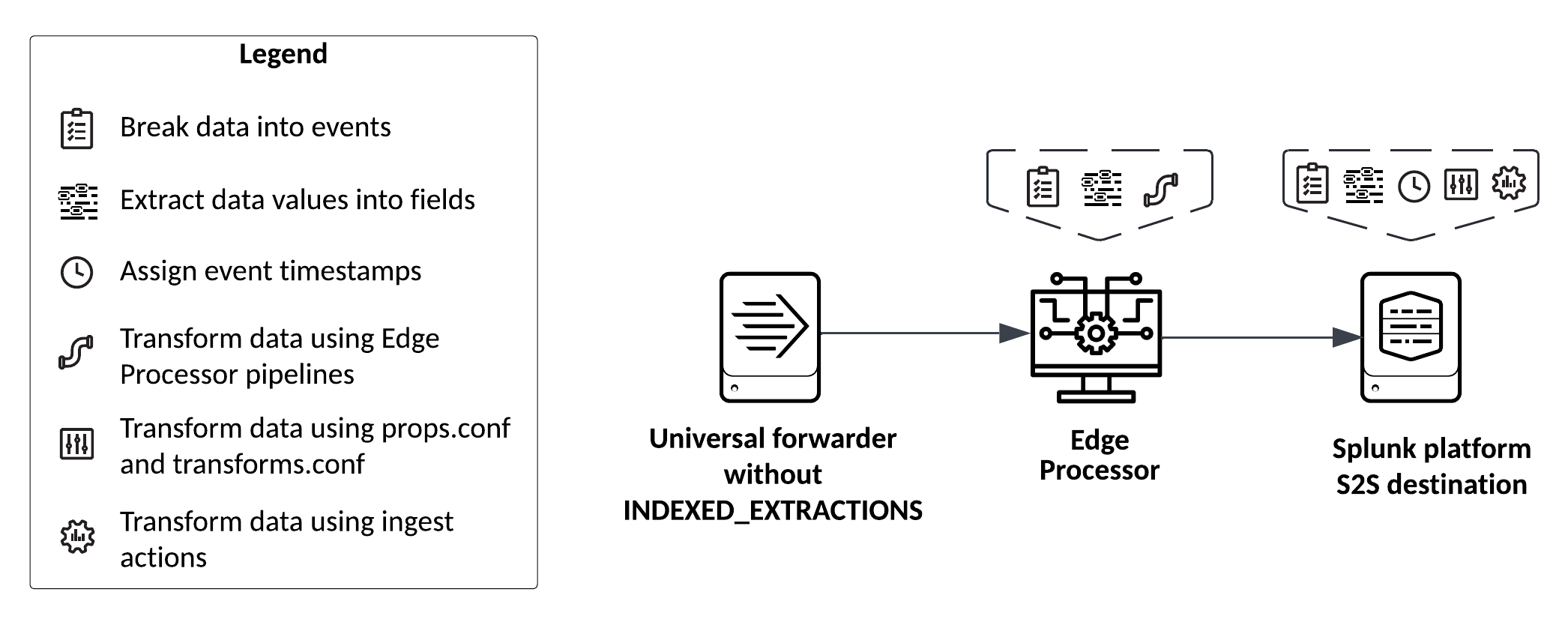

Universal forwarder without INDEXED_EXTRACTIONS

If your Edge Processor receives the data from a universal forwarder that does not have the INDEXED_EXTRACTIONS property configured in the props.conf file, then the exact transformations that your data goes through depend on the kind of destination that the Edge Processor sends the data to.

Refer to the section corresponding to your data destination:

Splunk platform S2S destination

If your Edge Processor receives data from a universal forwarder without the INDEXED_EXTRACTIONS property and sends that data to a Splunk platform S2S destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | If the data's source type matches a source type defined in the Edge Processor service, then the Edge Processor breaks the data into events based on the configuration settings in the source type. | Maintain the same line breaking configurations in both the Edge Processor service and the Splunk platform. | |
| Extract data values into event fields | First, the Edge Processor extracts fields based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The Splunk platform assigns event timestamps based on the configuration settings in the props.conf file. | Configure timestamp assignment in the props.conf file of the Splunk platform. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a universal forwarder without INDEXED_EXTRACTIONS and an Edge Processor before being sent to a Splunk platform S2S destination:

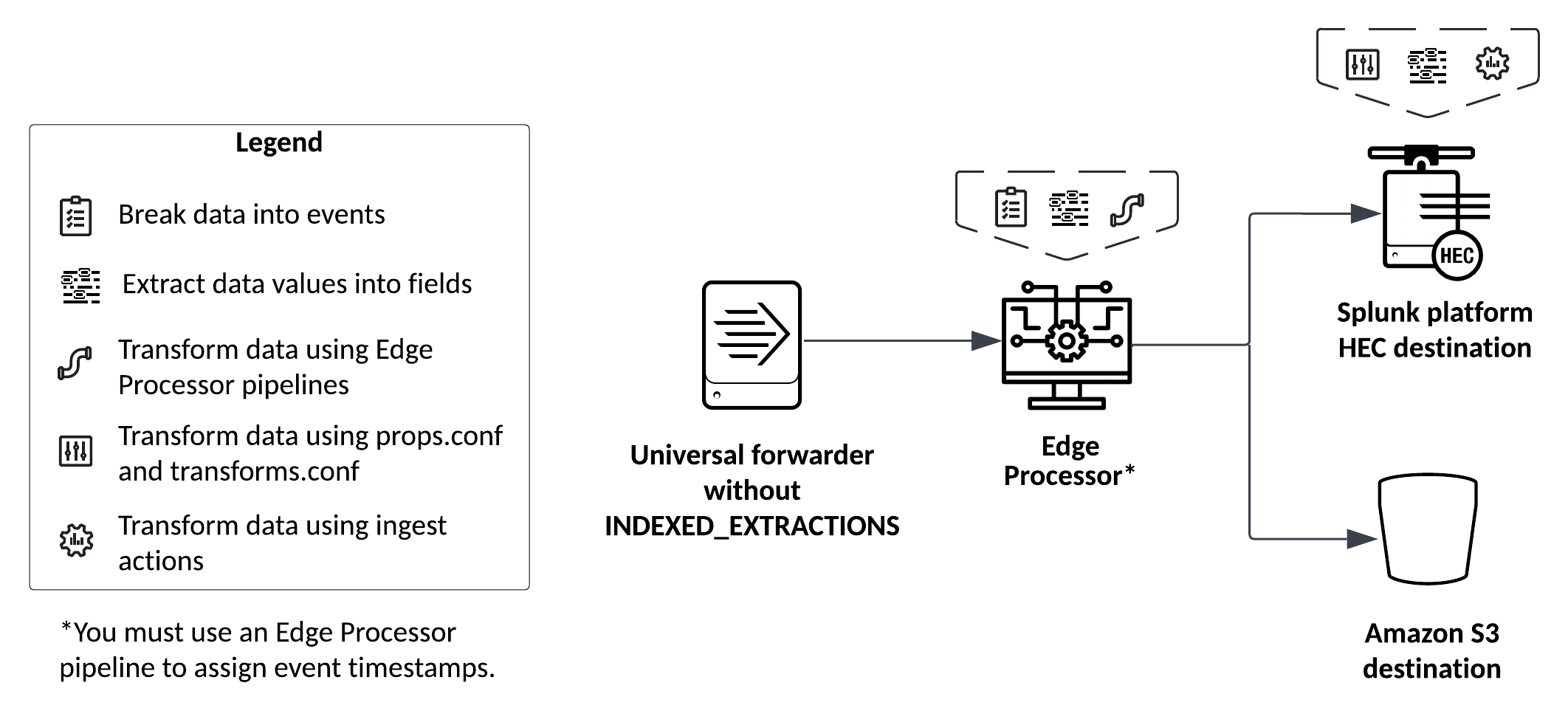
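To make the idea of breaking multiline data into events more concrete, the following Python sketch splits a block of text wherever a new line begins with a date. It is only an illustration of the concept, not how the Edge Processor or the Splunk platform implements line breaking. The `EVENT_START` rule and the sample data are assumptions made up for this example.

```python
import re
from typing import List

# Hypothetical rule: a new event starts at a line that begins with a date
# such as "2024-03-01 ". The newline before that date is the event boundary.
EVENT_START = r"\n(?=\d{4}-\d{2}-\d{2} )"


def break_into_events(data: str) -> List[str]:
    """Split raw text into events at each boundary matched by EVENT_START."""
    return [chunk for chunk in re.split(EVENT_START, data) if chunk]


data = (
    "2024-03-01 12:30:45 ERROR request failed\n"
    "  at handler.process\n"
    "  at server.run\n"
    "2024-03-01 12:30:46 INFO request retried"
)

events = break_into_events(data)
for number, event in enumerate(events, start=1):
    print(f"event {number}: {event!r}")
```

The stack trace lines stay attached to the first event because they do not begin with a date. If two components in the pathway applied different boundary rules to the same data, they could disagree about where events start and end, which is one reason to keep line breaking configurations consistent.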

Splunk platform HEC or Amazon S3 destination

If your Edge Processor receives data from a universal forwarder without the INDEXED_EXTRACTIONS property and sends that data to a Splunk platform HEC or Amazon S3 destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | If the data's source type matches a source type defined in the Edge Processor service, then the Edge Processor breaks the data into events based on the configuration settings in the source type. | Make sure that a matching source type with the appropriate line breaking and merging configurations is defined in the Edge Processor service. | Add source types for Edge Processors |
| Extract data values into event fields | First, the Edge Processor extracts fields based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | By default, the Edge Processor sets a timestamp of "0". You must configure a pipeline to assign event timestamps, or else the Splunk platform will use the current time as the event timestamp. The Edge Processor renames the | Use an Edge Processor pipeline to assign event timestamps by using the SPL2 command eval _time = strptime(<field>, <format>) to create the _time field. | Configure a pipeline to assign event timestamps |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a universal forwarder without INDEXED_EXTRACTIONS and an Edge Processor before being sent to a Splunk platform HEC or Amazon S3 destination:

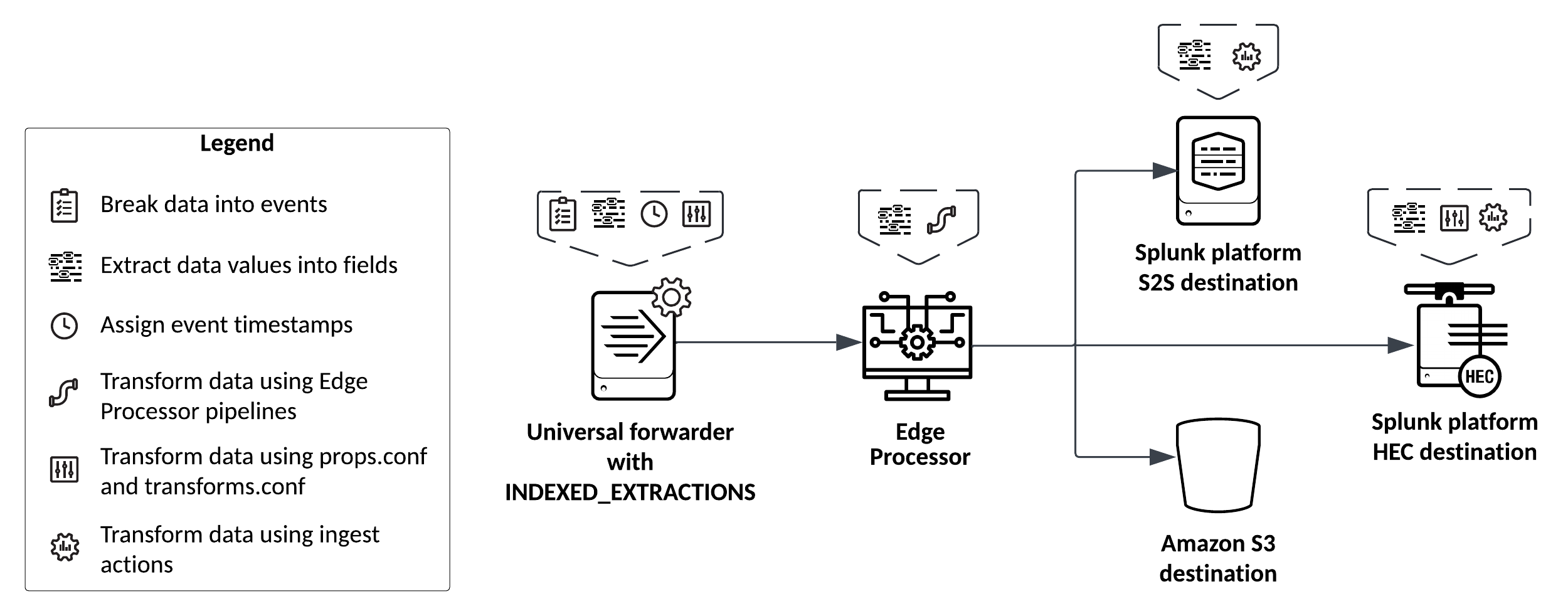
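The timestamp assignment described in the preceding table amounts to finding date and time text in the raw data and converting it to an epoch value. The following Python sketch illustrates that idea outside of SPL2. The event dictionary, the regular expression, the format string, and the assumption that the source logs in UTC are all hypothetical choices for this example, and Python's `datetime.strptime` is used only as an analogy for the SPL2 `strptime` function.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Hypothetical pattern and format for timestamps such as "2024-03-01 12:30:45".
TIMESTAMP_PATTERN = r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}"
TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M:%S"


def extract_epoch(raw: str) -> Optional[float]:
    """Return the first timestamp found in raw as epoch seconds, or None."""
    match = re.search(TIMESTAMP_PATTERN, raw)
    if match is None:
        return None
    parsed = datetime.strptime(match.group(0), TIMESTAMP_FORMAT)
    # Assumption: the source logs in UTC, because the text carries no offset.
    return parsed.replace(tzinfo=timezone.utc).timestamp()


# A stand-in for an event that arrives with the default timestamp of 0.
event = {"_raw": "2024-03-01 12:30:45 ERROR disk full", "_time": 0}

epoch = extract_epoch(event["_raw"])
if epoch is not None:
    event["_time"] = epoch

print(event["_time"])
```

If no timestamp can be found, the sketch leaves the default value in place, which mirrors the case where the destination falls back to the current time.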

Universal forwarder with INDEXED_EXTRACTIONS

If your Edge Processor receives the data from a universal forwarder that has the INDEXED_EXTRACTIONS property configured in the props.conf file, then your data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | The universal forwarder breaks the data into events based on the configurations in the props.conf file. | Configure line breaking and merging in the props.conf file of the universal forwarder. | |
| Extract data values into event fields | First, the universal forwarder extracts the indexed fields specified by the props.conf and transforms.conf files. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The universal forwarder assigns event timestamps based on the configurations in the props.conf file. | Configure timestamp assignment in the props.conf file of the universal forwarder. | |
| Other data transformations | First, the universal forwarder transforms data based on the configurations in the props.conf and transforms.conf files. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a universal forwarder that has INDEXED_EXTRACTIONS configured and an Edge Processor before being sent to a destination:

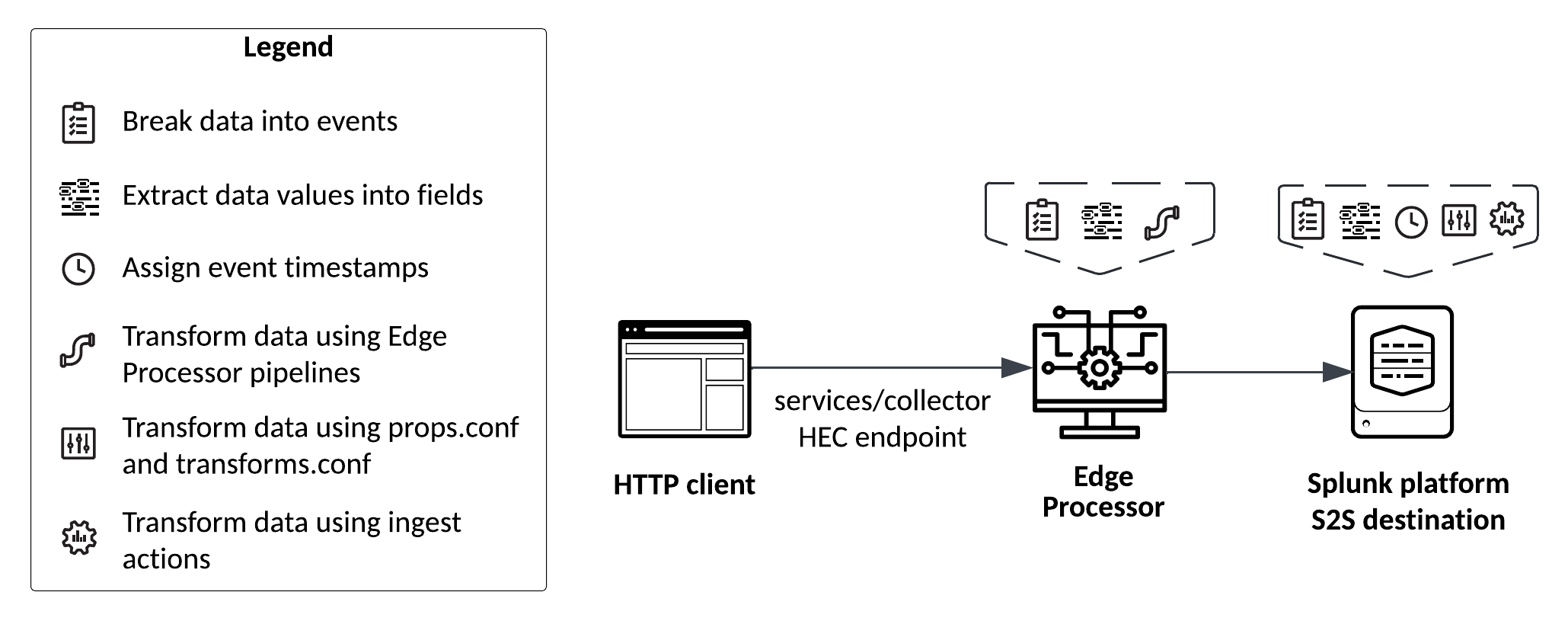

HTTP client using the services/collector HEC endpoint

If your Edge Processor receives the data from an HTTP client through the services/collector HTTP Event Collector (HEC) endpoint, then the exact transformations that your data goes through depend on the kind of destination that the Edge Processor sends the data to.

Refer to the section corresponding to your data destination:

Splunk platform S2S destination

If your Edge Processor receives data through the services/collector HEC endpoint and sends that data to a Splunk platform S2S destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | The Edge Processor treats each top-level JSON object in the body of the HEC request as one distinct event. | Configure line breaking and merging in the props.conf file of the Splunk platform. | |
| Extract data values into event fields | First, if the JSON object in the body of the HEC request contains any of these keys, the Edge Processor extracts them into event fields: fields, host, index, source, sourcetype, and time. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The Splunk platform assigns event timestamps based on the configuration settings in the props.conf file. | Configure timestamp assignment in the props.conf file of the Splunk platform. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through the services/collector HEC endpoint and an Edge Processor before being sent to a Splunk platform S2S destination:

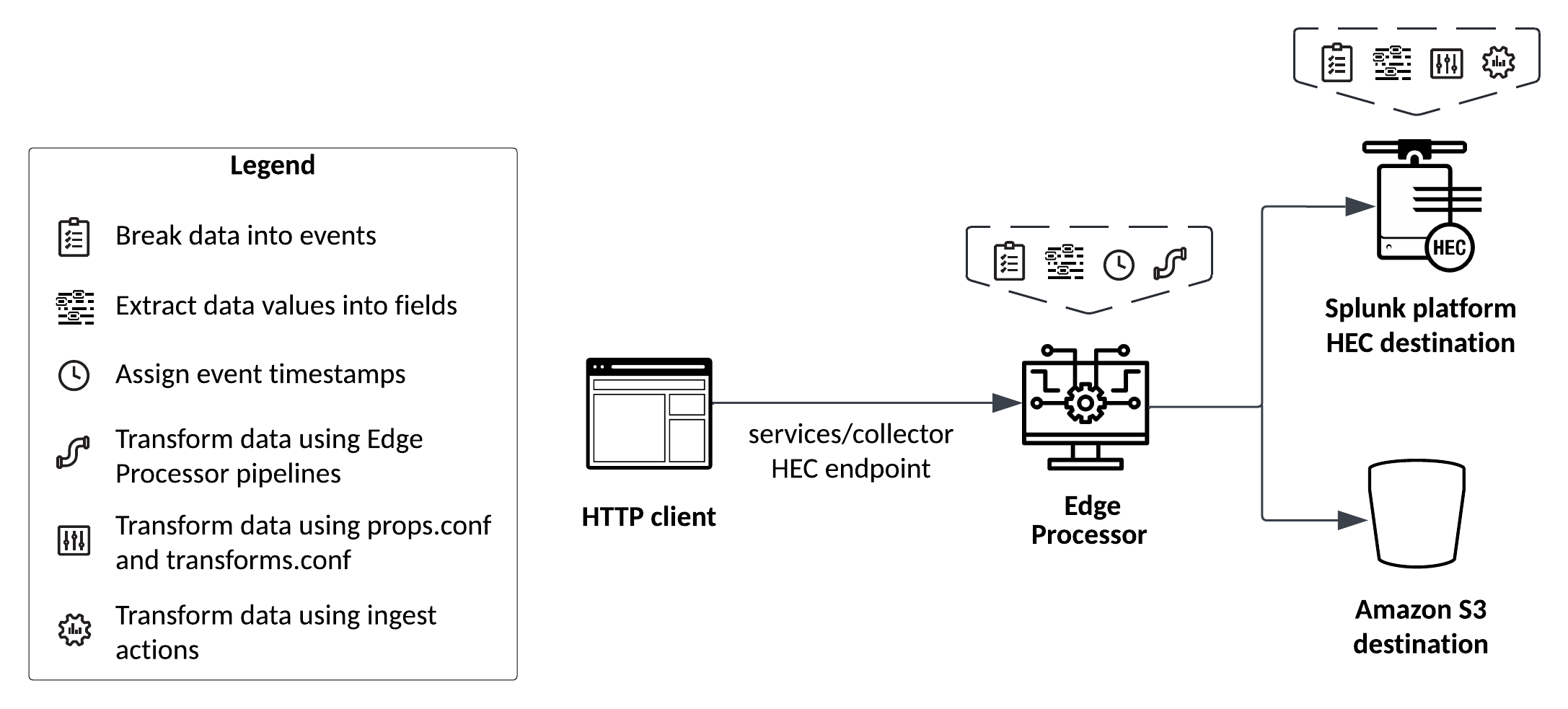
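As an illustration of what "each top-level JSON object is one distinct event" means, the following Python sketch walks a request body that contains several JSON objects back to back and returns one dictionary per object. It is a conceptual model only and makes no claim about the Edge Processor's internal implementation. The sample body and the function name are assumptions for this example.

```python
import json
from typing import Any, Dict, List

# Keys that this page lists as being extracted into event fields.
METADATA_KEYS = ("fields", "host", "index", "source", "sourcetype", "time")


def split_hec_body(body: str) -> List[Dict[str, Any]]:
    """Return one dictionary per top-level JSON object found in body."""
    decoder = json.JSONDecoder()
    events: List[Dict[str, Any]] = []
    position = 0
    while position < len(body):
        # Skip whitespace between objects; raw_decode does not skip it.
        if body[position].isspace():
            position += 1
            continue
        parsed, position = decoder.raw_decode(body, position)
        events.append(parsed)
    return events


# Hypothetical request body with two events sent in one request.
body = (
    '{"event": "first event", "host": "web-01", "sourcetype": "app_log"}\n'
    '{"event": "second event", "time": 1709296245}'
)

for parsed_event in split_hec_body(body):
    metadata = {key: parsed_event[key] for key in METADATA_KEYS if key in parsed_event}
    print(parsed_event["event"], metadata)
```

Because the event boundaries come from the JSON structure itself, no line breaking rule is involved at this stage.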

Splunk platform HEC or Amazon S3 destination

If your Edge Processor receives data through the services/collector HEC endpoint and sends that data to a Splunk platform HEC or Amazon S3 destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | The Edge Processor treats each top-level JSON object in the body of the HEC request as one distinct event. | None. You cannot change how the Edge Processor or the Splunk platform breaks data into events when receiving data through the services/collector HEC endpoint. | Using the services/collector endpoint in Edge Processors |
| Extract data values into event fields | First, if the JSON object in the body of the HEC request contains any of these keys, the Edge Processor extracts them into event fields: fields, host, index, source, sourcetype, and time. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The services/collector HEC endpoint assigns event timestamps based on the time key in the HTTP request body. If the time key is not specified, then the endpoint does not assign timestamps. The Edge Processor renames the If none of the above occur, then the Splunk platform will use the current time as the timestamp. | Configure your HTTP application to send a timestamp in UNIX time format as the value of the time key. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through the services/collector HEC endpoint and an Edge Processor before being sent to a Splunk platform HEC or Amazon S3 destination:

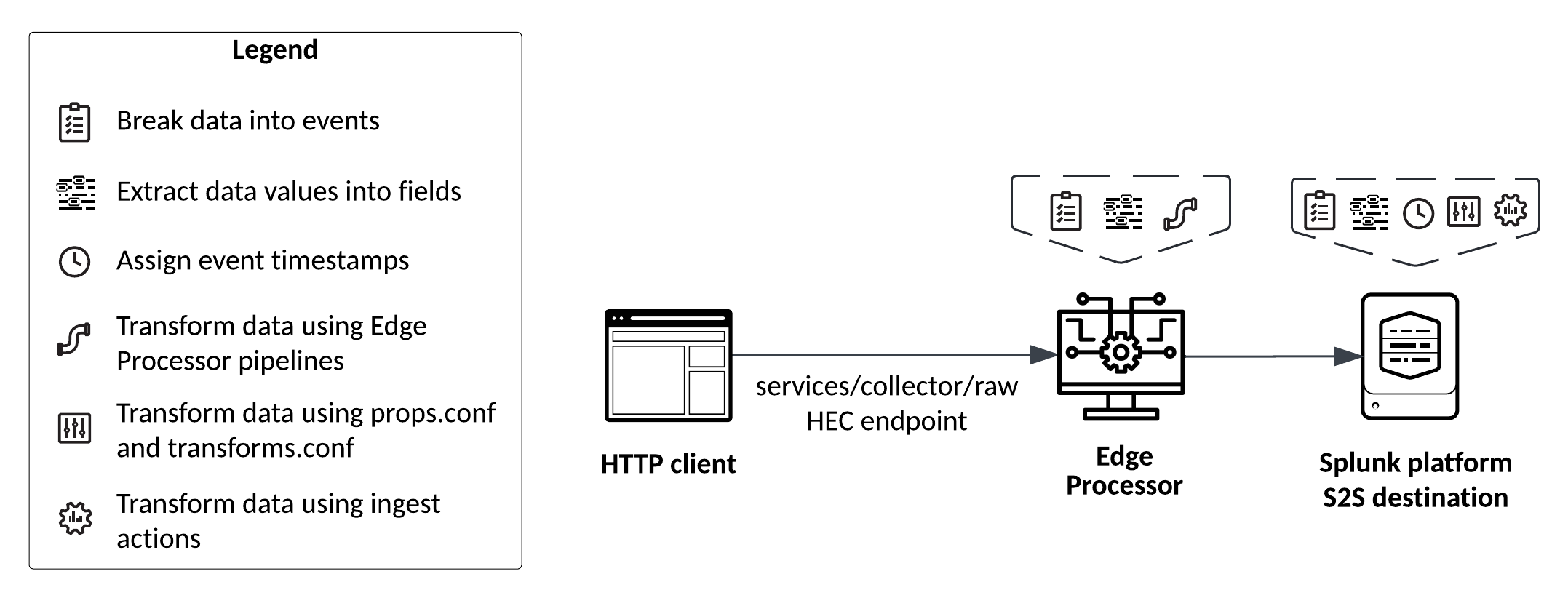

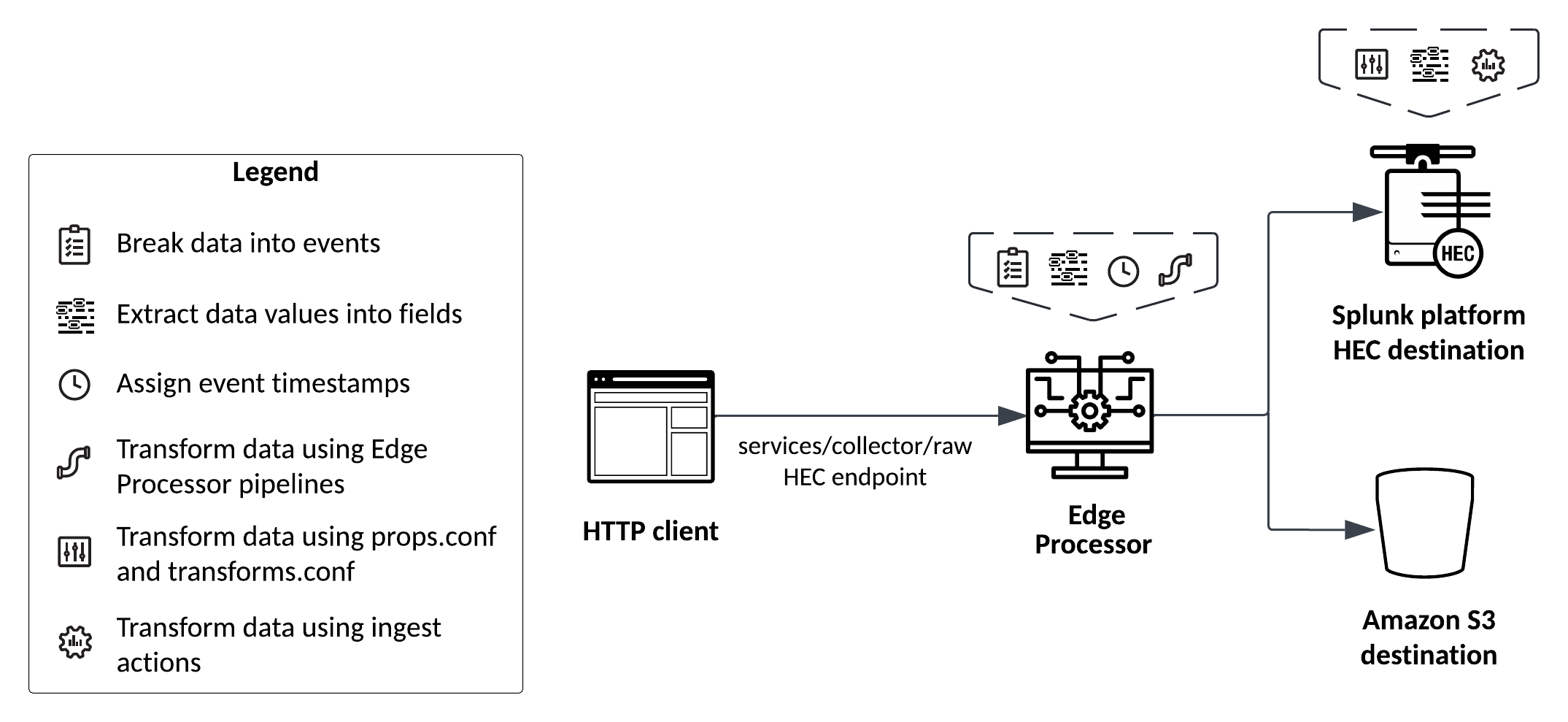
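For the services/collector endpoint, the practical step on the client side is to include a UNIX timestamp as the value of the time key. The following Python sketch shows one way an HTTP application might build such a request body. The function name and the sample host, source type, and event text are hypothetical, and the sketch only builds the body without sending it.

```python
import json
import time
from typing import Optional


def build_hec_event(event: str, host: str, sourcetype: str,
                    timestamp: Optional[float] = None) -> str:
    """Build a JSON body for the services/collector endpoint with a time key."""
    payload = {
        # UNIX time in seconds; fall back to the current time if none is given.
        "time": time.time() if timestamp is None else timestamp,
        "host": host,
        "sourcetype": sourcetype,
        "event": event,
    }
    return json.dumps(payload)


# Hypothetical event with an explicit timestamp taken from the application.
body = build_hec_event("user login succeeded", "web-01", "app_log",
                       timestamp=1709296245)
print(body)
```

Setting the time key at the source keeps the timestamp tied to when the event actually happened rather than to when a downstream component received it.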

HTTP client using the services/collector/raw HEC endpoint

If your Edge Processor receives the data from an HTTP client through the services/collector/raw HTTP Event Collector (HEC) endpoint, then the exact transformations that your data goes through depend on the kind of destination that the Edge Processor sends the data to.

When working with JSON-formatted event data, use the services/collector endpoint instead of the services/collector/raw endpoint. Otherwise, your data might not be transformed as expected.

Refer to the section corresponding to your data destination:

Splunk platform S2S destination

If your Edge Processor receives data through the services/collector/raw HEC endpoint and sends that data to a Splunk platform S2S destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | If the data's source type matches a source type defined in the Edge Processor service, then the Edge Processor breaks the data into events based on the configuration settings in the source type. | Maintain the same line breaking configurations in both the Edge Processor service and the Splunk platform. | |
| Extract data values into event fields | First, if the HEC request contains any of these query string parameters, the Edge Processor extracts them into event fields: host, index, source, sourcetype, and time. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The Splunk platform assigns event timestamps based on the configuration settings in the props.conf file. | Configure timestamp assignment in the props.conf file of the Splunk platform. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through the services/collector/raw HEC endpoint and an Edge Processor before being sent to a Splunk platform S2S destination:

Splunk platform HEC or Amazon S3 destination

If your Edge Processor receives data through the services/collector/raw HEC endpoint and sends that data to a Splunk platform HEC or Amazon S3 destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | If the data's source type matches a source type defined in the Edge Processor service, then the Edge Processor breaks the data into events based on the configuration settings in the source type. | Make sure that a matching source type with the appropriate line breaking and merging configurations is defined in the Edge Processor service. | Add source types for Edge Processors |
| Extract data values into event fields | First, if the HEC request contains any of these query string parameters, the Edge Processor extracts them into event fields: host, index, source, sourcetype, and time. | Depending on your particular business requirements and use cases, you might need to configure field extractions at different points of the data ingestion pathway. | |
| Assign event timestamps | The services/collector/raw HEC endpoint assigns event timestamps based on the time query string parameter. If the time query string parameter is not specified, then the endpoint sets a timestamp of "0". The Edge Processor renames the If none of the above occur, then the Splunk platform will use the current time as the timestamp. | If your HEC request includes the time query string parameter, then let the services/collector/raw endpoint determine the event timestamp. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through the services/collector/raw HEC endpoint and an Edge Processor before being sent to a Splunk platform HEC or Amazon S3 destination:

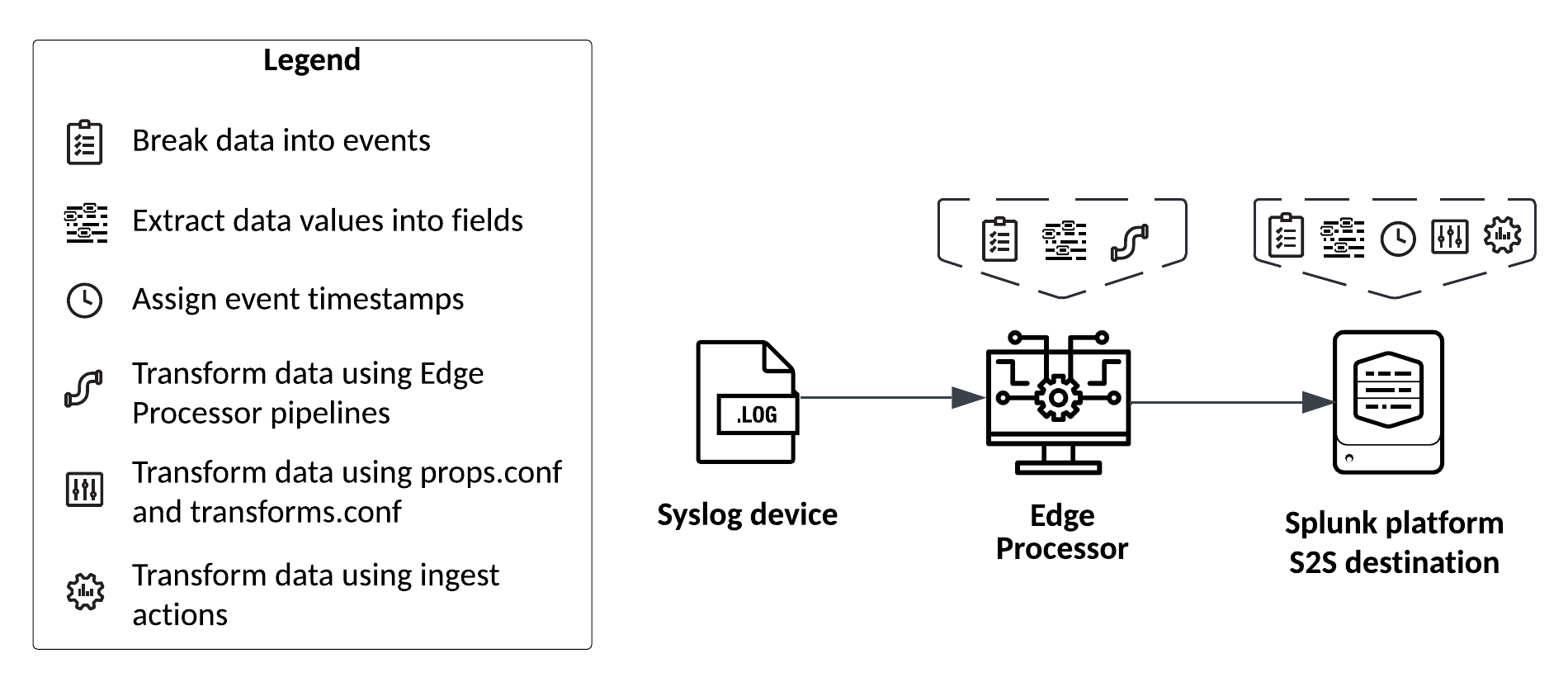
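With the services/collector/raw endpoint, metadata travels in the query string rather than in the request body. The following Python sketch shows how a client might assemble such a URL with the parameters that this page lists. The base URL, port, and parameter values are placeholders invented for this example, and the sketch builds the URL without sending a request.

```python
from urllib.parse import urlencode

# Query string parameters that this page lists for the raw endpoint.
ALLOWED_PARAMETERS = ("host", "index", "source", "sourcetype", "time")


def build_raw_url(base_url: str, **metadata: object) -> str:
    """Return a services/collector/raw URL carrying the given metadata."""
    parameters = {
        key: value
        for key, value in metadata.items()
        if key in ALLOWED_PARAMETERS and value is not None
    }
    return f"{base_url}/services/collector/raw?{urlencode(parameters)}"


# Hypothetical Edge Processor address and metadata values.
url = build_raw_url(
    "https://edge-processor.example.com:8088",
    host="web-01",
    sourcetype="access_log",
    time=1709296245,
)
print(url)
```

Including the time parameter lets the endpoint assign the event timestamp, so no timestamp extraction is needed later in the pathway for that request.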

Syslog devices

If your Edge Processor receives the data from a syslog device, then the exact transformations that your data goes through depend on the kind of destination that the Edge Processor sends the data to.

Refer to the section corresponding to your data destination:

Splunk platform S2S destination

If your Edge Processor receives data from a syslog device and sends that data to a Splunk platform S2S destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | First, the Edge Processor treats each line of data as one distinct event. Then, if the data's source type matches a source type defined in the Edge Processor service, the Edge Processor attempts to break the data again based on the configuration settings in the source type. | Each syslog event is a single line of data, so the initial line breaking behavior by the Edge Processor suffices and you don't need to configure any additional line breaking settings. | |
| Extract data values into event fields | When you configure a port for the Edge Processor to start receiving syslog data, you select an RFC protocol. Then, the Edge Processor completes additional field extractions based on the configurations in the applied pipelines. | Make sure to select an appropriate RFC protocol. | |
| Assign event timestamps | The Splunk platform assigns event timestamps based on the configuration settings in the props.conf file. | Configure timestamp assignment in the props.conf file of the Splunk platform. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a syslog device and an Edge Processor before being sent to a Splunk platform S2S destination:

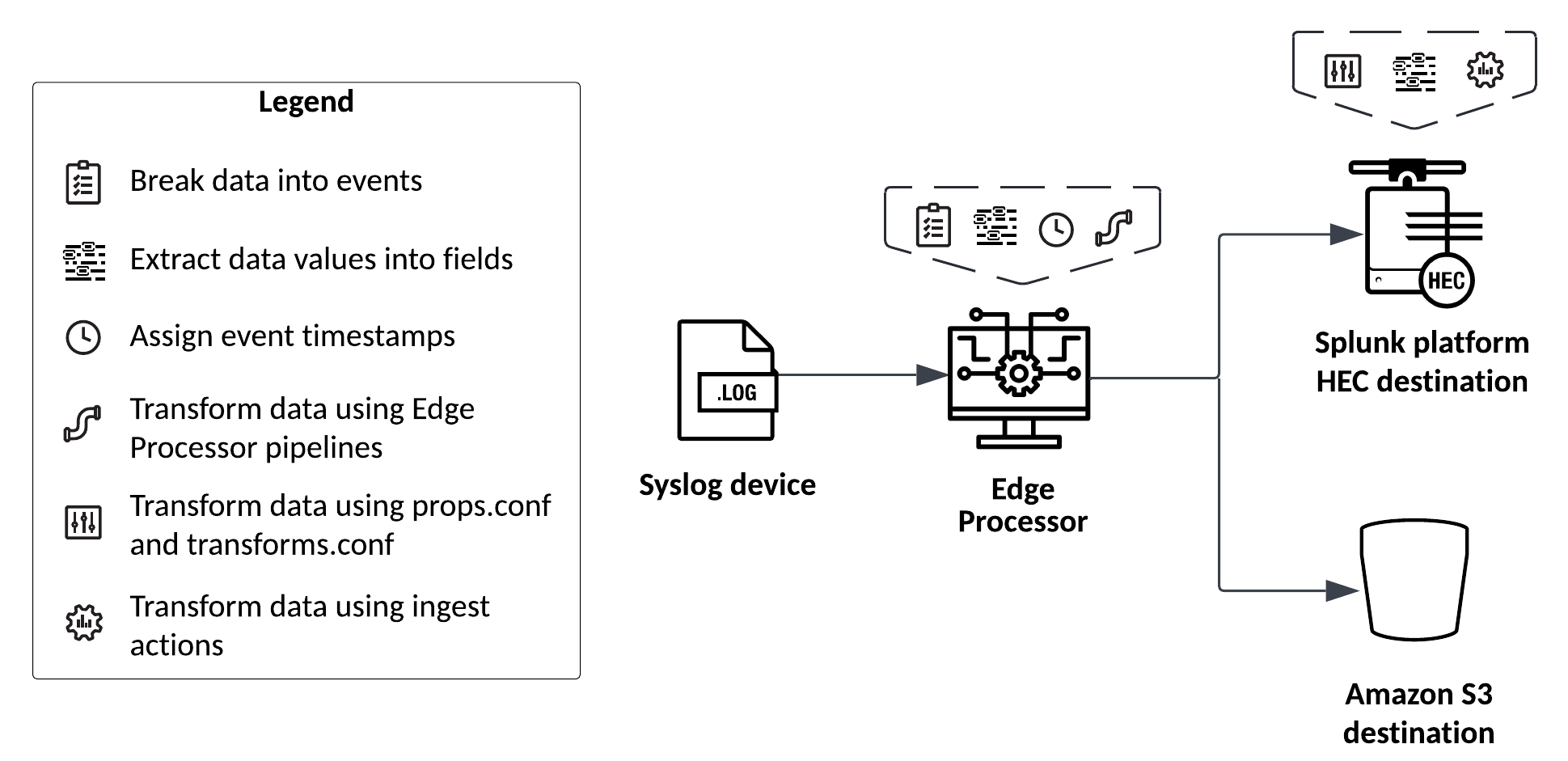
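To show why the choice of RFC protocol matters for field extraction, the following Python sketch parses the header of a syslog line that follows the RFC 5424 layout and returns nothing for a line that does not. It is a simplified illustration and not the Edge Processor's parser: the regular expression covers only the header fields, leaves structured data and the message together in one field, and does not validate timestamps. The function and field names are assumptions for this example.

```python
import re
from typing import Dict, Optional

# Simplified RFC 5424 header: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID
RFC5424_HEADER = re.compile(
    r"^<(?P<pri>\d{1,3})>(?P<version>\d{1,2}) "
    r"(?P<timestamp>\S+) (?P<hostname>\S+) (?P<appname>\S+) "
    r"(?P<procid>\S+) (?P<msgid>\S+) (?P<rest>.*)$"
)


def parse_rfc5424(line: str) -> Optional[Dict[str, object]]:
    """Return header fields for an RFC 5424 style line, or None if it does not match."""
    match = RFC5424_HEADER.match(line)
    if match is None:
        return None
    fields: Dict[str, object] = dict(match.groupdict())
    # The priority value encodes facility and severity: PRI = facility * 8 + severity.
    priority = int(match.group("pri"))
    fields["facility"] = priority // 8
    fields["severity"] = priority % 8
    return fields


sample = (
    "<34>1 2003-10-11T22:14:15.003Z mymachine.example.com su - ID47 - "
    "'su root' failed for lonvick on /dev/pts/8"
)

parsed = parse_rfc5424(sample)
print(parsed)
print(parse_rfc5424("plain text that is not syslog"))
```

A line that does not match the expected layout yields no header fields, which is the conceptual difference between RFC-compliant and non-RFC-compliant syslog data described earlier on this page.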

Splunk platform HEC or Amazon S3 destination

If your Edge Processor receives data from a syslog device and sends that data to a Splunk platform HEC or Amazon S3 destination, then the data is transformed as follows:

| Data transformation | How the transformation happens | Configuration tips | Documentation |
|---|---|---|---|
| Break data into events | First, the Edge Processor treats each line of data as one distinct event. Then, if the data's source type matches a source type defined in the Edge Processor service, the Edge Processor attempts to break the data again based on the configuration settings in the source type. | Each syslog event is a single line of data, so the initial line breaking behavior by the Edge Processor suffices and you don't need to configure any additional line breaking settings. | Edit, clone, or delete source types for Edge Processors |
| Extract data values into event fields | When you configure a port for the Edge Processor to start receiving syslog data, you select an RFC protocol. Then, the Edge Processor completes additional field extractions based on the configurations in the applied pipelines. | Make sure to select an appropriate RFC protocol. | |
| Assign event timestamps | When you configure a port for the Edge Processor to start receiving syslog data, you select an RFC protocol. If the data passes through an Edge Processor pipeline that modifies the The Edge Processor renames the If none of the above occur, then the Splunk platform will use the current time as the timestamp. | If your data is compliant with the selected RFC protocol, then let the Edge Processor determine the event timestamp. | |
| Other data transformations | First, the Edge Processor transforms data based on the configurations in the applied pipelines. | Depending on your particular business requirements and use cases, you might need to configure data transformations at different points of the data ingestion pathway. | |

The following diagram summarizes how data is transformed when it moves through a syslog device and an Edge Processor before being sent to a Splunk platform HEC or Amazon S3 destination:


This documentation applies to the following versions of Splunk Cloud Platform™: 9.0.2209, 9.0.2303, 9.0.2305, 9.1.2308, 9.1.2312, 9.2.2403, 9.2.2406, 9.3.2408 (latest FedRAMP release), 9.3.2411
