Splunk App for Data Science and Deep Learning architecture overview

The Splunk App for Data Science and Deep Learning (DSDL) allows you to integrate advanced custom machine learning and deep learning systems with the Splunk platform.

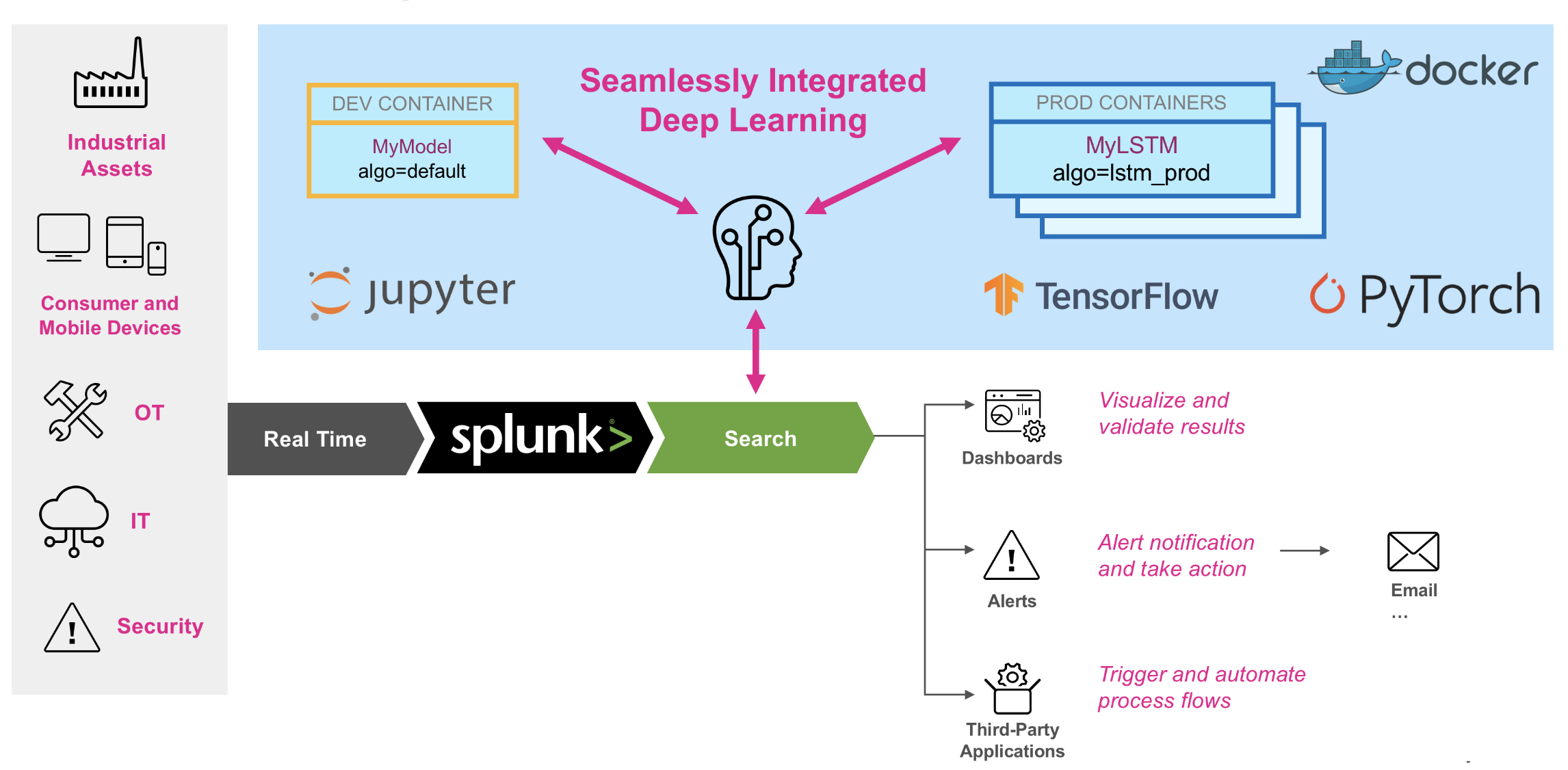

The following image shows where DSDL fits into a machine learning workflow:

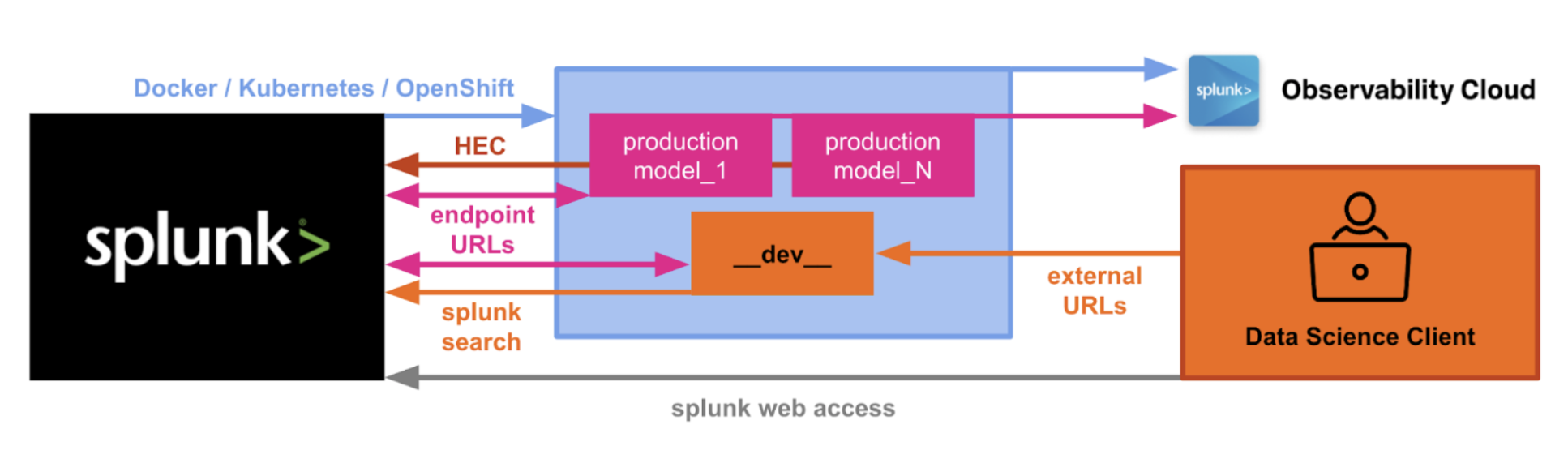

The Splunk App for Data Science and Deep Learning offers an integrated architecture with the Splunk platform. In the following diagram, the architecture is represented as follows:

- The Splunk platform is represented on the far left in the black box.

- The container environment is represented in the center of the diagram in the light blue box.

- The Splunk Observability Suite is represented in a labeled box in the top right of the diagram.

DSDL connects your Splunk platform deployment to a container environment such as Docker, Kubernetes, or OpenShift. Through the container management interface, DSDL uses the configured container environment APIs to start and stop development or production containers. DSDL users can interactively deploy containers based on need, and access those containers over external URLs from JupyterLab, or from other browser-based tools like TensorBoard, MLflow, or the Spark user interface for job tracking.
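To make the start and stop step concrete, the following minimal sketch builds the URLs that the Docker Engine API exposes for starting and stopping a container. It is an illustration only and does not show how DSDL itself calls the container environment. The helper name `container_action_url`, the host `docker.example.com:2375`, and the container name `dsdl-dev` are assumptions made for this example.

```python
import urllib.parse


def container_action_url(base_url, container, action, timeout_s=None):
    """Return the Docker Engine API URL for starting or stopping a container.

    Hypothetical helper for illustration; it only builds the URL and does
    not contact any Docker daemon.
    """
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    # Container names or IDs go into the path, so escape them defensively.
    name = urllib.parse.quote(container, safe="")
    url = f"{base_url.rstrip('/')}/containers/{name}/{action}"
    # The stop endpoint accepts an optional grace period in seconds as "t".
    if action == "stop" and timeout_s is not None:
        url += "?" + urllib.parse.urlencode({"t": timeout_s})
    return url


# Assumed example values; replace with your own Docker host and container.
start_url = container_action_url("http://docker.example.com:2375", "dsdl-dev", "start")
stop_url = container_action_url("http://docker.example.com:2375", "dsdl-dev", "stop", timeout_s=10)
```

Both URLs are intended for HTTP `POST` requests. Kubernetes and OpenShift expose different APIs, so this sketch applies to a Docker environment only.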

The development and production containers offer additional interfaces, most importantly endpoint URLs that facilitate bi-directional data transfer between the Splunk platform and the algorithms running in the containers. Optionally, containers can send data to the Splunk HTTP Event Collector (HEC), or app users can send ad-hoc search queries to the Splunk REST API to retrieve data interactively in Jupyter for experimentation, analysis tasks, or modeling.
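The two optional data paths can be sketched from inside a container as follows. This is a minimal example under stated assumptions, not the helper code that ships with DSDL: the function names, host names, tokens, and index are placeholders. It relies only on the standard HEC event endpoint (`/services/collector/event`) and the REST search export endpoint (`/services/search/jobs/export`). The functions build the requests without sending them, so the sketch runs without a Splunk instance.

```python
import json
import urllib.parse
import urllib.request


def build_hec_request(hec_url, token, event, sourcetype="_json", index=None):
    """Build (but do not send) a POST request for the HTTP Event Collector."""
    payload = {"event": event, "sourcetype": sourcetype}
    if index is not None:
        payload["index"] = index
    return urllib.request.Request(
        url=hec_url.rstrip("/") + "/services/collector/event",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # HEC tokens are sent with the "Splunk" authorization scheme.
            "Authorization": "Splunk " + token,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def build_export_search_request(mgmt_url, auth_token, spl, earliest="-15m"):
    """Build (but do not send) an ad-hoc search request for the REST API."""
    # Prepend the "search" command when the query does not already begin
    # with it or with a leading pipe.
    query = spl.strip()
    if not query.startswith(("search ", "|")):
        query = "search " + query
    form = urllib.parse.urlencode(
        {"search": query, "earliest_time": earliest, "output_mode": "json"}
    )
    return urllib.request.Request(
        url=mgmt_url.rstrip("/") + "/services/search/jobs/export",
        data=form.encode("utf-8"),
        # Assumes a Splunk authentication token; session keys use a different scheme.
        headers={"Authorization": "Bearer " + auth_token},
        method="POST",
    )


# Placeholder hosts and tokens, for illustration only.
hec_req = build_hec_request(
    "https://splunk.example.com:8088", "hec-token", {"model": "demo", "loss": 0.12}, index="main"
)
search_req = build_export_search_request(
    "https://splunk.example.com:8089", "auth-token", "index=main | head 5"
)
```

To send either request, pass it to `urllib.request.urlopen`. Whether TLS verification succeeds depends on the certificates configured in your deployment.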

Optionally, all container endpoints can be automatically instrumented with OpenTelemetry and analyzed in the Splunk Observability Suite. Additionally, the container environment can be monitored in the Splunk Observability Suite for further operational insights such as memory load and CPU utilization.


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.0.0, 5.1.0, 5.1.1, 5.1.2
