Using the score command

In Splunk's Machine Learning Toolkit, the score command runs statistical tests to validate model outcomes. Use the score command for robust model validation and statistical tests in any use case.

The score command is available only in version 4.0.0 or later of the MLTK. Version 4.0.0 of the toolkit additionally requires version 1.3 of the Python for Scientific Computing add-on.

The Splunk Machine Learning Toolkit uses the following classes of the score command, each with its own set of methods (for example, Accuracy, F1-score, and T-test):

- Classification

- Clustering

- Regression scoring

- Statistical functions (statsfunctions)

- Statistical testing (statstest)

Score commands are not customizable within the Splunk Machine Learning Toolkit.

The Splunk Machine Learning Toolkit also enables the examination of how well your model might generalize on unseen data by using folds of the training set. This method is known as K-fold scoring.

The kfold command does not use the score command, but operates more like a special kind of scoring.
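As a rough illustration of the idea behind K-fold scoring, the following plain-Python sketch splits row indices into folds and yields one training/validation pair per fold. It is a generic sketch of the technique, not the toolkit's kfold implementation; the function names `kfold_indices` and `train_validation_splits` are hypothetical.

```python
def kfold_indices(n_rows, k):
    """Split row indices 0..n_rows-1 into k contiguous folds of near-equal size."""
    base, extra = divmod(n_rows, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)  # first `extra` folds get one more row
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def train_validation_splits(n_rows, k):
    """Yield (train_indices, validation_indices), one pair per fold."""
    folds = kfold_indices(n_rows, k)
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


splits = list(train_validation_splits(n_rows=10, k=3))
```

Each row is used for validation exactly once, and a model would be fit on the remaining rows of each split.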

Classification

Classification scoring metrics are used for evaluating the predictive power of a classification learning algorithm.

Classification scoring in the Splunk Machine Learning Toolkit includes the following methods:

- Accuracy

- Confusion matrix

- F1-score

- Precision

- Precision-Recall-F1-Support

- Recall

- ROC-AUC-score

- ROC-curve

Overview

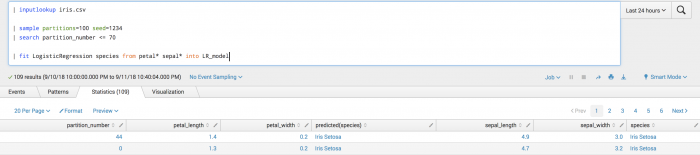

The most common use of classification scoring is to evaluate how well a classification model performs on the test set, as in the following example:

- Load data; split data into training (<=70 partitions) and test (>70 partitions) sets; fit a classification model and save as a model object.

- Select the test set; apply the model to get predictions on unseen data; perform scoring; analyze the result.

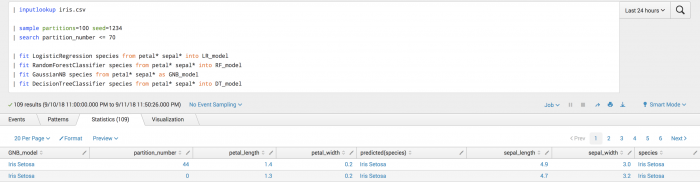

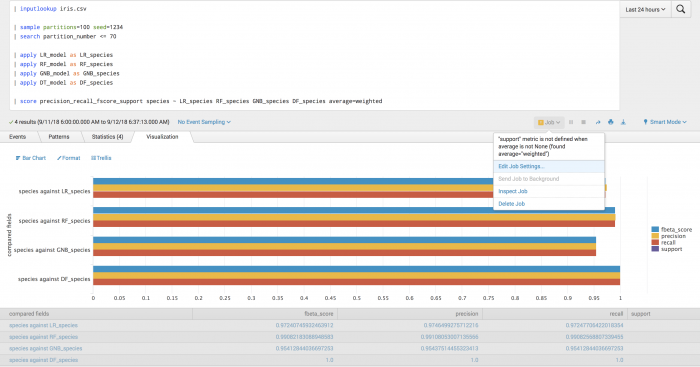

The syntax also supports comparing multiple fields in a single call. This is useful when evaluating which classification model is best suited for your data.

- Training multiple models on the same field.

- Evaluating the ground-truth field against multiple predictions. Informative warning messages appear where applicable.

The inputs to the classification scoring methods are actual and predicted field(s), corresponding to ground-truth-labels and predicted-labels, respectively.

Classification scoring methods will only work on categorical data (integers and string-types, but not floats), and are expected to be used to evaluate the output of classification algorithms, such as logistic regression.

Attempting to score on numeric (float-type) data throws the error message: "Value error: field field_name is not a valid categorical field since it contains floats with ambiguous categorical representation."

Preprocessing

All classification scoring methods follow the same preprocessing steps:

- Search commands pulled into memory.

- Data preparation including:

- All rows containing NAN values removed prior to computing the score.

- Errors raised should any field not be a valid categorical field (for example, a field containing floats).

Common parameters

The pos_label parameter:

- Must be an element of all actual (ground-truth) data

- Is used to specify the positive class when average=binary

- Will throw the following error if not present:

- "Value error: value <pos_label> for pos_label not found in actual field actual_field. Please specify a valid value for pos_label."

- Is completely ignored when the average is not binary and throws the following warning:

- "Warning: pos_label will be ignored since average is not binary (found average=<average>)"

Uses of the pos_label parameter:

- When average=binary and the combined cardinality of actual/predicted field is <= 2 (applicable for accuracy, precision, recall, f1, precision_recall_f1_support)

- Report results for class=pos_label only

- When data is multiclass but we would like to apply binary scoring methods (applicable for ROC-curve and ROC-AUC-score)

- ROC-curve and ROC-AUC-score require binary data – the pos_label allows multiclass data to be cast to binary by specifying the class identified by "pos_label" as the positive class, and all other classes negative.

- For example if original multiclass data is [a, b, c, a, e] and pos_label=a, then resulting binary data is [1, 0, 0, 1, 0]
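The cast from multiclass to binary described above can be sketched in a few lines of plain Python. This is an illustration of the mapping only, not the toolkit's code; the helper name `binarize` is hypothetical.

```python
def binarize(labels, pos_label):
    """Map pos_label to 1 (positive) and every other class to 0 (negative)."""
    return [1 if label == pos_label else 0 for label in labels]


binary = binarize(["a", "b", "c", "a", "e"], pos_label="a")
print(binary)  # [1, 0, 0, 1, 0]
```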

The average parameter options include:

- None:

- Return the scoring metric for each unique class in the union of actual_field+predicted_field.

- Binary:

- Report results for the class specified by pos_label.

- Parameter only works for binary data. If applied to a multiclass problem, the following error message generates: "Value error: Target is multiclass but average=binary. Please choose another average setting."

- Micro:

- Calculate metrics globally by counting the total true positives, false negatives and false positives.

- Macro:

- Calculate metrics for each label, and find their unweighted mean. Does not take label imbalance into account.

- Weighted:

- Calculate metrics for each label, and find their average, weighted by support (the number of true instances for each label). This alters ‘macro’ to account for label imbalance and can result in an F-score that is not between precision and recall.
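To make the difference between the averaging options concrete, here is a minimal plain-Python sketch that computes precision under average=None, micro, macro and weighted for single-label data. It is an illustration under stated assumptions, not the toolkit's or scikit-learn's code: the function name `precision_by_average` is hypothetical, the binary option is omitted, and a class that is never predicted is assigned a precision of 0.

```python
from collections import Counter


def precision_by_average(actual, predicted, average=None):
    """Precision per class, or averaged with the named scheme."""
    classes = sorted(set(actual) | set(predicted))
    true_pos = Counter(a for a, p in zip(actual, predicted) if a == p)
    predicted_count = Counter(predicted)  # true positives + false positives
    support = Counter(actual)             # number of true instances per class

    per_class = {
        c: (true_pos[c] / predicted_count[c]) if predicted_count[c] else 0.0
        for c in classes
    }
    if average is None:
        return per_class
    if average == "micro":
        # Global counts: total true positives over total predictions.
        return sum(true_pos.values()) / len(predicted)
    if average == "macro":
        # Unweighted mean over classes; ignores label imbalance.
        return sum(per_class.values()) / len(classes)
    if average == "weighted":
        # Mean over classes weighted by support.
        return sum(per_class[c] * support[c] for c in classes) / len(actual)
    raise ValueError(f"unsupported average: {average}")
```

On imbalanced data the three averaged values generally differ, which is why the choice of average matters when comparing models.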

Syntax

As with all scoring methods, classification methods support pairwise comparisons (that is, comparing a_field_i with b_field_i) between two sets of fields (arrays). The general syntax is as follows:

.. | score <scoring-method-name> array_a against array_b [options]

- against separates the ground-truth fields (on the left) from the predicted fields (on the right). "~" is equivalent.

- array_a represents the ground-truth fields, and is specified by fields actual_field_1 ... actual_field_n.

- array_b represents the predicted fields, and is specified by fields predicted_field_1 ... predicted_field_n.

SPL syntax

.. | score <scoring-method-name> <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> [options]

Accuracy scoring

Get the prediction accuracy between actual-labels and predicted-labels.

Implements sklearn.metrics.accuracy_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.accuracy_score.html

Further reading: https://en.wikipedia.org/wiki/Accuracy_and_precision

Parameters:

- The normalize parameter default is true.

- The normalize parameter dictates whether to return the raw count of correctly classified samples (normalize=False) or the fraction of correctly classified samples (normalize=True).
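The effect of the normalize option can be illustrated with a short plain-Python sketch. This is not the toolkit's implementation; the function name `accuracy` is hypothetical and mirrors only the behavior described above.

```python
def accuracy(actual, predicted, normalize=True):
    """Fraction (normalize=True) or raw count (normalize=False) of correct labels."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual) if normalize else correct


actual = ["a", "b", "a", "c"]
predicted = ["a", "b", "c", "c"]
fraction = accuracy(actual, predicted)               # 3 of 4 correct
count = accuracy(actual, predicted, normalize=False)
```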

Syntax

...|score accuracy_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> normalize=<True|False>

Syntax constraints

Accuracy supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

Example

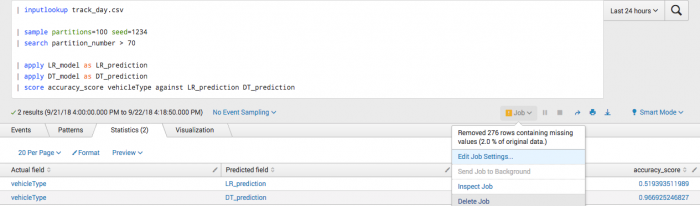

We manually specify fields (particularly for second call to fit and onwards) since a predicted field will exist in the data.

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number <= 70 | fit LogisticRegression vehicleType from batteryVoltage engineCoolantTemperature engineSpeed into LR_model | fit DecisionTreeClassifier vehicleType from batteryVoltage engineCoolantTemperature engineSpeed into DT_model

After training a classifier to predict vehicle type, we can analyze our test-set accuracy.

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model as LR_prediction | apply DT_model as DT_prediction | score accuracy_score vehicleType against LR_prediction DT_prediction

Confusion matrix

Get the confusion matrix for the actual-labels and predicted-labels.

Implements sklearn.metrics.confusion_matrix. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.confusion_matrix.html

Further reading: https://en.wikipedia.org/wiki/Confusion_matrix

Parameters:

- Confusion matrix takes no parameters.

Syntax

score confusion_matrix <actual_field> against <predicted_field>

Syntax constraints

- The ground-truth-labels map along the event-axis (vertical), the predicted-labels map along the field-axis (horizontal).

- Only works for 1-1 comparisons, since the output of confusion_matrix is already 2d.

Although order is not preserved in the output fields/events, the correspondence of fields and events is preserved. For example the third field will correspond to the third event.
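The row and column layout described above can be sketched in plain Python as follows. This is an illustrative sketch, not the toolkit's code: the function name is reused for readability only, and sorting the labels is an assumption made here to keep row and column order aligned.

```python
def confusion_matrix(actual, predicted):
    """Rows are ground-truth labels, columns are predicted labels."""
    labels = sorted(set(actual) | set(predicted))
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1  # row = actual, column = predicted
    return labels, matrix


labels, matrix = confusion_matrix(
    ["cat", "dog", "cat", "bird"],
    ["cat", "cat", "cat", "bird"],
)
```

Here the third row (dog) has its only count in the second column (cat), showing that the single dog was mistaken for a cat.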

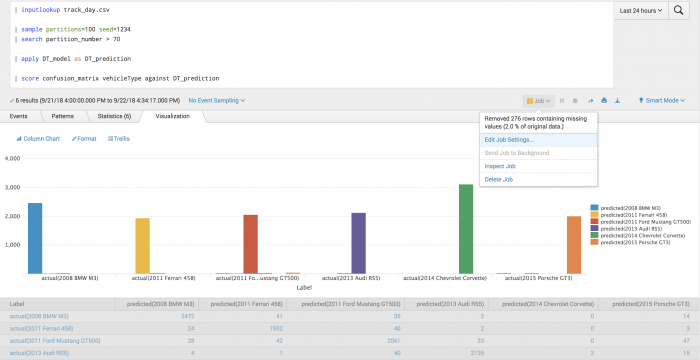

Example

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply DT_model as DT_prediction | score confusion_matrix vehicleType against DT_prediction

Example visualization

In the following visualization of the confusion matrix, we can see which classes were most and least successfully predicted, as well as what they were mistaken for.

F1-score

Get the f1-score between true-labels and predicted-labels.

Implements sklearn.metrics.f1_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.f1_score.html

Further reading: https://en.wikipedia.org/wiki/F1_score

Parameters:

- The pos_label parameter default is 1.

- The average parameter default is binary.

Syntax

|score f1_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> average=<binary(default) | micro | macro | weighted> pos_label=<str | int>

Syntax constraints

F1-score supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

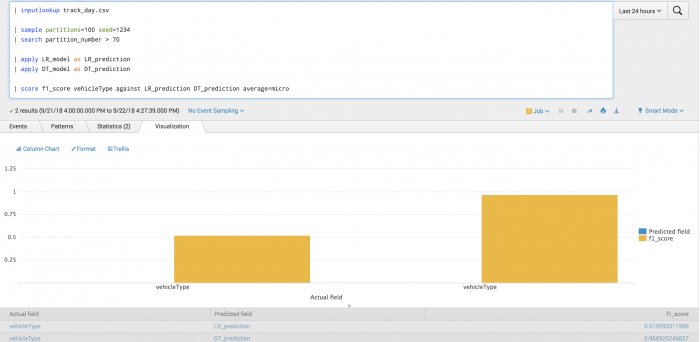

Example

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model as LR_prediction | apply DT_model as DT_prediction | score f1_score vehicleType against LR_prediction DT_prediction average=micro

Example visualization

The following plot shows model f1 score on the test set. LogisticRegression results on the left, and DecisionTree results on the right. We see the average across all vehicle types (average=micro).

Precision

Get the precision between actual-labels and predicted-labels.

Implements sklearn.metrics.precision_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html

Further reading: https://en.wikipedia.org/wiki/Accuracy_and_precision

Parameters:

- The pos_label parameter default is 1.

- The average parameter default is binary.

Syntax

...|score precision_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> average=<binary(default)|micro|macro|weighted> pos_label=<str|int>

Syntax constraints

Precision supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

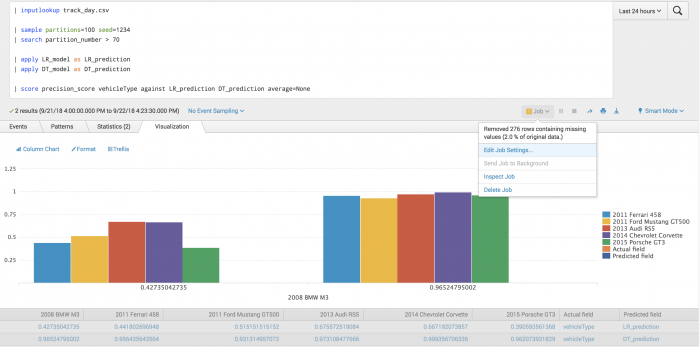

Example

In the following example, after training a classifier to predict vehicle type, we evaluate the model's precision on the test set for each vehicle type.

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model as LR_prediction | apply DT_model as DT_prediction | score precision_score vehicleType against LR_prediction DT_prediction average=None

Example visualization

The following visualization plot shows the model precision on the test set, for each vehicle type. Precision using LogisticRegression is on the left, DecisionTree on the right. A warning shows that 2% of the total rows contained NAN values, and have been removed.

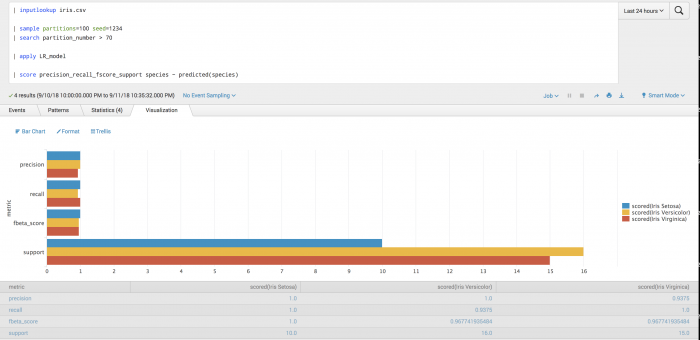

Precision-Recall-F1-Support

Get the precision, recall, f1-score and support between actual-fields and predicted-fields. A common example is a classification report.

Implements sklearn.metrics.precision_recall_fscore_support. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_recall_fscore_support.html

Parameters:

- The pos_label parameter default is 1.

- The average parameter default is none.

- The beta parameter default is 1.0.

- The beta parameter sets the strength of recall versus precision in the F-score.
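The role of beta can be seen from the standard F-beta formula, sketched below in plain Python. The function name `fbeta` is hypothetical, and returning 0 when both precision and recall are 0 is a convention assumed here for the sketch.

```python
def fbeta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall; beta > 1 favors recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


f1 = fbeta(0.5, 1.0)            # beta=1: precision and recall weighted equally
f2 = fbeta(0.5, 1.0, beta=2.0)  # beta=2: recall counts more, so the score rises
```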

Syntax

score precision_recall_fscore_support <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> pos_label=<str> average=<str> beta=<float>

Syntax constraints

| Average | Result |
|---|---|
| Not None | Works for all syntax constraints (that is, 1-to-1 and 1-to-n) |
| None | Only works for 1-1 comparisons since the output of precision_recall_fscore_support (when average = None) is already 2d |

Support is only defined when average=None because support cannot be averaged.

Example

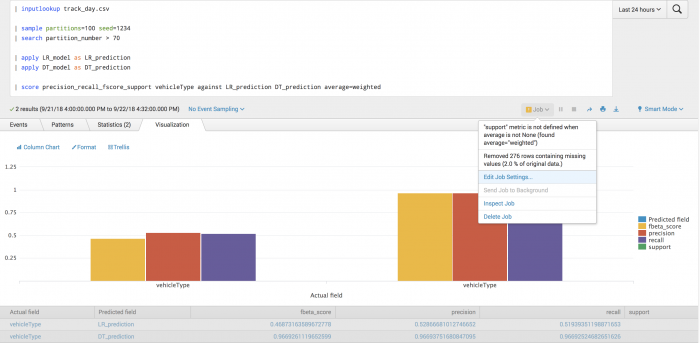

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model as LR_prediction | apply DT_model as DT_prediction | score precision_recall_fscore_support vehicleType against LR_prediction DT_prediction average=weighted

Example visualization

In the following visualization, the plot shows the precision, recall and f_beta scores for the prediction of vehicle type, under a weighted averaging scheme.

Recall

Get the recall between actual-labels and predicted-labels.

Implements sklearn.metrics.recall_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.recall_score.html

Further reading: https://en.wikipedia.org/wiki/Precision_and_recall

Parameters:

- The pos_label parameter default is 1.

- The average parameter default is binary.

Syntax

|score recall_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> average=<binary(default) | micro | macro | weighted> pos_label=<str | int>

Syntax constraints

Recall supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

Example

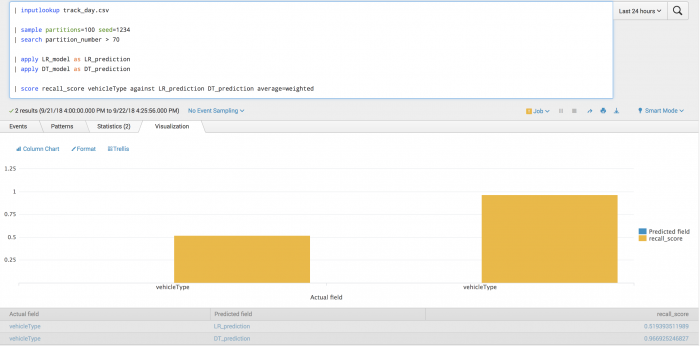

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model as LR_prediction | apply DT_model as DT_prediction | score recall_score vehicleType against LR_prediction DT_prediction average=weighted

Example visualization

The following plot shows model recall on the test set. LogisticRegression is on the left, DecisionTree on the right. We see the average across all vehicle types, where the average is weighted by the support.

ROC-AUC-score

Get the ROC-AUC-score for the actual-labels and predicted-scores.

Implements sklearn.metrics.roc_auc_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.roc_auc_score.html

For further reading see the AUC references here: https://en.wikipedia.org/wiki/Receiver_operating_characteristic

Parameters:

- Although sklearn.metrics.roc_auc_score supports an average parameter, this is currently disabled as the toolkit does not support the label-indicator format.

- For multiclass problems, binarize the data using pos_label.

- The pos_label parameter default is 1.

- Class to represent positive (when multiclass target, makes all others negative)

- When the predicted field contains target scores, it can either be probability estimates of the positive class, confidence values, or non-thresholded measure of decisions.
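For intuition about what the score measures, the area under the ROC curve equals the probability that a randomly chosen positive event receives a higher score than a randomly chosen negative one, with ties counted as one half. The plain-Python sketch below uses that pairwise formulation; it is not how the toolkit or scikit-learn computes the value, the function name `roc_auc` is hypothetical, and the labels are assumed to be already true-binary (0 and 1).

```python
def roc_auc(binary_labels, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(binary_labels, scores) if y == 1]
    neg = [s for y, s in zip(binary_labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])  # 3 of 4 pairs ranked correctly
```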

Requirements:

- ROC_AUC_score only applies to binary data. To support multi-class problems, convert into binary with the pos_label parameter.

- See table below for more details.

- The predicted-field must be numeric. Numeric must be float or integer type, corresponding to probability estimates of the positive class, confidence values, or non-thresholded measure of decisions (as returned by decision_function on some classifiers).

- If the predicted field does not meet the numeric criteria, this error message will display: "Expected field <predicted> to be numeric and correspond to probability estimates or confidence intervals of the positive class, but field contains non-numeric events."

| If | Then |
|---|---|
| Binary data is given | It must be true-binary, that is, {0,1} or {-1,1} |
| Data is not binary (that is, multiclass) | The pos_label must be specified and contained in the ground_truth field |
| Binary data is not true-binary | The pos_label must be specified and contained in the ground_truth field |

If the pos_label is not in the ground_truth field, the following error will be raised: "Value

If the ground-truth data is multiclass and the pos_label is properly specified, the user is warned of the conversion: ""Found multiclass ground-truth field

Syntax

score roc_auc_score <a1> <a2> ... <an> against <b1> <b2> ... <bn> [pos_label=<str | int>]

Syntax constraints

ROC AUC score supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

Example

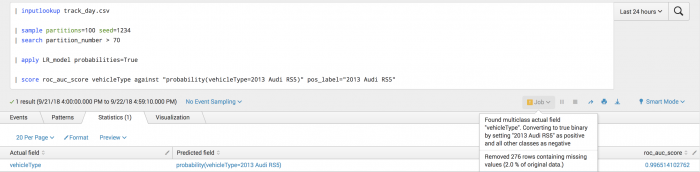

In the following example we obtain the area under the ROC curve for predicting the vehicle type of 2013 Audi RS5.

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number <= 70 | fit LogisticRegression vehicleType from * probabilities=True into LR_model

| inputlookup track_day.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model probabilities=True | score roc_auc_score vehicleType against "probability(vehicleType=2013 Audi RS5)" pos_label="2013 Audi RS5"

Example output

ROC-curve

Get the ROC-curve for actual-fields and predicted-fields.

Implements sklearn.metrics.roc_curve. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.roc_curve.html

Further reading: https://en.wikipedia.org/wiki/Receiver_operating_characteristic

Parameters:

- The pos_label parameter default is 1.

- Class to represent positive (when multiclass target, makes all others negative). See examples 1 and 2 below.

- The drop_intermediate parameter default is true.

- Whether to drop some sub-optimal thresholds which would not appear on a plotted ROC curve. This is useful in order to create lighter ROC curves.

Example 1: When binary data is not true binary

- Data = <Y N Y N Y N>

- pos_label = Y

- Data transformed to true binary as <1 0 1 0 1 0>

Example 2: When multiclass data is not true binary

- Data = <A B C B A B>

- pos_label = A

- Data transformed to true binary as <1 0 0 0 1 0>

Requirements:

- ROC_curve only applies to binary data. To support multi-class problems, convert into binary with the pos_label parameter.

- See table below for more details.

- The predicted-field must be numeric. Numeric must be float or integer type, corresponding to probability estimates of the positive class, confidence values, or non-thresholded measure of decisions (for example, as returned by LogisticRegression with probabilities=True).

- If the predicted field does not meet the numeric criteria, this error message will display: "Expected field <predicted> to be numeric and correspond to probability estimates or confidence intervals of the positive class, but field contains non-numeric events."

| If | Then |
|---|---|
| Binary data is given | It must be true-binary, that is, {0,1} or {-1,1} |
| Data is not binary (that is, multiclass) | The pos_label must be specified and contained in the ground_truth field |
| Binary data is not true-binary | The pos_label must be specified and contained in the ground_truth field |

If the pos_label is not in the ground_truth field, the following error will be raised: "Value

If the ground-truth data is multiclass and the pos_label is properly specified, the user is warned of the conversion: ""Found multiclass ground-truth field

Syntax

score roc_curve <actual_field> against <predicted_field> pos_label=<str|int> drop_intermediate=<True|False>

Syntax constraints

Only works for 1-1 comparisons.

Example

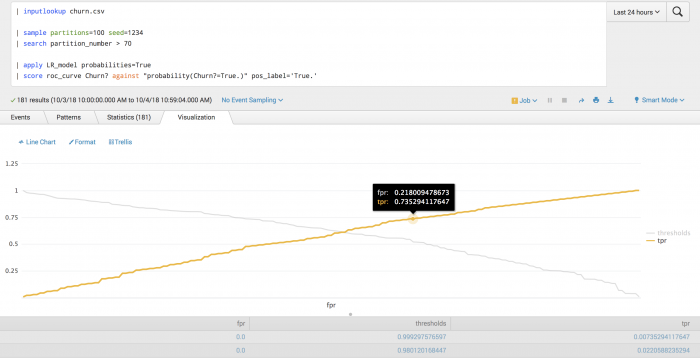

| inputlookup churn.csv | sample partitions=100 seed=1234 | search partition_number <= 70 | fit LogisticRegression Churn? from * probabilities=True into LR_model

| inputlookup churn.csv | sample partitions=100 seed=1234 | search partition_number > 70 | apply LR_model probabilities=True | score roc_curve Churn? against "probability(Churn?=True.)" pos_label='True.'

Example visualization

In the following visualization, the plot shows how the true-positive-rate (tpr) varies with the false-positive-rate (fpr), along with the corresponding probability thresholds.
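The relationship between thresholds, fpr and tpr can be sketched in plain Python as below. This is a simplified illustration, not the toolkit's or scikit-learn's implementation: the function name `roc_points` is hypothetical, labels are assumed to be already true-binary (0 and 1) with both classes present, and no intermediate thresholds are dropped.

```python
def roc_points(binary_labels, scores):
    """Return (threshold, fpr, tpr) for each distinct score, highest first."""
    n_pos = sum(binary_labels)
    n_neg = len(binary_labels) - n_pos
    points = []
    for threshold in sorted(set(scores), reverse=True):
        # Events scoring at or above the threshold are predicted positive.
        flagged = [y for y, s in zip(binary_labels, scores) if s >= threshold]
        true_pos = sum(flagged)
        false_pos = len(flagged) - true_pos
        points.append((threshold, false_pos / n_neg, true_pos / n_pos))
    return points


points = roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Lowering the threshold can only add predicted positives, so both fpr and tpr are non-decreasing along the returned list.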

Clustering scoring

Clustering scoring is used to evaluate the predicted value of the clustering model. The inputs to the clustering scoring methods are arrays of data specified by an ordered sequence of fields.

Clustering scoring in the Splunk Machine Learning Toolkit includes the following method:

- Silhouette score

Overview

Clustering scoring methods may operate on two arrays:

- We adopt the notation of label and features, which are specified by the ordered sequence of fields <label_field> and feature_field_1 feature_field_2 ... feature_field_n respectively.

- We've introduced the against clause to separate the arrays.

- label_field against feature_field_1 feature_field_2 ... feature_field_n correspond to label (ground-truth or predicted labels) against features (features used by clustering algorithm), respectively.

Preprocessing

Clustering scoring methods follow the same preprocessing steps:

- Search commands pulled into memory.

- Data preparation including:

- All rows containing NAN values are removed prior to computing the score.

- Label field is converted to categorical.

- Errors are raised if any categorical fields are found in feature fields.

Parameters

- The metric parameter default is euclidean.

- Supported metric values include: cityblock, cosine, euclidean, l1, l2, manhattan, braycurtis, canberra, chebyshev, correlation, hamming, matching, minkowski, and sqeuclidean.

Example

..| score silhouette_score <label_array> against <feature_array>

Clustering scoring methods will only work on numerical data, and are expected to be used to evaluate the output of clustering models (such as KMeans and Spectral Clustering).

Attempting to score on categorical data will result in the error: "Non-numeric fields found in the data: categorical_fields. Please provide only numerical fields"

The number of fields in label_array is limited to one and it has to be categorical.

Neither parameters that take a list/ array as input nor metrics which calculate the distance between categorical arrays are currently supported.

Silhouette score

Calculate the silhouette score between label_array and feature_array.

Implements sklearn.metrics.silhouette_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.silhouette_score.html

Further reading: https://en.wikipedia.org/wiki/Silhouette_(clustering)
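As an illustration of what the score measures, the following plain-Python sketch computes the mean silhouette coefficient with Euclidean distance. It is a direct, unoptimized rendering of the textbook definition rather than the toolkit's or scikit-learn's code. It assumes at least two clusters are present and scores a point that is alone in its cluster as 0.

```python
import math


def silhouette_score(labels, features):
    """Mean silhouette coefficient over all points, using Euclidean distance."""
    n = len(labels)
    scores = []
    for i in range(n):
        # Group distances from point i to every other point by cluster label.
        dist_by_cluster = {}
        for j in range(n):
            if i != j:
                dist_by_cluster.setdefault(labels[j], []).append(
                    math.dist(features[i], features[j])
                )
        own = dist_by_cluster.pop(labels[i], None)
        if not own:
            scores.append(0.0)  # singleton cluster
            continue
        a = sum(own) / len(own)  # mean distance within own cluster
        b = min(sum(d) / len(d) for d in dist_by_cluster.values())  # nearest other cluster
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / n


features = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score([0, 0, 1, 1], features)  # labels match the two tight groups
bad = silhouette_score([0, 1, 0, 1], features)   # labels cut across the groups
```

Values near 1 indicate compact, well-separated clusters, while negative values suggest that points sit closer to another cluster than to their own.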

Parameters

- Supports only common parameters.

Syntax

...|score silhouette_score <label_field> against <feature_field_1> ... <feature_field_n> metric=<euclidean(default) | cityblock | cosine | l1 | l2 | manhattan | braycurtis | canberra | chebyshev | correlation | hamming | matching | minkowski | sqeuclidean>

Syntax constraints

Silhouette score works for the following syntax constraints:

- label_field must have only a single field.

- feature_fields can have single or multiple fields.

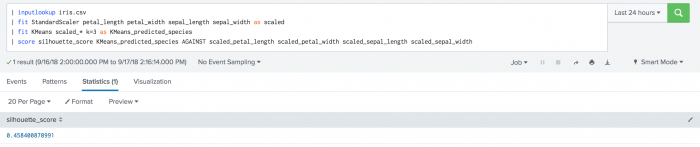

Example

| inputlookup iris.csv | fit StandardScaler petal_length petal_width sepal_length sepal_width as scaled | fit KMeans scaled_* k=3 as KMeans_predicted_species | score silhouette_score KMeans_predicted_species against scaled_petal_length scaled_petal_width scaled_sepal_length scaled_sepal_width

Regression scoring

Regression scoring metrics are used for evaluating the predictive power of a regression learning algorithm. The most common use of regression scoring is to evaluate how well a regression model performs on the test set.

Regression scoring in the Splunk Machine Learning Toolkit includes the following methods:

- Explained variance score

- Mean absolute error score

- Mean squared error

- R2 score

The inputs to the regression scoring methods are arrays of data specified by an ordered sequence of field(s).

Regression scoring methods may operate on two arrays. For example:

..| score r2_score <actual> against <predicted>

- We adopt the notation of actual and predicted, which are specified by an ordered sequence of fields actual_field_1 ... actual_field_n and predicted_field_1 ... predicted_field_n.

- We've introduced the against clause to separate the arrays.

- actual_field_1 ... actual_field_n against predicted_field_1 ... predicted_field_n correspond to actual (ground-truth-target-values) against predicted (predicted-target-values), respectively.

These scoring methods will only work on numerical data, and are expected to be used to evaluate the output of regression algorithms (such as Gradient Boosting Regression and Linear Regression).

Attempting to score on categorical data returns the error: "Non-numeric fields found in the data actual_field/predicted_field. Please provide only numerical fields."

No numerical fields in any of the arrays returns the error "Value error: arrays cannot be empty. No valid numerical fields exist to populate" or "Value error: No valid numerical fields exist for scoring method."

The number of fields in actual_fields and predicted_fields must either be equal, or one of the two must contain only one field. If one of the arrays has a single field and the other array has multiple fields, the multioutput parameter is set to raw_values and the regression score is calculated between each field of the multi-field array and the single field of the other array. In this case, if the user had set the multioutput parameter to a different value, a warning is returned: "Updating multioutput value from <X> to raw_values since <a_array/b_array> has a single column."
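The pairing rule for 1-to-1, n-to-n and 1-to-n comparisons can be sketched in plain Python as follows. This only illustrates which field pairs get scored, under the rule stated above; the function name `pair_fields` and the error text are hypothetical and not taken from the toolkit.

```python
def pair_fields(actual_fields, predicted_fields):
    """Return the (actual, predicted) field pairs that would be scored."""
    n_a, n_p = len(actual_fields), len(predicted_fields)
    if n_a == n_p:
        # 1-to-1 and n-to-n: compare fields position by position.
        return list(zip(actual_fields, predicted_fields))
    if n_a == 1:
        # 1-to-n: the single actual field is compared with every predicted field.
        return [(actual_fields[0], p) for p in predicted_fields]
    if n_p == 1:
        # n-to-1: every actual field is compared with the single predicted field.
        return [(a, predicted_fields[0]) for a in actual_fields]
    raise ValueError("field counts must match, or one side must have a single field")


pairs = pair_fields(["ac_power"], ["LR_prediction", "RFR_prediction"])
```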

Preprocessing

All regression scoring methods follow the same preprocessing steps:

- Search commands pulled into memory.

- Data preparation including:

- All rows containing NAN values are removed prior to computing the score.

- Errors raised should any categorical fields be found.

Common parameters:

- The multioutput parameter default is raw_values.

- raw_values returns a full set of regression scores or errors between each field in fields_a and fields_b respectively.

Parameters that take a list/ array as input are not supported.

Explained variance score

Calculate the explained variance regression score.

Implements sklearn.metrics.explained_variance_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.explained_variance_score.html#sklearn.metrics.explained_variance_score

Further reading: https://en.wikipedia.org/wiki/Explained_variation

Parameters:

- The multioutput parameter default is raw_values.

- The variance_weighted option averages the scores of all outputs, weighted by the variance of each individual output.

- To see each explained variance score compared to the actual score, set the multioutput parameter to raw_values.

Syntax

...|score explained_variance_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> multioutput=<raw_values(default) | uniform_average | variance_weighted>

Syntax constraints

Explained variance score supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

Explained variance is not symmetric.
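The asymmetry follows from the definition: the variance of the residuals is divided by the variance of the actual values only, so swapping the two arrays changes the denominator. The plain-Python sketch below illustrates this for a single pair of fields. It is not the toolkit's code and assumes the actual values are not constant.

```python
from statistics import pvariance


def explained_variance(actual, predicted):
    """1 - Var(actual - predicted) / Var(actual), using population variance."""
    residuals = [a - p for a, p in zip(actual, predicted)]
    return 1 - pvariance(residuals) / pvariance(actual)


actual = [3, -0.5, 2, 7]
predicted = [2.5, 0.0, 2, 8]
forward = explained_variance(actual, predicted)
swapped = explained_variance(predicted, actual)  # differs from `forward`
```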

Example

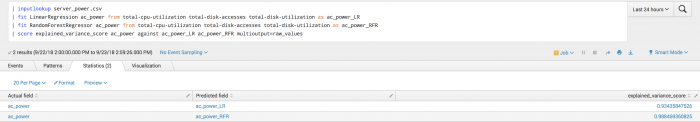

In the following example, we manually specify fields (particularly for the second call to fit and onwards) since a predicted field exists in the data.

| inputlookup server_power.csv | fit LinearRegression ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization as ac_power_LR | fit RandomForestRegressor ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization as ac_power_RFR | score explained_variance_score ac_power_LR against ac_power_RFR

To see each explained variance score compared to the actual score, set multioutput to raw_values.

Mean absolute error score

Calculate the mean absolute error (regression loss) between actual_fields and predicted_fields.

Implements sklearn.metrics.mean_absolute_error. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.mean_absolute_error.html#sklearn.metrics.mean_absolute_error

Further reading: https://en.wikipedia.org/wiki/Mean_absolute_error
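A minimal plain-Python sketch of the metric, together with the two multioutput behaviors listed in the syntax, might look as follows. It is illustrative only: `score_columns` is a hypothetical helper that treats each inner list as one field.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all rows."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def score_columns(actual_cols, predicted_cols, multioutput="raw_values"):
    """Score each field pair, then return every score or their plain average."""
    raw = [mean_absolute_error(a, p) for a, p in zip(actual_cols, predicted_cols)]
    if multioutput == "raw_values":
        return raw
    if multioutput == "uniform_average":
        return sum(raw) / len(raw)
    raise ValueError(f"unsupported multioutput: {multioutput}")


actual_cols = [[3, -0.5, 2, 7], [1, 2, 3, 4]]
predicted_cols = [[2.5, 0.0, 2, 8], [1, 2, 3, 8]]
per_field = score_columns(actual_cols, predicted_cols)
averaged = score_columns(actual_cols, predicted_cols, multioutput="uniform_average")
```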

Parameters:

- Only supports common parameters.

Syntax

...|score mean_absolute_error <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> multioutput=<raw_values(default) | uniform_average>

Syntax constraints

Mean absolute error supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

Example

In the following example, we manually specify fields (particularly for the second call to fit and onwards) since a predicted field exists in the data.

To see each mean absolute error compared to the actual score, multioutput must be set to raw_values. If it is set to another value, a warning message is returned.

| inputlookup power_plant.csv | fit LinearRegression Energy_Output from Temperature Pressure Humidity Vacuum fit_intercept=true as energy_output_LR | fit Lasso Energy_Output from Temperature Pressure Humidity Vacuum as energy_output_LASSO | score mean_absolute_error Energy_Output energy_output_LR against energy_output_LASSO

Mean squared error

Calculate the mean square error (regression loss) between actual_fields and predicted_fields.

Implements sklearn.metrics.mean_squared_error. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.mean_squared_error.html#sklearn.metrics.mean_squared_error

Further reading: https://en.wikipedia.org/wiki/Mean_squared_error
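For a single pair of fields, the metric reduces to the following plain-Python sketch. It is shown for illustration and is not the toolkit's implementation.

```python
def mean_squared_error(actual, predicted):
    """Average of (actual - predicted)^2 over all rows."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


mse = mean_squared_error([3, -0.5, 2, 7], [2.5, 0.0, 2, 8])
```

Because the differences are squared, a single large miss contributes far more than several small ones, unlike mean absolute error.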

Parameters:

- Only supports common parameters.

Syntax

...|score mean_squared_error <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> multioutput=<raw_values(default) | uniform_average>

Syntax constraints

Mean squared error supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

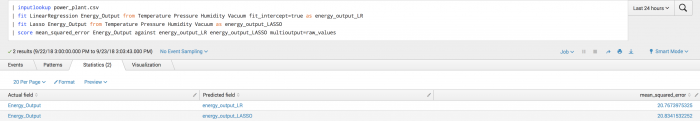

Example

In the following example, we manually specify fields (particularly for the second call to fit and onwards) since a predicted field exists in the data.

| inputlookup power_plant.csv | fit LinearRegression Energy_Output from Temperature Pressure Humidity Vacuum fit_intercept=true as energy_output_LR | fit Lasso Energy_Output from Temperature Pressure Humidity Vacuum as energy_output_LASSO | score mean_squared_error Energy_Output against energy_output_LR energy_output_LASSO

R2 score

Calculate the r2 score between actual_fields and predicted_fields.

Implements sklearn.metrics.r2_score. Learn more here: http://scikit-learn.org/stable/modules/generated/sklearn.metrics.r2_score.html#sklearn.metrics.r2_score

Further reading: https://en.wikipedia.org/wiki/Coefficient_of_determination

Parameters:

- The multioutput parameter default is raw_values.

- The variance_weighted option averages the scores of all outputs, weighted by the variance of each individual output.

- The None option acts the same as the uniform_average option.

Syntax

...|score r2_score <actual_field_1> ... <actual_field_n> against <predicted_field_1> ... <predicted_field_n> multioutput=<raw_values(default) | uniform_average | variance_weighted | None >

Syntax constraints

R2 score supports 1-to-1, n-to-n and 1-to-n comparison syntaxes.

R2 score is not symmetric.
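The asymmetry comes from the denominator, which uses only the spread of the actual values around their mean. The plain-Python sketch below shows the calculation for a single pair of fields and what happens when the arguments are swapped. It is illustrative rather than the toolkit's code and assumes the actual values are not constant.

```python
def r2_score(actual, predicted):
    """1 - (residual sum of squares) / (total sum of squares of actual)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


actual = [3, -0.5, 2, 7]
predicted = [2.5, 0.0, 2, 8]
forward = r2_score(actual, predicted)
swapped = r2_score(predicted, actual)  # differs from `forward`
```

This is why the ground-truth field belongs on the left of against and the predictions on the right.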

Example

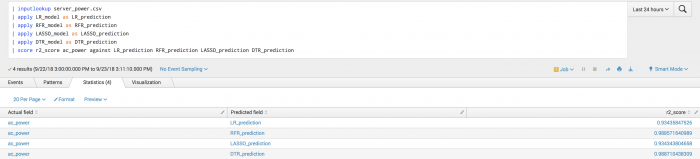

In the following example, we manually specify fields (particularly for the second call to fit and onwards) since a predicted field exists in the data.

| inputlookup server_power.csv | fit LinearRegression ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization into LR_model | fit RandomForestRegressor ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization into RFR_model | fit Lasso ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization into LASSO_model | fit DecisionTreeRegressor ac_power from total-cpu-utilization total-disk-accesses total-disk-utilization into DTR_model

After training several regressors to predict ac_power, we can analyze their predictions compared to the ground-truth.

| inputlookup server_power.csv | apply LR_model as LR_prediction | apply RFR_model as RFR_prediction | apply LASSO_model as LASSO_prediction | apply DTR_model as DTR_prediction | score r2_score ac_power against LR_prediction RFR_prediction LASSO_prediction DTR_prediction
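To make the "not symmetric" constraint concrete, the following is a minimal pure-Python sketch of the coefficient of determination for a single actual/predicted pair. The helper name r2_score_pair and the sample values are illustrative assumptions; the score command itself relies on sklearn.metrics.r2_score.

```python
def r2_score_pair(actual, predicted):
    """Coefficient of determination for one actual/predicted pair of fields."""
    mean_actual = sum(actual) / len(actual)
    # Residual sum of squares: error left over after prediction.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Total sum of squares: spread of the actual values around their own mean.
    # (A constant actual field would make this zero; not handled in this sketch.)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


actual = [3.0, -0.5, 2.0, 7.0]
predicted = [2.5, 0.0, 2.0, 8.0]

forward = r2_score_pair(actual, predicted)   # actual against predicted
reverse = r2_score_pair(predicted, actual)   # arguments swapped
print(round(forward, 4), round(reverse, 4))
```

Because the total sum of squares is computed from the first argument only, swapping the two fields changes the result. This is why the ground-truth field belongs on the left of the against clause.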

Statistical functions (statsfunctions)

Statsfunctions are general statistical methods that either provide statistical information about data, or perform a statistical test on data. A statistic/p-value is not returned.

Statistical functions scoring in the Splunk Machine Learning Toolkit includes the following methods:

- Describe

- Moment

- Pearsonr

- Spearmanr

- Tmean

- Trim

- Tvar

Preprocessing

All statistical functions scoring methods follow the same preprocessing steps:

- Search commands pulled into memory.

- Data preparation including:

- All rows containing NAN values removed prior to computing the score.

- Errors raised should any categorical fields be found.

Describe

Compute several descriptive statistics of the passed array.

Implements scipy.stats.describe. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.describe.html

Parameters

- The ddof parameter default is 1. ddof stands for delta degrees of freedom, which is used only for the variance.

- If the bias parameter is false, then the skewness and kurtosis calculations are corrected for statistical bias.

Syntax

|score describe <a_field_1> <a_field_2> ... <a_field_n> ddof=<int> bias=<true|false>

Syntax constraints

A single sequence of fields.

Example

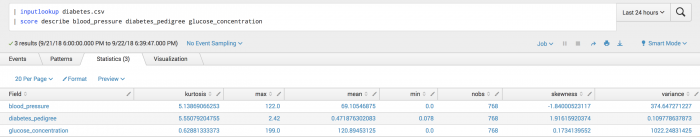

| inputlookup diabetes.csv | score describe blood_pressure diabetes_pedigree glucose_concentration

Example output

Moment

A moment is a specific quantitative measure of the shape of a set of points. It is often used to calculate the coefficients of skewness and kurtosis, which are defined in terms of moments.

Implements scipy.stats.moment. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.moment.html

Further reading: https://en.wikipedia.org/wiki/Moment_(mathematics)

Parameters

- The moment parameter default is 1.

Syntax

|score moment <a_field_1> <a_field_2> ... <a_field_n> moment=<int>

Syntax constraints

A sequence of fields.

Example

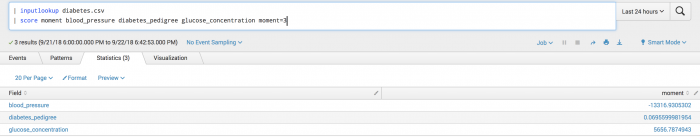

The following example is set up to calculate the third moment of the given data.

| inputlookup diabetes.csv | score moment blood_pressure diabetes_pedigree glucose_concentration moment=3
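As a rough illustration of what the moment parameter selects, the sketch below computes the n-th moment about the mean in plain Python, which is the quantity scipy.stats.moment reports. The function name central_moment and the sample data are assumptions made for this example.

```python
def central_moment(values, order=1):
    """n-th moment about the mean of a list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)


symmetric = [1, 2, 3, 4, 5]
skewed = [1, 1, 1, 5]

print(central_moment(symmetric, 1))  # first central moment is always zero
print(central_moment(symmetric, 2))  # second central moment (biased variance)
print(central_moment(symmetric, 3))  # zero for data symmetric about the mean
print(central_moment(skewed, 3))     # positive for a right-skewed sample
```

The first central moment is zero by construction, so the default moment=1 is rarely informative on its own; orders 2, 3 and 4 relate to variance, skewness and kurtosis respectively.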

Example output

Pearsonr

Calculate a Pearson correlation coefficient and the p-value for testing non-correlation.

Implements scipy.stats.pearsonr. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.pearsonr.html

Further reading: https://en.wikipedia.org/wiki/Pearson_correlation_coefficient

Parameters

- No parameters.

Syntax

|score pearsonr <a_field> against <b_field>

Syntax constraints

A pair of fields (1-to-1 comparison).

Returns

Pearson correlation coefficient and the p-value for testing non-correlation.

Example

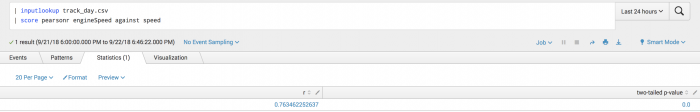

| inputlookup track_day.csv | score pearsonr engineSpeed against speed
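For readers who want to see the coefficient itself, here is a minimal pure-Python sketch of the Pearson correlation between two fields. The helper pearson_r and the sample values are hypothetical; the p-value that the score command also returns is omitted because it requires a distribution function that is not in the standard library.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    # Co-deviation of the two fields and the squared deviation of each.
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


engine_speed = [1.0, 2.0, 3.0, 4.0]
rising = [2.0, 4.0, 6.0, 8.0]    # perfectly linear, increasing
falling = [8.0, 6.0, 4.0, 2.0]   # perfectly linear, decreasing

print(pearson_r(engine_speed, rising))
print(pearson_r(engine_speed, falling))
```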

Example output

Spearmanr

Calculate a Spearman rank-order correlation coefficient and the p-value to test for non-correlation.

Implements scipy.stats.spearmanr. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.spearmanr.html

Further reading: https://en.wikipedia.org/wiki/Spearman%27s_rank_correlation_coefficient

Parameters

- No parameters.

Syntax

|score spearmanr <a_field> against <b_field>

Syntax constraints

A pair of fields (1-to-1 comparison).

Returns

Spearman correlation coefficient and the p-value to test for non-correlation.

Example

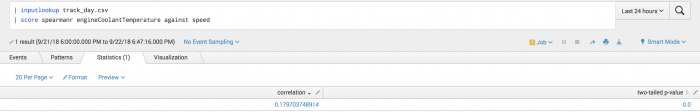

| inputlookup track_day.csv | score spearmanr engineSpeed against speed
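The rank-order idea can be sketched in plain Python: replace each value by its rank (ties share the average rank), then compute an ordinary correlation on the ranks. The helper names below are assumptions for illustration, and the p-value is again left out.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values all receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def correlation(a, b):
    """Plain Pearson correlation, applied here to rank vectors."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def spearman_r(a, b):
    return correlation(average_ranks(a), average_ranks(b))


x = [1, 2, 3, 4, 5]
y = [1, 8, 27, 64, 1000]  # monotonic but far from linear
print(average_ranks([10, 20, 20, 30]))
print(spearman_r(x, y), correlation(x, y))
```

A monotonic but non-linear relationship yields a rank correlation of 1 even though the linear correlation on the raw values is lower, which is the usual reason to prefer spearmanr over pearsonr.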

Example output

Tmean

This function finds the arithmetic mean of given values, ignoring values outside the given limits.

Implements scipy.stats.tmean. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.tmean.html

Further reading: https://en.wikipedia.org/wiki/Truncated_mean

Parameters

- The optional lower_limit parameter default is None. It is the lower bound of data to include. If None, there is no lower bound.

- The optional upper_limit parameter default is None. It is the upper bound of data to include. If None, there is no upper bound.

Syntax

|score tmean <a_field_1> ... <a_field_n> lower_limit=<float|None> upper_limit=<float|None>

A global trimmed-mean is calculated across all fields.

Syntax constraints

A sequence of fields.

Returns

Returns a single value representing the trimmed mean of the data (i.e. the mean ignoring samples outside of the given bounds).

Example

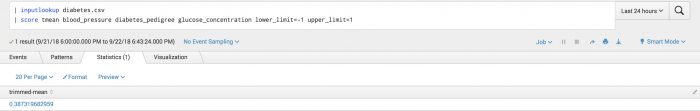

| inputlookup diabetes.csv | score tmean blood_pressure diabetes_pedigree glucose_concentration lower_limit=-1 upper_limit=1
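The "global" behaviour described above can be sketched as follows: values from every field are pooled, values outside the limits are dropped, and one mean is returned. The function name trimmed_mean is hypothetical, and treating the limits as inclusive is an assumption based on the scipy.stats.tmean defaults.

```python
def trimmed_mean(fields, lower_limit=None, upper_limit=None):
    """Single mean over all fields, ignoring values outside the limits."""
    pooled = [v for field in fields for v in field]
    kept = [
        v for v in pooled
        # Assumption: both bounds are inclusive, as in scipy's default.
        if (lower_limit is None or v >= lower_limit)
        and (upper_limit is None or v <= upper_limit)
    ]
    return sum(kept) / len(kept)


field_a = [-2.0, 0.5, 1.0]
field_b = [0.0, 3.0, -1.0]

print(trimmed_mean([field_a, field_b]))                                  # no limits
print(trimmed_mean([field_a, field_b], lower_limit=-1, upper_limit=1))   # clipped
```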

Example output

Trim

Slices off a proportion of items from both ends of an array.

Implements scipy.stats.trimboth. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.trimboth.html

Parameters

- The tail parameter default is both. It determines whether to cut off (trim) data from the left, right or both sides of the distribution.

- The proportiontocut parameter (fraction to cut off) must be specified as a float.

Syntax

|score trim <a_field_1> ... <a_field_n> proportiontocut=<float> tail=<left|right|both>

Syntax constraints

A sequence of fields.

Returns

Trimmed version of the data. Note that the order of the trimmed content is undefined.

Example

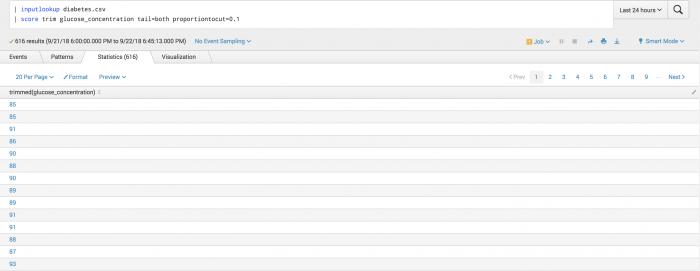

| inputlookup diabetes.csv | score trim glucose_concentration tail=both proportiontocut=0.1
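A small sketch of how proportiontocut and tail interact, written in plain Python. The function trim_values is an illustrative stand-in rather than the toolkit's code; it returns sorted output for readability, whereas the command makes no promise about the order of the trimmed content.

```python
def trim_values(values, proportiontocut, tail="both"):
    """Drop a fraction of the smallest and/or largest values."""
    ordered = sorted(values)
    # Rounds down, so a fractional count trims fewer items rather than more.
    cut = int(proportiontocut * len(ordered))
    if tail == "left":
        return ordered[cut:]
    if tail == "right":
        return ordered[:len(ordered) - cut]
    if tail == "both":
        return ordered[cut:len(ordered) - cut]
    raise ValueError("tail must be 'left', 'right' or 'both'")


glucose = [7, 3, 9, 0, 5, 1, 8, 2, 6, 4]
print(trim_values(glucose, 0.1, tail="both"))
print(trim_values(glucose, 0.1, tail="left"))
print(trim_values(glucose, 0.1, tail="right"))
```

With ten values and proportiontocut=0.1, one value is removed from each trimmed side.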

Example output

Tvar

Compute the trimmed variance. This function computes the sample variance of an array of values, while ignoring values which are outside of given limits.

Implements scipy.stats.tvar. Learn more here: https://docs.scipy.org/doc/scipy-0.14.0/reference/generated/scipy.stats.tvar.html

Parameters

- The optional lower_limit parameter default is None. It is the lower bound of data to include. If None, there is no lower bound.

- The optional upper_limit parameter default is None. It is the upper bound of data to include. If None, there is no upper bound.

Syntax

|score tvar <a1> <a2> ... <an> lower_limit=<float|None> upper_limit=<float|None>

A global trimmed-variance is calculated across all fields.

Syntax constraints

A sequence of fields.

Returns

Returns a single value representing the trimmed variance of the data (i.e. the variance ignoring samples outside of the given bounds).

Example

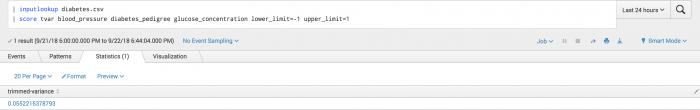

| inputlookup diabetes.csv | score tvar blood_pressure diabetes_pedigree glucose_concentration lower_limit=-1 upper_limit=1

Example output

Statistical testing (statstest)

Statistical testing (statstest) scoring is used to validate/invalidate a statistical hypothesis. The output of statstest scoring methods is a test-specific statistic, and a corresponding p-value.

All statistical-testing methods support the parameter alpha, which indicates the alpha-level (significance level) for the statistical test (i.e. the probability of rejecting the null hypothesis when the null hypothesis is true). The default value is 0.05.
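The role of alpha can be sketched as a simple decision rule applied to the p-value a test returns. The function name reject_null and the strict "less than" comparison are assumptions for illustration; consult the command output for how the toolkit itself reports the decision.

```python
def reject_null(p_value, alpha=0.05):
    """True when the p-value is small enough to reject the null hypothesis."""
    # Assumption: rejection requires p strictly below alpha.
    return p_value < alpha


print(reject_null(0.01))              # below the default alpha of 0.05
print(reject_null(0.20))              # above the default alpha
print(reject_null(0.04, alpha=0.01))  # a stricter alpha keeps the null
```

Lowering alpha makes the test more conservative: the same p-value of 0.04 rejects the null hypothesis at the default level but not at alpha=0.01.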

Statistical testing in the Splunk Machine Learning Toolkit includes the following methods:

- Augmented Dickey-Fuller (Adfuller)

- KS-test (1 sample)

- KS-test (2 samples)

- Kwiatkowski-Phillips-Schmidt-Shin (KPSS)

- Mannwhitneyu

- Normal test

- One-way ANOVA

- T-test (1 sample)

- T-test (2 independent samples)

- T-test (2 related samples)

- Wilcoxon

Overview

- The inputs to the statstest scoring methods are array(s) of data specified by an ordered sequence of fields.

- The arrays for statistical testing methods are referred to as array_a and array_b.

  - In general, statistical testing methods are commutative (i.e. a_field against b_field is equivalent to b_field against a_field).

  - Arrays array_a and array_b are specified by a sequence of fields a_field_1 ... a_field_n and b_field_1 ... b_field_n.

- Statstest scoring methods may operate on a single array (e.g. ..| score describe <array_a>) or 2 arrays (e.g. ..| score ks_2samp <array_a> against <array_b>).

Preprocessing

All statistical testing scoring methods follow the same preprocessing steps:

- Search commands pulled into memory.

- Data preparation including:

- All rows containing NAN values removed prior to computing the score.

- Errors raised should any categorical fields be found.

For scoring methods requiring 2 arrays, use the against (equivalently, ~) clause to separate the arrays.

Augmented Dickey-Fuller (Adfuller)

The Augmented Dickey-Fuller test can be used to test for a unit root in a univariate process in the presence of serial correlation.

Implements statsmodels.tsa.stattools.adfuller. Learn more here: https://www.statsmodels.org/dev/generated/statsmodels.tsa.stattools.adfuller.html

Further reading: https://en.wikipedia.org/wiki/Augmented_Dickey%E2%80%93Fuller_test

Parameters

- The maxlag parameter default is 10. It determines the maximum lag included in the test.

- The regression parameter default is c. It determines the constant and trend order to include in the regression.

  - c: constant only (default).

  - ct: constant and trend.

  - ctt: constant, and linear and quadratic trend.

  - nc: no constant, no trend.

- The autolag parameter default is AIC.

  - If None, then maxlag lags are used.

  - If AIC or BIC, then the number of lags is chosen to minimize the corresponding information criterion.

  - The t-stat option starts with maxlag and drops a lag until the t-statistic on the last lag length is significant using a 5%-sized test.

- The alpha parameter default is 0.05.

Null hypothesis

The null hypothesis of the Augmented Dickey-Fuller test is that there is a unit root, with the alternative that there is no unit root.

Syntax

|score adfuller <field> autolag=<aic|bic|t-stat|none> regression=<c|ct|ctt|nc> maxlag=<int> alpha=<float>

Syntax constraints

A single field.

Example

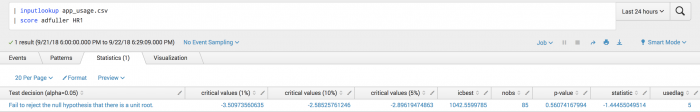

| inputlookup app_usage.csv | score adfuller HR1

Example output

KS-test (1 sample)

Test whether the specified field is statistically identical to the specified cumulative distribution function (cdf).

Implements scipy.stats.kstest. Learn more here: https://docs.scipy.org/doc/scipy-0.14.0/reference/generated/scipy.stats.kstest.html

Further reading: https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test

Parameters

Each cdf has a required set of parameters that must be specified.

| Parameter | Required information |
|---|---|
| cdf = chi2 | df <int> loc <float> scale <float> |
| cdf = lognorm | s <float> loc <float> scale <float> |
| cdf = norm | loc <float> scale <float> |

Null hypothesis

The sample (<field>) distribution is identical to the specified distribution (cdf, with cdf params).

Syntax

|score kstest <field> cdf=<norm | lognorm | chi2> <required_cdf_parameters> alpha=<float>

All required cdf parameters must be supplied. See the Parameters table above.

Syntax constraints

A single field.

Example

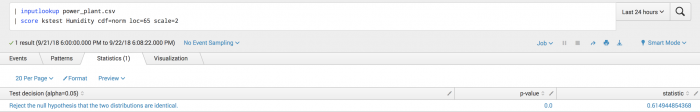

| inputlookup power_plant.csv | score kstest Humidity cdf=norm loc=65 scale=2

Example output

In the following visualization we can reject the hypothesis that the field Humidity is identical to a normal distribution with mean 65 and standard deviation 2.

KS-test (2 samples)

Computes the Kolmogorov-Smirnov statistic on two samples to test if two independent samples are drawn from the same distribution.

Implements scipy.stats.ks_2samp. Learn more here: https://docs.scipy.org/doc/scipy-0.14.0/reference/generated/scipy.stats.ks_2samp.html

Further reading: https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test#Two-sample%20Kolmogorov%E2%80%93Smirnov%20test

Parameters

- The alpha parameter default is 0.05.

Null hypothesis

This is a two-sided test for the null hypothesis that two independent samples are drawn from the same continuous distribution.

Syntax

|score ks_2samp <a_field> against <b_field> alpha=<float>

Syntax constraints

A single pair of fields (1-to-1 comparison).

Example

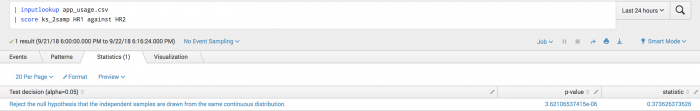

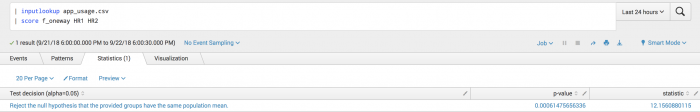

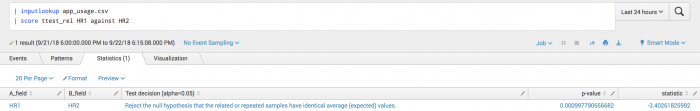

In the following example we want to see if the two measurements of the HR field are drawn from the same distribution.

| inputlookup app_usage.csv | score ks_2samp HR1 against HR2

Example output

In the following output we reject the null hypothesis that the two samples were drawn from the same distribution.

Kwiatkowski-Phillips-Schmidt-Shin (KPSS)

Computes the Kwiatkowski-Phillips-Schmidt-Shin (KPSS) test for the null hypothesis that the field (<field>) is level or trend stationary.

Implements statsmodels.tsa.stattools.kpss. Learn more here: https://www.statsmodels.org/dev/generated/statsmodels.tsa.stattools.kpss.html

Further reading: https://en.wikipedia.org/wiki/KPSS_test

Parameters

- The regression parameter default is c. It indicates the null hypothesis for the KPSS test.

  - c indicates that the data is stationary around a constant.

  - ct indicates that the data is stationary around a trend.

- The lags parameter default is None. It indicates the number of lags to be used. If None, it is set to int(12 * (n / 100)**(1 / 4)), where n is the number of samples.

- The alpha default is 0.05.
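The default lag count grows slowly with the sample size. The following sketch simply evaluates the formula quoted above for a few values of n; the function name default_kpss_lags is hypothetical.

```python
def default_kpss_lags(n_samples):
    """Number of lags used when the lags parameter is left as None."""
    return int(12 * (n_samples / 100) ** (1 / 4))


for n in (50, 100, 500):
    print(n, default_kpss_lags(n))
```

Because of the fourth root, a tenfold increase in the number of samples raises the lag count by less than a factor of two.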

Null hypothesis

The null hypothesis of the Kwiatkowski-Phillips-Schmidt-Shin test is that the field (<field>) is level or trend stationary.

Syntax

|score kpss <field> regression=<c | ct> lags=<int> alpha=<float>

Syntax constraints

A single field.

Example

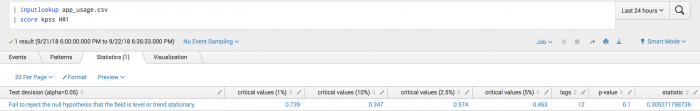

| inputlookup app_usage.csv | score kpss HR1

Example output

MannWhitneyU

Used to test whether a randomly selected value from one sample will be less or greater than a randomly selected value from another sample.

Implements scipy.stats.mannwhitneyu. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.mannwhitneyu.html

Further reading: https://en.wikipedia.org/wiki/Mann%E2%80%93Whitney_U_test

Parameters

- The use_continuity parameter determines whether a continuity correction (1/2) should be taken into account. Its default is true.

- The alternative parameter determines whether to get the p-value for the one-sided hypothesis (less or greater) or for the two-sided hypothesis (two-sided). Its default is two-sided.

- The alpha parameter default is 0.05.

Null hypothesis

It is equally likely that a randomly selected value from one sample is less than or greater than a randomly selected value from another sample.

Syntax

|score mannwhitneyu <a_field> against <b_field> use_continuity=<true|false> alternative=<less|two-sided|greater> alpha=<float>

Syntax constraints

A single pair of fields (1-to-1).

Example

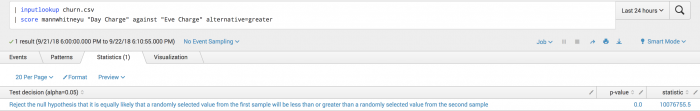

| inputlookup churn.csv | score mannwhitneyu "Day Charge" against "Eve Charge" alternative=greater

Example output

In the following visualization we can conclude that a random sample from Day Charge is likely greater than a random sample in Eve Charge.

Normal-test

Used to test whether a sample differs from a normal distribution.

Implements scipy.stats.normaltest. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.normaltest.html

Further reading:

- D’Agostino, R. B. (1971), “An omnibus test of normality for moderate and large sample size”, Biometrika, 58, 341-348

- D’Agostino, R. and Pearson, E. S. (1973), “Tests for departure from normality”, Biometrika, 60, 613-622

Parameters:

- The alpha parameter default is 0.05.

Null hypothesis

The sample (<a_field_1>, ..., <a_field_n>) comes from a normal distribution.

Syntax

...|score normaltest <field_1> <field_2> ... <field_n> alpha=<float>

An array (array_a) is specified by the ordered sequence of fields (<a1>, <a2>, ..., <an>).

Syntax constraints

A single array (set of fields).

Example

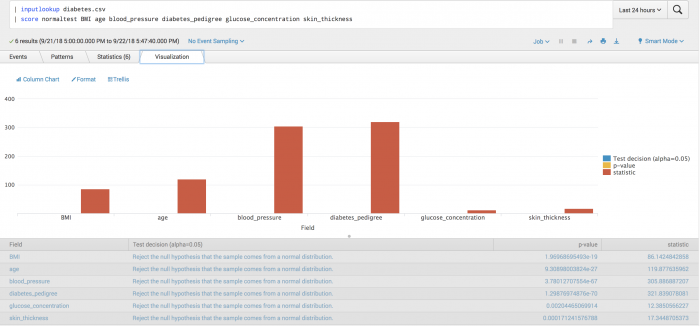

| inputlookup diabetes.csv | score normaltest BMI age blood_pressure diabetes_pedigree glucose_concentration skin_thickness | table field pvalue

Example output

The following visualization tests whether the distribution of each field differs from a normal distribution. From the plot we can deduce that glucose_concentration is the field most likely to come from a normal distribution.

One-way ANOVA

The one-way ANOVA tests the null hypothesis that two or more groups have the same population mean.

Implements scipy.stats.f_oneway. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.f_oneway.html

Further reading: https://en.wikipedia.org/wiki/One-way_analysis_of_variance

Parameters

- The alpha parameter default is 0.05.

Null hypothesis

The specified groups (field_1, ..., field_n) have the same population mean.

Syntax

|score f_oneway <field_1> <field_2> ... <field_n> alpha=<float>

Syntax constraints

A single array (set of fields).

Example

| inputlookup app_usage.csv | score f_oneway HR1 HR2

Example output

T-test (1 sample)

Test whether the expected value (mean) of a sample of independent observations is equal to the specified population mean.

Implements scipy.stats.ttest_1samp. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ttest_1samp.html

Further reading: http://www.biostathandbook.com/onesamplettest.html

Parameters:

- The popmean parameter has no default and must be specified. It is the population mean in the null hypothesis.

- The alpha parameter default is 0.05.

Null hypothesis

The expected value (mean) of the specified samples of independent observations (field_1, ... ,field_n) is equal to the given population mean (popmean).

Syntax

...|score ttest_1samp <field_1> ... <field_n> popmean=<float> alpha=<float>

Syntax constraints

A single array (set of fields).

Example

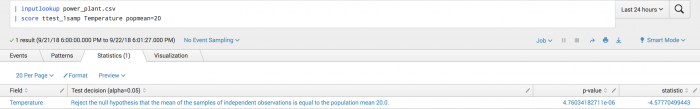

The following example tests whether the sample mean differs from an expected population mean.

| inputlookup power_plant.csv | score ttest_1samp Temperature popmean=20

Example output

The negative statistic indicates that the sample mean is less than the hypothesized mean of 20.
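The sign of the statistic follows directly from its definition. As a minimal sketch, the function below computes the one-sample t statistic with the standard library only; the name t_statistic_1samp and the temperature values are made up for illustration, and the p-value is omitted because it needs the Student's t distribution.

```python
import math
import statistics


def t_statistic_1samp(sample, popmean):
    """t = (sample mean - popmean) / (sample std / sqrt(n))."""
    n = len(sample)
    # statistics.stdev uses n - 1 in the denominator (sample standard deviation).
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.mean(sample) - popmean) / standard_error


temperature = [18.0, 19.0, 20.0, 21.0, 17.0]  # sample mean is 19
t_stat = t_statistic_1samp(temperature, popmean=20)
print(round(t_stat, 4))
```

A sample mean below popmean gives a negative statistic, and a sample mean above it gives a positive one.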

T-test (2 independent samples)

Used to test if two independent samples come from the same distribution.

Implements scipy.stats.ttest_ind. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ttest_ind.html

Further reading: https://en.wikipedia.org/wiki/Student%27s_t-test#Independent_two-sample_t-test

Parameters

- The equal_var parameter default is True.

  - If True (default), perform a standard independent 2 sample test that assumes equal population variances.

  - If False, perform Welch’s t-test, which does not assume equal population variance.

- The alpha default is 0.05.

Null hypothesis

The null hypothesis is that the paired samples a_field_i and b_field_i (independent) have identical average (expected) values. This test assumes that the fields have identical variances by default.

Syntax

|score ttest_ind <a_field_1> ... <a_field_n> against <b_field_1> ... <b_field_n> equal_var=<true|false> alpha=<float>

Syntax constraints

Two arrays specified by two ordered sequences of fields (1-to-1, n-to-n and 1-to-n comparison syntaxes).

Example

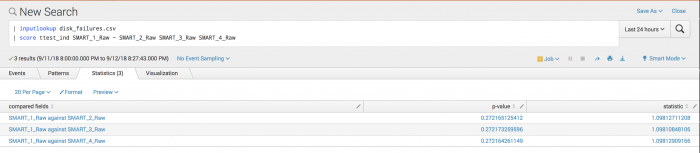

In the following example we want to analyze disk failures to see if disks are equally likely to fail, or some disks are more likely to cause failure.

| inputlookup disk_failures.csv | score ttest_ind SMART_1_Raw against SMART_2_Raw SMART_3_Raw SMART_4_Raw

Disk failures are assumed to be independent across disks.

Example output

In the following output, with an alpha of 0.05, we cannot reject the null hypothesis. It does not seem that disks 2, 3 and 4 are failing more than disk 1. All are close to each other.

T-test (2 related samples)

Used to test if two related samples come from the same distribution.

Implements scipy.stats.ttest_rel. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.ttest_rel.html

Further reading: https://en.wikipedia.org/wiki/Student%27s_t-test#Dependent%20t-test%20for%20paired%20samples

Parameters

- The alpha parameter default is 0.05.

Null hypothesis

The null hypothesis is that the paired samples a_field_i and b_field_i (related, as in two measurements of the same thing) have identical average (expected) values.

Syntax

|score ttest_rel <a_field_1> ... <a_field_n> against <b_field_1> ... <b_field_n> alpha=<float>

Syntax constraints

Two arrays specified by two ordered sequences of fields (1-to-1, n-to-n and 1-to-n comparison syntaxes).

Example

In the following example we want to see if the two measurements of the HR field taken at the same time are statistically identical or not.

| inputlookup app_usage.csv | score ttest_rel HR1 against HR2

Example output

In the following output we can reject the null hypothesis and conclude that the two measurements are statistically different, potentially indicating a shift from equilibrium.

Wilcoxon

Used to test if two related samples come from the same distribution.

Implements scipy.stats.wilcoxon. Learn more here: https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.wilcoxon.html

Further reading: https://en.wikipedia.org/wiki/Wilcoxon_signed-rank_test

Parameters

- The zero_method parameter default is wilcox.

  - Use pratt to include zero-differences in the ranking process (more conservative).

  - Use wilcox to discard zero-differences.

  - Use zsplit to split zero ranks between positive and negative.

- The correction parameter default is False. If True, apply continuity correction by adjusting the Wilcoxon rank statistic by 0.5 towards the mean value when computing the z-statistic.

- The alpha parameter default is 0.05.

Null hypothesis

The null hypothesis is that two related paired samples come from the same distribution. In particular, it tests whether the distribution of the differences x - y is symmetric about zero.

Syntax

|score wilcoxon <a_field> against <b_field> zero_method=<pratt | wilcox | zsplit> correction=<true | false> alpha=<float>

Syntax constraints

A single pair of fields (1-to-1).

Example

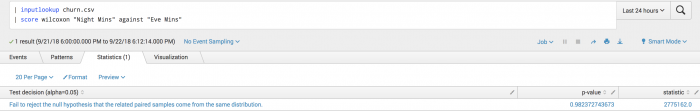

In the following example we want to see if the distribution of nighttime minutes used differs from the distribution of evening minutes:

| inputlookup churn.csv | score wilcoxon "Night Mins" against "Eve Mins"

Example output

K-fold scoring

Cross-validation is a general technique aimed to assess how well a statistical model generalizes on an independent dataset. That is, cross-validation will tell you how well your machine learning model is expected to perform on data that it has not been trained on. The scores obtained from K-fold cross-validation are generally a less biased / less optimistic estimate of the model performance than a standard train/test split.

There are many types of cross validation, but k-fold cross validation (kfold_cv) is one of the most common.

The kfold_cv command does not use the score command, but operates like a scoring method.

Cross validation is typically used for:

- Comparing two or more algorithms against each other for selecting the best choice on a particular dataset.

- Comparing different choices of hyper-parameters on the same algorithm for choosing the best hyper-parameters for a particular dataset.

- An improved method over a train/test split for quantifying model generalization.

Cross validation is not suited for time-series:

- In situations where the data is ordered (eg. time-series), cross validation is not well suited since the training data is shuffled. In these situations, other methods are better suited (eg. Forward Chaining).

- The simplest implementation would probably be to wrap sklearn's Time Series Split. Learn more here: https://en.wikipedia.org/wiki/Forward_chaining

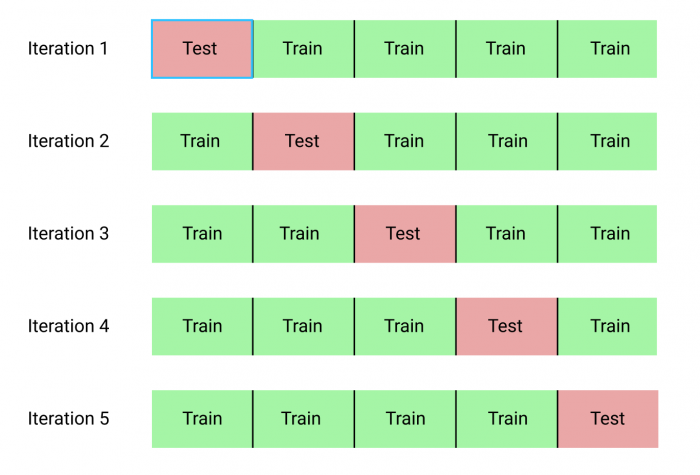

In kfold_cv, the training set is randomly partitioned into k equal-sized subsamples. Then, each sub-sample takes a turn at becoming the validation (test) set, predicted by the other k-1 training sets. Each sample is used exactly once in the validation set, and the variance of the resulting estimate is reduced as k is increased. The disadvantage of kfold_cv is that k different models have to be trained, leading to long execution times for large datasets and complex models.

In this way, we can obtain k performance metrics (one for each train/test split). These k performance metrics can then be averaged over to obtain a single estimate of how well the model will generalize to unseen data.
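The partitioning described above can be sketched in plain Python. This is an illustrative outline only, not the toolkit's implementation (which also stratifies for classifiers); the function name kfold_indices, the seed argument, and the per-fold scores are assumptions made for the example.

```python
import random


def kfold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists for k shuffled, near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Every k-th index goes to the same fold, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(kfold_indices(n_samples=10, k=5))
for train, test in splits:
    print("train:", sorted(train), "test:", sorted(test))

# Hypothetical per-fold accuracies, averaged into a single estimate.
fold_scores = [0.80, 0.90, 0.85, 0.75, 0.95]
average_score = sum(fold_scores) / len(fold_scores)
print(round(average_score, 2))
```

Each sample appears in exactly one test fold, and a separate model would be trained on each of the k training sets.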

Syntax

The kfold_cv command is applicable to all classification and regression algorithms, and can be appended to the end of an SPL search as:

..| fit <classification | regression algo> <targetVariable> from <featureVariables> [options] kfold_cv=<int>

Where kfold_cv=<int> specifies that k=<int> folds will be used. When a classification algorithm is specified, stratified k-fold will be used instead of k-fold. In stratified k-fold, each fold contains approximately the same percentage of samples for each class.

kfold_cv cannot be used when saving a model.

Output

The kfold_cv command returns performance metrics on each fold using the same model (i.e. same algorithm, same hyper-parameters) specified in the SPL. Its only function is to give the user insight into how well their model generalizes; it does not perform any model selection or hyper-parameter tuning. In this way, the current implementation can be seen as a scoring method.

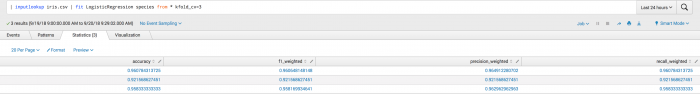

Example 1

The kfold_cv command used in classification. Note that the output is a set of metrics for each fold (accuracy, f1_weighted, precision_weighted, recall_weighted)

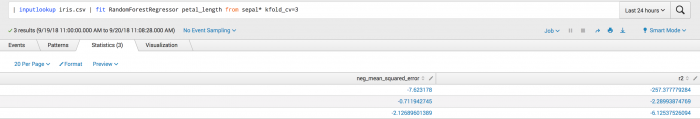

Example 2

The kfold_cv command used in regression. Note that the output is a set of metrics for each fold (neg_mean_squared_error, r^2)


This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 4.0.0
