About LLM-RAG

As technologies around large language models (LLMs) evolve, several key challenges have emerged:

- How to use LLM services securely.

- How to generate beneficial and accurate answers for your organization.

Current LLM services are commonly provided as Software-as-a-Service (SaaS) products, with customer data and searches sent through public clouds. This practice poses significant obstacles for many organizations aiming to use LLMs securely.

Additionally, LLMs generate responses based on their training data, making it challenging to produce answers that are relevant to customers' specific use cases without the latest information or access to internal knowledge.

To use LLM services securely, host your LLMs in an on-premises environment, where you have full control over your data. To generate beneficial and accurate answers, you can adopt retrieval-augmented generation (RAG).

RAG uses customizable knowledge bases as additional contextual information to enhance the LLMs' responses. Typically, materials containing the additional knowledge, such as internal documents and system log messages, are vectorized and stored in a vector database (vectorDB). When a search is run, related knowledge content is identified through vector similarity search and added to the context of the LLM prompt to assist the generation process.
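The retrieval flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the DSDL implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory `store` list stands in for a vector database, and all function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase bag-of-words counts (assumption: stands in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, store, top_k=1):
    """Return the top_k stored texts most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


def build_prompt(query, contexts):
    """Add the retrieved knowledge to the prompt as context."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Use the following context to answer.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


# Hypothetical internal knowledge, vectorized once and kept in memory.
documents = [
    "The VPN gateway restarts every Sunday at 02:00 for maintenance.",
    "Expense reports are due on the fifth business day of each month.",
]
store = [(doc, embed(doc)) for doc in documents]

question = "When does the VPN gateway restart?"
top = retrieve(question, store, top_k=1)
prompt = build_prompt(question, top)
print(prompt)
```

In a real deployment the embedding model produces dense vectors and the vector database performs the similarity search, but the sequence is the same: vectorize the knowledge, find the closest entries for a query, and prepend them to the LLM prompt.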

Only Docker deployments are supported for running LLM-RAG on the Splunk App for Data Science and Deep Learning (DSDL).

LLM-RAG features

LLM-RAG provides a compute command, which accelerates DSDL searches, as well as dashboards to extend the Splunk App for Data Science and Deep Learning (DSDL). The LLM-RAG features have 3 key components:

- A DSDL container that includes the Python scripts and support packages.

- An LLM module that runs LLMs locally.

- A vector database (DB) to store vectorized knowledge bases.

In DSDL you can find the dashboards under the Assistants tab. The dashboards are grouped by the following operations:

- Encoding data to a vector database.

- Searching LLM with vector data.

LLM-RAG architecture

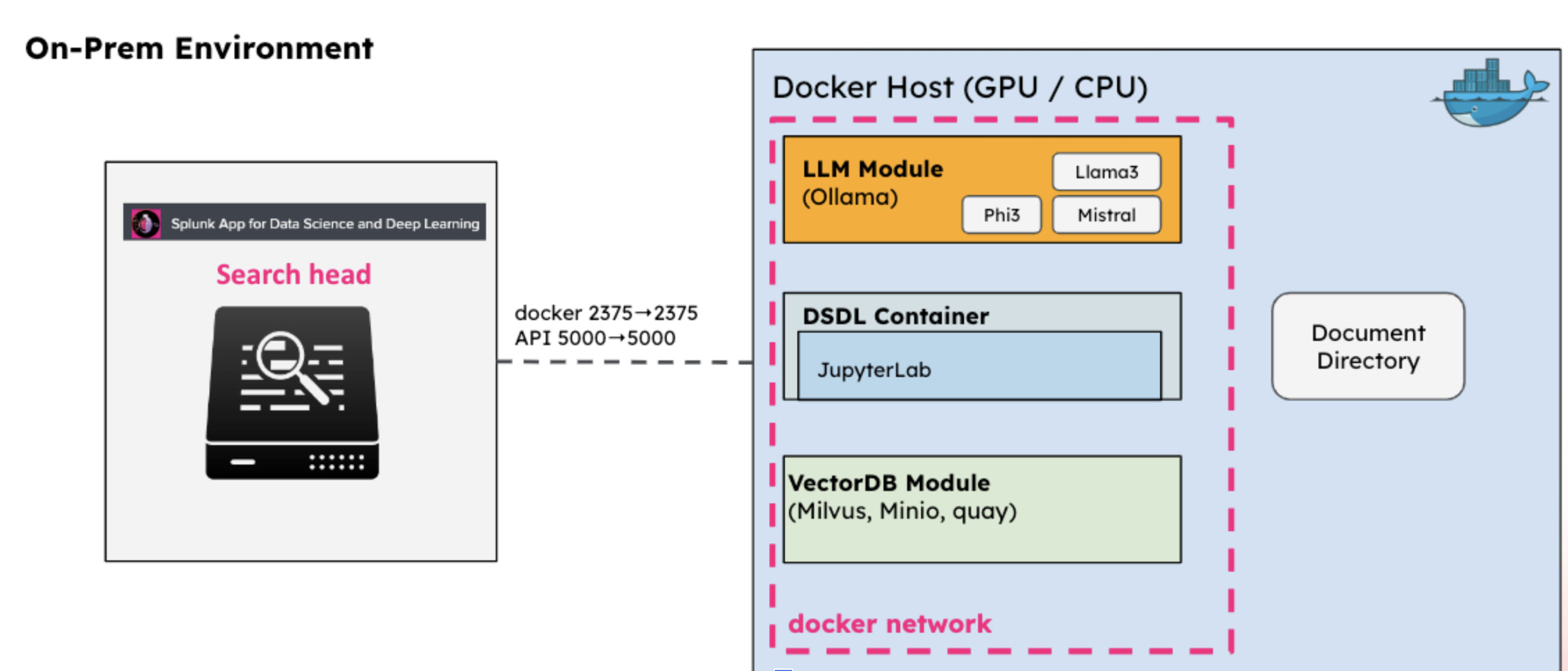

The following image shows the architecture of an on-premises LLM-RAG system:

The LLM-RAG system includes an LLM module using Ollama, a DSDL container, and a vectorDB module using Milvus. The DSDL container is the framework that orchestrates the LLM and vectorDB modules for RAG operations.

To learn more about Ollama and Milvus products, see https://ollama.com/ and https://milvus.io/.

LLM-RAG use cases

The LLM-RAG features, together with their assistive guidance, support the following use cases:

| Use case | Description |
|---|---|
| Standalone LLM | Use Standalone LLM for direct use of the LLM for Q&A inference or chat. |
| Standalone VectorDB | Use Standalone VectorDB when you want to encode machine data and conduct similarity searches. |
| Document-based LLM-RAG | Use Document-based LLM-RAG when you want to encode arbitrary documents and use them as additional context when prompting LLMs. Model generation is based on an internal knowledge database. |
| Function Calling LLM-RAG | Use Function Calling LLM-RAG for the LLM to execute customizable function tools to obtain contextual information for response generation. Function Calling LLM-RAG provides example tools for searching Splunk data and searching vector database collections. |
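
The function calling pattern can be illustrated with a short Python sketch. It assumes the LLM emits a JSON object naming a tool and its arguments. That format, the `search_logs` tool, the `TOOLS` registry, and the sample log lines are all hypothetical and are not the tools shipped with DSDL.

```python
import json


def search_logs(keyword):
    """Hypothetical tool: return sample log lines that contain the keyword."""
    sample_logs = [
        "ERROR disk usage at 95% on host-a",
        "INFO backup completed on host-b",
    ]
    return [line for line in sample_logs if keyword.lower() in line.lower()]


# Registry of customizable tools the model is allowed to call (assumption).
TOOLS = {"search_logs": search_logs}


def run_tool_call(tool_call_json):
    """Parse a tool call emitted by the model and run the matching function."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Hypothetical model output requesting a tool call.
model_output = '{"name": "search_logs", "arguments": {"keyword": "error"}}'
context = run_tool_call(model_output)
print(context)
```

The tool result is then passed back to the LLM as contextual information for generating the final response.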

Learn more

See the following for additional information:

- For more information on the compute command, see About the compute command.

- For more information on LLM-RAG features, see Encode data into a vector database, and Query LLM with vector data.

- For more information on LLM-RAG use cases, see LLM-RAG use cases.


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0
