Encode data into a vector database

To encode data into a vector database, complete these tasks:

- Encode documents: Vectorize documents and store the vectors in Milvus collections so that you can use the documents in later stages of the LLM-RAG process.

- Encode data from the Splunk platform: Vectorize Splunk data and store the vectors in Milvus collections so that you can use the data in later stages of the LLM-RAG process.

- Conduct vector search on log data: Run a vector similarity search on Splunk log data.

- Manage and explore your vector database: List your collections, view a collection's schema and vector count, and delete collections.

Encode documents

Go to the Encode documents page:

- In the Splunk App for Data Science and Deep Learning (DSDL), go to Assistants.

- Select LLM-RAG, then Encoding Data to Vector Database, and then Encode documents.

Parameters

The Encode documents page has the following parameters:

| Parameter | Description |
|---|---|
| data_path | The directory on the Docker volume where you store the raw document data. Sub-directories are read automatically, and CSV, PDF, TXT, DOCX, XML, and IPYNB files are encoded. |
| embedder_name | The name of the sentence-transformers embedding model. Use all-MiniLM-L6-v2 for English and intfloat/multilingual-e5-large for other languages. |
| use_local | Whether the embedding models are stored locally. When set to 0, the embedder model is downloaded from the internet. When set to 1, the command assumes that the embedder model files are stored in /srv/app/model/data/. For more details, see Set up LLM-RAG in an air-gapped environment. |
| embedder_dimension | Dimensionality of the vectors produced by the embedder model. Set to 384 for all-MiniLM-L6-v2 and 1024 for intfloat/multilingual-e5-large. |
| collection_name | A unique name for the collection to store the vectors in. The name must start with a letter or a number and contain no spaces. If you are adding data to an existing collection, make sure to use the same embedder model that the collection was created with. |
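
You can check the parameter rules in the preceding table before you run the command. The following Python sketch is illustrative only and isn't part of DSDL. It encodes the two embedder and dimension pairs documented on this page, plus the documented collection naming rule. The function name check_encoder_params is hypothetical. Treating any whitespace as a "space" is an assumption, and Milvus might impose naming restrictions beyond the rule stated here.

```python
import re

# Embedder/dimension pairs documented on this page.
EMBEDDER_DIMENSIONS = {
    "all-MiniLM-L6-v2": 384,
    "intfloat/multilingual-e5-large": 1024,
}

# Documented rule: start with a letter or a number, contain no spaces.
# Assumption: any whitespace is rejected; Milvus may add further restrictions.
COLLECTION_NAME = re.compile(r"[A-Za-z0-9]\S*")


def check_encoder_params(embedder_name, embedder_dimension, use_local, collection_name):
    """Hypothetical pre-flight check. Returns a list of problems (empty if none)."""
    problems = []
    expected = EMBEDDER_DIMENSIONS.get(embedder_name)
    if expected is None:
        problems.append(f"no documented dimension for embedder {embedder_name!r}")
    elif embedder_dimension != expected:
        problems.append(
            f"embedder_dimension should be {expected} for {embedder_name}, "
            f"not {embedder_dimension}"
        )
    if use_local not in (0, 1):
        problems.append("use_local must be 0 or 1")
    if not COLLECTION_NAME.fullmatch(collection_name):
        problems.append(f"invalid collection_name {collection_name!r}")
    return problems


print(check_encoder_params("all-MiniLM-L6-v2", 384, 1, "document_collection_example"))  # []
print(check_encoder_params("all-MiniLM-L6-v2", 1024, 1, "my collection"))
```

The second call reports both the dimension mismatch and the space in the collection name. A mismatched dimension is the kind of error this check is meant to catch before encoding starts.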

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command: `| makeresults | fit MLTKContainer algo=llm_rag_document_encoder data_path="/srv/notebooks/data/Buttercup/" embedder_name="all-MiniLM-L6-v2" use_local=1 embedder_dimension=384 collection_name="document_collection_example" _time into app:llm_rag_document_encoder as Encoding`

- Run the `compute` command: `| makeresults | compute algo:llm_rag_document_encoder data_path:"/srv/notebooks/data/Buttercup/" embedder_name:"all-MiniLM-L6-v2" use_local:1 embedder_dimension:384 collection_name:"document_collection_example" _time`

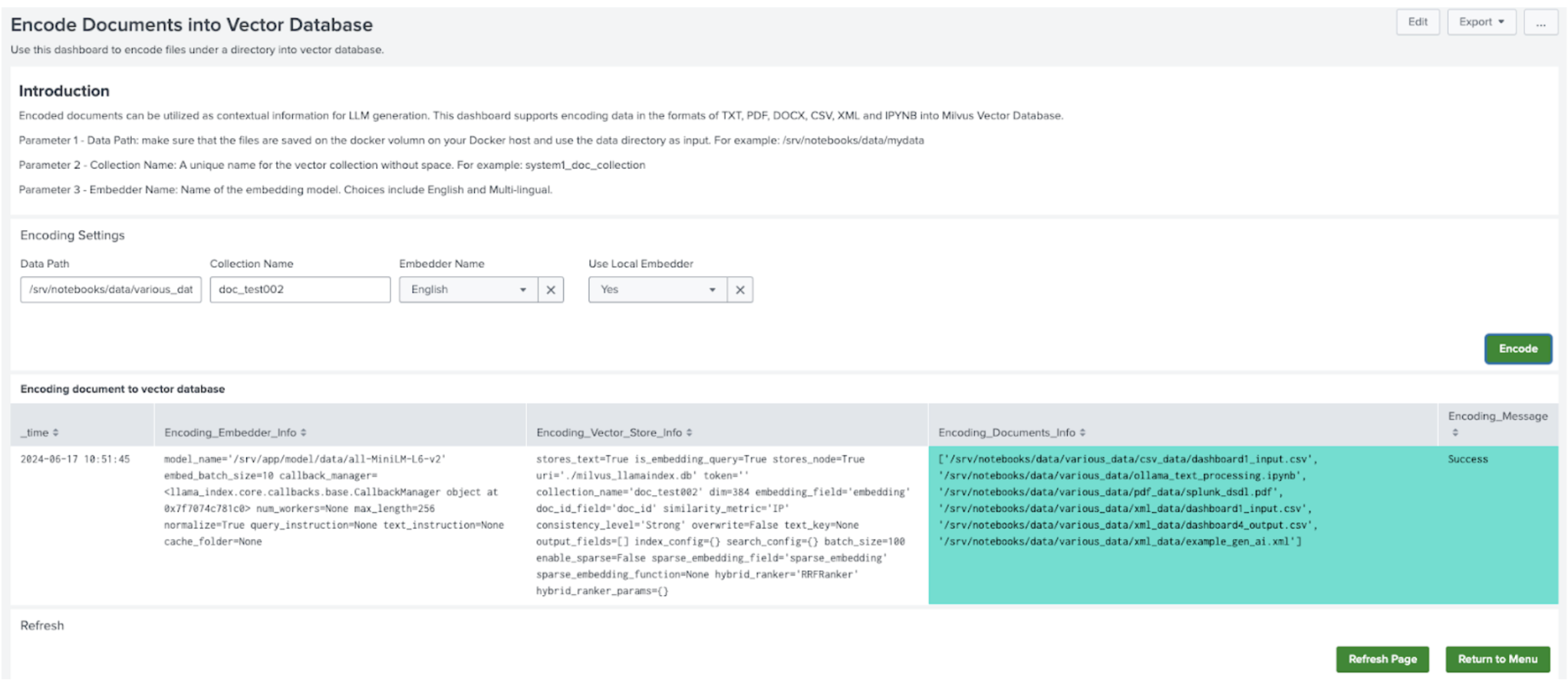

Dashboard view

The following image shows the dashboard view for the Encode documents page:

The dashboard view includes the following components:

| Dashboard component | Description |
|---|---|
| Data Path | The directory on the Docker volume where you store the raw document data. Sub-directories are read automatically, and files with the extensions .CSV, .PDF, .TXT, .DOCX, .XML, and .IPYNB are encoded. |
| Collection Name | A unique name for the collection to store the vectors in. The name must start with a letter or a number and contain no spaces. If you are adding data to an existing collection, make sure to use the same embedder model. |
| Embedder Name | Choose English or Multilingual, depending on your use case. |
| Use Local Embedder | Whether the embedder has been downloaded and saved on your Docker volume. |
| Encode | Select to start encoding after you complete all the inputs. |
| Refresh Page | Reset all the tokens on this dashboard. |
| Return to Menu | Return to the main menu. |
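
To preview which files under a data path would be picked up, you can apply the documented rule yourself. Sub-directories are read recursively, and only files with the listed extensions are kept. This Python sketch is a hypothetical helper, not DSDL's document loader. Matching extensions case-insensitively is an assumption.

```python
from pathlib import Path

# Extensions the page lists as encoded. Case-insensitive matching is an assumption.
ENCODED_EXTENSIONS = {".csv", ".pdf", ".txt", ".docx", ".xml", ".ipynb"}


def files_to_encode(data_path):
    """Hypothetical helper: recursively list files under data_path with an encoded extension."""
    root = Path(data_path)
    return sorted(
        path
        for path in root.rglob("*")  # rglob also descends into sub-directories
        if path.is_file() and path.suffix.lower() in ENCODED_EXTENSIONS
    )
```

For example, running `files_to_encode("/srv/notebooks/data/Buttercup/")` inside the container would list the files that the example data path on this page would cover, under the assumptions above.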

Encode data from the Splunk platform

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Encoding Data to Vector Database, and then select Encode data from Splunk.

Append the command to a search pipeline that produces a table containing the field of log data you want to encode, plus any metadata fields you want to add. Don't use embeddings or label as field names in the table, because the vector database uses these two field names by default.

Encoding too much data at once can cause a failure. Keep the cardinality of logs under 30,000.
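
Before you append the encoder command, you can check the table for the two reserved field names and for the size limit. The following sketch represents the search results as a list of Python dictionaries, one per row. It reads "cardinality" as the number of rows. Both choices are assumptions made for illustration, and check_log_table is a hypothetical helper.

```python
# Field names the vector database uses by default, per this page.
RESERVED_FIELDS = {"embeddings", "label"}
# Documented limit. Assumption: "cardinality" is read here as the number of rows.
MAX_ROWS = 30_000


def check_log_table(rows):
    """Hypothetical pre-check on search results given as a list of dicts (one per row)."""
    problems = []
    field_names = set()
    for row in rows:
        field_names.update(row.keys())
    clashes = sorted(field_names & RESERVED_FIELDS)
    if clashes:
        problems.append("rename reserved field(s): " + ", ".join(clashes))
    if len(rows) >= MAX_ROWS:
        problems.append(f"{len(rows)} rows; keep the table under {MAX_ROWS}")
    return problems
```

If a clash turns up, you can resolve it in the search itself, for example with the SPL rename command, before you run the encoder.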

Parameters

The Encode data from Splunk page has the following parameters:

| Parameter | Description |
|---|---|
| embedder_name | The name of the sentence-transformers embedding model. Use all-MiniLM-L6-v2 for English and intfloat/multilingual-e5-large for other languages. |
| use_local | Whether the embedding models are stored locally. When set to 0, the embedder model is downloaded from the internet. When set to 1, the command assumes that the embedder model files are stored in /srv/app/model/data/. For more details, see Set up LLM-RAG in an air-gapped environment. |
| embedder_dimension | Dimensionality of the vectors produced by the embedder model. Set to 384 for all-MiniLM-L6-v2 and 1024 for intfloat/multilingual-e5-large. |
| collection_name | A unique name for the collection to store the vectors in. The name must start with a letter or a number and contain no spaces. If you are adding data to an existing collection, make sure to use the same embedder model. |
| label_field_name | The name of the field you want to encode. All the other fields in the table are treated as metadata in the collection. |
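
The following sketch illustrates how label_field_name divides each row. The named field becomes the text that is encoded, and every other field becomes metadata. It's a hypothetical illustration of the documented behavior, not the encoder's implementation, and the function name split_row is made up for this example.

```python
def split_row(row, label_field_name):
    """Hypothetical illustration: return (text to encode, metadata) for one row."""
    if label_field_name not in row:
        raise KeyError(f"row has no field named {label_field_name!r}")
    text = row[label_field_name]
    # Every other field in the row is kept as metadata alongside the vector.
    metadata = {name: value for name, value in row.items() if name != label_field_name}
    return text, metadata


row = {"_raw": "ERROR disk full on /var", "index": "main", "sourcetype": "syslog"}
print(split_row(row, "_raw"))
# ('ERROR disk full on /var', {'index': 'main', 'sourcetype': 'syslog'})
```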

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command: `| <search string> | fit MLTKContainer algo=llm_rag_log_encoder embedder_name="all-MiniLM-L6-v2" embedder_dimension=384 use_local=1 collection_name=log_events_example label_field_name=_raw * into app:llm_rag_log_encoder`

- Run the `compute` command: `| <search string> | compute algo:llm_rag_log_encoder embedder_name:"all-MiniLM-L6-v2" embedder_dimension:384 use_local:1 collection_name:log_events_example label_field_name:_raw _raw index sourcetype`

The wildcard character (`*`) is not supported in the `compute` command. You must specify every input field explicitly in the command.
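
Because `compute` uses key:value pairs and doesn't accept the wildcard, it can help to generate the argument list from the same parameters you pass to `fit`. This hypothetical Python helper rebuilds the `compute` arguments from the example earlier on this page, using a parameter dictionary and an explicit field list. It quotes values that contain characters other than letters, digits, and underscores. That matches the examples on this page, but it's an assumption, not a documented quoting rule.

```python
import re


def compute_command(algo, params, fields):
    """Hypothetical helper: build compute arguments from parameters and an explicit field list."""
    if "*" in fields:
        # compute doesn't accept the wildcard, so every input field must be named.
        raise ValueError("list each input field explicitly; '*' isn't supported by compute")
    parts = [f"compute algo:{algo}"]
    for key, value in params.items():
        text = str(value)
        # Quote values with characters other than letters, digits, and underscores,
        # as the examples on this page do (an assumption, not a documented rule).
        if not re.fullmatch(r"\w+", text):
            text = f'"{text}"'
        parts.append(f"{key}:{text}")
    parts.extend(fields)
    return " ".join(parts)


print(compute_command(
    "llm_rag_log_encoder",
    {"embedder_name": "all-MiniLM-L6-v2", "embedder_dimension": 384, "use_local": 1,
     "collection_name": "log_events_example", "label_field_name": "_raw"},
    ["_raw", "index", "sourcetype"],
))
# compute algo:llm_rag_log_encoder embedder_name:"all-MiniLM-L6-v2" embedder_dimension:384 use_local:1 collection_name:log_events_example label_field_name:_raw _raw index sourcetype
```

You would then place the printed arguments after your own search string, as in the `compute` example.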

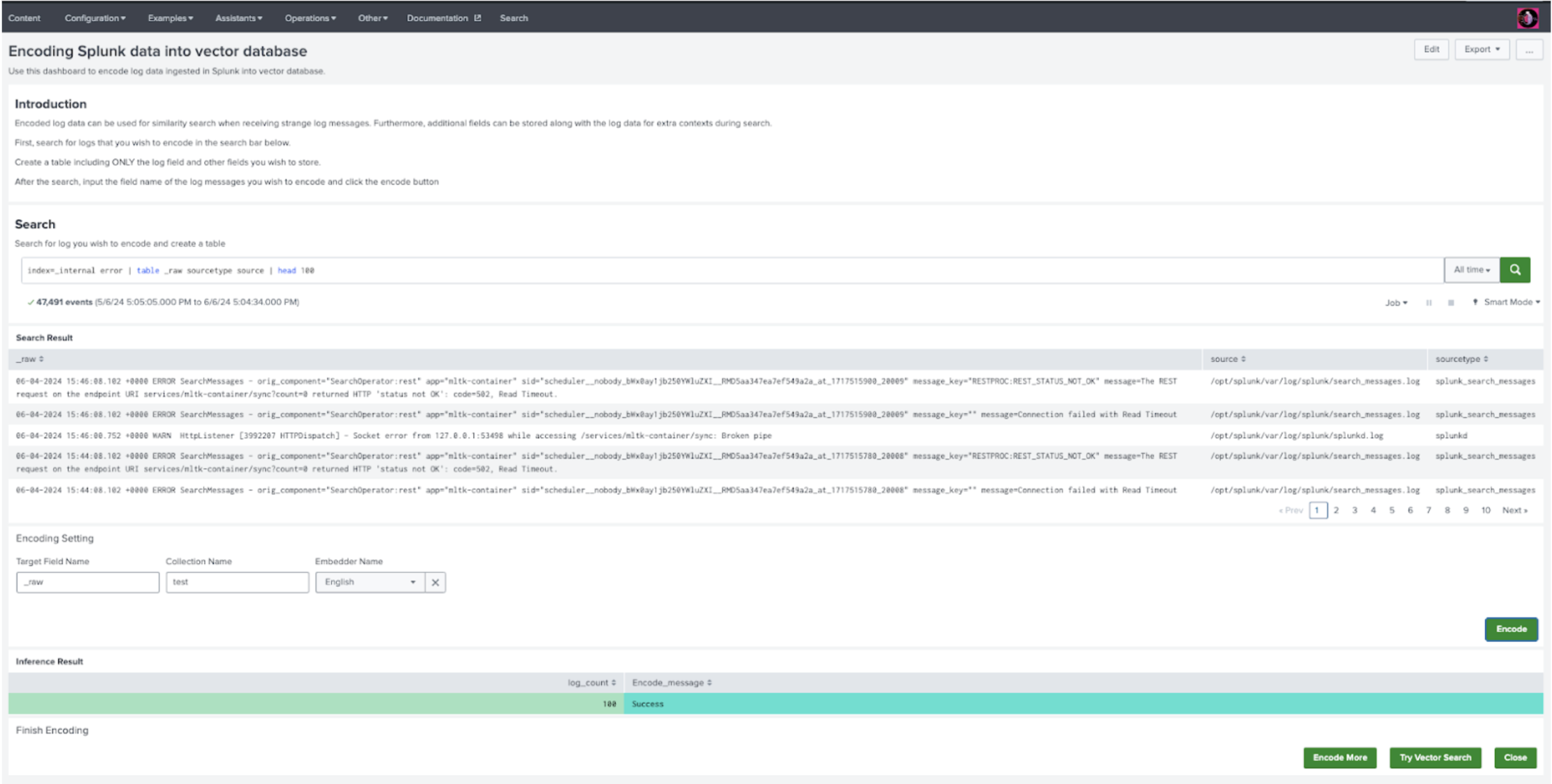

Dashboard view

The following image shows the dashboard view for the Encode data from Splunk page:

The dashboard view includes the following components:

| Dashboard component | Description |
|---|---|
| Search bar | Search the Splunk log data to encode. The search must produce a table containing the field of log data you want to encode, plus any metadata fields you want to add. |
| Target Field Name | The name of the field you want to encode. All the other fields in the table are treated as metadata in the collection. |
| Collection Name | A unique name for the collection to store the vectors in. The name must start with a letter or a number and contain no spaces. If you are adding data to an existing collection, make sure to use the same embedder model. |
| Embedder Name | Choose English or Multilingual, depending on your use case. |
| Use Local Embedder | Whether the embedder has been downloaded and saved on your Docker volume. |
| Encode | Select to start encoding after you complete all the inputs. |
| Refresh Page | Reset all the tokens on this dashboard. |
| Go to Vector Search | Navigate to the Vector Search dashboard. |
| Return to Menu | Return to the main menu. |

Conduct vector search on log data

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Encoding Data to Vector Database, and then select Conduct Vector Search on log data.

Append the command to a search pipeline that produces a table containing only the field of log data you want to run the similarity search on. Rename that field to "text".

Parameters

The Conduct vector search on log data page has the following parameters:

| Parameter | Description |
|---|---|
| embedder_name | The name of the sentence-transformers embedding model. Use all-MiniLM-L6-v2 for English and intfloat/multilingual-e5-large for other languages. The embedder must be the same one that was used to encode the collection. |
| use_local | Whether the embedding models are stored locally. When set to 0, the embedder model is downloaded from the internet. When set to 1, the command assumes that the embedder model files are stored in /srv/app/model/data/. For more details, see Set up LLM-RAG in an air-gapped environment. |
| top_k | Number of top results to return. |
| collection_name | The existing collection to run the similarity search on. |
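
Conceptually, the search embeds the selected log message and returns the top_k stored vectors closest to it. The following pure-Python sketch shows that idea with a brute-force cosine similarity scan over an in-memory list of toy two-dimensional vectors. This page doesn't state which similarity metric or index type the collections use, so cosine similarity and the exhaustive scan are assumptions. In DSDL, Milvus performs the search. The dimension check shows why the search embedder must match the one used for encoding: vectors of different lengths, such as 384 and 1024, can't be compared.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors."""
    if len(a) != len(b):
        # Vectors from different embedders (for example 384 vs 1024 dimensions)
        # can't be compared, which is why the search embedder must match.
        raise ValueError(f"dimension mismatch: {len(a)} vs {len(b)}")
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k_search(query_vector, collection, top_k=5):
    """Brute-force search: return the top_k (score, text) pairs, best match first.

    collection is a list of (text, vector) pairs standing in for a Milvus collection.
    """
    scored = [(cosine_similarity(query_vector, vector), text) for text, vector in collection]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


collection = [
    ("disk full on /var", [1.0, 0.0]),
    ("user login succeeded", [0.0, 1.0]),
    ("disk almost full on /var", [0.9, 0.1]),
]
for score, text in top_k_search([1.0, 0.0], collection, top_k=2):
    print(f"{score:.3f}  {text}")
```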

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command: `| search ... | table text | fit MLTKContainer algo=llm_rag_milvus_search embedder_name="all-MiniLM-L6-v2" collection_name=log_events_example use_local=1 top_k=5 text into app:llm_rag_milvus_search`

- Run the `compute` command: `| search ... | table text | compute algo:llm_rag_milvus_search embedder_name:"all-MiniLM-L6-v2" collection_name:log_events_example use_local:1 top_k:5 text`

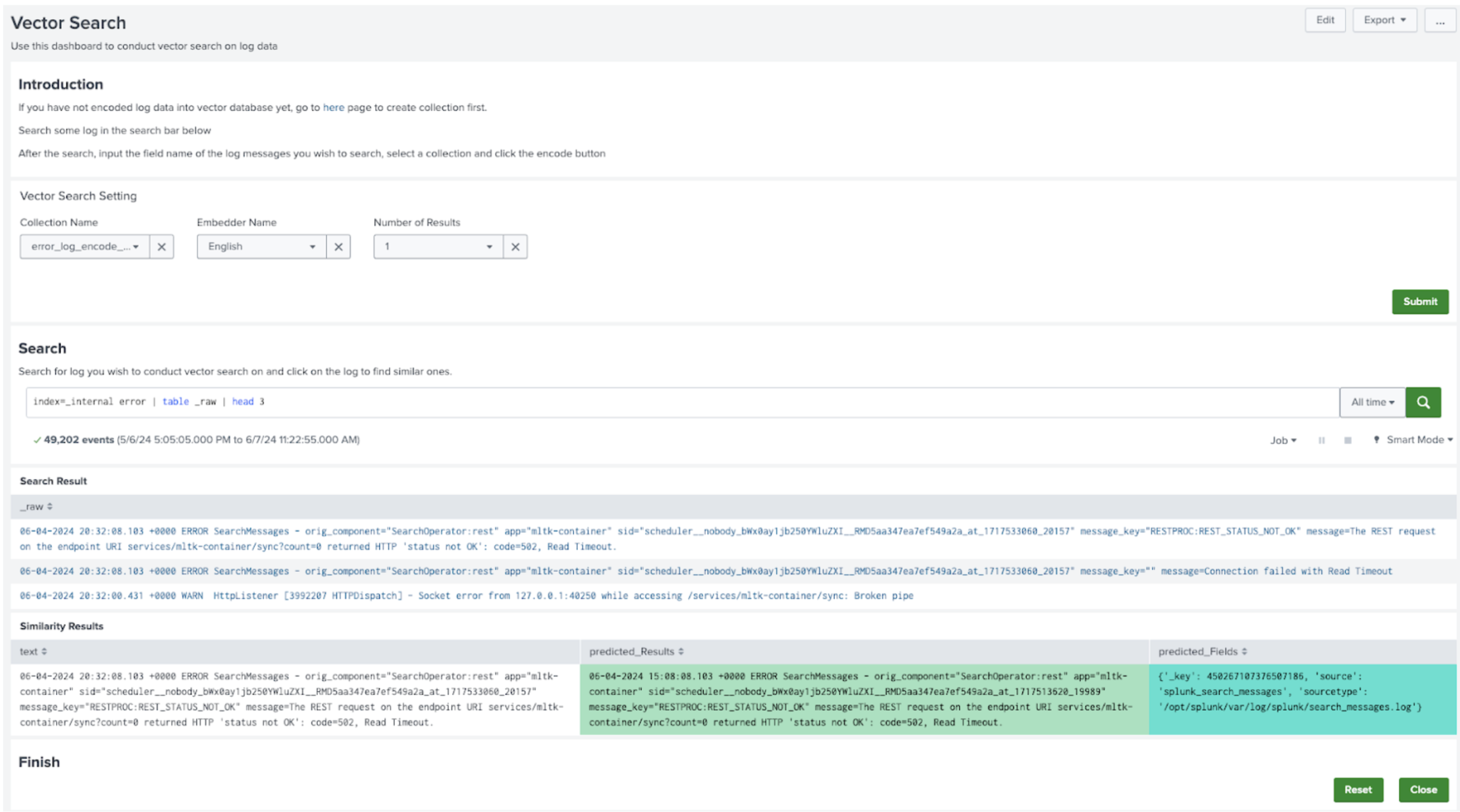

Dashboard view

The following image shows the dashboard view for the Conduct vector search on log data page:

The dashboard view includes the following components:

| Dashboard component | Description |
|---|---|
| Collection Name | An existing collection you want to use for vector search. |
| Embedder Name | Choose English or Multilingual, depending on your use case. |
| Use Local Embedder | Whether the embedder has been downloaded and saved on your Docker volume. |
| Number of Results | Number of top results to return. |
| Search bar | Search the Splunk log data to run the similarity search on. The search must produce a table containing only the field of log data you want to search on. Select a specific log message to start the vector search. |
| Submit | Select after you complete all the inputs. |
| Refresh Page | Reset all the tokens on this dashboard. |
| Return to Menu | Return to the main menu. |

Manage and explore your vector database

In the Splunk App for Data Science and Deep Learning (DSDL), navigate to Assistants, then LLM-RAG, then Encoding Data to Vector Database, and then select Manage and Explore your vector database.

Parameters

Manage and explore your vector database with the following parameters:

| Parameter | Description |
|---|---|
| task | The management task to run. Use list_collections to list all existing collections, delete_collection to delete a specific collection, show_schema to print the schema of a specific collection, and show_rows to print the number of vectors in a collection. |
| collection_name | The name of the collection. Required for all tasks except list_collections. |

Run the fit or compute command

Use the following syntax to run the fit command or the compute command:

- Run the `fit` command: `| makeresults | fit MLTKContainer algo=llm_rag_milvus_management task=delete_collection collection_name=document_collection_example _time into app:llm_rag_milvus_management as RAG`

- Run the `compute` command: `| makeresults | compute algo:llm_rag_milvus_management task:delete_collection collection_name:document_collection_example _time`
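
You can capture the rules in the parameter table in a small command builder. This hypothetical Python helper validates task and collection_name, then assembles a `fit` command in the same form as the `delete_collection` example. The list_collections form it produces, with no collection_name, is inferred from the parameter table. This page doesn't show that form as an example.

```python
TASKS = {"list_collections", "delete_collection", "show_schema", "show_rows"}


def management_fit_command(task, collection_name=None):
    """Hypothetical helper: build a fit command for llm_rag_milvus_management."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASKS)}")
    # collection_name is required for every task except list_collections.
    if task != "list_collections" and not collection_name:
        raise ValueError(f"task {task} requires collection_name")
    parts = ["| makeresults | fit MLTKContainer algo=llm_rag_milvus_management", f"task={task}"]
    if task != "list_collections":
        parts.append(f"collection_name={collection_name}")
    parts.append("_time into app:llm_rag_milvus_management as RAG")
    return " ".join(parts)


print(management_fit_command("show_rows", "document_collection_example"))
print(management_fit_command("list_collections"))
```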

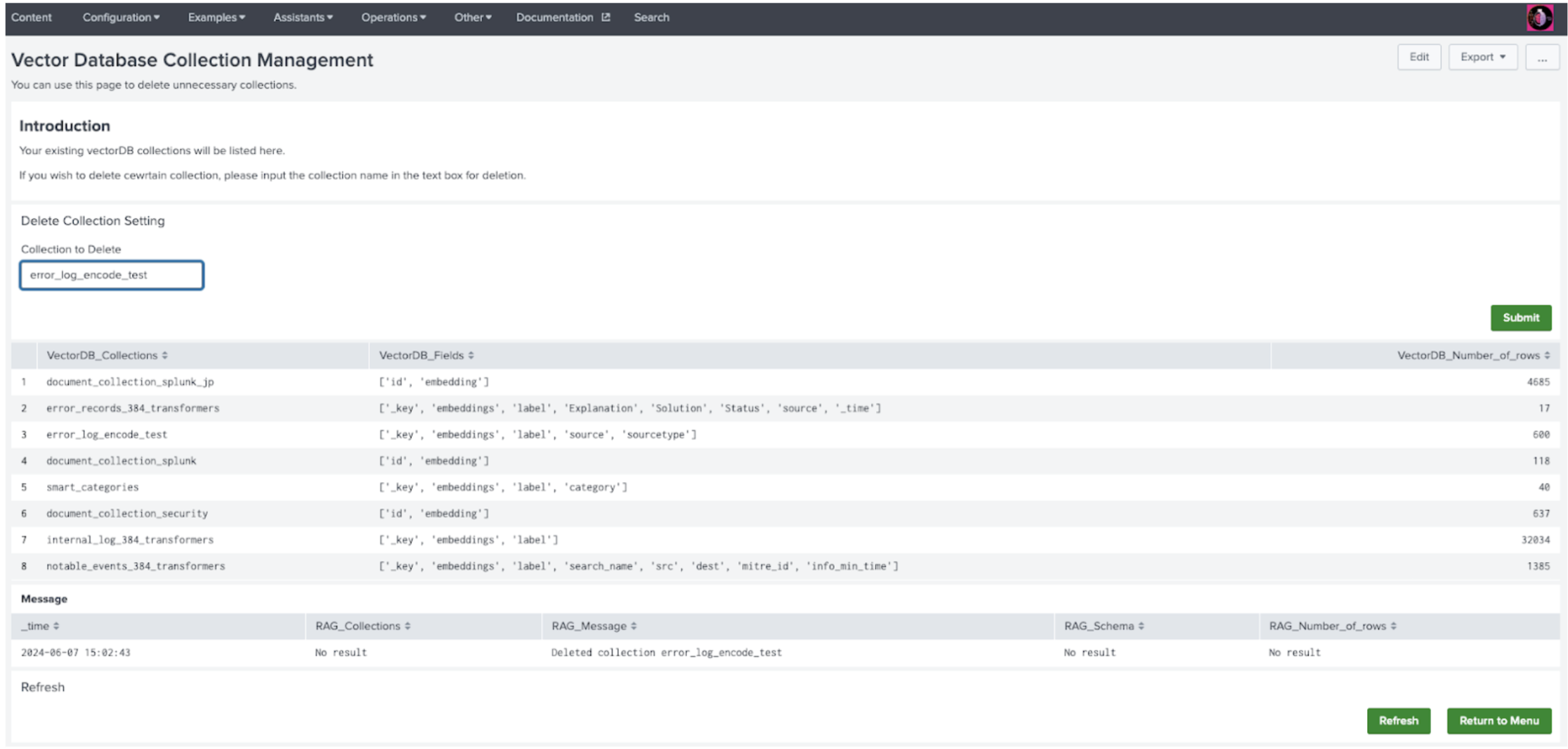

Dashboard view

The following image shows the dashboard view for the Manage and explore your vector database page:

The dashboard view includes the following components:

| Dashboard component | Description |
|---|---|
| Collection to delete | The name of the collection you want to delete. |
| Submit | Select to delete the specified collection. |
| Refresh | Refresh the list of collections. |
| Return to Menu | Return to the main menu. |

Next step

After you pull the LLM to your local Docker container and encode document or log data into the vector database, you can run inference with the LLM. See Query LLM with vector data.


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0
