Splunk App for Data Science and Deep Learning commands

The Splunk App for Data Science and Deep Learning (DSDL) integrates advanced custom machine learning and deep learning systems with the Splunk platform. The app extends the Splunk platform with prebuilt Docker containers for TensorFlow and PyTorch, and additional data science, NLP, and classical machine learning libraries.

You can use familiar SPL syntax from the Splunk Machine Learning Toolkit (MLTK), including the ML-SPL commands fit, apply, and summary, to train and operationalize models in a container environment such as Docker, Kubernetes, or OpenShift.

Any processes that run outside of the Splunk platform ecosystem and use third-party infrastructure such as Docker, Kubernetes, or OpenShift are out of scope for Splunk platform support or troubleshooting.

Using the fit command

You can use the fit command to train a machine learning model on data within the Splunk platform. This command sends data and parameters to a container environment and saves the resulting model with the specified name.

When you run the fit command without mode=stage, training runs based on the code in the associated notebook. When you include mode=stage, the data is transferred to the container for development in JupyterLab, and no training runs.
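When training runs, the container executes code defined in the notebook. The following minimal sketch shows what notebook-side init and fit functions could look like, loosely following the init/fit pattern of DSDL notebook templates. The function signatures, the param keys feature_variables and target_variables, and the least-squares model are illustrative assumptions; check the template in your own container for the exact interface.

```python
import numpy as np
import pandas as pd


def init(df, param):
    # Create the model object. A real notebook might build a TensorFlow
    # or PyTorch model here; this sketch only stores linear coefficients.
    return {"coef": None}


def fit(model, df, param):
    # Hypothetical param keys naming the feature and target fields.
    features = param["feature_variables"]
    target = param["target_variables"][0]
    # Prepend a column of ones so least squares also learns an intercept.
    X = np.column_stack([np.ones(len(df)), df[features].to_numpy(dtype=float)])
    y = df[target].to_numpy(dtype=float)
    coef, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    model["coef"] = coef.tolist()
    return {"message": "model trained", "rows": len(df)}


# Example: fit y = 1 + 2x on a toy DataFrame.
df = pd.DataFrame({"x": [0.0, 1.0, 2.0, 3.0], "y": [1.0, 3.0, 5.0, 7.0]})
param = {"feature_variables": ["x"], "target_variables": ["y"]}
model = init(df, param)
print(fit(model, df, param), model["coef"])
```

Keeping model construction (init) separate from training (fit) mirrors the stage-then-train flow: you can stage data, iterate on these functions in JupyterLab, and only then run fit without mode=stage.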

Syntax

| fit MLTKContainer algo=<algorithm> [mode=stage] <feature_list> into app:<model_name>

Parameters

| Parameter | Description |
|---|---|
| algo | Specifies the notebook name or algorithm in the container environment. |
| mode | Optional. When set to stage, the command sends data to the container but does not perform training. |
| <feature_list> | Defines the feature fields to include in training. You can use wildcards, such as feature_*. |
| into app:<model_name> | Saves the model or staged data in DSDL under the specified name. |
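If you compose fit searches programmatically, for example to pass them to SplunkSearch from JupyterLab, a small helper can keep the argument order consistent with the syntax above and enforce the rule that model names must not contain whitespace. The helper name build_fit_command is hypothetical and is not part of DSDL.

```python
def build_fit_command(algo, features, model_name, stage=False):
    """Compose a | fit MLTKContainer search string (hypothetical helper)."""
    # Model names must not include whitespace.
    if not model_name or any(ch.isspace() for ch in model_name):
        raise ValueError(f"invalid model name: {model_name!r}")
    parts = ["| fit MLTKContainer", f"algo={algo}"]
    if stage:
        # Send data to the container without running training.
        parts.append("mode=stage")
    parts.extend(features)
    parts.append(f"into app:{model_name}")
    return " ".join(parts)


print(build_fit_command("barebone_template", ["_time", "feature_*", "i"],
                        "barebone_template", stage=True))
```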

Examples

The following example stages data without training so you can work iteratively in JupyterLab:

| fit MLTKContainer mode=stage algo=my_notebook into app:barebone_template

The following example sends additional data, including the _time field, every field matching feature_*, and the field i, to the barebone_template notebook for iterative development in JupyterLab:

| fit MLTKContainer mode=stage algo=barebone_template _time feature_* i into app:barebone_template
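In the previous example, feature_* is a wildcard. Assuming it matches field names the way shell-style patterns do (an approximation of SPL wildcard matching, not its exact implementation), the following sketch shows which fields would be sent for a result set like the one produced by the makeresults query used later in this topic, which creates the fields _time, i, s, and feature_0 through feature_2.

```python
from fnmatch import fnmatchcase


def select_fields(available, requested):
    """Return the fields in `available` matched by any requested name or wildcard."""
    return [
        field
        for field in available
        if any(fnmatchcase(field, pattern) for pattern in requested)
    ]


available = ["_time", "i", "s", "feature_0", "feature_1", "feature_2"]
print(select_fields(available, ["_time", "feature_*", "i"]))
# ['_time', 'i', 'feature_0', 'feature_1', 'feature_2']
```

The helper field s is not listed in the fit command, so it stays in the Splunk platform and is not sent to the container.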

Using the apply command

Use the apply command to generate model predictions on new data within Splunk. This step can be automated through scheduled searches or integrated into dashboards and alerts for real-time monitoring.

Syntax

| apply <model_name>

Parameters

| Parameter | Description |
|---|---|
| model_name | The name of the model to apply. This corresponds to the name used in the into app: clause when the model was trained. |
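In the container, apply corresponds to notebook code that turns a DataFrame of new events into predictions. The following sketch assumes a model dictionary holding an intercept followed by linear coefficients, and a hypothetical feature_variables key in param; it illustrates the shape of the result (one prediction per input row) rather than the exact DSDL interface.

```python
import numpy as np
import pandas as pd


def apply(model, df, param):
    # Hypothetical param key naming the feature fields.
    features = param["feature_variables"]
    # Same layout as training: a column of ones for the intercept, then features.
    X = np.column_stack([np.ones(len(df)), df[features].to_numpy(dtype=float)])
    y_hat = X @ np.asarray(model["coef"], dtype=float)
    # Keep the input index so each prediction lines up with its event.
    return pd.DataFrame({"predicted": y_hat}, index=df.index)


model = {"coef": [1.0, 2.0]}  # intercept 1, slope 2 (illustrative values)
print(apply(model, pd.DataFrame({"x": [0.0, 1.0, 2.0]}), {"feature_variables": ["x"]}))
```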

Example

The following example runs inference on a dataset using the model named barebone_template. You can follow this with the score command to evaluate model performance, such as accuracy metrics for classification or R-squared for regression:

| apply barebone_template
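When you refine a model in JupyterLab, you might want to cross-check the kind of metrics the score command reports. The following sketch computes R-squared (1 minus the residual sum of squares divided by the total sum of squares) and classification accuracy with NumPy using their standard definitions. It is meant for quick checks and is not the implementation behind the score command.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined when y_true is constant")
    return float(1.0 - ss_res / ss_tot)


def accuracy(y_true, y_pred):
    """Fraction of predicted labels that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


print(r_squared([1, 2, 3], [1.1, 1.9, 3.2]), accuracy(["a", "b"], ["a", "a"]))
```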

Using the summary command

Use the summary command to retrieve model metadata and configuration details, including hyperparameters and feature lists. This command helps track or inspect the exact parameters used in training.

Syntax

| summary <model_name>

Parameters

| Parameter | Description |
|---|---|
| model_name | The name of the model for which to retrieve metadata. |

Example

The following example retrieves metadata for app:barebone_template, including training configurations and feature names.

| summary app:barebone_template

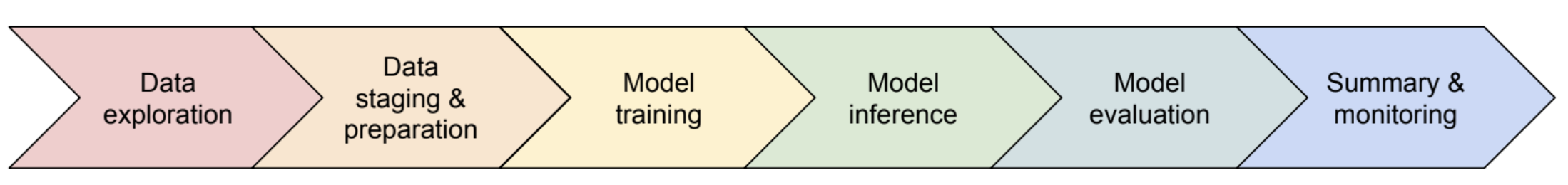
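The metadata that summary returns is produced by the notebook. As a sketch, assuming the notebook defines a summary function that returns a JSON-serializable dictionary, it could report library versions and the stored model parameters. The keys version and model_keys are illustrative assumptions, not a documented DSDL schema.

```python
import json
import platform

import numpy as np
import pandas as pd


def summary(model=None):
    """Return environment metadata and, if given, the stored model keys."""
    info = {
        "version": {
            "python": platform.python_version(),
            "numpy": np.__version__,
            "pandas": pd.__version__,
        }
    }
    if model is not None:
        # Hypothetical: list what the model stores, such as hyperparameters.
        info["model_keys"] = sorted(model.keys())
    return info


print(json.dumps(summary({"coef": [1.0, 2.0], "hyperparameter": 42.0}), indent=2))
```

Recording library versions alongside the model makes it easier to reproduce a training run later in a different container image.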

Using commands in a workflow

The following workflow shows how you can use the ML-SPL commands available in DSDL:

Data exploration

You can identify and refine your data in Splunk using SPL.

If you have configured a Splunk access token, you can pull data interactively in JupyterLab with SplunkSearch.SplunkSearch().

For data exploration in JupyterLab, you can push a sample using | fit MLTKContainer mode=stage ...

Data staging and preparation

You can use | fit MLTKContainer mode=stage ... to transfer data and metadata to the container.

Clean and configure the staged dataset within JupyterLab.

Model training

Model names must not contain whitespace.

You can use | fit MLTKContainer algo=<algorithm> ... on your final training data.

Leverage GPUs if needed. Monitor progress in JupyterLab.

Model inference

You can use | apply <model_name> to generate predictions.

Integrate with Splunk dashboards, alerts, or scheduled searches for operational use.

Model evaluation

You can use | score for classification or regression metrics.

Return to JupyterLab to refine or retrain the model if needed.

Summary and monitoring

You can use | summary <model_name> to review metadata and configurations.

Set the model permissions to Global if the model needs to be served from a dedicated container.

Monitor performance in Splunk dashboards or alerts and detect potential drift.
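As a simple illustration of drift detection that you could prototype in JupyterLab, the following sketch compares the mean of a recent window of a feature (or of model predictions) with a training-time baseline, using a one-sample z-score on the mean with the baseline standard deviation. The function name, the threshold of 3, and the choice of method are assumptions; in production you would typically express the check as a Splunk search that drives an alert.

```python
import numpy as np


def mean_shift_drift(baseline, current, threshold=3.0):
    """Return (z, drifted): the shift of the current mean from the baseline
    mean, in standard errors, and whether it exceeds the threshold."""
    baseline = np.asarray(baseline, dtype=float)
    current = np.asarray(current, dtype=float)
    std = baseline.std(ddof=1)
    if std == 0:
        raise ValueError("baseline has zero variance")
    z = float((current.mean() - baseline.mean()) / (std / np.sqrt(len(current))))
    return z, abs(z) > threshold


print(mean_shift_drift([0, 1, 2, 3, 4], [2, 2, 2, 2]))
print(mean_shift_drift([0, 1, 2, 3, 4], [10, 10, 10, 10]))
```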

Pull data using Splunk REST API

You can interactively search Splunk from JupyterLab, provided your container can connect to the Splunk REST API and you have configured a valid Splunk auth token in the DSDL setup page. This is useful for quickly testing different SPL queries, exploring data in a Pandas DataFrame, or refining your search logic without leaving Jupyter.
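Because this approach depends on the container reaching the Splunk REST API, a quick network check from a notebook cell can help rule out connectivity problems before you debug searches. The following sketch only tests whether a TCP connection to the management port can be opened; the helper name can_reach is hypothetical, and a successful connection does not validate your token.

```python
import socket


def can_reach(host, port=8089, timeout=3.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Replace with your Splunk host; 8089 is the default management port.
print(can_reach("localhost"))
```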

This approach returns raw search results only. No metadata or parameter JSON is generated. If you need structured metadata, use the fit command with mode=stage to push data to the container.

Complete the following steps:

- Generate a Splunk token:
  - In the Splunk platform, go to Settings and then Tokens to create a new token.
  - Copy the generated token for use in DSDL.
- (Optional) Set up Splunk access in Jupyter:
  - In DSDL, go to Configuration and then Setup.
  - Locate Splunk Access Settings and enter your Splunk host and the generated token. This makes the Splunk REST API available within your container environment. The default management port is 8089.
- Now that Splunk access is configured, you can pull data interactively in JupyterLab:

```python
from dsdlsupport import SplunkSearch

# Use one of the following options to create the search object.

# Option A: Open an interactive search box
search = SplunkSearch.SplunkSearch()

# Option B: Use a predefined query
search = SplunkSearch.SplunkSearch(search='| makeresults count=10 \n'
                                          '| streamstats c as i \n'
                                          '| eval s = i%3 \n'
                                          '| eval feature_{s}=0 \n'
                                          '| foreach feature_* [eval <<FIELD>>=random()/pow(2,31)] \n'
                                          '| fit MLTKContainer mode=stage algo=barebone_template _time feature_* i into app:barebone_template')

# Option C: Use a referenced query
example_query = ('| makeresults count=10 \n'
                 '| streamstats c as i \n'
                 '| eval s = i%3 \n'
                 '| eval feature_{s}=0 \n'
                 '| foreach feature_* [eval <<FIELD>>=random()/pow(2,31)] \n'
                 '| fit MLTKContainer mode=stage algo=barebone_template _time feature_* i into app:barebone_template')
search = SplunkSearch.SplunkSearch(search=example_query)

# Run the search and then retrieve the results as a DataFrame
df = search.as_df()
df.head()
```
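SplunkSearch handles the REST call for you. To illustrate what such a call involves, the following sketch builds, but does not send, a POST request to the standard search/jobs/export endpoint on the management port, authenticated with the token as a bearer token. The helper name and host are hypothetical, and this is not how dsdlsupport is implemented internally. Actually sending the request requires network access to the Splunk platform and, depending on your certificates, a suitable SSL context.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_export_request(host, token, spl, port=8089):
    """Build (but do not send) a Splunk REST search export request."""
    query = spl.strip()
    # Searches sent through the REST API must start with "search" or "|".
    if not query.startswith("|") and not query.startswith("search "):
        query = "search " + query
    body = urlencode({"search": query, "output_mode": "json"}).encode()
    return Request(
        url=f"https://{host}:{port}/services/search/jobs/export",
        data=body,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


req = build_export_request("splunk.example.com", "<your-token>", "index=_internal | head 5")
print(req.get_method(), req.full_url)
```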

Push data using the fit command

You can send data from the Splunk platform to the container using the following command:

| fit MLTKContainer mode=stage

This writes both the dataset and relevant metadata, such as feature lists and parameters, to the container environment as CSV and JSON files. This approach is well suited for building or modifying a notebook in JupyterLab, while referencing a known dataset structure and configuration.

Example

The following example uses the fit command to send data from the Splunk platform to a container:

| fit MLTKContainer mode=stage algo=barebone_template _time feature_* i into app:barebone_template

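```python
import json

import pandas as pd


# Retrieve data in notebook
def stage(name):
    # Load the dataset written by | fit MLTKContainer mode=stage
    with open("data/" + name + ".csv", "r") as f:
        df = pd.read_csv(f)
    # Load the accompanying metadata, such as feature lists and parameters
    with open("data/" + name + ".json", "r") as f:
        param = json.load(f)
    return df, param


df, param = stage("barebone_template")
```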
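After loading, you can inspect param to see exactly what was staged, for example with print(json.dumps(param, indent=2)). The following sketch assumes, as a hypothetical, that the staged JSON lists feature names under a key such as feature_variables, and falls back to all columns when that key is absent; check the keys in your own staged file before relying on it.

```python
import pandas as pd


def split_features(df, param, feature_key="feature_variables"):
    """Split staged data into feature columns and the remaining columns."""
    # Assumption: feature names are listed under `feature_key` in param.
    features = param.get(feature_key) or list(df.columns)
    missing = [c for c in features if c not in df.columns]
    if missing:
        raise KeyError(f"features not found in staged data: {missing}")
    rest = [c for c in df.columns if c not in features]
    return df[features], df[rest]


staged = pd.DataFrame({"_time": [1, 2], "feature_0": [0.1, 0.2], "i": [1, 2]})
X, other = split_features(staged, {"feature_variables": ["feature_0", "i"]})
print(list(X.columns), list(other.columns))
```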


This documentation applies to the following versions of Splunk® App for Data Science and Deep Learning: 5.2.0, 5.2.1
