Supported ingestion methods and data sources for the Splunk Data Stream Processor

You can get data into your data pipeline in the following ways.

Supported ingestion methods

The following ingestion methods are supported.

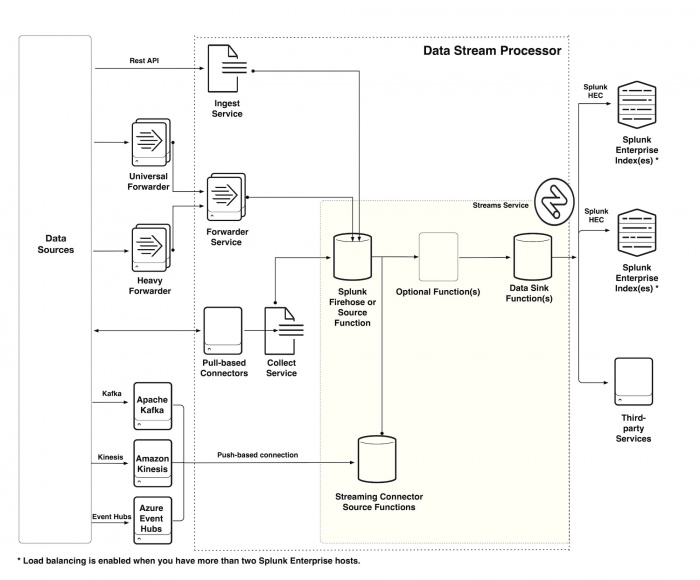

Send events using the Ingest REST API

Use the Ingest REST API to send JSON objects to the /events or the /metrics endpoint. See Format and send events using the Ingest REST API.
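As an illustration, the following Python sketch builds a request that posts a batch of JSON events to the /events endpoint, but does not send it. Several values are assumptions based on typical DSP deployments, not details confirmed by this page: the host name `dsp.example.com`, the port `31000`, the path `/default/ingest/v1beta2/events`, the bearer-token header, and the event field names (`body`, `host`, `source`, `sourcetype`, `timestamp`, `attributes`). Confirm them against Format and send events using the Ingest REST API before you rely on them. The helper names `make_event` and `build_events_request` are hypothetical.

```python
import json
import time
import urllib.request

# Assumed values: replace with your own DSP host, tenant path, and access token.
INGEST_URL = "https://dsp.example.com:31000/default/ingest/v1beta2/events"
ACCESS_TOKEN = "<your-access-token>"


def make_event(body, host, source, sourcetype, timestamp_ms=None):
    """Build one event dictionary. The field names are assumptions."""
    return {
        "body": body,
        "host": host,
        "source": source,
        "sourcetype": sourcetype,
        # Epoch time in milliseconds (assumed unit); defaults to "now".
        "timestamp": timestamp_ms if timestamp_ms is not None else int(time.time() * 1000),
        "attributes": {},
    }


def build_events_request(events):
    """Return an unsent POST request whose body is a JSON array of events."""
    payload = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        INGEST_URL,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {ACCESS_TOKEN}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_events_request([
        make_event("user login succeeded", "web-01", "auth.log", "syslog", 1600000000000),
    ])
    print(req.get_method(), req.full_url)
    # To actually send the request: urllib.request.urlopen(req)
```

Building the request separately from sending it keeps the payload easy to inspect before any data leaves the machine.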

Send events using the Forwarders service

Send data from a Splunk forwarder to the Splunk Forwarders service. See Send events using a forwarder.

Send events using the DSP HTTP Event Collector

Send data to a DSP data pipeline using the DSP HTTP Event Collector (DSP HEC). See Send events to a DSP data pipeline using the DSP HTTP Event Collector.
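The following Python sketch assumes that DSP HEC accepts the standard Splunk HTTP Event Collector request format. Under that assumption, it batches several events into one request by placing one JSON object per line. The URL `https://dsp.example.com:31000/services/collector/event`, the `Authorization: Splunk <token>` header scheme, and the `event`, `sourcetype`, and `time` keys follow Splunk HEC conventions and are assumptions here. The helper names `hec_event` and `build_hec_request` are hypothetical.

```python
import json
import urllib.request

# Assumed values: replace with your DSP HEC endpoint and DSP HEC token.
HEC_URL = "https://dsp.example.com:31000/services/collector/event"
HEC_TOKEN = "<your-dsp-hec-token>"


def hec_event(event, sourcetype, epoch_seconds):
    """Wrap one event in the Splunk HEC JSON envelope (assumed to work with DSP HEC)."""
    return {"event": event, "sourcetype": sourcetype, "time": epoch_seconds}


def build_hec_request(events):
    """Batch events by concatenating JSON objects, one per line, in a single POST body."""
    body = "\n".join(json.dumps(e) for e in events).encode("utf-8")
    return urllib.request.Request(
        HEC_URL,
        data=body,
        method="POST",
        headers={"Authorization": f"Splunk {HEC_TOKEN}"},
    )
```

For example, `build_hec_request([hec_event("disk full", "syslog", 1600000000)])` returns a request that you can pass to `urllib.request.urlopen` once the URL and token are set correctly.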

Send syslog events using SC4S and the DSP HTTP Event Collector

Send syslog data to a DSP pipeline using SC4S and the DSP HTTP Event Collector (DSP HEC). See Send Syslog events to a DSP data pipeline using SC4S with DSP HEC.

Get data in using the Collect service

You can use the Collect service to manage how data collection jobs ingest event and metric data. See the Collect service documentation.

Get data in using a connector

A connector connects a data pipeline with an external data source. There are two types of connectors:

- Push-based connectors continuously send data from an external source into a pipeline. See Get data in with a push-based connector. The following push-based connectors are supported:

- Pull-based connectors collect data from external sources and send it into your DSP pipeline through the Collect service. See Get data in with a pull-based connector. The following pull-based connectors are supported:
  - Use the Amazon CloudWatch Metrics Connector with Splunk DSP
  - Use the Amazon Metadata Connector with Splunk DSP
  - Use the Amazon S3 Connector with Splunk DSP
  - Use the Azure Monitor Metrics Connector with Splunk DSP
  - Use the Google Cloud Monitoring Metrics Connector with Splunk DSP
  - Use the Microsoft 365 Connector with Splunk DSP
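The difference between the two connector types can be modeled conceptually. The following Python sketch does not use any DSP API. A push-based source forwards each record to the pipeline as it arrives. A pull-based connector instead runs as discrete collection jobs, and each job fetches whatever the source holds at that moment, which is roughly the role the Collect service plays. The class and function names (`Pipeline`, `push_connector`, `run_collection_job`) are hypothetical.

```python
from collections import deque
from typing import Callable, Iterable, List


class Pipeline:
    """Hypothetical stand-in for a DSP pipeline: it only records what it receives."""

    def __init__(self) -> None:
        self.received: List[str] = []

    def accept(self, record: str) -> None:
        self.received.append(record)


def push_connector(incoming: Iterable[str], pipeline: Pipeline) -> None:
    """Push model: forward each record as soon as the external source emits it."""
    for record in incoming:
        pipeline.accept(record)


def run_collection_job(fetch: Callable[[], List[str]], pipeline: Pipeline) -> int:
    """Pull model: one scheduled job fetches the current batch and forwards it."""
    batch = fetch()
    for record in batch:
        pipeline.accept(record)
    return len(batch)


if __name__ == "__main__":
    pipeline = Pipeline()
    push_connector(["stream-1", "stream-2"], pipeline)

    # A pretend external API that hands back pending items on each poll.
    pending = deque(["metric-a", "metric-b"])

    def fetch() -> List[str]:
        items = list(pending)
        pending.clear()
        return items

    run_collection_job(fetch, pipeline)  # first scheduled run collects two items
    run_collection_job(fetch, pipeline)  # a later run finds nothing new
    print(pipeline.received)
```

In the push model, the source decides when data arrives. In the pull model, the job schedule decides when data is collected, so data that appears between runs waits until the next job runs.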

Read from Splunk Firehose

Use Splunk Firehose to read data from the Ingest REST API, DSP HEC, Syslog, Forwarders, and Collect services. See Splunk Firehose.

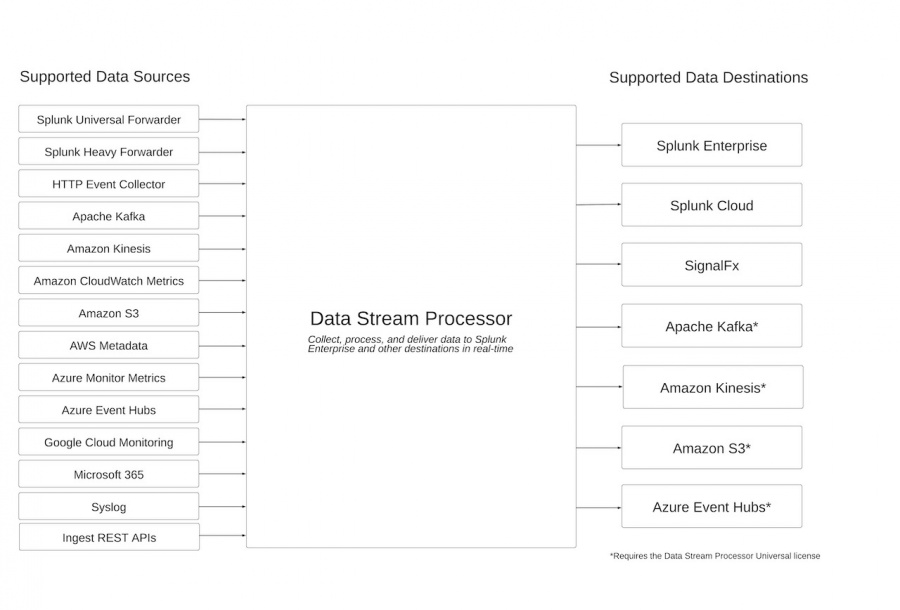

Supported data sinks

Use the Splunk Data Stream Processor to send data to the following sinks, or destinations:

Send data to a Splunk index

See Write to the Splunk platform (Default for Environment) and Write to the Splunk platform in the Splunk Data Stream Processor Function Reference manual.

Send data to Apache Kafka

See Write to Kafka in the Splunk Data Stream Processor Function Reference manual. Sending data to Apache Kafka requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.

Send data to Amazon Kinesis

See Write to Kinesis in the Splunk Data Stream Processor Function Reference manual. Sending data to Amazon Kinesis requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.

Send data to null

See Write to Null in the Splunk Data Stream Processor Function Reference manual.

Send data to S3-compatible storage

See Write to S3-compatible storage in the Splunk Data Stream Processor Function Reference manual. Sending data to S3-compatible storage requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.

Send data to SignalFx

See Write to SignalFx in the Splunk Data Stream Processor Function Reference manual.

Send data to Azure Event Hubs using SAS Key (Beta)

See Write to Azure Event Hubs Using SAS Key (Beta) in the Splunk Data Stream Processor Function Reference manual. Sending data to Azure Event Hubs requires the DSP universal license. See DSP universal license in the Install and administer the Data Stream Processor manual.
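The licensing requirements stated in this section can be summarized as a lookup table. The following Python sketch encodes only what this page says. Writing to Apache Kafka, Amazon Kinesis, S3-compatible storage, or Azure Event Hubs requires the DSP universal license, and this page states no license requirement for the other sinks. The sink keys and the `unlicensed_sinks` helper are hypothetical names for illustration and are not part of DSP.

```python
# Hypothetical sink identifiers, mapped to whether this page states that the
# DSP universal license is required to write to them.
REQUIRES_UNIVERSAL_LICENSE = {
    "splunk_index": False,
    "kafka": True,
    "kinesis": True,
    "null": False,
    "s3_compatible": True,
    "signalfx": False,
    "azure_event_hubs": True,  # Beta
}


def unlicensed_sinks(sinks, has_universal_license):
    """Return the sinks in a pipeline that need a license the deployment lacks."""
    unknown = [s for s in sinks if s not in REQUIRES_UNIVERSAL_LICENSE]
    if unknown:
        raise ValueError(f"unknown sink(s): {unknown}")
    if has_universal_license:
        return []
    return [s for s in sinks if REQUIRES_UNIVERSAL_LICENSE[s]]
```

For example, `unlicensed_sinks(["splunk_index", "kafka"], has_universal_license=False)` returns `["kafka"]`.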

Architecture diagrams

The following diagram summarizes supported data sources and data sinks.

The following diagram shows the different services and ways that data can enter your data pipeline.


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.1.0
