Data collection methods

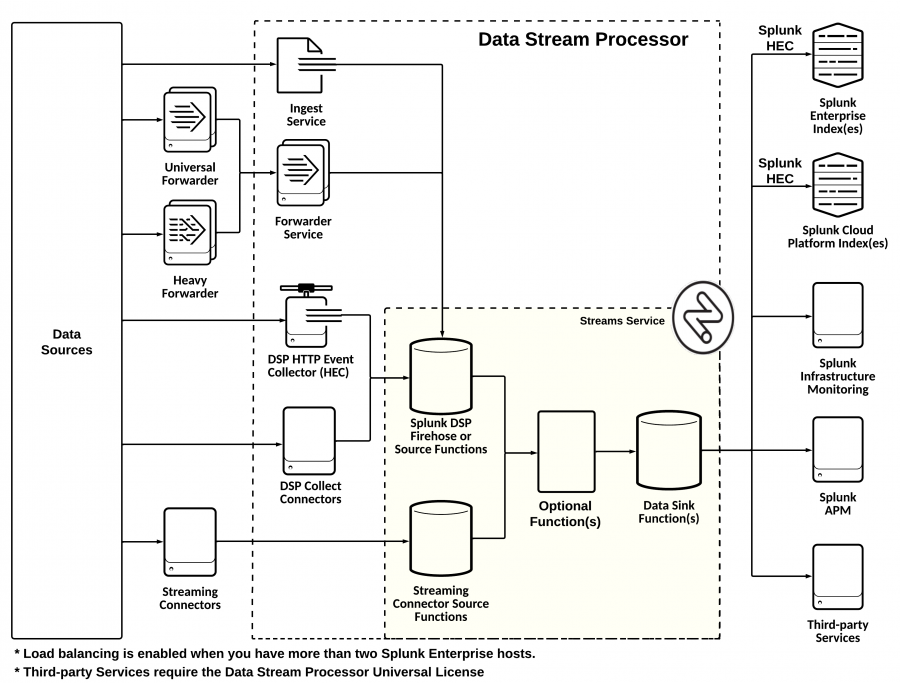

Depending on the specific type of data source that you are working with, the Splunk Data Stream Processor (DSP) uses one of the following services or connector types to collect data from it:

| Data collection method | Description |
|---|---|
| Ingest service | Collects JSON objects from the `/events` and `/metrics` endpoints of the Ingest REST API. |
| Forwarder service | Collects data from Splunk forwarders. |
| DSP HTTP Event Collector (DSP HEC) | Collects data from HTTP clients and syslog data sources. |
| DSP Collect connectors | Collect data from several types of data sources, including Amazon CloudWatch, Amazon S3, Amazon Web Services (AWS) metadata, Microsoft Azure Monitor, Google Cloud Monitoring, and Microsoft 365. These connectors collect data using jobs that run on a schedule, which you specify when configuring the connection. See the Scheduled data collection jobs section on this page for more information. DSP Collect connectors (also known as "pull-based" connectors) are planned to be deprecated in a future release. See the Release Notes for more information. |
| Streaming connectors | Collect data from several types of data sources, including Amazon Kinesis Data Streams, Apache Kafka, Apache Pulsar, Microsoft Azure Event Hubs, and Google Cloud Pub/Sub. These connectors continuously receive the data that the data source emits. |

The following diagram shows the different ways that data can enter your DSP pipeline:

Scheduled data collection jobs

When reading data from the following data sources, DSP uses data collection jobs that run according to a scheduled cadence:

- Amazon CloudWatch

- Amazon S3

- Amazon Web Services (AWS) metadata

- Google Cloud Monitoring

- Microsoft 365

- Microsoft Azure Monitor

When you activate a data pipeline that uses one of these data sources, the pipeline receives data in batches that arrive according to the job schedule, rather than as soon as the data source emits the data.
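The following Python sketch makes this batching behavior concrete. It models a collection job that runs at a fixed interval and assigns each emitted event to the first job run at or after its emission time. This is a simplified model, not DSP's scheduler. The fixed interval, the schedule origin, and the function names `next_run` and `batch_by_run` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical model: the job runs at origin + k * interval (k = 0, 1, 2, ...).
# This illustrates scheduled batching only; it is not DSP's actual scheduler.


def next_run(ts: datetime, interval: timedelta, origin: datetime) -> datetime:
    """Return the first scheduled run at or after timestamp ts."""
    elapsed = ts - origin
    k = -(-elapsed // interval)  # ceiling division of two timedeltas
    return origin + k * interval


def batch_by_run(emitted: list[datetime], interval: timedelta,
                 origin: datetime) -> dict[datetime, list[datetime]]:
    """Group emission times by the job run that would collect them."""
    batches: dict[datetime, list[datetime]] = {}
    for ts in sorted(emitted):
        batches.setdefault(next_run(ts, interval, origin), []).append(ts)
    return batches


origin = datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)
emitted = [origin + timedelta(minutes=m) for m in (1, 4, 6)]
for run, events in batch_by_run(emitted, timedelta(minutes=5), origin).items():
    waits = [run - ts for ts in events]
    print(run.strftime("%H:%M"), "collects", len(events), "event(s); longest wait", max(waits))
```

With a 5-minute interval, the events emitted at 00:01 and 00:04 arrive together in the 00:05 batch, and the 00:06 event waits until the 00:10 run. That is why data from these sources can appear in the pipeline several minutes after it was emitted.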

When you create a connection to one of these data sources, you must configure the following data collection job settings:

| Job setting | Description |
|---|---|
| Schedule | A time-based job schedule that determines when the connector runs jobs for collecting data. You can select a predefined value or write a custom CRON schedule. All CRON schedules are based on UTC. |
| Workers | The number of workers you want to use to collect data. A worker is a software module that collects and processes data, and then sends the results downstream in the pipeline. |
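CRON schedules are based on UTC, so a schedule such as `0 2 * * *` (daily at 02:00) runs at 02:00 UTC regardless of your local time zone. The following Python sketch shows that conversion. It is deliberately limited. The hypothetical `next_daily_runs_utc` helper accepts only daily schedules of the form `M H * * *` and is not a general CRON parser. The UTC-5 zone is an example fixed offset with no daylight saving time.

```python
from datetime import datetime, timedelta, timezone


def next_daily_runs_utc(cron: str, after: datetime, count: int) -> list[datetime]:
    """Return the next `count` run times for a daily 'M H * * *' schedule, in UTC.

    Only fixed minute and hour fields are supported; this is not a general
    CRON parser. `after` must be a timezone-aware datetime.
    """
    minute, hour, day_of_month, month, day_of_week = cron.split()
    if (day_of_month, month, day_of_week) != ("*", "*", "*"):
        raise ValueError("this sketch supports only daily 'M H * * *' schedules")
    after_utc = after.astimezone(timezone.utc)
    # The schedule is interpreted in UTC, not in the caller's local zone.
    candidate = after_utc.replace(hour=int(hour), minute=int(minute),
                                  second=0, microsecond=0)
    if candidate <= after_utc:
        candidate += timedelta(days=1)
    return [candidate + timedelta(days=i) for i in range(count)]


utc_minus_5 = timezone(timedelta(hours=-5), "UTC-5")
after = datetime(2024, 1, 1, 20, 0, tzinfo=utc_minus_5)  # 2024-01-02 01:00 UTC
for run in next_daily_runs_utc("0 2 * * *", after, count=2):
    print(run.isoformat(), "->", run.astimezone(utc_minus_5).isoformat())
```

In this example, the 02:00 UTC run happens at 21:00 on the previous calendar day in UTC-5. Keep this offset in mind when you choose a predefined or custom schedule.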

Limitations of scheduled data collection jobs

Scheduled data collection jobs have the following limitations:

- Jobs cannot run more frequently than once every 5 minutes. If you schedule a job to run more frequently than that, the extra runs are skipped. For example, if a job is scheduled to run once per minute, it runs only once in each 5-minute period, and the other 4 scheduled runs are skipped.

- You can use a maximum of 20 workers per job.

- The maximum supported size for a record is 921,600 bytes. If any records exceed that size, the data collection job returns an error.

- Collected data can be delayed for the following reasons:

    - Latency in the data provided by the data source.

    - The volume of raw data ingested. For example, collecting 1 GB of data takes longer than collecting 1 MB of data.

    - The volume of data collected from upstream sources, such as from the Ingest REST API or a Splunk forwarder.

Adding more workers might improve data ingestion rates, but external factors such as these still affect how quickly data is ingested.
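You can check a job definition against these limits before you configure it. The following Python sketch does so under two stated assumptions. First, it assumes the 5-minute minimum is measured from the last run that actually executed. Second, it assumes a record's size is the length of its UTF-8 encoded JSON serialization. DSP's exact accounting is not described on this page, and all helper names here are hypothetical.

```python
import json
from datetime import datetime, timedelta, timezone

# Limits stated in this section.
MIN_INTERVAL = timedelta(minutes=5)
MAX_WORKERS = 20
MAX_RECORD_BYTES = 921_600


def simulate_runs(triggers: list[datetime]) -> tuple[list[datetime], list[datetime]]:
    """Split scheduled trigger times into runs that execute and runs that are skipped.

    Assumption: a trigger is skipped if less than MIN_INTERVAL has passed
    since the last trigger that actually ran.
    """
    ran: list[datetime] = []
    skipped: list[datetime] = []
    for t in sorted(triggers):
        if not ran or t - ran[-1] >= MIN_INTERVAL:
            ran.append(t)
        else:
            skipped.append(t)
    return ran, skipped


def check_workers(workers: int) -> None:
    """Raise if the worker count is not between 1 and MAX_WORKERS (at least 1 assumed)."""
    if not 1 <= workers <= MAX_WORKERS:
        raise ValueError(f"workers must be between 1 and {MAX_WORKERS}, got {workers}")


def oversized_records(records: list[dict]) -> list[int]:
    """Return indexes of records whose serialized size exceeds MAX_RECORD_BYTES.

    Assumption: size is measured as UTF-8 encoded JSON.
    """
    return [
        i for i, record in enumerate(records)
        if len(json.dumps(record).encode("utf-8")) > MAX_RECORD_BYTES
    ]


# A job scheduled once per minute over a 10-minute window.
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
ran, skipped = simulate_runs([start + timedelta(minutes=m) for m in range(10)])
print(len(ran), "runs executed,", len(skipped), "skipped")
check_workers(20)  # the maximum is allowed; 21 would raise ValueError
print(oversized_records([{"body": "ok"}, {"body": "x" * MAX_RECORD_BYTES}]))
```

Under this model, a once-per-minute schedule executes 2 runs in 10 minutes and skips 8. This matches the documented behavior of one run and four skipped runs in each 5-minute period.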

Data delivery guarantees

DSP delivers data from a pipeline to a data destination on an at-least-once delivery basis. If your data destination becomes unreachable, DSP stops sending data to it. When the data destination becomes available again, DSP restarts the affected pipelines and resumes sending data. If your data destination stays unavailable for longer than the data retention period of your data source, your data is dropped. See Data retention policies in the Install and administer the Data Stream Processor manual for more information.
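Under at-least-once delivery, a destination can receive the same record more than once. This can happen, for example, when delivery resumes after a pipeline restart. If duplicates matter to you, make the receiving side idempotent. The following Python sketch shows one common technique: deduplicating on a unique record key while remembering only a bounded number of recent keys. This is a generic pattern, not a DSP feature. The `id` field and the `Deduplicator` class are hypothetical. A production system would usually rely on the destination's own upsert or unique-key support instead.

```python
from collections import OrderedDict
from collections.abc import Hashable


class Deduplicator:
    """Remembers the most recent `capacity` keys and rejects repeats."""

    def __init__(self, capacity: int = 100_000) -> None:
        self.capacity = capacity
        self._seen: OrderedDict[Hashable, None] = OrderedDict()

    def accept(self, key: Hashable) -> bool:
        """Return True the first time a key is seen, False for a duplicate."""
        if key in self._seen:
            self._seen.move_to_end(key)  # refresh the recency of a repeated key
            return False
        self._seen[key] = None
        if len(self._seen) > self.capacity:
            self._seen.popitem(last=False)  # forget the oldest remembered key
        return True


# Simulated redelivery: record "a" arrives a second time after a restart.
delivered = [{"id": "a"}, {"id": "b"}, {"id": "a"}]
dedup = Deduplicator()
written = [r for r in delivered if dedup.accept(r["id"])]
print([r["id"] for r in written])
```

Because the key memory is bounded, a duplicate that arrives after its key has been evicted is accepted again. Choose `capacity` so that it covers the window in which redelivery can realistically occur.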


This documentation applies to the following versions of Splunk® Data Stream Processor: 1.2.1, 1.2.2-patch02, 1.2.4, 1.2.5, 1.3.0, 1.3.1
