Preprocess your data with the Splunk Machine Learning Toolkit

Preprocessing your data is an important part of a machine learning workflow. Machine learning works best when you provide a clean, numeric matrix of data as the foundation for building your machine learning models. Use preprocessing options included in the Splunk Machine Learning Toolkit (MLTK) to transform your data so that it is more suitable for machine learning modeling and visualization.

Preprocess data using MLTK assistants

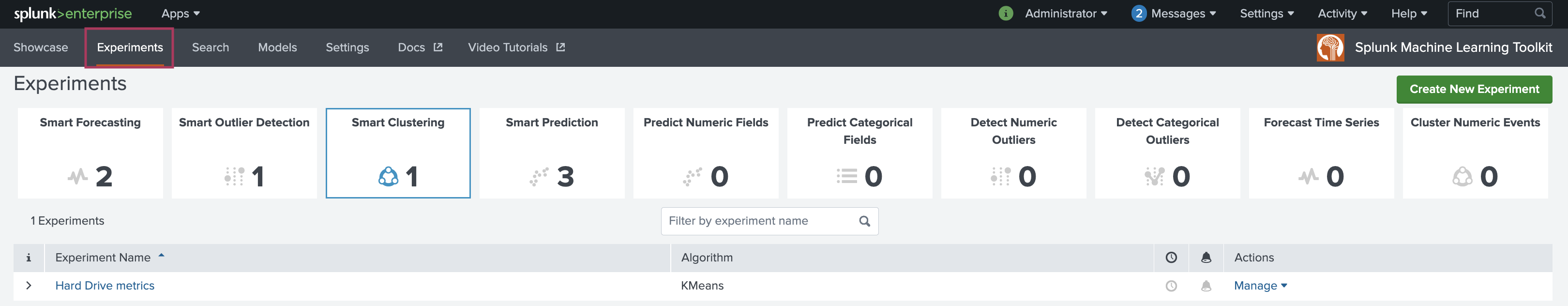

MLTK guided modeling assistants bring all aspects of a monitored machine learning pipeline into one interface. All of the available assistants are shown on the Experiments tab in MLTK as shown in the following image:

All MLTK Smart Assistants and a selection of Experiment Assistants include a data preprocessing option within the guided workflow.

As with other aspects of MLTK guided modeling assistants, any preprocessing steps you take also generate Splunk Search Processing Language (SPL), which you can view using the SPL button within each assistant. As you apply one or more preprocessing steps, review the results against your data. You can modify a preprocessing step as long as no other steps have been added after it.

Once you add another preprocessing step, previous steps cannot be modified. Removing a preprocessing step also removes all subsequent preprocessing steps.
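The editing rules above behave like an ordered list in which only the last entry is editable and deleting an entry truncates the list. The following is a minimal, hypothetical Python model of that behavior for illustration only. The class `PreprocessingSteps` and its methods are invented names, not part of MLTK or its API.

```python
class PreprocessingSteps:
    """Hypothetical model of the assistant's preprocessing step list (not MLTK code)."""

    def __init__(self):
        self.steps = []

    def add(self, step):
        # New steps are always appended after the existing ones.
        self.steps.append(step)

    def modify(self, index, step):
        # Only the most recently added step can still be changed.
        if index != len(self.steps) - 1:
            raise ValueError("only the last preprocessing step can be modified")
        self.steps[index] = step

    def remove(self, index):
        # Removing a step also removes every step that was added after it.
        del self.steps[index:]


pipeline = PreprocessingSteps()
pipeline.add("StandardScaler")
pipeline.add("PCA")
pipeline.add("TFIDF")
pipeline.remove(1)
print(pipeline.steps)  # ['StandardScaler']
```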

The following table lists which assistants include data preprocessing options:

| MLTK assistant | Data preprocessing option |
|---|---|
| Smart Forecasting Assistant | Includes the preprocessing option to join special time entries using a Lookup file at the Learn stage of the assistant workflow. To learn more, see Smart Forecasting Assistant. |
| Smart Outlier Detection Assistant | Includes the preprocessing option to extract time from data at the Learn stage of the assistant workflow. To learn more, see Smart Outlier Detection Assistant. |
| Smart Clustering Assistant | Includes the preprocessing algorithm options of PCA, Kernel PCA, and StandardScaler at the Learn stage of the assistant workflow. To learn more, see Smart Clustering Assistant. |
| Smart Prediction Assistant | Includes the preprocessing algorithm options of PCA, Kernel PCA, and FieldSelector at the Learn stage of the assistant workflow. To learn more, see Smart Prediction Assistant. |
| Predict Numeric Fields Experiment Assistant | Includes the preprocessing algorithm options of StandardScaler, FieldSelector, PCA, Kernel PCA, and TFIDF in the workflow. The default option is StandardScaler. To learn more, see Predict Numeric Fields Experiment Assistant. |
| Predict Categorical Fields Experiment Assistant | Includes the preprocessing algorithm options of StandardScaler, FieldSelector, PCA, Kernel PCA, and TFIDF in the workflow. The default option is StandardScaler. To learn more, see Predict Categorical Fields Experiment Assistant. |
| Cluster Numeric Events Experiment Assistant | Includes the preprocessing algorithm options of StandardScaler, FieldSelector, PCA, Kernel PCA, and TFIDF in the workflow. The default option is StandardScaler. To learn more, see Cluster Numeric Events Experiment Assistant. |

Preprocess data using MLTK algorithms

MLTK supports over 30 algorithms, including algorithms for anomaly detection, classification, clustering, cross-validation, feature extraction, preprocessing, regression, and time series analysis.

Use preprocessing algorithms to reduce the number of fields in the data, produce numeric fields from unstructured text, and rescale numeric fields. Choose the algorithms that best suit the preprocessing needs of your data. Select from the following preprocessing algorithm options:

FieldSelector

The FieldSelector algorithm uses the scikit-learn GenericUnivariateSelect to select the best predictor fields based on univariate statistical tests.

To learn more about the parameters, syntax, and syntax constraints for this algorithm, see FieldSelector.

| Field name | Field description |
|---|---|
| Field to predict / Target variable | Make a single selection from the list. |
| Predictor fields / Feature variables | Select one or more fields from the list. |
| Type | Select whether the data is categorical or numeric. |
| Mode | Select from field selection modes including Percentile, K-best, False positive rate, False discovery rate, and Family-wise error rate. |
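To make the K-best mode concrete, here is a minimal pure-Python sketch of univariate selection for a numeric target. It assumes a per-field F statistic computed from the Pearson correlation, F = r² / (1 − r²) · (n − 2), which is the formula scikit-learn's `f_regression` uses; whether MLTK applies exactly this scoring function for the numeric type is an assumption here. The helpers `f_score` and `select_k_best` and the sample field names are hypothetical.

```python
import math


def f_score(x, y):
    """Univariate F statistic for a linear fit of y on x (the f_regression formula)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant field carries no information about the target
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        return math.inf
    return r * r / (1 - r * r) * (n - 2)


def select_k_best(features, target, k):
    """Hypothetical helper: names of the k predictor fields with the highest F scores."""
    scores = {name: f_score(values, target) for name, values in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical predictor fields and a numeric target.
features = {
    "cpu": [1, 2, 3, 4, 5],
    "mem": [2, 1, 4, 3, 5],
    "noise": [5, 1, 4, 2, 3],
}
target = [2.1, 3.9, 6.2, 8.0, 9.9]
print(select_k_best(features, target, 2))  # ['cpu', 'mem']
```

The Percentile and error-rate modes differ only in how the cut-off is chosen from these per-field scores (or their p-values).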

KernelPCA

The KernelPCA algorithm uses the scikit-learn KernelPCA to reduce the number of fields by extracting new, uncorrelated features out of the data. It is strongly recommended that you standardize fields using StandardScaler before using the KernelPCA method.

Use the KernelPCA or PCA algorithms to reduce the number of dimensions, which can improve performance. KernelPCA and PCA can also be used to reduce the number of dimensions for visualization purposes, for example, to project into 2D in order to display a scatterplot chart.

To learn more about the parameters, syntax, and syntax constraints for this algorithm, see KernelPCA.

| Field name | Field description |
|---|---|
| Fields to preprocess | Select one or more fields. |
| K # of components / # of new fields to create | Specify the number of principal components. K new fields are created with the prefix "PC_". |
| Gamma | Enter the kernel coefficient for the RBF kernel. |
| Tolerance | Enter the convergence tolerance. If 0, an optimal value is chosen using arpack. |
| Max iteration | Enter the maximum number of iterations. If not specified, an optimal value is chosen using arpack. |
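The Gamma setting above controls the RBF kernel that KernelPCA applies before extracting components. The following is a minimal pure-Python sketch of the first two steps of kernel PCA, assuming the standard RBF kernel K[i][j] = exp(−gamma · ‖x_i − x_j‖²): building the kernel matrix and centering it in feature space. The eigendecomposition of the centered matrix, which produces the PC_ fields, is omitted. The function names are hypothetical, and this is not MLTK's or scikit-learn's code.

```python
import math


def rbf_kernel(rows, gamma):
    """Kernel matrix with K[i][j] = exp(-gamma * squared Euclidean distance)."""
    return [
        [math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, xj))) for xj in rows]
        for xi in rows
    ]


def center_kernel(k):
    """Center the kernel matrix in feature space: K - 1K - K1 + 1K1."""
    n = len(k)
    row_means = [sum(r) / n for r in k]
    col_means = [sum(k[i][j] for i in range(n)) / n for j in range(n)]
    total_mean = sum(row_means) / n
    return [
        [k[i][j] - row_means[i] - col_means[j] + total_mean for j in range(n)]
        for i in range(n)
    ]


# Three hypothetical, already standardized events with two fields each.
rows = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
k = rbf_kernel(rows, gamma=0.5)
kc = center_kernel(k)
for row in kc:
    print([round(v, 3) for v in row])
```

A larger gamma makes the kernel more local, because similarity decays faster with distance, which is one reason unscaled fields can distort the result.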

PCA

The PCA algorithm uses the scikit-learn PCA algorithm to reduce the number of fields by extracting new, uncorrelated features out of the data. Use the PCA or KernelPCA algorithms to reduce the number of dimensions, which can improve performance.

PCA and KernelPCA can also be used to reduce the number of dimensions for visualization purposes, for example, to project into 2D in order to display a scatterplot chart.

To learn more about the parameters, syntax, and syntax constraints for this algorithm, see PCA.

| Field name | Field description |
|---|---|
| Fields to preprocess | Select one or more fields. |
| K # of components / # of new fields to create | Specify the number of principal components. K new fields are created with the prefix "PC_". |
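As a rough illustration of what a single PC_ field contains, the following pure-Python sketch estimates the first principal component by power iteration on the sample covariance matrix and projects each centered event onto it. Power iteration is swapped in here for readability; scikit-learn's PCA uses a singular value decomposition instead. The function name is hypothetical, and the sign of a principal component is arbitrary, so a library may return the negated result.

```python
import math


def first_principal_component(rows, iterations=200):
    """Estimate the leading principal component and each row's score on it."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
        for a in range(d)
    ]
    # Power iteration converges to the top eigenvector unless the start vector
    # happens to be orthogonal to it.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # all fields are constant; there is no variance to explain
        v = [x / norm for x in w]
    # Projecting each centered row gives the values of a single PC_1-style field.
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, scores


component, pc_1 = first_principal_component([[1, 2], [2, 4], [3, 6]])
print(component, pc_1)
```

Here two perfectly correlated fields collapse into one new field with no loss of information, which is the kind of redundancy PCA removes.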

StandardScaler

The StandardScaler algorithm uses the scikit-learn StandardScaler algorithm to standardize the data fields by scaling their mean and standard deviation to 0 and 1, respectively. This standardization helps prevent fields with large values from dominating the others in subsequent machine learning algorithms.

StandardScaler is useful when the fields have very different scales. StandardScaler standardizes numeric fields by centering about the mean, rescaling to have a standard deviation of one, or both.

To learn more about the parameters, syntax, and syntax constraints for this algorithm, see StandardScaler.

| Field name | Field description |
|---|---|
| Fields to preprocess | Select one or more fields. Any new fields are created with the prefix "SS_". |
| Standardize fields | Specify whether to center values with respect to the mean, to scale values with respect to the standard deviation, or both. |
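The centering and scaling options above can be sketched in a few lines of pure Python. This is a minimal illustration, not MLTK's implementation. It assumes the population standard deviation (dividing by n) and a scale of 1 for constant fields, which is how scikit-learn's StandardScaler behaves. The helper names and sample events are hypothetical; only the "SS_" prefix comes from the table.

```python
import math


def standard_scale(values, with_mean=True, with_std=True):
    """Standardize one numeric field by centering on the mean and/or dividing by the std."""
    n = len(values)
    mean = sum(values) / n
    # Population standard deviation (divides by n), as in scikit-learn's StandardScaler.
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    center = mean if with_mean else 0.0
    scale = std if (with_std and std > 0) else 1.0
    return [(v - center) / scale for v in values]


def fit_transform(events, fields, with_mean=True, with_std=True):
    """Hypothetical helper: add an SS_<field> value to each event, keeping the originals."""
    columns = {f: standard_scale([e[f] for e in events], with_mean, with_std) for f in fields}
    return [{**e, **{"SS_" + f: columns[f][i] for f in fields}} for i, e in enumerate(events)]


# Two fields on very different scales end up directly comparable.
events = [
    {"bytes": 100, "latency": 0.1},
    {"bytes": 200, "latency": 0.2},
    {"bytes": 300, "latency": 0.3},
]
for row in fit_transform(events, ["bytes", "latency"]):
    print(row)
```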

TFIDF

The TFIDF algorithm converts raw text into numeric fields, making it possible to use that data with other machine learning algorithms. The TFIDF algorithm selects N-grams, which are groups of N consecutive strings (or terms), from fields containing free-form text and converts them into numeric fields amenable to machine learning. For example, running TFIDF on a field containing email subjects might select the bi-gram "project proposal" and create a field indicating the weighted frequency of that bi-gram in each subject.

To learn more about the parameters, syntax, and syntax constraints for this algorithm, see TFIDF.

| Field name | Field description |
|---|---|
| Fields to preprocess | Select one or more fields. |
| Max features | Build a vocabulary that only considers the top K features ordered by term frequency. |
| Max document frequency | Ignore terms that have a document frequency strictly higher than the given threshold. Field supports a value type of integer or float. |
| Min document frequency (cut-off) | Ignore terms that have a document frequency strictly lower than the given threshold. Field supports a value type of integer or float. |
| N-gram range | The lower and upper boundary of the range of N-values for different N-grams to be extracted. |
| Analyzer | Select whether the features are made of word or character N-grams. Defaults to word. Choose "char" to treat each character like a word, resulting in sub-strings of N consecutive characters, including spaces. |
| Norm | Norm used to normalize term vectors. |
| Token pattern | Regular expression denoting what constitutes a "token". |
| Stop words | Enter any words you want to omit from the analysis. Stop words typically include common words such as "the" or "an". |
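The following pure-Python sketch shows how word N-grams, stop words, document frequency, and the norm combine into TF-IDF weights, using the email-subject example from the description above. It assumes scikit-learn's TfidfVectorizer defaults: lowercasing, the token pattern `(?u)\b\w\w+\b`, raw term counts, the smoothed idf ln((1 + n) / (1 + df)) + 1, and L2 normalization. Whether MLTK passes exactly these defaults is an assumption. The helper names and sample subjects are hypothetical, and the Max features and document-frequency cut-offs are not implemented.

```python
import math
import re
from collections import Counter


def word_ngrams(text, n_range=(1, 2), token_pattern=r"(?u)\b\w\w+\b", stop_words=()):
    """Tokenize, drop stop words, then emit all word N-grams in the N-gram range."""
    tokens = [t for t in re.findall(token_pattern, text.lower()) if t not in stop_words]
    low, high = n_range
    grams = []
    for n in range(low, high + 1):
        grams.extend(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return grams


def tfidf(documents, n_range=(1, 2), stop_words=()):
    """Hypothetical helper: one {term: weight} dict per document, L2-normalized."""
    counts = [Counter(word_ngrams(doc, n_range, stop_words=stop_words)) for doc in documents]
    n_docs = len(documents)
    # Document frequency: number of documents that contain each term at least once.
    df = Counter(term for c in counts for term in c)
    # Smoothed inverse document frequency (scikit-learn's smooth_idf=True formula).
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
    rows = []
    for c in counts:
        weights = {t: tf * idf[t] for t, tf in c.items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        rows.append({t: w / norm for t, w in weights.items()})
    return rows


subjects = ["Project proposal draft", "Re: project proposal", "Lunch on Friday"]
for row in tfidf(subjects):
    print({term: round(weight, 3) for term, weight in sorted(row.items())})
```

Terms shared by many subjects, such as "project proposal", receive a lower idf than terms that appear in only one subject.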

This documentation applies to the following versions of Splunk® Machine Learning Toolkit: 5.4.0, 5.4.1, 5.4.2, 5.5.0